iPhone + Hyperstream can turn the world of streaming and live video from a painful, low-quality source of 2020 anxiety into something absolutely eye-popping, beautiful, and surprisingly easy.

Okay, I think anything involving streams, screens, cameras, or video of any kind is bordering on aversion therapy in 2020. But –

Remember when video was fun?

That’s the idea here, because Hyperstream does a number of cool things all at once – provided you have an iPhone as the source. (I’ll have to ponder how this might work on Android, as I have a decent Huawei handset, too.)

And this doesn’t have to mean “livestreams.” It’s something you could use to record video, VJ, or make video art, too – basically, they all involve similar needs. People are also using it on still art and animations.

What Hyperstream does for you:

- It makes your camera an easy source for your computer. Wired or wireless. Windows or macOS. OBS, HDMI, AirPlay.

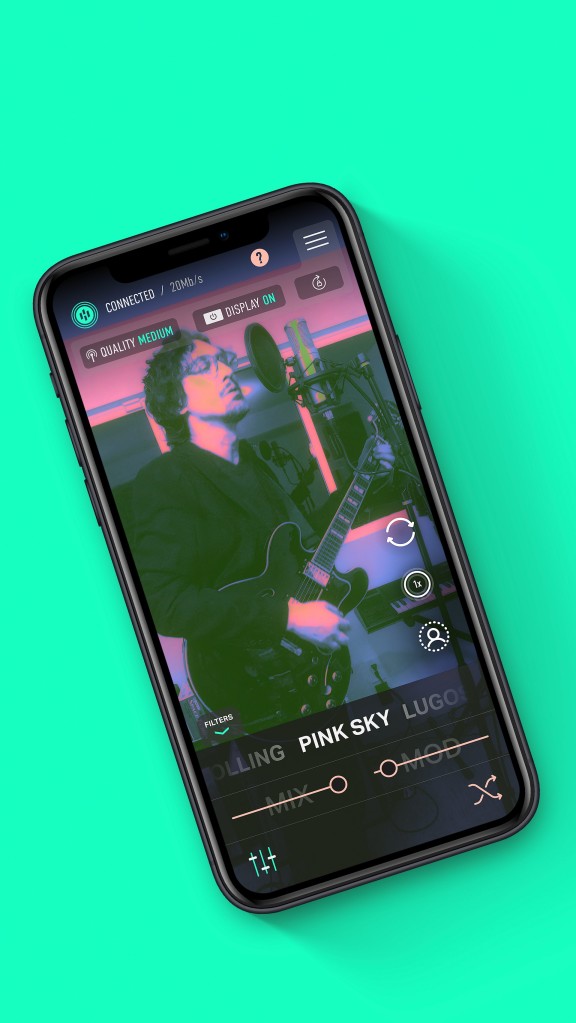

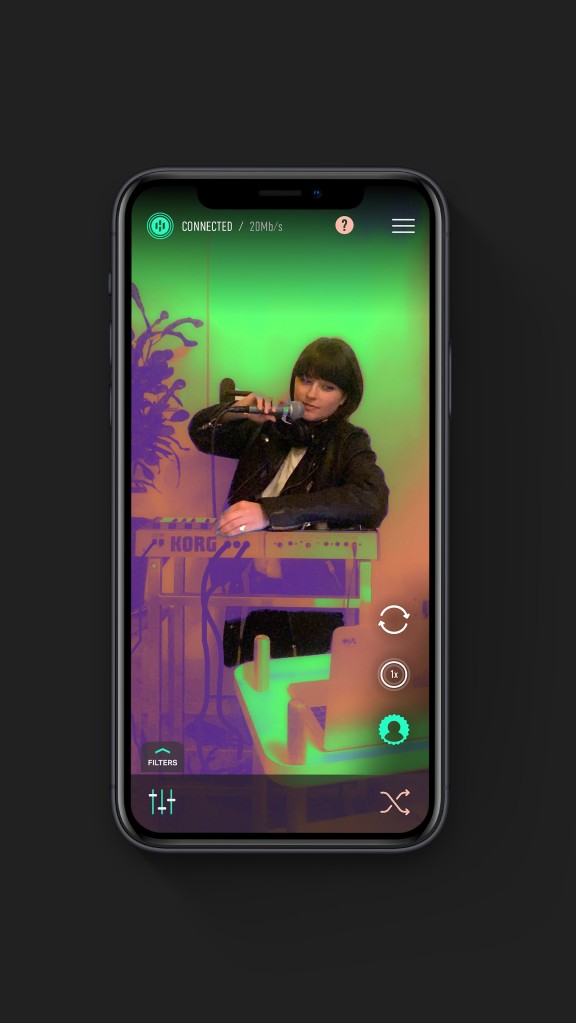

- It adds groovy filters to your video. 50 filters are available.

- It uses AR so you don’t need a green screen. This is actually a reason to choose the iPhone over other handsets – more on that soon – but what’s special here is that some of those filters work like green screen special effects without any extra hardware.

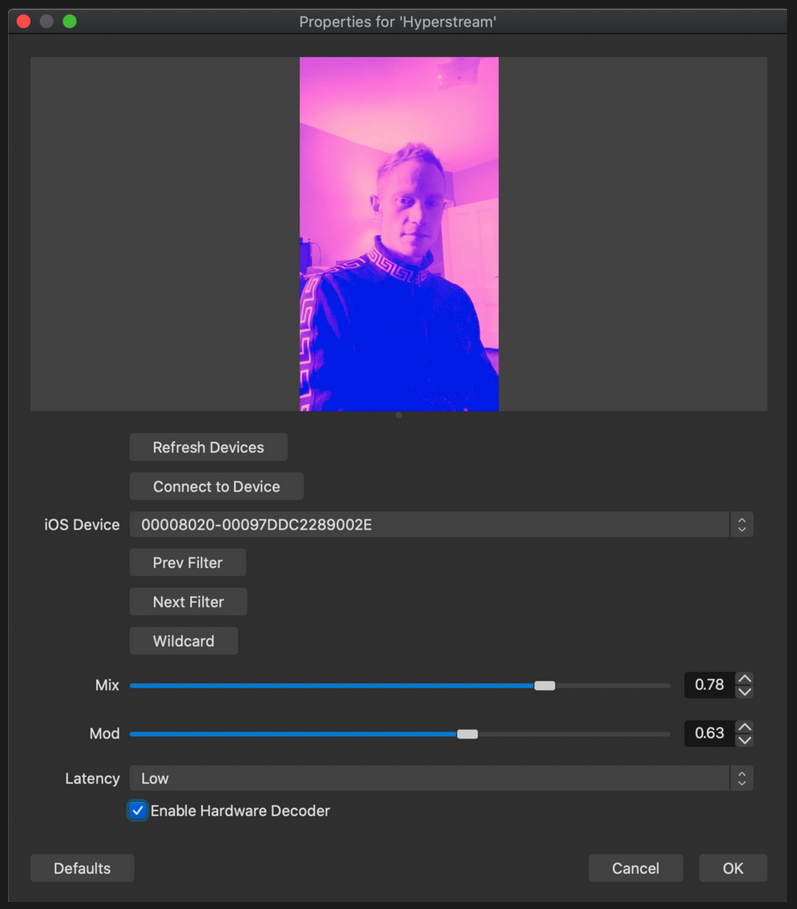

- It makes your camera a live source inside OBS – with interactive control. Here’s the part that’s especially useful for live streaming – this lets your iPhone show up inside OBS, and you can even control Hyperstream’s filter settings from there.

Full explanation:

https://www.hyperstream.app/gettingstarted

Look, you could drop acid and your stream will look better, but if anyone else sees it, that’s not a solution. So it’s a big deal that there are 50+ filters here, and a random “Wildcard” algorithm you can use from OBS that behaves like a twitchy VJ if you’re streaming solo. (Yes, drunken hyperactive VJ is one thing we can definitely automate with computers.)

But it’s also great that not only are there funky filters, but you get proper support for the phone’s cameras: wide angle, telephoto, front, and rear.

So you have two likely use cases here.

Wired. For a reliable connection, you’ll want some kind of HDMI input device and a cable. (Think Lightning adapter.) I know we’re not supposed to use those dirt-cheap things from Amazon but … actually some of them surprised me and work pretty well. Here you would use the phone to swap filters (as opposed to OBS for remote control).

Wireless, with remote control. This is less reliable as it does use a wireless connection, but you also have the flexibility of positioning your phone wherever you want and moving it around. Plus, with this OBS plug-in, you can automate filter changes from the broadcast/recording software – which also means you can pre-configure particular sets of filters in scenes and swap between them that way.

Of course, yes, you can also make feedback.

You also get control inside OBS – though I think the main advantage here is that you can store your filter settings with your other OBS scenes and setup.

By the way, if you’re a developer, you should check out Will Townsend of Loft Labs in Vancouver [GitHub]. His open-source projects are generally terrific, but specifically he did the work on making an iPhone into an OBS video source.

And if you’re not a developer, well – here’s a particle system example from him that’s a good place to start with Swift on iOS.

But the wonderful thing about all of this is, it’s populating our feeds with videos that look as trippy as music and sound and synths make us all feel.

Now I just need to route this via HDMI into Resolume as mentioned this week so I can filter inside my filter inside my filter.

Grab it if you’ve got an iPhone:

Hyperstream: Live-Stream AR FX [App Store]