Comfort and creativity – the mystery of what makes certain vintage gear so appealing remains. There are few people closer to the meeting place of digital and analog, reason and sentiment, than Dr. David Berners. He’s the chief scientist for Universal Audio, responsible for modeling in digital software form the characteristics of sought-after, beloved analog gear. It’s science: Berners cut his teeth as an engineer working on the physics of nuclear fusion, going on to pursue a love of music and sound. Now he uses knowledge of physics and the characteristics of sound equipment to model computationally what makes this gear sound the way it does. But it’s also commerce: UA’s DSP platforms unlock access to a range of a la carte plug-ins, bringing a menu of sounds from the past to modern engineers without the associated bulk, inconvenience, and cost of the real thing.

So, if you’re curious to know a bit about what makes analog and digital gear tick, what that analog gear means in a digital age, Dave’s a good place to start. The timing’s good: UA’s on a bit of a roll. The company’s heritage begins entirely in the analog domain, founded in 1958 by Bill Putnam, Senior and resurrected in 1999 by his sons, James and Bill, to make new tools in both hardware and software. UA has recently introduced an elaborate software model of the Studer A800 tape recorder, one that seeks to make a digital workstation sound like a beloved, high-fidelity multitrack tape setup. There are also new models of the SSL console, authorized by manufacturer Solid State Logic, providing the channel strip and bus compressor; the real thing earned more Platinum records than any other gear, so it’s more or less guaranteed you’ve heard it unless you’ve been holed up on a farm listening to old-timey AM for the past few decades. And they’re expanding compatibility, with new support for Pro Tools and, via FireWire, all those Mac laptops that lack ExpressCard slots.

None of that, though, really winds up being the focus of our conversation. Dr. Berners is also Professor Berners, teaching the elements of DSP to students at Stanford with another UA alum and former CTO. Here, class is in session, as he talks about his laboratory-style approach to understanding how equipment works, and why having a theoretical model is so essential. He hedges on the question of why analog gear is appealing, leaving that to others, but opens up when explaining why he fell in love with engineering.

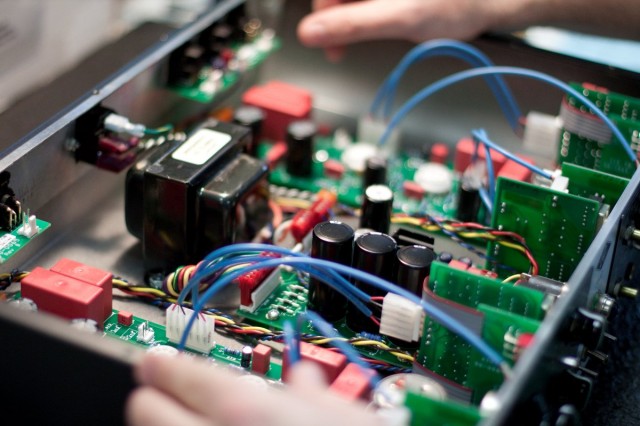

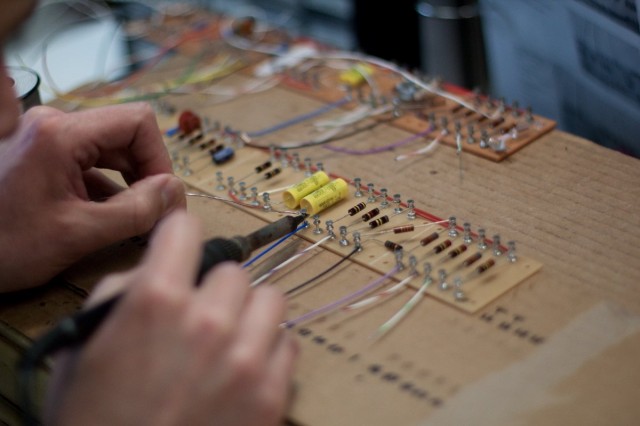

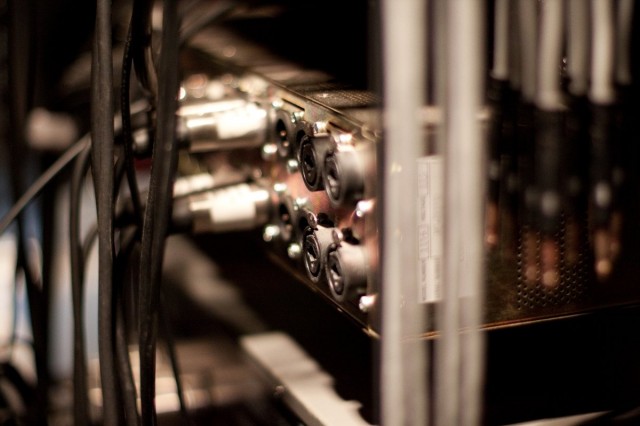

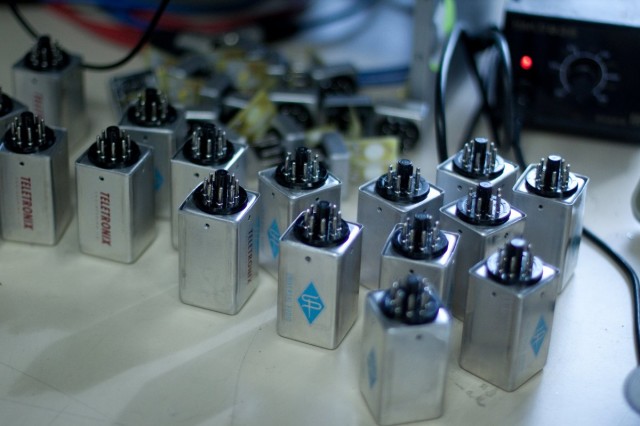

And, in the process, we get some serious gear porn courtesy of photographer (and UA PR rep) Marsha Vdovin. She takes us inside the UA studio for a glimpse of a treasure trove of drool-worthy vintage gear and modern test equipment.

Deafening us with science, here’s Dr. Berners, proudly sponsored by our favorite advertiser, The Field of Mathematics. (They’ve been working on improving their PR lately. I hear they’re on Twitter.)

But deep beneath all that science, all the most empirical techniques for modeling, you might just discover how and why digital audio today could find its connection to the past.

CDM: Can you tell us a bit about how you wound up in this field? What led you to working in the science of DSP?

Dave: My parents told me that I wanted to be an engineer, ever since I was about five years old. I described to them the job that I wanted to do, and I asked them what it was called – and they said, that’s called an engineer. As far as I remember, that’s always what I figured I would end up doing.

After finishing a Masters Degree in power supply stuff, I worked at NASA a while on some design stuff for a couple of different projects, and then after that I worked at the Lawrence National Lab in Berkeley. It was some physics projects related to fusion power plants, so that was very different from audio. While I was doing all those things I didn’t realize I could find work in audio. I always liked the idea of doing audio-related stuff, but I didn’t know there would be any way I’d be realistically able to do it. While I was working at those two places, I found out about the CCRMA center at Stanford and decided to apply there for a graduate program [in the early 90s.] That was when I met Bill [Putnam, Jr. founder of the modern UA], because he was also a student there. I had done a little bit of DSP before that, but that was where I learned most of what I know.

And you’re teaching now at CCRMA.

Yes, along with Jonathan Abel. There’s one course in the fall that’s an introductory DSP, Discrete Time Filtering class. That was a course that was created by Julius Smith. He’s written the textbook for it. It’s meant for people who are just getting their feet wet … with no prerequisite other than high school math. The other class is the one Jonathan and I created, and that one’s more related to audio effects processing — tricks, I guess, on how to define DSP effects.

How did your background apply to coming to Universal Audio? Was there an additional learning curve, getting into work in audio?

For me personally, it was pretty smooth because I had real strong musical interests, the whole time I grew up. I had been an amateur musician my whole life, and spent a lot of hours playing music and working on music. Somehow that gave me an advantage – if I discovered I had made a bug or done something wrong, it gave me a good intuition — if something isn’t sounding right, what is it likely to be?

How much of modeling is intuition? I’d imagine that, with audio, the ultimate test is sort of if it sounds right, it is right?

The feeling thing is important. The way I like to think about it is that it’s not really among the design criteria. It’s more of a check. Ideally, what I would like is to be able to get a bunch of information about a product — schematics, info about the physics of how it works, whatever I need to understand the processes by which it operates — make a model, and implement the model. Human hearing comes into play, psychoacoustics, to determine what may or may not be important perceptually. But what I always hope is that by the time we get to the listening phase, there’s not anything left that we’re trying to tune, so to speak. It’d be more like catching bugs.

I do rely on our listening team — Will Shanks, in particular. He’s in charge of the qualification of our products in terms of our sound. I rely on him a lot, but it’s more that he’s finding little mistakes and errors. I don’t ever want to get into a situation where we listen to something and compare it to the original and say, well, I wish this sounded a little brighter. I would be very unhappy if I got in that position. I would much rather be able to have a complete understanding of how the original equipment works, and match that.

There’s a particular reason why I developed that opinion, and it has to do with the non-linearities that exist in a lot of this gear. If we had a totally linear system, like an equalizer that has no measurable distortion, that kind of system can be characterized fully just with one measurement. If I make a very good measurement in a careful way, for whatever setting the controls on the equipment are at, I can know everything there is to know about that piece of equipment. I can be totally confident that no matter what signal somebody puts through it, I can predict the behavior, just on the basis of my one measurement. What happens is if there’s any sort of non-linearity at all, unless we can characterize the non-linearity in a very specific way … it becomes absolutely impossible to characterize by measurement. There would always be the fear that even though you’d listen to a thousand audio snippets and they’d all sound identical, the next one that you try could sound different. It’s very difficult to have confidence in a model of a non-linear system, unless you know how that system works.
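[Editor's aside: the point about one measurement being enough for a linear system can be illustrated with a small sketch. This is not UA's code. Both "devices" below are hypothetical stand-ins: a one-pole low-pass filter plays the role of a distortion-free equalizer, and the same filter followed by a `tanh` soft clipper plays the role of gear with a non-linearity. The single measurement is an impulse response, and the prediction is made by convolving it with a new signal.]

```python
import math


def convolve(signal, impulse_response):
    """Direct convolution, truncated to the length of the input signal."""
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k, h in enumerate(impulse_response):
            if n - k >= 0:
                acc += h * signal[n - k]
        out.append(acc)
    return out


def linear_device(signal):
    """Hypothetical linear 'equalizer': a one-pole low-pass filter."""
    out, state = [], 0.0
    for x in signal:
        state = 0.5 * x + 0.5 * state
        out.append(state)
    return out


def nonlinear_device(signal):
    """The same filter followed by a soft clipper (assumed non-linearity)."""
    return [math.tanh(2.0 * y) for y in linear_device(signal)]


def measure_impulse_response(device, length):
    """The 'one measurement': feed a unit impulse and record the output."""
    impulse = [1.0] + [0.0] * (length - 1)
    return device(impulse)


def max_prediction_error(device, test_signal):
    """Predict the output from the impulse response, compare with reality."""
    h = measure_impulse_response(device, len(test_signal))
    predicted = convolve(test_signal, h)
    actual = device(test_signal)
    return max(abs(p - a) for p, a in zip(predicted, actual))


test_signal = [math.sin(0.3 * n) + 0.5 * math.sin(1.7 * n) for n in range(64)]
linear_error = max_prediction_error(linear_device, test_signal)
nonlinear_error = max_prediction_error(nonlinear_device, test_signal)
print("linear device error:    ", linear_error)
print("non-linear device error:", nonlinear_error)
```

[For the linear device the prediction matches to within rounding error. For the clipped device the same procedure is clearly wrong, which is the situation Berners describes: a measurement alone cannot be trusted unless the non-linearity is understood.]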

That goes hand in hand with how we do our measurements. We do use specific signals to cross-check our model — I’ll take a piece of gear, and start with the schematic, and write out with a pencil how it works. And then it’ll turn out that there are certain things in that circuit that aren’t really specified by that schematic. There’s a lot of behavior of different components – say you have a transistor or a tube or something – [where] you can write the part number on the schematic, but that doesn’t fully specify what that part does. We do have to do some measurements, but the only way we can trust the results of the measurements is if they’re informed. If I try to just take a piece of equipment as a black box, if I didn’t already know what was inside the box, it’d be impossible to make a good model.

You can do signal modeling, where you have some array of test signals that maybe you’ve developed and see what happens to them when they go through the equipment — and that to me is the risky way to do it. And then the other method is called physical modeling, where you try to understand all the processes that are happening inside the box. With that type of a model, you have to build the behavior from the bottom up, and then once you’re done, you need to verify that you’ve got all your parameters right. So instead of unknown types of behavior you just have unknown parameters. So you might say, I know there’s a capacitor inside here, and it probably has some resistance associated with it, and that resistance doesn’t appear on the schematic, because nobody knows what it is. But I can find out what it is by doing a particular measurement.
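[Editor's aside: the "unknown parameters, not unknown behavior" idea can be sketched with a toy case. Suppose the physics says a capacitor discharges through some resistance that is not on the schematic, so the voltage decays as `v(t) = v0 * exp(-t / (R * C))`. If C is known, a targeted measurement of the decay reveals R. All values and function names below are hypothetical, and the "measurement" is simulated rather than taken from real hardware.]

```python
import math


def simulate_discharge(resistance_ohms, capacitance_farads, v0, times):
    """Stand-in for a bench measurement of a capacitor discharging."""
    tau = resistance_ohms * capacitance_farads
    return [v0 * math.exp(-t / tau) for t in times]


def estimate_resistance(times, voltages, capacitance_farads):
    """Fit a line to (t, ln v); the model says its slope is -1 / (R * C)."""
    logs = [math.log(v) for v in voltages]
    n = len(times)
    mean_t = sum(times) / n
    mean_l = sum(logs) / n
    covariance = sum((t - mean_t) * (l - mean_l) for t, l in zip(times, logs))
    variance = sum((t - mean_t) ** 2 for t in times)
    slope = covariance / variance
    return -1.0 / (slope * capacitance_farads)


CAPACITANCE = 1e-6        # assumed known from the schematic (1 microfarad)
TRUE_RESISTANCE = 4700.0  # hidden value the measurement should recover

times = [i * 1e-3 for i in range(1, 11)]  # sample every millisecond
measured = simulate_discharge(TRUE_RESISTANCE, CAPACITANCE, 5.0, times)
estimated = estimate_resistance(times, measured, CAPACITANCE)
print("estimated resistance (ohms):", estimated)
```

[The fit only works because the form of the behavior was known in advance. Without the exponential model there would be no reason to choose this test signal or this fit.]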

So then what happens is we’ll build up a behavioral model based on the physics of all the parts. And then only after we make the model can we decide what test signals are appropriate to expose all the unknown parameters. Every model that we make of a different piece of gear, we’ll have to invent a completely different set of test signals to find out the parameters of all the different components. Hopefully we’ll be able to do something without taking everything apart. In some cases, there are behaviors that are unobservable directly. Sometimes we’ll have to unsolder all the components and measure them separately and then put it back together. In general, we’re more comfortable trying to understand the real processes that are happening inside a box.

I’d assume you get better at doing this over time. Does what you learn in one place carry over to another?

Yeah, that’s half of it, and then the other half is, as time goes on, we have more and more processing power available to us. There are certain things that we would have liked to do in 1999 but couldn’t. We already foresaw that we might eventually be able to add some of these effects in. Every once in a long while, it’s worth revisiting some of these things and saying, well, now I have one hundred times more computational power available to me, so now I can start putting in more effects that are less noticeable than the ones we put in already, but maybe above the threshold of being able to be perceived.

So not only do we learn more as we do more projects, but we also have more opportunity to include effects that would have been too expensive ten years ago.

That seems to be a story that’s largely untold. People are aware of the trajectory of CPU power over the years. People are now looking at the area of the GPU and low-power CPUs. But people seem unaware that DSP chips have grown, too. It seems the bang for your buck is better today than it was even recently.

Oh, yeah, I’d say definitely. I think the tangible manifestation of that idea can be seen by the difference between our original UAD-1 card and the UAD-2. Over the last couple of years since the UAD-2 came out, we’ve had increasingly power-hungry processors that we’ve released. Now we’re to the point that a lot of this stuff would not have run even one instance on the old card. But we can still do it. We always feel like if there’s a question whether to include a part of the model that’s a little bit expensive, so far, we always put it in. The amount of processing power is never going to be reduced. We’d rather include more right now, because then we’re ahead of the curve.

Sometimes, it’ll turn out that we’re at a certain point in complexity, and in order to gain a tiny bit of perceptual improvement, it’d take a huge computational cost. And so then we figure we’re at a sweet spot, and so that’s good. Other times, we’ll look at something, and maybe by increasing computational cost a tiny bit, we could get a significant perceptual improvement. Then we may be inclined to put it in even if it stretches the current capacity of our hardware. There’s also cases where, if we feel like something’s gotten really expensive, sometimes we’ll make two versions of a plug-in. We always try to order everything so we take care of the major artifacts first.

Let’s talk about the Studer. First, to revisit this idea of process, where do you start modeling something like this? In some ways, it’s not the most non-linear of the things you’ve had to model. It does seem like it’s a complex system. There was a lot there to take into account in the design.

Yeah — the signal path is long, and there’s a lot of things happening in there. Also, the non-linearities, while they may not be as dramatic as, say, a guitar stompbox or something, they’re considerably complex. There’s a lot of behavior that has a fair amount of subtlety. I think that just about any magnetic mechanism is going to be complicated, because of the hysteresis that you get in magnetic processes. Not only is the tape deck magnetic, but it has a spatial extent. So whereas, if you have say a transformer or an inductor with a magnetic core, unless you’re being very picky, the coils of the wire don’t really move. They might deflect a tiny bit when current goes through them, but for the most part they stay put. And if you imagine the coils of wire are actually fixed on a transformer, the fields that are created don’t change their shape that much, unless you have a material that’s really saturating a lot. Basically, you have a one-dimensional system.

Whereas with the tape, there’s the thickness of the tape and then the width of the tape, and then there’s the length of the tape on which you’re making the recording. That’s all going by the heads, the record and the playback heads, and so the geometries become really important. Any time you have a system that’s got a spatial extent, and especially one that’s got moving parts like that, the computational complexity can go way up. Let’s say you have a tape that’s magnetized, it’s not going to be uniformly magnetized. The magnetization will be a function of the depth of the tape and the width of the tape and of course the length. If you wanted to keep track of all of that stuff, you have this sort of geometric explosion of complexity. It was really necessary to think very hard about how we could have some kind of a model that would be practical to implement – keep all the subtlety that we wanted to have.

Even though the original intent of the [Studer] deck was to be as linear as possible, to be a transparent recording medium, all those different factors made it one of the longer-term projects that we’ve ever done – just trying to figure out how to do the simplifications that we were going to have to do in a way that wouldn’t really detract from the fidelity of the model.
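[Editor's aside: hysteresis, mentioned above as the reason magnetic mechanisms are complicated, means the output depends on the history of the input and not just its current value. The sketch below uses a generic "backlash" operator as a toy illustration. It is not a model of magnetic tape and not anything UA has described using; the function name and the `half_width` parameter are made up for the example.]

```python
def backlash(signal, half_width, initial=0.0):
    """Toy hysteresis: the output only moves when the input drags it along."""
    out, y = [], initial
    for x in signal:
        if x - y > half_width:
            y = x - half_width   # input pulls the output upward
        elif y - x > half_width:
            y = x + half_width   # input pulls the output downward
        out.append(y)
    return out


# Sweep the input up to 1.0, down to -1.0, and back to 0.0.
sweep = [0.0, 0.5, 1.0, 0.5, 0.0, -0.5, -1.0, -0.5, 0.0]
response = backlash(sweep, half_width=0.25)

falling_through_zero = response[4]  # input is 0.0, arriving from above
rising_through_zero = response[8]   # input is 0.0, arriving from below
print(falling_through_zero, rising_through_zero)  # 0.25 -0.25
```

[The same input value produces two different outputs depending on where the input has been. A model of such a system has to carry state, and a tape model would have to carry it across the depth, width, and length Berners describes.]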

So, how much of this was theoretical? At what point do you have to look at the actual hardware?

We knew that we’d have to have a machine. Just distinguishing between the different tape formulations, it would be very difficult to be confident in those models done all in the abstract. This is one of those cases where we like to have a model, but it’s very important to be able to cross-check the results with the real thing. We had a Studer deck that we got from Ocean Way [Recording, the legendary Hollywood studio] and brought it into our studio. It’s been here for the last year and a half or so, and we’ve used it heavily. It’s really tough to take something like that apart; the cards plug into the interior of the machine. So we’d take the cards out and work on them, and I soldered a bunch of leads on different parts of the circuit that I wanted to look at, and then we could temporarily just lift a component if we really needed it to be disconnected.

For this, we ended up bringing in a bunch of scopes and other test equipment into the control room. I soldered flying leads onto the cards. It really turned out to be critical that we could look at different points inside the circuitry while we were using the deck.

It’s kind of related to the stuff I was trying to describe before. Even if we know a model for the whole process, if we want to expose a particular non-linearity or behavior at a certain point in the circuit, it’s a lot easier if we can look at data right from some internal circuit node rather than the output. So that’s how we did our verification – and obviously, listening, too.

It’s well known that the Studer is really carefully designed to have high fidelity and be well-behaved. But in spite of that, it turns out that there’s a little bit of non-linearity on the record amplifier, so the signal’s [got] some artifacts associated with it on the way to the record head. So that’s why we felt we had to monitor all these points on the circuit.

On the other hand, if we would have just started hooking up wires at different places and blindly tried to figure out what’s going on without knowing anything about how this stuff works, there’d be no way to work out a workable model. If we put out some signal that we just made up out of thin air, it would be overwhelming.

Having gotten intimate with this equipment, can you comment on what makes this gear so desirable in the first place, aside from pleasant associations with it historically?

I’m comfortable with audio and music, but I don’t want to decide upfront that something will be unpleasant or undesirable and leave it out. I’d rather put everything in. It’s never obvious, really, which artifacts are the desirable ones.

It seems really the opposite from what we’re seeing in consumer photography. There, when you see iPhone apps like Hipstamatic and Instamatic, the idea is to apply very specific, desirable qualities from a camera intentionally, rather than to model the whole camera. So they really have decided what’s desirable.

[laughs] If we had as many customers as the iPhone, maybe we’d charge $2 for an app.

Hey, in that case, maybe you’d just listen to your entire song library as if it were coming through the Studer.

That’s right. That could actually be great.

There is a place for that line of thought. And to me, that place is to make forward progress. Let’s say that we’ve analyzed a hundred highly-prized pieces of vintage gear, and tried to understand what makes them all special. Now, it gives us hopefully a good information base, and maybe a little intuition ourselves of how we’d design a new piece of equipment if we wanted it to have a specific sound. If we were going to design something like that, then we’d have a lot of freedom that we wouldn’t [otherwise], if we’re not claiming it’s identical to something.

For us, it’s worth it to do the modeling just to achieve the models ourselves. And when we are doing a model, we don’t want to interpose our own ideas about what’s important. The one case where we do is if there’s really solid evidence from psychoacoustic experiments that people will not be able to perceive something, then we will neglect that if it turns out to be expensive to put things in.

We’re willing to accept the fact that people will be unable to perceive certain things. But what we’re not willing to do is to decide whether something will be pleasant or unpleasant.

What were some of your favorite projects from UA’s now fairly large back catalog – or what were the toughest models you worked on?

Almost the first two models were the 1176 and the LA2A. And it’s kind of interesting to think about those, because they both were difficult, but for different reasons. The LA2A has this little electro-luminescent panel in there that lights up and shines on a light-sensitive resistor, and that’s how the compression happens. And it turned out that the physics of that panel were very difficult for us to understand. And so we spent a long time trying to figure out how in the world we would even understand the mechanism of how that worked, and then characterize it somehow. The behavior was just very, very complex and multi-dimensional. It just was very difficult. It really was satisfying to finally get a model that had the right behavior.

The thing that made the 1176 very hard was that the attack is very fast. It’s actually faster than one sampling interval if you’re at 44k. The attack is pretty much just about complete by the time you advance one sample forward in time. Even though we could characterize the behavior of the 1176 more easily than the LA2A, implementing the plug-in became very, very tough, because we had to make this feedback loop. It’s a feedback compressor, and we had to make the loop behave properly, even though these processes were happening much faster than one sample period. So we had to think really hard in terms of how to implement the thing. There’s a lot of different ways to get stuck — you could get stuck trying to understand the actual process, or you might understand the process but then think, “How can I implement this as a digital system?” So at different times, we’ve had different things that stuck out as the tough part of a project.
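[Editor's aside: assuming "44k" means the common 44.1 kHz rate, one sample interval is about 22.7 microseconds. A feedback compressor computes its gain from its own output, so a straightforward digital version inserts a one-sample delay in the loop, which is too slow for an attack that finishes within a sample. The interview does not say how UA solved this. The sketch below only illustrates the problem, using a hypothetical memoryless gain law with no attack or release, and shows one generic alternative: solving the loop equation `y = x * gain(|y|)` for each sample by bisection.]

```python
import math


def gain_from_level(level, threshold=0.5, exponent=2.0):
    """Hypothetical gain law: unity below threshold, reduced above it."""
    if level <= threshold:
        return 1.0
    return (threshold / level) ** exponent


def compress_with_unit_delay(signal):
    """Naive loop: the gain is computed from the previous output sample."""
    out, previous = [], 0.0
    for x in signal:
        y = x * gain_from_level(abs(previous))
        out.append(y)
        previous = y
    return out


def compress_delay_free(signal, iterations=40):
    """Solve y = x * gain(|y|) within each sample by bisection on |y|."""
    out = []
    for x in signal:
        lo, hi = 0.0, abs(x)
        for _ in range(iterations):
            mid = 0.5 * (lo + hi)
            # The residual mid - |x| * gain(mid) increases with mid.
            if mid - abs(x) * gain_from_level(mid) < 0.0:
                lo = mid
            else:
                hi = mid
        out.append(math.copysign(0.5 * (lo + hi), x))
    return out


step = [0.0, 0.0, 1.0, 1.0, 1.0, 1.0]  # silence, then a sudden loud signal
delayed = compress_with_unit_delay(step)
delay_free = compress_delay_free(step)
print("unit delay:", delayed)
print("delay free:", delay_free)
```

[With the unit delay, the first loud sample passes through uncompressed and the output then bounces between 1.0 and 0.25. The delay-free version settles immediately on the consistent value, the cube root of 0.25, roughly 0.63. A real compressor model would also need attack and release dynamics, which this toy leaves out.]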

It does sound like you have a strong philosophy.

When I first started working at UA, Bill met with me and said he had the idea and the vision to do these models, based on physical process. It’s been a company point of view, irrespective of who does the work. There’s actually three or four of us now that do algorithms here, all working with the same ideas and the same ways of going about things. It’s a broad angle of attack that we as a company decided to do, not something that any one person developed.

Bill’s been really great to work with from the days when we were in school together up until now. One thing I really admire about Bill is that he can look at a problem and reduce it to the important components immediately. He can look at something that’s really complex and has a lot of different factors that are difficult to dis-entangle for someone else and get right down to what the important behaviors are going to be. It’s just a really nice, organized way of thinking that he has.

I should also mention Jonathan Abel [founder of Kind of Loud before it merged with UA]. He had a lot of input – a huge effect on the shape that the first batch of plug-ins took. He worked a lot in the trenches on the algorithms with me, and had a huge impact on how that stuff came out.

So why model historic gear in the first place? And once you are done with the process, what does that tell you about why people value these tools?

That’s a tough question to answer definitively. It’d be very hard for me to make a convincing argument that someone should want to have those models and use them all the time. But if you just want to answer the question, why would someone ever want them, then it’s easier to answer that question.

There are thousands and thousands of vintage products that have been designed. The ones we focus on are the ones that for some reason have become highly coveted. In a lot of cases, those ones are the ones that were the most carefully designed or the most expensive things available at the time. Not all of them – some of the stuff that turns out to be really popular and sound great, some of those things have a lot of their good characteristics almost accidentally. I’m absolutely sure when we do a lot of these models, we know things about the circuit that the engineers didn’t. People would design something and then just put up with a little bit of an artifact without understanding it or caring about it, whereas we, if we want to recreate that, have to go down into the weeds and really understand it to a higher degree than sometimes the people who designed the gear.

For whatever reason, there are certain pieces of gear that have become super-popular. Whether it’s an intrinsic human characteristic to like those things, or whether it’s cultural weight, or familiarity, for whatever reason, they’re pleasing. And so, for people to have those sounds available to them I think is always going to be beneficial, until people just forget about those sounds, if that ever happens.

I think familiarity definitely leads to comfort, and comfort can lead to creativity just as well as being off-balance can. They’re two different kinds of things. It seems you were making the point that there’s a whole world of new stuff out there where you could make new sounds, and that’s probably true. I hope that people – even us – continue to do that kind of work, too. On the other hand, there are certain sounds people are used to and enjoy, and I think it’s good to have those sounds at their disposal, too.

These tools allow someone to make a recording with a grounding, that gives it a pleasant, familiar, comfortable sound. And then you still have the freedom to add your own novel ideas to the music. Maybe someone’s never used that piece of equipment before, but they’ve probably heard records that were made with that equipment.

It’s happened to me in development. For example, when we did our very first Neve EQ model, I worked out all of the math, designed all of the filters and everything. And then I got hold of the hardware, and started cross-checking the results of my design with the hardware. And I started playing music through both. It was really eye-opening to me. I made some adjustments, and thought – oh, it’s that sound. I know what that sound is; I’ve heard a lot of records that sound like that. But I never knew that that was a 1073 making that sound. But now I do. And it’s the same thing with the 1176 – you know, like I said, if you put that on a drum kit, you think, oh, it’s that, it’s this record and that record, and it’s a beautiful sound, and I always wondered how people got that sound. To me, it’s kind of exciting to have that comfortable feeling of thinking, I love that sound, and now I can do it.

By the way, I’m a design engineer, not a professional musician or a recording engineer, so these perspectives should probably be given very little weight. But I’m just telling you my personal opinion. There’s other people even within UA that probably should carry a lot more weight. I don’t know; I like creativity in music, but also a grounding in some aspect of it that sounds comfortable and familiar.

Thanks, Dave. We’ll be taking a closer look at the Universal Audio solutions and where to begin using their stuff in your music, as well as their new FireWire-based Satellite for you Mac users. And in the interest of balance, I also have a very different take on modeling analog, from another guy named Dave. I spoke with Dave Hill about HEAT, the Avid product; watch for that interview soon. HEAT is quite different from the UA stuff, but you’ll hear some familiar themes about the larger picture. Got questions for this Dave and UA? Thoughts on your own experiences with hardware and software? Let us know in comments.