There’s a peculiar false controversy going on at the moment over music notation. First, the blog for online (Flash-based) browser notation editor Noteflight introduced a manifesto:

Music Notation Today, Part 1: A Brief Manifesto

The essay by president Joe Berkovitz is a good read, but it oddly makes the comparison between notation and recorded sound, which is a bit like saying a telephone is better than a DVD. One is interactive and intended for human conversation; one is not. So, go ahead and enjoy the copy of Inception that arrived from Netflix — just don’t take it as an excuse not to call your mother. It’s an argument notation will win, to be sure; it’s just not really a very fair fight.

That is, of course, the implication of Berkovitz’s argument, but the failure to state it overtly prompts Synthtopia to run with the comparison:

Does Music Notation Matter For Electronic Music?

Synthtopia’s James Lewin then goes on to make the following argument:

While Berkovitz argues in favor of “looser” communication of music, an over-arching trend in electronic music has been to give you greater and more immediate control over sound.

I’ve heard this before, and it’s worth asking. But I think if you really ask the question, you’ll find that notation isn’t less relevant: it’s profoundly more relevant.

Yes, indeed, electronic music does give composers direct control over sound for solo work. Lewin goes on to say, “For example, it’s fairly routine for composers to create large scale works, such as soundtracks, without the use of traditional notation.” True — so long as they don’t hire any musicians.

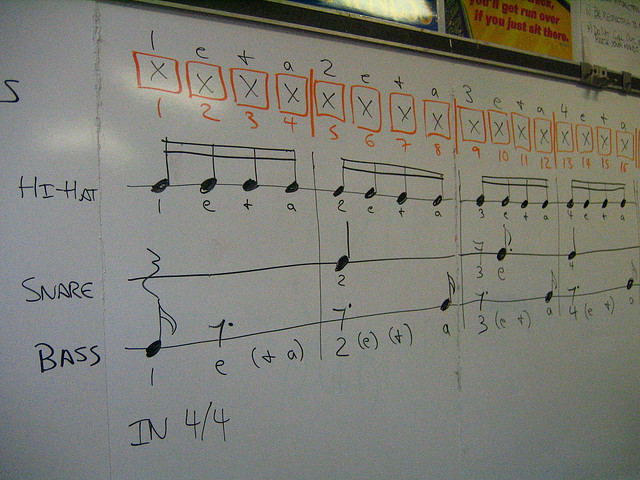

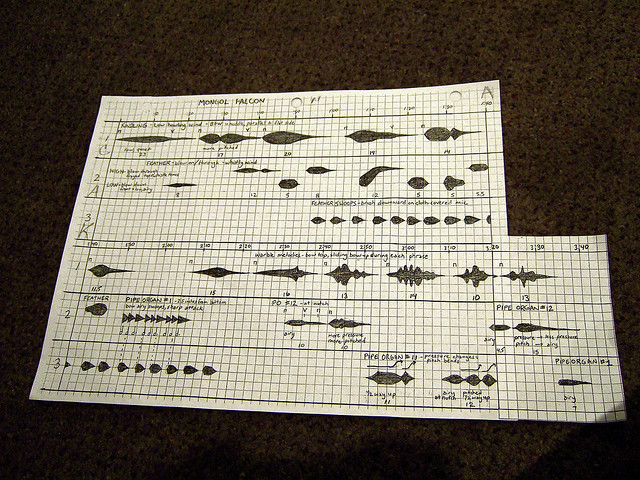

Involve more than yourself, and you’re back where you started. Let’s assume, for instance, you want turntablists, samplists, or controllerists. Great! Oh, wait – you might need to tell them what to do. Now, you could try to explain it to them, but the moment you want to provide any kind of structure to the improvisation, odds are you’ll need some sort of picture.

“Some sort of picture” has always been the core element of music notation. The issue of whether this follows traditional 19th-century engraving practice is irrelevant – and that practice is entirely inappropriate to many forms of music. But if you draw a picture, whether you use a computer to make that picture or not, it’s a score.

Even working alone, these kinds of representations become critical. We might assume that the computer “marginalizes” notation because it facilitates solo work. But as we remember the contributions of Max Mathews this week, it’s important to note that from his first pioneering digital synthesis system over half a century ago, there was always the notion of some sort of musical structure. (In Csound to this day, it’s called a “score,” and not by accident.) Whether you notate on a staff, in pictures, or in code, you create a representation of musical structure in time. In a conventional score, that representation is interactive and open to interpretation. Computer programming languages and graphical patching environments give us new ways of doing this. Sharing that code or graphical patch lets us share our ideas with others. And the moment you want someone to perform a physical gesture to make your music, you return to the same set of needs that have driven music notation for millennia.
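To make “notation in code” a little more concrete, here is a minimal sketch in Python. It is not Csound syntax, and the names (`Note`, `score`, `total_duration`, `sounding_at`) are hypothetical ones chosen for illustration. It only shows that a list of events in time is already a kind of score that you can read, query, and share.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    """One event in a score (hypothetical structure, for illustration)."""
    start: float     # onset, in beats
    duration: float  # length, in beats
    pitch: int       # MIDI note number (60 = middle C)


# A four-note phrase: a tiny "score" expressed as data.
score = [
    Note(0.0, 1.0, 60),
    Note(1.0, 1.0, 62),
    Note(2.0, 0.5, 64),
    Note(2.5, 1.5, 67),
]


def total_duration(notes):
    """Return the beat at which the last sounding note ends."""
    return max(n.start + n.duration for n in notes)


def sounding_at(notes, beat):
    """Return the pitches that are sounding at a given beat."""
    return [n.pitch for n in notes if n.start <= beat < n.start + n.duration]
```

Asking `sounding_at(score, 2.25)` is the code equivalent of putting your finger on a point in the staff and reading what is there.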

There are fancy solutions — see this paper, with lots of pretty images of “spectromorphology,” for one — but how fancy it is doesn’t matter. You’ll need something, even if you scrawl on napkins.

In fact, the moment you want to think about the musical structure, you’re likely to use some sort of visual or representational metaphor. Open up any music software program, and these representations are ubiquitous. Waveforms and spectra are accompanied by piano rolls, graphs, blocks, colors, and symbols. The Ableton Live Session View has LEGO-style colored blocks. Drum machines represent rhythmic subdivision in units derived from centuries of notation; take away even the handy notes and flags Roland added to theirs, and you still see a grid that you could quickly explain to someone who fell through a wormhole from the 16th century.
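The drum machine grid is simple enough to sketch in a few lines of Python. This is an illustrative assumption about how such a grid might be written down, not how any particular drum machine stores its patterns; the instrument names, the `x`/`.` convention, and the `hit_times` helper are all made up for the example.

```python
# A one-bar pattern on a 16-step grid (sixteenth notes in 4/4).
# "x" marks a hit, "." a rest; the instrument names are illustrative only.
pattern = {
    "kick":  "x...x...x...x...",
    "snare": "....x.......x...",
    "hat":   "x.x.x.x.x.x.x.x.",
}

STEPS_PER_BEAT = 4  # four sixteenth-note steps per quarter-note beat


def hit_times(row, bpm=120):
    """Convert one grid row to onset times in seconds at the given tempo."""
    seconds_per_step = 60.0 / bpm / STEPS_PER_BEAT
    return [i * seconds_per_step for i, cell in enumerate(row) if cell == "x"]
```

The text grid is the notation; `hit_times` is just one possible reading of it. A different tempo or a human drummer would be another.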

If you want to take any one of those patterns and give it to another musician, then you will certainly translate it into a picture. If traditional notation is the most appropriate, you’ll use that. If graphical notation gets the point across more clearly, you’ll do something non-traditional. But that question has everything to do with intention and communication. You might need to adapt the notation to the technology, but that’s always the case. The turntable requires some specialized symbols, but so, too, do fingerings on a woodwind or plucking technique on a harp.

Speaking as a composer, I’d say that what frustrated many composers in the 20th century about notation was actually the same criticism typically leveled against the computer: notation was too precise, too limiting, too entrenched in certain expectations about measuring time and tune. If you really only wish to organize sound in the privacy of your own home, never involving another human being, you might find these attributes of the computer appealing. But if anything, the computer has given us the potential to be freed from these same limitations, by allowing us to quickly create new graphical and textual languages for representing music, and by reassigning time, tune, and timbre to anything we can possibly imagine. In doing so, it presents new frontiers for other human beings to improvise and perform live, whether they’re working with another digital machine, their own voice, or a kazoo.

What has electronic music done for music notation? Simple: it’s expanded its necessity, broadened its meaning and applications, facilitated its storage, transmission, and sharing, simplified its production, exploded its possibilities in everything from graphics to interactivity, and freed it from centuries of accumulated restrictions.

My prediction: if you want to look for the growth area in music technology, it’ll be in notation. We’ll see more of what we already have (conventional notation), and a broader category of what qualifies as musical notation – a greater spectrum of notational systems:

- More kinds of visual musical notation. New interactive systems will facilitate explosive exploration of the connection of visual symbols to sound.

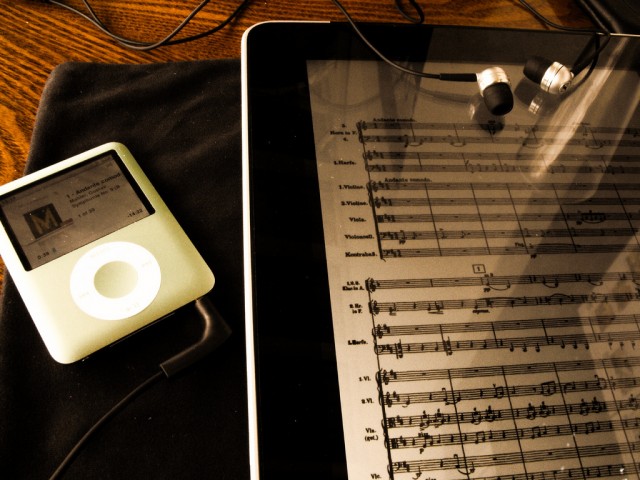

- The display becomes a blank page. Tablets of all kinds – the iPad being only the beginning – will adapt computer displays to forms usable in performance. That’ll be a huge boon to conventional notation and new graphical notational systems alike.

- More connected. The ongoing growth of the Web will mean new ways to edit, share, and view notation. Case in point: guitar tab is massively popular as a search term online.

- More possibilities. Whereas engraving systems restricted notational practice to certain (largely Western) traditions, open-ended computer notation will make it easier than ever to use alternative notations and non-Western systems.

- More people. People will continue to play instruments. And they’ll need to notate gestures for new instruments as they’re invented.

- More improvisation. Written notation and improvisation aren’t necessarily at odds. Any culture with writing will typically make some annotation, no matter how simple, on a score, even if only squiggles on a sheet of lyrics.

The only way recorded sound would make this go away is if recording makes people stop making live music. But recording, for all the times it threatened to do that, hasn’t succeeded yet in making that happen.

In fact, the potential of digital technology for notation is so broad, so diverse, that it almost does it a disservice to put it in one post. So don’t look at this as a manifesto: look at it, instead, as a challenge, to look at new ideas in electronic music in terms of how they use design, visuals, and textual representation to communicate ideas.

Viewing the world of sound through the grand staff is limiting, and for certain sounds, anachronistic. But to cease to view music through any kind of representation whatsoever would mean abandoning musical thought itself.

I love this definition of music notation on Wikipedia: “Music notation or musical notation is any system that represents aurally perceived music, through the use of written symbols.”

The word “written” doesn’t really fit; if it did, engraving would have killed musical scores, and writers would have stopped “writing” when they bought typewriters. Music notation, like language itself, is fundamentally symbols.

Oh, and by the way – editing and sharing scores in your browser? Pretty darned cool. And if you think Internet access isn’t capable of making revolutions happen? Well…

More exhibits:

What about the vision impaired? Using notation does not require having sight; computers have been a boon to expanding access to notation. The late Ray Charles was a Sibelius user; sadly, it seems Dancing Dots no longer supports Sibelius, but there are other options. The GPL-licensed open source Freedots continues to work with MusicXML scores for compatibility with many tools. Dancing Dots continues to offer a variety of software and hardware tools for varying degrees of vision impairment, from low vision to blindness. These also include interfaces that make other music software usable, notably Cakewalk’s SONAR. A 2006 overview from the Texas School for the Blind and Visually Impaired discusses some of the research and tools.

What about rote learning? None of this is to take away the power of rote musical learning. But that’s independent of the computer question; rote musical transmission is perhaps the most direct means of communicating a musical idea between people, and illustrates how significant human communication is to the musical process. And even through rote learning, I would think you might come to understand certain patterns of mode or rhythm, which means internalizing those patterns as some kind of mental representation or symbol.

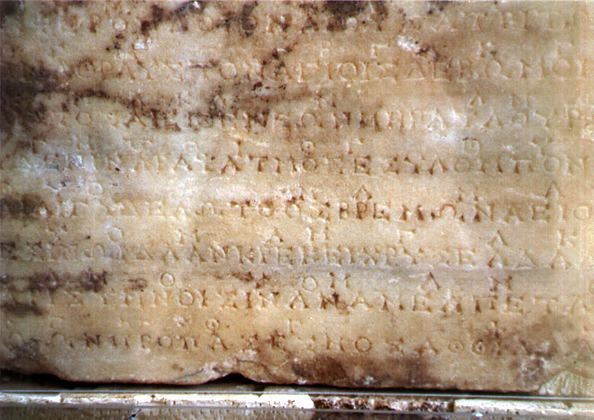

Where these cultures have writing, they tend to have some form of notation. So, for instance, in India – even in a culture in which oral transmission is common – notation has been found as early as 200 BC.