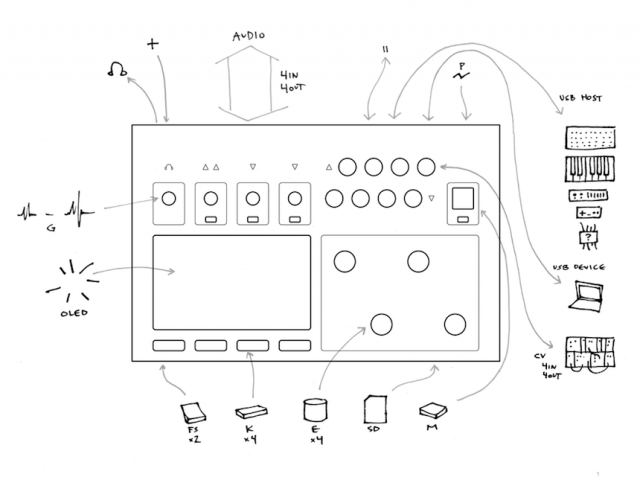

aleph is something of a curiosity: it’s a dedicated box uniquely designed for sonic exploration that isn’t a conventional computer. It comes from the creator of the monome, but while dynamic mapping is part of the notion, it is the first monome creation capable of making sound on its own. The monome is a controller that uses a grid for whatever you want; aleph is a self-contained instrument that makes any sound you want.

In review:

aleph, from monome: Programmable Sound Computer That Does Anything

But this isn’t only a story about some specialist, boutique device. It’s a chance to peer into the minds of two imaginative inventor/musicians, and see what they think the future might hold for their own musical creativity.

And so it’s a pleasure to talk to Brian Crabtree and Ezra Buchla. As brain-picking targets go, you could do worse. Synth pioneer Don Buchla is Ezra’s dad, of course – but it’s just as significant that Ezra has been a big figure in the experimental scene, including as a composer and doing turns in experimental bands The Mae Shi and Gowns. (See monome’s own, engaging interview with Ezra from May.) And then there’s Brian, whose vision has proven as prescient as anyone’s in recent years. What appeared as a boutique oddity in the monome has predicted a striking number of trends in hardware. It pushed openness, sustainability, community support, and more minimal, material-minded, un-branded physical design. And it has been followed by years in which the light-up grid it championed has become the single most dominant paradigm for controlling music on the computer. Not bad for an independent designer.

Of course, the monome had its fair share of criticism when it debuted. Heck, I even complained about its lack of velocity sensitivity at the time. But that’s another reason to look deeper here. Sure, aleph is pricey. Sure, it’s not as easy to run custom software as it would be on an embedded Linux device. Sure, there aren’t going to be a whole lot made. But as a digital musical instrument, there’s a chance for even a few alephs to make a big impact – and for the ideas behind it to spread beyond this project alone.

So, let’s hear some of Brian’s and Ezra’s ideas, on the eve of the aleph launch.

We also get treated to a new video for more evidence of what this could become.

By the way, if you like interviews – there’s a terrific series of artist interviews on the monome site, focused as much on music as on grids. It’s well worth saving to read, as there are now around a couple dozen articles:

http://monome.org/category/interview/

See also a great interview with monome (Kelli and Brian):

THOSE WHO MAKE

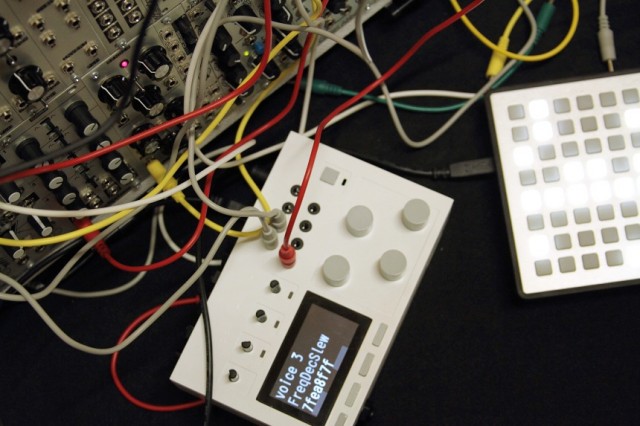

Video: aleph prototype life modulator echo cutter [shot and edited by Kelli Cain, with Brian Crabtree]

aleph prototype life modulator echo cutter from tehn on Vimeo.

CDM: First, most generally, why a dedicated box? Operating systems can cause problems, yes – but it is possible these days to set up a Linux system, for instance, with low latencies and high reliability, especially if you aren’t using a GUI. So why go this route?

Brian: For myself there are several primary motivations. First and most important is having a machine that powers on almost immediately and is ready to do something interesting, more akin to an instrument than a fragile environment which fundamentally requires a lot of setup. It feels strange to be able to power on the aleph, plug in a grid, and immediately be running some sort of algorithmic sequencer driving a synth in complicated ways. This is, of course, all possible on a computer, but I find a lot of frustration in perfectly calibrating a long chain of software and hardware. While I prefer my tools to be unpredictable in what they might sound like, I prefer predictability when it comes to reliability. We don’t have to worry about operating systems– there is none. There doesn’t need to be an upgrade cycle which creates obsolescence. I like the idea of this platform still working in ten years.

Ezra has done almost all of the code, so for me also this presented an exceptional opportunity as a learning platform. We decided to extend this learning to anyone interested by making the frameworks open-source, facilitating a development process, and, very soon, creating tutorials. The aleph is looking to be a wonderful little ecosystem for DSP and control experiments.

Ezra: Performance was certainly a goal, and is facilitated by designing at the lowest level– programming directly to the chip. On the DSP side, we can do single-sample latency. We’ve been down the path of embedded Linux for audio. It seems not quite ready to support this kind of project on a scale that’s bigger than pure DIY and smaller than full-scale industrial manufacture. In a word, Linux adds too much overhead in systems complexity. For us to make effective use of it, we’d have to become kernel developers, which is not happening. Programming the chip directly is more efficient and, in a way, less work.

What was the musical application envisioned here? It seems there were some notions that were driven by your musical needs, and Ezra’s. Can you talk about the personal motivation, and how you think it might extend to the user base?

Brian: This is the most difficult question, of course – because I don’t want to narrowly define capabilities. It can be a drum machine or synth or echo-y texture processor, yet what I’m most excited about is having a system which gracefully facilitates experimentation. A box that I can modify severely or instead just turn on and play.

Ezra: My thoughts exactly. The possibilities of musical computing have filled many books and will never be exhausted.

But for a couple of specific examples: I’m looking forward to using complex and time-varying networks of filtering and buffer manipulation carried over from the laptop. (The things that computers are good at: granular delay, live-sample-chopping, additive synthesis, resonant networks.) It feels great to take a single rugged box to a theater or gallery and just plug the instrument into this kind of processing.

At the same time, I’m stoked on a new percussion synthesizer that I’d never even thought of making before, and the new musical territories it suggests. We’re lining up an inspiring crew of developer-artists to participate in this experiment, and I’m looking forward to the diversions they discover.

Is there so far any particular connection to the monome community, in terms of interest from there?

Brian: There is substantial interest in the monome community in that I suspect many people share my same goals: being able to use controllers in interesting ways in a more immediate way, the same way that people enjoy using modular synths or just playing a real piano.

It seems many in the monome community are looking forward to getting deeper into programming. The device tends to propel users into learning more about technology than they expected. If that is your thing, it’s incredibly empowering.

aleph prototype looper and drum synth from tehn on Vimeo.

Can you talk at all about BEES, your new modular environment, or show us what it looks like?

Brian: It’s basically a control environment which allows control sources (knobs, footswitches, monome grids, MIDI, etc.) to be mapped to parameters (control parameters like preset number or knob range, but also DSP parameters like feedback or filter cutoff.) BEES can switch DSP modules (each module tends to have a ton of functionality bundled together) while running, effectively “hosting” each one. The DSP module reports what parameters are available to be mapped.

Additionally, BEES creates “operators” which transform or generate control streams. So a multiplier could be added between a knob and a DSP parameter (say filter cutoff), changing the sensitivity of the knob. A footswitch could trigger a random operator which drives feedback (stomp stomp stomp stomp). A sequencer operator using a monome grid could drive the CV outs (to a modular) and then a heap of other operators could drive the sequencer in different ways (tempo, step, etc.) for something messy and interesting, all within the patching environment, without programming.
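[To make the routing idea concrete, here is a minimal sketch of the knob-to-multiplier-to-cutoff example Brian describes. It is not BEES code and does not reflect its actual API; the class names `Param`, `Mul`, and `Knob`, the `set()` convention, and the cutoff range are all hypothetical, chosen only to show a control source feeding an operator that in turn drives a DSP parameter.]

```python
# Hypothetical sketch of knob -> operator -> parameter routing.
# None of these names come from BEES itself.

class Param:
    """A mappable DSP parameter, as a module might report it."""
    def __init__(self, name, lo, hi):
        self.name, self.lo, self.hi = name, lo, hi
        self.value = lo

    def set(self, v):
        # Clamp incoming control values to the parameter's range.
        self.value = max(self.lo, min(self.hi, v))


class Mul:
    """Operator that scales a control stream before passing it on."""
    def __init__(self, factor, target):
        self.factor = factor
        self.target = target  # anything with a set() method

    def set(self, v):
        self.target.set(v * self.factor)


class Knob:
    """Control source; forwards each new value to its connected target."""
    def __init__(self):
        self.target = None

    def connect(self, target):
        self.target = target

    def turn(self, v):
        if self.target is not None:
            self.target.set(v)


cutoff = Param("filter_cutoff", 20.0, 8000.0)  # assumed range, in Hz
knob = Knob()
knob.connect(Mul(0.5, cutoff))  # halve the knob's sensitivity
knob.turn(4000.0)
print(cutoff.value)
```

[Because operators and parameters share the same `set()` interface in this sketch, operators can be chained or swapped without the knob knowing what sits downstream, which is roughly the appeal of patching over programming.]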

All of this stuff happens with a menu system. We’ve made a great effort to make it graceful, but we also acknowledge that designing a complex system requires more visualization, so everything in BEES will be controllable via OSC over the USB connection to a computer. A friend is working on a browser-based editor that we’re excited about.

We’ll have video soon of all of this.

Is there applicability beyond just aleph? I imagine this would be a question for people investing development time; is there a future for BEES or the stuff they write beyond this very limited-run hardware?

Brian: First off, I have no intention of this being a limited run – we simply produce according to perceived demand. If people are interested, we’ll make more.

While I understand there may be a desire to port BEES to an iPhone, it doesn’t seem a perfect fit. We designed the aleph to be good at sound and control specifically, rather than having a phone also be a stompbox using a dongle.

Ezra: We could, for example, build a big Max object that runs BEES, and you could run BEES patches in Max. Why not? Perhaps more reasonably, an aleph application host for Linux, that communicates via OSC, actually has existed at various points, and if it seems useful, it will get a resurrection.

As for the DSP: most of the audio code so far is object-oriented and easy to read, but not optimal. Inner loops will get harder to read as they get faster, and some heavy low-level stuff like FFT will be pretty tough going. But a good algorithm on the musical level is always reusable.

Just to make certain, I have this right, yes? — hardware is proprietary, but the software (including BEES) and toolchain will all be open source?

Brian: The hardware source will be proprietary in that we’re not going to post it publicly and rights will be reserved. But if someone is interested, we’d be happy to share what we’ve done. But what we’ve been doing is nothing like DIY electronics or terribly relevant to kit building. We are reasonable and curious about what happens to our work, and simply prefer that people contact us directly.

Why Blackfin DSP specifically? [aleph uses this DSP hardware/software platform.] What can musicians – or developers – expect out of this platform in terms of performance? How easy will it be to develop for?

Brian: In terms of development ease I’d say it’ll require pretty much the same commitment that most similar programming projects would require. We’re making a disk image with the toolchain set up which can be run in a virtual machine. It’s not going to be an Arduino experience, which is the product of years of great work removing complexity.

But really we have no illusions that the developer audience will be more than a small fraction of total users. What was important to us is to make these sources available, and to design a system that can be radically altered without programming. I’d rather be patch-editing than programming most of the time. We aimed for some equivalent of patching when designing the fundamental configurability of the aleph.

Ezra: The Blackfin is a fixed-point DSP with a peculiar dual architecture of 32-bit data buses and parallel 16-bit ALUs [arithmetic units]. So indeed, it is a different experience from what many programmers are used to. From my perspective, there are two big practical reasons for looking at this family of parts: speed-to-cost ratio, and accessibility — by which I mean, the freedom from proprietary toolchains and difficult packages like BGA [Ball Grid Array, difficult meaning in terms of assembly].

So when I hear (or ask) the question “why Blackfin” it usually refers to the lack of an FPU [Floating Point – math – Unit], and may be followed by, “why not SHARC?” [Analog Devices DSP platform] – the answer is lack of an open-source toolchain. Sometimes the followup is “why not ARM/NEON?” [accelerated instruction set for multimedia and DSP] which is sort of harder to answer. I guess because those tend to be SoC [System on a Chip] configurations, they feel overly complex, and have overall tended to be less appealing for this or that reason.

I like the Blackfin parts because they are fast and simple. The gcc [open compiler] tools work well, with an active community around them. The BF533 [DSP processor] is fast enough that quite naively-written C code can usually get the job done, and on the other hand the ASM [Assembler] instruction set is easy and, I’d have to say, fun. For example, it is well suited to mixed datatypes, and it will be great for porting 8-bit code into a massively faster and more parallel environment.

I don’t think using a fixed-point DSP is a hardship; it is a natural fit for audio. Anyways, the Blackfin float implementation is non-IEEE but fast enough to use when necessary, e.g. filling a lookup table. I think embedded DSP nerds will have a blast with this platform, and it only takes a few!
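[For readers unfamiliar with fixed-point audio, the sketch below illustrates the general pattern Ezra mentions: floating point is used once to fill a lookup table, and the per-sample loop then runs on integers only. It is written in Python for readability and is not aleph or Blackfin code. The 16-bit Q1.15 format, the table size, and every function name here are assumptions made for illustration.]

```python
import math

Q15_ONE = 1 << 15          # scale factor for Q1.15 (1 sign bit, 15 fraction bits)
Q15_MAX = Q15_ONE - 1      # largest representable value, 32767
Q15_MIN = -Q15_ONE         # smallest representable value, -32768


def sat16(x):
    """Saturate an integer to the signed 16-bit range."""
    return max(Q15_MIN, min(Q15_MAX, x))


def to_q15(f):
    """Convert a float in [-1, 1) to Q1.15, saturating at the edges."""
    return sat16(int(round(f * Q15_ONE)))


def q15_mul(a, b):
    """Multiply two Q1.15 values: wide product, shift back, saturate."""
    return sat16((a * b) >> 15)


# Floating point is used once, up front, to fill the table.
TABLE_SIZE = 256
SINE_TABLE = [to_q15(math.sin(2.0 * math.pi * i / TABLE_SIZE))
              for i in range(TABLE_SIZE)]


def render(n_samples, phase_inc, gain_q15):
    """Integer-only inner loop: table lookup plus a Q1.15 gain multiply."""
    out, phase = [], 0
    for _ in range(n_samples):
        out.append(q15_mul(SINE_TABLE[phase % TABLE_SIZE], gain_q15))
        phase += phase_inc
    return out


samples = render(8, 32, to_q15(0.5))  # one sine cycle at half gain
```

[Saturating instead of wrapping on overflow is the usual choice for audio, since a clipped peak is far less objectionable than a sample that flips sign.]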

All that said, it’s always nice to have more speed. I’m sure there will be criticism of the aleph’s processing power as it doesn’t compare to what a modern computer can do. But it compares very well with what a computer could do a decade ago (at considerable expense), and I’m old enough/young enough to be pretty stoked about that level of digital processing in a small, instant-on metal box with good sound.

What’s the latest on monome? What can we expect to happen next? And now that the world is starting to be full of grids, where do you see monome’s role – as monochrome and on/off buttons amidst RGB and pressure-sensing grids? (I suppose that in itself is sort of interesting.)

Brian: Monome is still just Kelli and myself, though we just hired on Trent Gill (Galapagoose, co-creator of [grid sampling instrument] MLRV). It’s been great collaborating with Ezra. I’m looking forward to refining and further exploring grids both on the aleph and with our existing application and user base. I still feel our minimalist grids have a level of flexibility not seen in others out there.

Outside of electronics, Kelli launched a lovely ceramics design studio (kellicain.com) and we’re considering a label for our apple cider co-op. Ezra and Trent continue to produce shockingly good music and we pressure them regularly to make more.

Someone in comments repeated this idea that you’re uninterested in getting these in the hands of lots of people. But whether it was intentional or not, it seems some of the ideas of the monome project are in the hands of lots of people. We’ve talked about this before, but curious if your take has evolved on that, at all, particularly as grids begin to shift to new instrumental applications.

Brian: Honestly I’m not seeing any shift in grid usage. Pitch maps, clip launching, and drum triggers have been dominant for years now – it is now solidly part of the electronic music vernacular. In this way the grid is not an innovative proposition. But I do hope to see more interest in grid uses outside these fundamental three approaches – there is so much left to be explored!

It’s a completely silly accusation that we wouldn’t want to get these into people’s hands. We understand that the aleph has a pretty small audience, but we’re grateful that the support from this audience allowed us to bring a device like this into the world. I’m not going to bother with the usual list of production expenses or yet another attempt at consumer re-education – rather I’d like to say that we aimed very high when designing the aleph. We’re incredibly excited about the unprecedented capabilities of this little box. We’re terribly interested in getting these into the hands of people who share our enthusiasm.