Wouldn’t it be nice if, instead of manually assigning every knob and parameter, software were smart enough to configure itself? Now, visual software and OSC are making that possible.

Creative tech has been moving forward lately thanks to a new attitude among developers: want something cool? Do it. Open source and/or publish it. Get other people to do it, too. We’ve seen that as Ableton Link transformed sync wirelessly across iOS and desktop. And we saw it again as software and hardware makers embraced more expression data with MIDI Polyphonic Expression. It’s a way around “chicken and egg” worries – make your own chickens.

Open Sound Control (OSC) has for years been a way of getting descriptive, high-resolution data around. It’s mostly been used in visual apps and DIY audiovisual creations, with some exceptions – Native Instruments’ Reaktor has a nice implementation on the audio side. But what it was missing was a way to query those descriptive messages.

What would that mean? Well, basically, the idea would be for you to connect a new visual app or audio tool or hardware instrument and interactively navigate and assign parameters and controls.

That can make tools smarter and auto-configuring. Or to put it another way – no more typing in the names of parameters you want to control. (MIDI is moving in a similar direction, if via a very different structure and implementation, with something called MIDI-CI or “Capability Inquiry.” It doesn’t really work the same way, but the basic goal – and, with some work, the end user experience – is more or less the same.)

OSC Queries are something I’ve heard people talk about for almost a decade. But now we have something real you can use right away. Not only is there a detailed proposal for how to make the idea work, but visual tools VDMX, MadMapper, and Mitti all support it already, and there’s an open source implementation for others to follow.

Vidvox (makers of VDMX) have led the way, as they have with a number of open source ideas lately. (See also: a video codec called Hap, and an interoperable shader standard for hardware-accelerated graphics.)

Their implementation is already in a new build of VDMX, their live visuals / audiovisual media software:

https://docs.vidvox.net/vdmx_b8700.html

You can check out the proposal on their site:

https://github.com/vidvox/oscqueryproposal

Plus there’s a whole dump of open source code. Developers on the Mac get a Cocoa framework that’s ready to use, but you’ll find some code examples that could be very easily ported to a platform / language of your choice:

https://github.com/Vidvox/VVOSCQueryProtocol

There’s even an implementation that provides compatibility in apps that support MIDI but don’t support OSC (which is to say, a whole mess of apps). That could also be a choice for hardware and not just software.

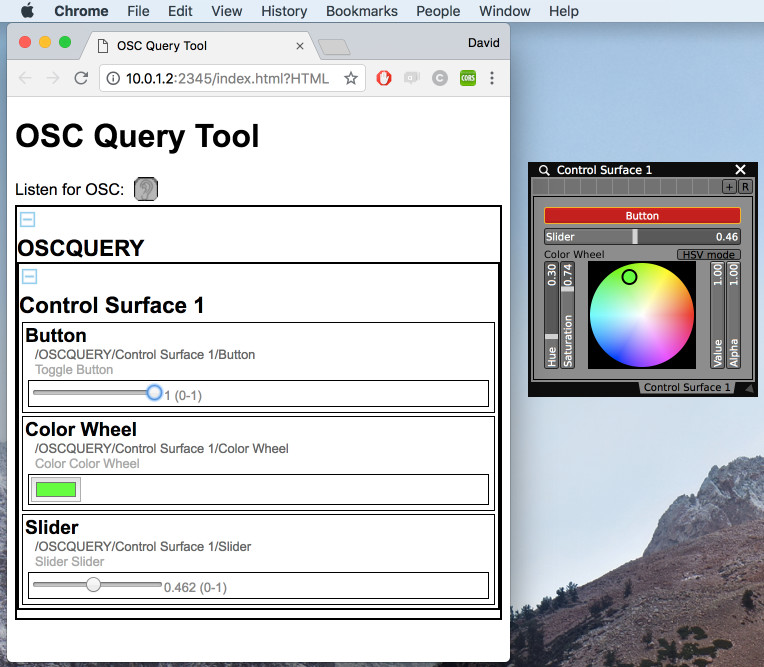

They’ve even built an in-progress implementation that runs in a browser (though they say they will make it prettier).

Here’s how it works in practice:

Let’s say you’ve got one application you want to control (like some software running generative visuals for a live show), and then another tool – or a computer with a browser open – connected on the same network. You want the controller tool to map to the visual tool.

Now, the moment you open the right address and port, all the parameters you want in the visual tool just show up automatically, complete with widgets to control them.

And it’s (optionally) bi-directional. If you change your visual patch, the controls update.
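To make the "parameters just show up" part a little more concrete, here is a rough Python sketch of what a controller might do with the description it gets back. It assumes the visual tool answers a query with a nested JSON tree, and the attribute names used here (`FULL_PATH`, `CONTENTS`, `TYPE`, `VALUE`, `RANGE`) are my reading of the proposal rather than anything confirmed by a specific app. The sample reply and the `/layer1/...` addresses are invented for illustration.

```python
import json

# Hypothetical reply from a query server. The field names are assumptions
# based on the proposal; the addresses and values are made up.
SAMPLE_REPLY = json.loads("""
{
  "FULL_PATH": "/",
  "CONTENTS": {
    "layer1": {
      "FULL_PATH": "/layer1",
      "CONTENTS": {
        "opacity": {"FULL_PATH": "/layer1/opacity", "TYPE": "f",
                    "VALUE": [1.0], "RANGE": [{"MIN": 0.0, "MAX": 1.0}]},
        "play": {"FULL_PATH": "/layer1/play", "TYPE": "T"}
      }
    }
  }
}
""")


def list_parameters(node):
    """Walk the tree depth-first and return (address, type tag) pairs.

    A node counts as a controllable parameter here if it carries a TYPE;
    nodes without one are treated as plain containers.
    """
    found = []
    if "TYPE" in node:
        found.append((node["FULL_PATH"], node["TYPE"]))
    for child in node.get("CONTENTS", {}).values():
        found.extend(list_parameters(child))
    return found


if __name__ == "__main__":
    for address, type_tag in list_parameters(SAMPLE_REPLY):
        print(address, type_tag)
```

A real controller would fetch that JSON from the address and port you opened instead of using a hard-coded sample, then build one widget per entry.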

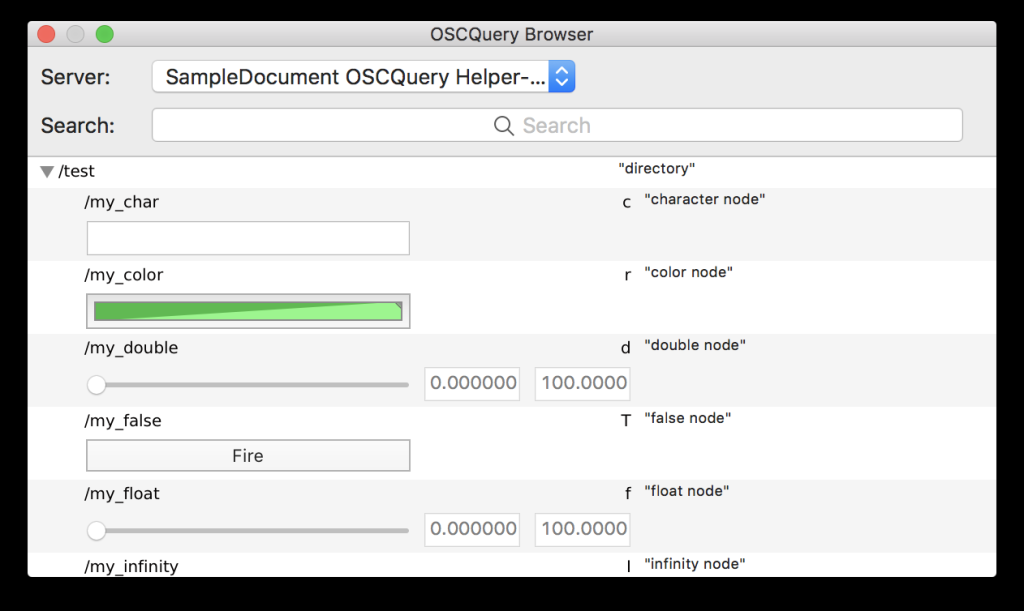

In VDMX, for instance, you can browse parameters you want to control in a tool elsewhere (whether that’s someone else’s VDMX rig or MadMapper or something altogether different):

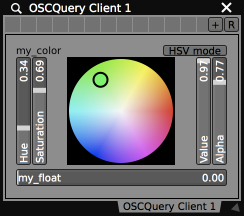

And then you can take the parameters you’ve selected and control them via a client module.

All of this is stored as structured data – JSON files, if you’re curious. But this means you could also save and assign mappings from OSC to MIDI, for instance.

Another example: you could have an Ableton Live file with a bunch of MIDI mappings. Then you could, via experimental code in the archive above, read that ALS file, and have a utility assign all those arbitrary MIDI CC numbers to automatically-queried OSC controls.
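As a loose illustration of that MIDI-to-OSC idea, here is a small Python sketch that turns a MIDI CC value into an OSC float message. It is not the experimental code from the archive: the mapping table, the CC number 21, and the `/layer1/opacity` address and range are all hypothetical stand-ins for what a utility might collect from a Live set and a query. Only the OSC packing (null-padded strings, a `,f` type tag, a big-endian 32-bit float) follows the OSC 1.0 format.

```python
import struct


def osc_pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of four bytes, as OSC strings require."""
    return raw + b"\x00" * (4 - len(raw) % 4)


def osc_float_message(address: str, value: float) -> bytes:
    """Build an OSC message carrying a single float argument."""
    return osc_pad(address.encode("ascii")) + osc_pad(b",f") + struct.pack(">f", value)


def cc_to_range(cc_value: int, low: float, high: float) -> float:
    """Scale a 7-bit MIDI CC value (0-127) into the parameter's range."""
    return low + (cc_value / 127.0) * (high - low)


# Hypothetical mapping: CC number -> (OSC address, min, max).
# In practice the address and range would come from the queried description.
CC_MAP = {21: ("/layer1/opacity", 0.0, 1.0)}


def translate(cc_number: int, cc_value: int) -> bytes:
    """Return the OSC message bytes for an incoming CC, using CC_MAP."""
    address, low, high = CC_MAP[cc_number]
    return osc_float_message(address, cc_to_range(cc_value, low, high))
```

The bytes returned by `translate` could then be sent over UDP to whatever host and port the visual tool listens on.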

Think about that for a second: then your animation software could automatically be assigned to trigger controls in your Live set, or your live music controls could automatically be assigned to generative visuals, or an iPad control surface could automatically map to the music set when you don’t have your hardware controller handy, or… well, a lot of things become possible.

We’ll be watching OSCquery. It may also interest developers enough to start some discussion here on CDM about how to move things forward.

And previously, watching MIDI get smarter (smarter is better, we think):

https://cdm.link/2018/02/midi-evolves-adding-expressiveness-easier-configuration/

https://cdm.link/2018/05/midi-polyphonic-expression-now-thing-heres-whats-supporting/

Plus an example of cool things done with VDMX, by artist Lucy Benson: