Notch is the latest “it” real-time visual effects tool. So as motion maven Defasten produced a dazzling science fiction video for my latest release, I asked him to share how it all works. Enter the nodegraph.

Notch is a powerful addition to motion arsenals – deep and responsive, something you can use as your main visual axe or alongside tools like TouchDesigner and Unreal. And it has a node-based, dataflow workflow – meaning, you can patch stuff together with virtual patch cords for modular inventions that are more than the sum of their parts.

But just as modular sound synthesis involves a high degree of transferability of concepts and language, so, too, live visuals play on a shared fluency. It’s just that you’re playing with math and your GPU and eye candy in place of … math and your CPU and ear candy.

First, let’s watch the video – which began with the more analog material of the Moog Matriarch, with some digital effects here from Ableton Live 11 (and some repurposed string lines from Spitfire Audio). It premiered earlier this month on Berlin-London magazine Inverted Audio:

DEFASTEN (Patrick Defasten) imagined this wonderful, apocalyptic science fiction world. My inbox is somewhat littered with people using the pandemic as inspiration, but Patrick really moved me with this, creating this metaphorical fancy that seemed to capture some deeper emotional reality of this moment and its dissociative endtimes quality. It channels something I felt making the music but hadn’t explicitly said. And relevant to his use of Notch as a tool, to me, he was able to imbue these graphics with a sense of the mood and pacing of the sound. So let’s in fact listen as he explains how he did it.

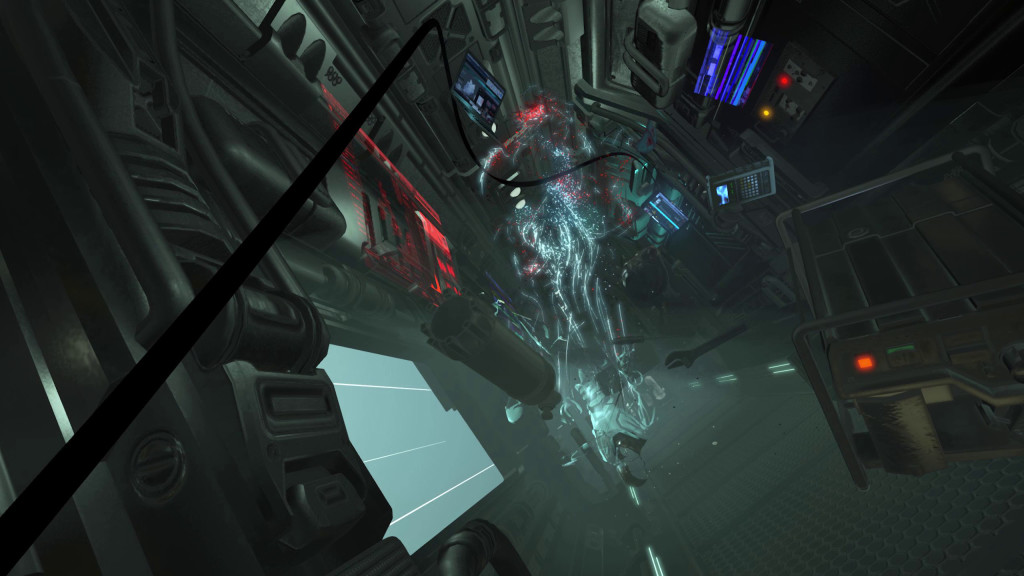

You can navigate that by chapter, too, to help you digest all this information. There’s a combination of 3D objects, lighting, particle systems, and effects like glow – and part of the strength of Notch is letting you work with all of those, in real time without rendering. (Uh, wow.)

Part of the key to managing that is understanding how to organize elements hierarchically, and then applying the cinematic and lighting approaches that add emotional impact.

Takeaways:

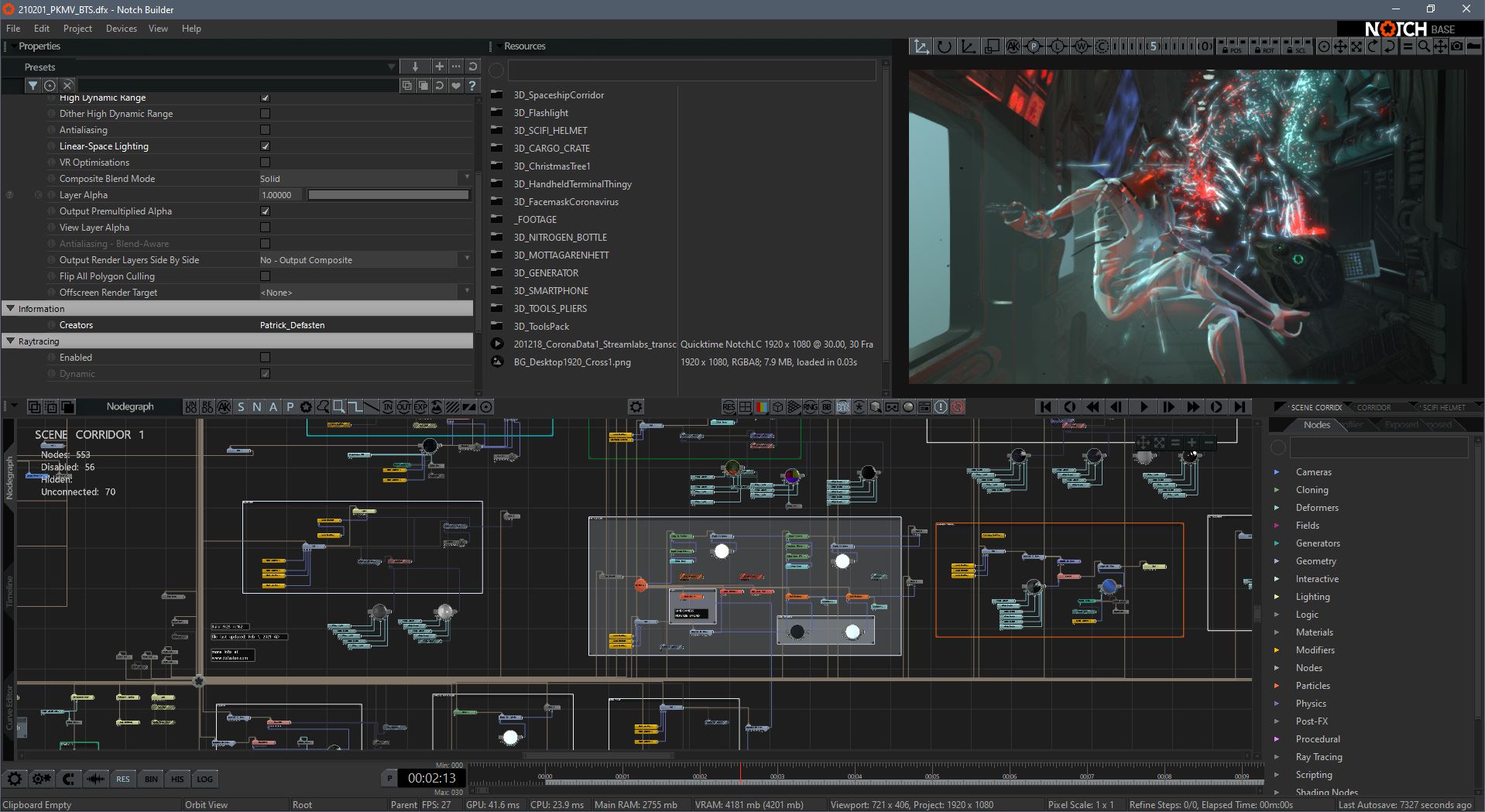

The structure of the scene is organized visually on the Nodegraph, divided into four quadrants – with the root at the center. There’s the corridor, the body, lights,

It’s all dramatic, dynamic lighting – again, in real time. (He talks a bit about optimizing those.)

Sketchfab is a great resource for 3D objects (it’s a 3D and AR asset platform), as is Mixamo (a human figure / animation platform). Basically, think of them as your set, prop, and costume shops – with some free (and affordable) options and optimized assets.

Notch can import PBR materials along with the object and map them as part of Notch’s render pipeline. No, that’s not hipster beer – it’s a way of describing how an object should be consistently rendered, including the texture map but also how light should reflect when the object is rendered. (PBR stands for physically based rendering, not to be confused with professional bull riders. Here’s another good explanation. Or dive in deep with Adobe.)

Models each have their own dedicated layer to make them easier to modify and preview.

Math modifiers in Notch animate the objects – and make them seem like they’re in freefall – sometimes using the null point as a zero point. (Patrick comments that he could have created a more accurate rigid body system, but I think the way he did it looks convincing. Funnily enough, it seems to match both fictional cinematic approaches to freefall and, well, a lot of those Space Shuttle IMAX movies I obsessed over as a kid.)

Anyway, see the explanation of Null nodes in Notch’s geometry system for more on what he means there. Basically, it’s a per-object reference to the transformation – so a way to add the behavior to that datapad or laptop that’s floating in the spaceship.
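If it helps to see the null-plus-math idea outside a nodegraph, here is a minimal Python sketch. To be clear, this is not Notch's API (Notch is patched visually, not scripted this way), and every name in it (`float_offset`, `world_position`, the amplitude and period values) is hypothetical. It only assumes what the text above describes: a null acts as the zero point for an object, and a simple math function nudges the object over time so it appears to drift.

```python
import math

def float_offset(t, amplitude=0.25, period=6.0, phase=0.0):
    """Hypothetical 'math modifier': a slow sine wave in scene units."""
    return amplitude * math.sin(2.0 * math.pi * t / period + phase)

def world_position(null_pos, local_pos, t, phase=0.0):
    """Object position = null (parent) position + local offset + vertical drift."""
    nx, ny, nz = null_pos
    lx, ly, lz = local_pos
    drift = float_offset(t, phase=phase)
    return (nx + lx, ny + ly + drift, nz + lz)

# A datapad parented to a null at the origin, sampled at a few moments in time.
for t in (0.0, 1.5, 3.0):
    print(t, world_position((0.0, 0.0, 0.0), (1.0, 0.5, -2.0), t))
```

Giving each prop its own `phase` keeps the objects from bobbing in lockstep, and moving the null moves the prop along with its drift. That is a cheap stand-in for freefall, not a rigid body simulation.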

Dynamic textures pipe in the screen images and add a glitch effect.

Dynamic volumetric lighting provides all that essential mood. Frame rate takes a big hit, but it’s still fast enough to work with, as you can see in the screencast. Patrick balances the Max Depth Range and Num Depth Slices parameters to get the desired look and performance.
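Why do those two parameters trade off against each other? Here is a rough Python sketch of the general idea. It assumes a generic slice-marching approach, where the renderer samples fog along each view ray at evenly spaced depths. Notch's actual implementation isn't described in the video, so treat the functions below as illustrative only; just the two parameter names come from the tool.

```python
def slice_thickness(max_depth_range, num_depth_slices):
    """Depth covered by each slice: larger range or fewer slices means coarser steps."""
    return max_depth_range / num_depth_slices

def accumulate_scattering(density_at, max_depth_range, num_depth_slices):
    """March along one view ray, summing fog density times slice thickness."""
    step = slice_thickness(max_depth_range, num_depth_slices)
    total = 0.0
    for i in range(num_depth_slices):
        depth = (i + 0.5) * step  # sample the middle of each slice
        total += density_at(depth) * step
    return total

def light_beam(depth):
    """A thin shaft of lit fog between 4.0 and 4.5 units from the camera."""
    return 1.0 if 4.0 <= depth < 4.5 else 0.0

coarse = accumulate_scattering(light_beam, max_depth_range=10.0, num_depth_slices=4)
fine = accumulate_scattering(light_beam, max_depth_range=10.0, num_depth_slices=64)
print(coarse, fine)
```

With only four slices over ten units, every sample misses the beam entirely. With 64 slices it is captured reasonably well, at sixteen times the per-ray work. Shrinking the depth range has a similar sharpening effect for free, as long as the scene fits inside it.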

Particle Trails make beautiful use of the Notch Mesh Attractor and the Time Stretch node.

Wide-angle camera angles add more cinematic possibilities. And setting Near Clip to as low a value as possible helps preserve that cinematic quality by avoiding clipping objects near the camera (a dead giveaway that this is a computer-generated scene).
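Both camera ideas reduce to a little arithmetic, sketched here in Python. The function names and the numbers are hypothetical rather than Notch settings. The sketch assumes a standard perspective camera, where anything closer than the near clip distance gets cut away.

```python
import math

def visible_width(distance, fov_degrees):
    """Width of the scene the camera sees at a given distance from the lens."""
    return 2.0 * distance * math.tan(math.radians(fov_degrees) / 2.0)

def is_clipped(depth, near_clip, far_clip=1000.0):
    """True if a point at this depth falls outside the near/far clip range."""
    return depth < near_clip or depth > far_clip

# A wider field of view takes in more of the corridor at the same distance.
print(visible_width(2.0, 50.0), visible_width(2.0, 90.0))

# A floating prop drifting very close to the lens (0.08 units away).
prop_depth = 0.08
print(is_clipped(prop_depth, near_clip=0.1))   # True: the prop gets sliced open
print(is_clipped(prop_depth, near_clip=0.01))  # False: the prop stays intact
```

One general caveat about perspective cameras, not something raised in the video: pushing the near plane extremely close to zero can reduce depth precision for distant objects, so "as low as possible" usually means as low as the scene tolerates.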

Best of all, Defasten has been kind enough to share his original Notch project file with all of us, and links to assets.

Check the video link (and subscribe to his channel).

And more on Defasten’s amazing work:

https://www.instagram.com/defasten/

We’ve previously covered his work here on CDM:

Plus here’s a great intro by Ted Pallas from last year that gets you up to speed on what Notch is – and why it’s useful:

Plus an essential update:

Oh yeah, and if you’re wondering how I did the music, well, let me tell you, I sure… uh… played the parts with my hands and used the Moog’s arpeggiator. Or if we spoke about music like we did visuals, I assigned null points to my fingers on the musical keyboard manual and then applied triadic harmonic transformations.

The music release:

https://peterkirn.bandcamp.com/album/chromatic-adaptation

Don’t miss in particular this remix by Nick de Friez, just added:

And thanks so much, Patrick.

Notch is so deep, I definitely hope we spend more time with it.