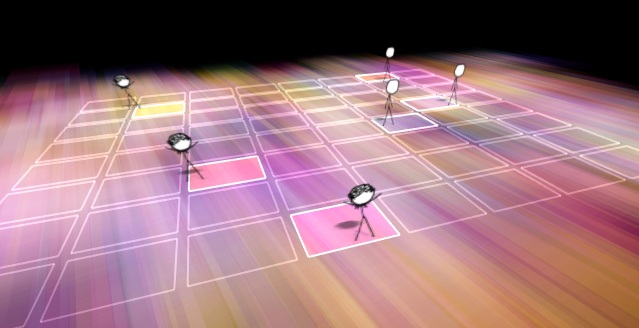

Digital musician and artist Josh Silverman began the Synplode process with something familiar: a checkerboard. Play a game of checkers on its computer vision-equipped playing field, and beats and loops triggered in Ableton Live generate a responsive soundtrack for the game. But as it’s evolved, Synplode has become a general-purpose musical grid. Whether with little robotic insects (the Hexbugs here) or full-sized human persons, the grid can turn any space into a dynamic, interactive dance floor. (I think I may actually prefer those cute little bugs to the people and dancers and whatnot. Robot rave, anyone?)

I prodded Josh to write up a fuller description of what’s going on, so he’s created lots of documentation on the project website.

The basic interaction:

At the start of the Synplode demo video, it is easy to see that a wave passes over the basic projected grid, flashing one column at a time, each containing 8 trigger regions. When a participant (or microbot) is present on a region, it is activated. When the wave intersects with an activated region, it causes a Synplosion, expressed through a splash of color and a distinctive sound. In the grid, each row represents a distinctive color and pitch or audio sample.
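To make that sweep-and-trigger logic concrete, here is a minimal sketch in Python. It is not Josh’s code (the real system does its vision work in openFrameworks and its sound in Ableton Live); the 8-column width, the `ROW_NOTES` pitch mapping, and the function names are all assumptions made up for illustration. Only the 8 regions per column and the "wave meets occupied region" rule come from the description above.

```python
ROWS = 8  # from the description: each column contains 8 trigger regions
COLS = 8  # assumption: a checkerboard-like 8x8 grid; the real width may differ

# Hypothetical row-to-pitch mapping (MIDI note numbers, C major from middle C).
# The actual project maps each row to a color and a pitch or audio sample.
ROW_NOTES = [60, 62, 64, 65, 67, 69, 71, 72]


def synplosions(occupied, wave_col):
    """Return (row, note) for every occupied region in the column the wave is on."""
    return [
        (row, ROW_NOTES[row])
        for row in range(ROWS)
        if (row, wave_col) in occupied
    ]


def sweep(occupied, cols=COLS):
    """Yield (column, hits) for one full pass of the wave across the grid."""
    for col in range(cols):
        yield col, synplosions(occupied, col)


if __name__ == "__main__":
    # Pretend the camera saw bugs (or dancers) at these (row, column) regions.
    occupied = {(0, 2), (5, 2), (3, 6)}
    for col, hits in sweep(occupied):
        print(col, hits)
```

In this sketch the grid behaves like a step sequencer whose steps are switched on by whoever (or whatever) is standing on them; in a real setup each hit would be sent on to the sound engine rather than printed.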

The basic ingredients:

1. Computer vision in openFrameworks, the fully open-source, artist-friendly C++ toolkit inspired by Processing.

2. Ableton Live, triggering clips in Session View and modulating them with MIDI effects and racks.

For more detail:

How it Works (details, in particular, of what’s happening in Ableton)

Why it Works (some of the thinking behind the interaction)

Synplode Project Page

Josh first demonstrated this system publicly at our Handmade Music series here in New York, and this is just the kind of experimentation and iteration I like to see. Here’s the original, checkerboard version: