Imagine playing a duet with a robot: that’s the idea behind Haile, a robotic percussionist that can “improvise” based on analysis of live playing.

Project Page with Video Clips [Gatech.edu]

Gil Weinberg, Assistant Professor and Director of Music Technology at the Georgia Institute of Technology, who created the project with the help of grad student Scott Driscoll, writes CDM with details:

Haile is a robotic percussionist that can listen to live players, analyze their music in real time, and use the product of this analysis to play back in an improvisational manner. It is designed to combine the benefits of computational power and algorithmic music with the richness, visual interactivity, and expression of acoustic playing. We believe that when collaborating with live players, Haile can facilitate a musical experience that is not possible by any other means, inspiring players to interact with it in novel, expressive ways that lead to novel musical outcomes.

We use Max/MSP on an [Apple] iBook to control all the interaction, which allows musicians and novices to program the robot and change its behavior to their liking.

Haile is a work in progress; currently only one arm is operational, but eventually both arms will move both horizontally and vertically. It can already strike a Native American pow-wow drum, an instrument designed for collaborative playing. Here’s how it works:

Robotic body: The wooden body has metal joints for arm movement and easy assembly and disassembly.

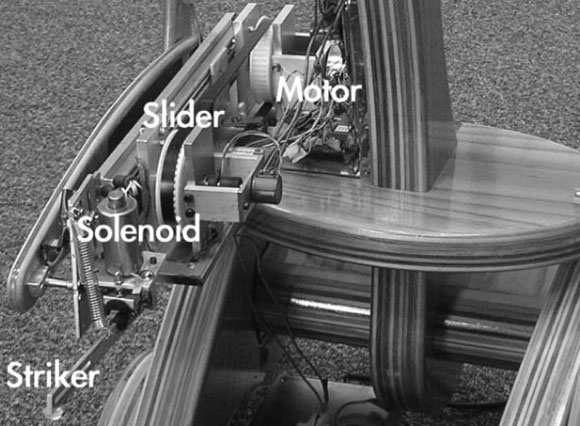

Playing mechanism: A slider/solenoid/striker mechanism is attached to a motor for the actual playing. Not only does the robot have accurate rhythm with its strikes, but it can modulate how hard it’s hitting, too.

What it hears: Using a mic on the drum, the robot can “listen” to your playing, analyzing when notes occur along with their pitch and timbre. (Max lovers, check out the bonk~ and pitch~ objects.) This allows it to accurately detect drum hits and the quality of each hit.
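For readers who don't live in Max, here is a rough idea of what hit detection involves. This is a minimal sketch in Python, not the analysis that bonk~ performs and not Haile's actual patch: it simply flags a frame of audio as a hit when its energy is above a floor and jumps sharply relative to the previous frame. The function name, the frame size, and both thresholds are assumptions chosen for illustration.

```python
def detect_onsets(samples, frame_size=64, threshold=0.01, rise=2.0):
    """Return (sample_index, peak_amplitude) for each detected drum hit.

    samples: audio as a list of floats in the range -1.0..1.0.
    threshold and rise are illustrative values, not tuned for a real drum.
    """
    onsets = []
    prev_energy = 0.0
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        # Mean squared amplitude of this frame.
        energy = sum(s * s for s in frame) / frame_size
        # A hit is a frame that is loud enough and much louder than the last one.
        if energy > threshold and energy > rise * prev_energy:
            peak = max(abs(s) for s in frame)
            onsets.append((start, peak))
        prev_energy = energy
    return onsets
```

The peak amplitude returned with each onset is a crude stand-in for "the quality of each hit"; a real system would also look at the spectrum to tell, say, a rim hit from a center hit.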

Hardware interface: For connecting the Max/MSP software setup to the robot, Weinberg and Driscoll used a commercially available Teleo USB control board.

The real magic happens in the improvisatory algorithm through which the robot responds to your playing. The robot can simply imitate what you’re playing, “Simon” style, but it can also transform its response (in call and response or variation forms), or accompany you. As with some other musical robots, such as the GuitarBot, you can also compose music for it by feeding it a standard MIDI file.
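To make the difference between imitation and variation concrete, here is a small Python sketch. It is only an illustration under stated assumptions: the article does not describe which transformations Haile applies, so the time and velocity scaling below is a hypothetical example of a "variation", and the phrase format (a list of onset times in seconds paired with velocities from 0.0 to 1.0) is invented for this sketch.

```python
def imitate(phrase):
    """'Simon' style: play back exactly what was heard."""
    return list(phrase)


def vary(phrase, time_scale=0.5, velocity_scale=1.0):
    """Return a variation of the phrase.

    Onset times are stretched or compressed around the first hit, and
    velocities are scaled and clamped to the range 0.0..1.0.
    """
    if not phrase:
        return []
    start = phrase[0][0]
    varied = []
    for time, velocity in phrase:
        new_time = start + (time - start) * time_scale
        new_velocity = min(1.0, max(0.0, velocity * velocity_scale))
        varied.append((new_time, new_velocity))
    return varied
```

With the defaults, `vary` answers a phrase at double speed; passing a `velocity_scale` below 1.0 makes the answer softer as well.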

What’s next for the project: new compositions commissioned for the robot. “We are working on a drum circle composition for Haile, who will use beat detection algorithms to play with four darbukah and djembe players,” says Weinberg. “It will analyze aspects such as rhythmic density, rubato, and accuracy, and will use [that data] to calculate its responses. The piece was commissioned by an art organization in Israel and is scheduled to be performed in Jerusalem in March, featuring a collaboration between Jewish and Arab percussionists.”
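Weinberg doesn't say how those measurements will be computed, but two of them are easy to picture. The Python sketch below is a guess at simple definitions, not the project's method: rhythmic density as hits per second over a listening window, and accuracy as the average distance of each hit from the nearest beat of a steady grid. The function names and the assumption of a known, fixed beat period are mine.

```python
def rhythmic_density(onset_times, window_seconds):
    """Hits per second over a listening window of the given length."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return len(onset_times) / window_seconds


def mean_timing_error(onset_times, beat_period):
    """Average distance in seconds from each hit to the nearest grid beat.

    Assumes a steady grid starting at time 0.0; 0.0 means perfectly on the beat.
    """
    if not onset_times:
        return 0.0
    errors = []
    for t in onset_times:
        nearest_beat = round(t / beat_period) * beat_period
        errors.append(abs(t - nearest_beat))
    return sum(errors) / len(errors)
```

Rubato is harder to capture this way, since it means the grid itself is stretching; tracking it would require a beat detector that follows tempo changes rather than a fixed period.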

Gil promises to keep us posted on the project as it evolves, so stay tuned: an extended research paper, an updated robot, and more musical performances are in the works. And by the way, if you feel like you were just reading about the Georgia Institute of Technology, you were: Gil’s music department colleague Jason Freeman just gave us the iTunes Signature Maker that Jordan Kolasinski covered for CDM last week. I’ll get back to you if I find out what they’re having for breakfast over there.

Related CDM stories:

USB-to-Real World Sensor Interface, Muio (a competing alternative to the Teleo)

GuitarBot (another classic robotic instrumentalist)

Related Projects:

League of Electronic Musical Urban Robots (LEMUR)

P.E.A.R.T. Robotic Drum Machine