Good news for artists from NVIDIA this week – think more efficient photorealistic renders in real time, and more Omniverse support, including for the free and open-source Blender.

Yes, it’s SIGGRAPH, aka Graphics Nerd Christmas.

NVIDIA definitely won’t be left out of that one, so here’s what’s in store. I’ll skip over the GPU hardware news – basically, you get another RTX option (the low-profile RTX A2000), and, generally speaking, NVIDIA is catching up after the production setbacks that hit the whole industry. So you should be able to find NVIDIA GPUs in the wild – and those of us in the northern hemisphere can avoid virus variants and work on new visual expression.

The rest is good news, too.

Omniverse: open to all, free learning for everyone

Omniverse is now open to all developers, and there are a bunch of chances for artists and devs to learn about it this week online for free, including in a virtual User Group.

All that news is here:

NVIDIA Opens Omniverse Platform to Developers Worldwide

But let’s skip to the lede – Blender 3.0 will have native support for Omniverse and USD. No, that does not mean you’ll be able to throw dollar bills at Blender while it renders. It’s Universal Scene Description, an open-source, extensible file format and means of … describing scenes. (Sorry, I’m sure there is some way to say that without repeating the words ‘scene’ and ‘describe’, but that is what it does – it’s all right there in the name.)
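To make “describing scenes” a bit more concrete, here is a plain-Python sketch that writes a tiny scene as .usda, USD’s human-readable text encoding. It hand-rolls the text only to show the shape of the format: a header, then nested `def` blocks for each “prim” with typed attributes. Real tools go through Pixar’s USD libraries (the `pxr` Python bindings) rather than string templates, and the `Prim` class, the helper functions, and the World/Ball scene are all made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Prim:
    """One node ("prim") in the scene hierarchy, e.g. a transform group or a sphere."""
    type_name: str  # USD schema type, such as "Xform" or "Sphere"
    name: str       # prim name; nesting gives paths like /World/Ball
    attrs: dict[str, str] = field(default_factory=dict)  # "type name" -> value literal
    children: list[Prim] = field(default_factory=list)


def prim_to_usda(prim: Prim, depth: int = 0) -> list[str]:
    """Render one prim and, recursively, its children as indented .usda lines."""
    pad = "    " * depth
    lines = [f'{pad}def {prim.type_name} "{prim.name}"', f"{pad}{{"]
    for decl, value in prim.attrs.items():
        lines.append(f"{pad}    {decl} = {value}")
    for child in prim.children:
        lines.extend(prim_to_usda(child, depth + 1))
    lines.append(f"{pad}}}")
    return lines


def stage_to_usda(root: Prim, up_axis: str = "Z") -> str:
    """Wrap the hierarchy in a .usda header naming the default prim and up axis."""
    header = [
        "#usda 1.0",
        "(",
        f'    defaultPrim = "{root.name}"',
        f'    upAxis = "{up_axis}"',
        ")",
        "",
    ]
    return "\n".join(header + prim_to_usda(root)) + "\n"


# Hypothetical example scene: a transform group holding one orange sphere
scene = Prim("Xform", "World", children=[
    Prim("Sphere", "Ball", attrs={
        "double radius": "2",
        "color3f[] primvars:displayColor": "[(1, 0.5, 0)]",
    }),
])
print(stage_to_usda(scene))
```

The printed file nests a `def Sphere "Ball"` block inside a `def Xform "World"` block, which is the hierarchy-of-prims idea that lets different apps read and add to the same scene.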

This is not about NVIDIA lock-in or something like that – it is truly open, and it started at Pixar.

In fact, it takes Blender – already free and open-source, and downloaded millions of times each year – and opens it up to even more collaboration, plus import and export with other tools. It’s a fantastic way of working together with others, whether on big industry projects or as individual artists.

This is still bleeding-edge stuff – it’s the alpha branch of Blender and the open beta of Omniverse – but it’s progressing fast, and it’s great to see this stuff arriving. By alpha I do mean alpha: you should definitely hold off on using this in production, and to really get a feel for how USD integration works, you’re probably better off tooling around in Houdini or Maya. (CDM contributor Ted Pallas notes that Houdini, by contrast, will give you a full USD Stage Creator.) But at the same time, I think having the direct involvement of the Blender Foundation and community could be worth the wait, and rest assured we’ll keep tabs on this as it develops.

The other cool stuff is, there’s a direct link between research work NVIDIA is doing and toys you’ll be able to use right away in Omniverse. So neural radiance caching, guided light paths, optimal volumetric visibility, RTX direct illumination – uh, in other words, “pretty light render fast” – and lots of other texture and material and render goodies aren’t just staying as interesting papers and YouTube demos. They’re shipping to you, often without you having to buy anything (provided you can get an RTX GPU).

So it’s SIGGRAPH. Let’s open those research presents under the tree.

Realistic light, lighter on the GPU

Not to shill for NVIDIA Research here, but when it comes to “make it look like a tiger is really wandering through the jungle,” yeah, their research boffins are on point.

So they’re showing off a lot today at SIGGRAPH, which I can sum up while you play VR Tiger King. (Oh… that could actually be a thing, couldn’t it?)

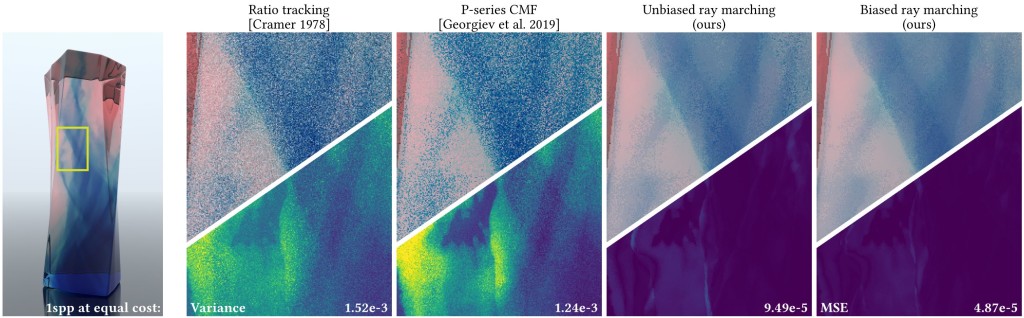

It’s time for unbiased ray-marching transmittance estimators!

NVIDIA Research: An Unbiased Ray-Marching Transmittance Estimator

https://research.nvidia.com/publication/2021-06_An-Unbiased-Ray-Marching

Okay, so the breakthrough here is not really about the “sun-dappled light” bit in the PR materials, but about how you reduce noise in scenes while calculating ray tracing, especially across volumetric stuff – think clouds, smoke, and explosions. Anyone who has tried ray tracing for the first time knows the experience of looking at a Georges Seurat painting too closely. (If you went to high school in the Chicagoland area, I do totally hope you faked being sick and went and checked it out.)

The magic here is about estimating transmittance (how much light makes it through a volume) more cleverly: focusing on what has the biggest impact on how the scene looks, and reducing the number of errors and extra calculations. (I’m simplifying, obviously, but that’s the basic gist.) The upshot is incredible, though – efficiency gains ranging from just under ten times to a whopping 166x. (One-six-six. Yeah.)
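For the curious, here is what “transmittance” and “unbiased” mean in practice, as a small Python sketch. Transmittance is the fraction of light that survives a trip through a volume, T = exp(−∫σ dt), where σ is how dense the medium is along the ray. Plain ray marching adds up σ at regular steps; with a random offset the summed density is right on average, but exponentiating it skews the average transmittance upward. Ratio tracking, an older unbiased technique, avoids that skew. This is not the NVIDIA paper’s estimator (which, per its abstract, mostly ray-marches and uses rarely sampled extra terms to cancel the bias), and the density function, its upper bound, and the ray length are made-up values.

```python
import math
import random

# Hypothetical 1D "cloud": density (extinction) sigma(t) along a ray of length D
D = 2.0
SIGMA_MAX = 0.9  # majorant: an upper bound on sigma(t) everywhere on the ray


def sigma(t: float) -> float:
    return 0.5 + 0.4 * math.sin(3.0 * t)


def exact_transmittance(d: float = D) -> float:
    # T = exp(-optical depth), with the optical depth integrated in closed form
    tau = 0.5 * d + (0.4 / 3.0) * (1.0 - math.cos(3.0 * d))
    return math.exp(-tau)


def ray_march(rng: random.Random, steps: int, d: float = D) -> float:
    # Jittered ray marching: the summed optical depth is unbiased, but exp() of it
    # is not (Jensen's inequality), so the averaged transmittance comes out too high
    h = d / steps
    offset = rng.random()
    tau = h * sum(sigma((i + offset) * h) for i in range(steps))
    return math.exp(-tau)


def ratio_tracking(rng: random.Random, d: float = D) -> float:
    # Unbiased: jump between tentative collisions drawn against the majorant and
    # multiply in the probability that each one was a "null" (fake) collision
    t, weight = 0.0, 1.0
    while True:
        t -= math.log(1.0 - rng.random()) / SIGMA_MAX
        if t >= d:
            return weight
        weight *= 1.0 - sigma(t) / SIGMA_MAX


def average(estimator, n: int, seed: int = 1) -> float:
    rng = random.Random(seed)
    return sum(estimator(rng) for _ in range(n)) / n


if __name__ == "__main__":
    print("exact          ", exact_transmittance())
    print("march, 1 step  ", average(lambda r: ray_march(r, 1), 20000))
    print("ratio tracking ", average(ratio_tracking, 20000))
```

With a single coarse step, the ray-marched average lands noticeably above the exact value no matter how many samples you throw at it, while ratio tracking converges to the right answer. Each individual ratio-tracking sample is noisy, though, and cutting that noise without reintroducing bias is the kind of trade-off this research is about.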

AI-powered light

Also impressive, and related – the next idea is to use Tensor Cores (the parts of NVIDIA’s GPUs that accelerate machine learning) to train a little neural network that learns how light is distributed in the scene. The same rough idea is common to both: predict how light is distributed so you can reduce calculations and errors.
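That shared idea, learning where the light is and spending your samples there, is the core of classic importance sampling, which path guiding (“guided light paths”) also builds on. The Python sketch below is a deliberately simple stand-in, not a neural network or NVIDIA’s radiance cache: a coarse piecewise-constant map of a made-up 1D “scene” is learned from a few pilot samples, then used to decide where to sample. Dividing by the sampling density keeps the estimate unbiased. The `light` function, bin count, and sample counts are all assumptions for illustration.

```python
import math
import random


def light(x: float) -> float:
    # Hypothetical 1D "scene": dim ambient light plus a bright hotspot near x = 0.7
    return 0.1 + 5.0 * math.exp(-((x - 0.7) / 0.05) ** 2)


def exact_integral() -> float:
    # Closed-form integral of light(x) over [0, 1], used only to check the estimates
    s = 0.05
    return 0.1 + 5.0 * s * math.sqrt(math.pi) / 2 * (math.erf(0.3 / s) + math.erf(0.7 / s))


def uniform_estimate(rng: random.Random, n: int) -> float:
    # Blind sampling: every point on [0, 1] is equally likely to be picked
    return sum(light(rng.random()) for _ in range(n)) / n


def learn_guide(rng: random.Random, bins: int = 32, pilot: int = 8) -> list[float]:
    # "Learn" a coarse, piecewise-constant map of where the light is
    # from a few cheap pilot samples per bin
    means = [sum(light((b + rng.random()) / bins) for _ in range(pilot)) / pilot
             for b in range(bins)]
    total = sum(means)
    return [m / total for m in means]  # probability of choosing each bin


def guided_estimate(rng: random.Random, probs: list[float], n: int) -> float:
    # Importance sampling: choose bins in proportion to the learned map, then
    # divide by the sampling density so the estimate stays unbiased
    bins = len(probs)
    total = 0.0
    for b in rng.choices(range(bins), weights=probs, k=n):
        x = (b + rng.random()) / bins
        density = probs[b] * bins  # pdf of x over [0, 1]
        total += light(x) / density
    return total / n


def variance(values: list[float]) -> float:
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / (len(values) - 1)


rng = random.Random(7)
probs = learn_guide(rng)
uniform = [uniform_estimate(rng, 16) for _ in range(300)]  # 300 "pixels", 16 samples each
guided = [guided_estimate(rng, probs, 16) for _ in range(300)]
print("exact  ", exact_integral())
print("uniform", sum(uniform) / len(uniform), "variance", variance(uniform))
print("guided ", sum(guided) / len(guided), "variance", variance(guided))
```

Both approaches average out to the same answer, but with the same 16 samples per “pixel” the guided estimates scatter far less around it. That scatter is exactly the speckle you see when standing too close to the Seurat.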

In lay terms – fewer of those silly dots. More of the “Seurat from a distance” and less “Seurat standing right next to the canvas.”

Speaking of lay terms, they’ve done a great job explaining that to folks who aren’t necessarily programmers, 3D artists, or mathematicians, let alone all three:

And more

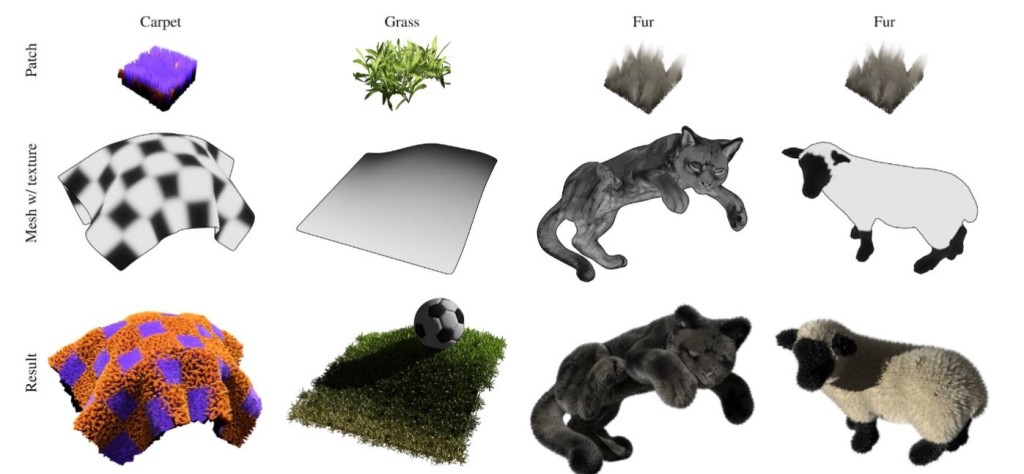

Other NVIDIA researchers are also working (together with ETH Zurich and Disney Research|Studios) on using neural networks to help fill in the gaps in textures and to model complex materials like grass and fur. Fake shag carpeting now gets the power of AI.

Neural fur. (Say that five times fast.)

And holy crap, this is an amazing way to handle complex models: basically, it uses a reference scene to produce optimized meshes and shading.
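The “match a reference, then optimize” idea can be illustrated with a toy 1D analogue in Python. Here the detailed reference “render” is a dense signal, the simplified “mesh” is a handful of vertex heights joined by straight lines, and plain gradient descent nudges those heights until the cheap version matches the reference as closely as it can. Everything here (the reference function, vertex count, sample count, learning rate) is a made-up assumption, and hand-derived least-squares gradients stand in for the far more involved differentiable rendering a real system would presumably use.

```python
import math


def reference(x: float) -> float:
    # Hypothetical high-detail "render": a broad shape plus fine ripples
    return math.sin(2 * math.pi * x) + 0.3 * math.sin(12 * math.pi * x)


def fit_simplified(num_vertices: int = 9, num_samples: int = 200,
                   lr: float = 2.0, iters: int = 500):
    """Fit a cheap piecewise-linear 'mesh' to the reference by gradient descent."""
    segments = num_vertices - 1
    xs = [(i + 0.5) / num_samples for i in range(num_samples)]
    targets = [reference(x) for x in xs]
    # For each sample: which segment it lands in and how far along it (0..1)
    where = []
    for x in xs:
        seg = min(int(x * segments), segments - 1)
        where.append((seg, x * segments - seg))
    heights = [0.0] * num_vertices  # the simplified model starts out flat
    losses = []
    for _ in range(iters):
        grad = [0.0] * num_vertices
        loss = 0.0
        for (seg, t), target in zip(where, targets):
            # "Render" the simplified model by linear interpolation, compare to reference
            residual = (1 - t) * heights[seg] + t * heights[seg + 1] - target
            loss += residual * residual
            grad[seg] += 2 * residual * (1 - t)
            grad[seg + 1] += 2 * residual * t
        losses.append(loss / num_samples)  # mean squared error before this step
        heights = [h - lr * g / num_samples for h, g in zip(heights, grad)]
    return heights, losses


heights, losses = fit_simplified()
print("error before:", losses[0], "after:", losses[-1])
print("vertex heights:", [round(h, 3) for h in heights])
```

The error drops steadily and then levels off: nine vertices can capture the broad shape but not the fine ripples, so what remains is the detail the simplified model trades away.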

I think it all nicely represents the big trend of 2021: not just throwing tons of GPU horsepower at problems, but finding ways to render large and complex stuff more efficiently. See also the sudden order-of-magnitude jump in performance in Microsoft Flight Simulator. We’ve already seen demos that benefit from swapping out a GPU for a much beefier one. This is the inverse of that – your existing hardware suddenly works like it’s a whole lot more powerful. That’s especially vital as 3D artists tackle more complex worlds.

More research:

Leading Lights: NVIDIA Researchers Showcase Groundbreaking Advancements for Real-Time Graphics

Tigers!

You’re a hero. You scrolled through my rambling article on NVIDIA stuff. You’ve earned this.

Anyway, it’s been stuck in my head since somewhere about 150 words in.

Google Analytics can’t measure that. It can’t hear you blasting Survivor while you make a kickass experimental music video in Blender on the RTX you saved up to buy. But I can hear you. Keep that will to survive.