The “hot s***”, open, purportedly ethical text-to-image AI generator has now moved from invite-only contexts and Discord to full public availability.

It’s on the Web. It’s open source so you can grab it – weights and all. You can run it locally (if you have a powerful GPU). And this means, love it or loathe it, now at least the latest trends in generative artwork are both more available and more transparent.

That’s important, because these research developments opened up new ways of mining data to generate images, tools that will be employed by big tech regardless. So even though you may not have any personal interest in using these in your own practice – a very, very valid choice – the availability of tools like Stable Diffusion sheds some light on these techniques and opens them to more informed debate.

Yesterday’s announcement already transforms one of the more popular tools to emerge this summer. Access had been free on Discord and now requires either a powerful GPU, an investment in your own GPU-powered servers, or paying their (reasonably nominal) fees for their servers. But the big shift here is that anyone, not just a select few, can poke around what they’ve done to understand it technically and make use of it. That’s important, too, because a lot of the vital research and criticism comes from people without institutional affiliation.

Or to put it another way – now the punks can have it, analyze it, use it (if they choose), or trash it. Hopefully all of those.

Here’s a breakdown of all that just happened on Stable Diffusion.

The Discord bot is dead. But –

Check the public demo: https://huggingface.co/spaces/stabilityai/stable-diffusion

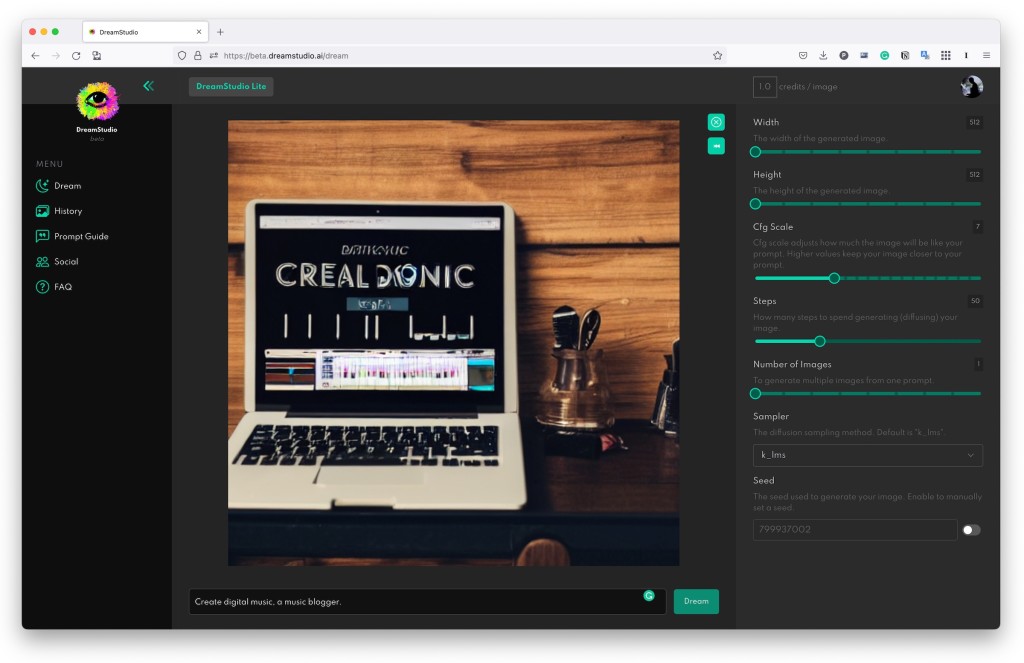

Try the (paid) beta of a Web interface on DreamStudio: https://beta.dreamstudio.ai/

That has a good “101” explanation of what the heck this thing even is:

> DreamStudio is a front end and API to use the recently released stable diffusion image generation model.
>
> It understands the relationships between words to create high quality images in seconds of anything you can imagine. Just type in a word prompt and hit “Dream”.
>
> The model is best at one or two concepts as the first of its kind, particularly artistic or product photos, but feel free to experiment with your complementary credits. These correlate to compute directly and more may be purchased. You will be able to use the API features to drive your own applications in due course.
>
> Adjusting the number of steps or resolution will use significantly more compute, so we recommend this only for experts.
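
To get a feel for why steps and resolution eat credits, here is a back-of-the-envelope sketch. It is not DreamStudio's actual pricing formula, which isn't published in this post. It simply assumes compute grows in proportion to the number of steps times the number of pixels, measured against a hypothetical baseline of 50 steps at 512×512. The function name and the baseline values are my own assumptions.

```python
# Rough relative-compute estimate for a text-to-image request.
# ASSUMPTION: cost scales linearly with steps and with pixel count.
# ASSUMPTION: the baseline (50 steps, 512x512) is illustrative only,
# not a figure taken from DreamStudio.

BASE_STEPS = 50
BASE_WIDTH = 512
BASE_HEIGHT = 512


def relative_compute(steps: int, width: int, height: int) -> float:
    """Return compute relative to the assumed baseline (1.0 = baseline)."""
    if steps <= 0 or width <= 0 or height <= 0:
        raise ValueError("steps, width and height must be positive")
    step_factor = steps / BASE_STEPS
    pixel_factor = (width * height) / (BASE_WIDTH * BASE_HEIGHT)
    return step_factor * pixel_factor
```

Under those assumptions, doubling the steps doubles the cost, going from 512×512 to 1024×1024 quadruples it, and doing both multiplies it by eight. Real costs at higher resolutions may well climb faster than this linear guess.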

You can run this locally – even downloading weights. (You need a GPU with 6.9GB or more of VRAM.) Our friend Lewis Hackett (see link at bottom) says this is fast on a 3090 Ti from NVIDIA – “on par if not faster than the Discord bot was!!!”

More key points:

Hugging Face was a collaborator, and is well worth checking out for its work on reference open source machine learning models – not just open in name, but genuinely open and community-driven. Its tools are used by the likes of Google, Amazon, and Facebook AI, but unlike the “open” initiatives often backed by big tech, you don’t constantly hit “black box” implementations.

Infrastructure collaboration with GPU cloud provider CoreWeave.

Open licensing: Creative ML OpenRAIL-M license [https://huggingface.co/spaces/CompVis/stable-diffusion-license].

There is some “censorship”/control. There’s an AI-based “Safety Classifier” – probably essential to avoid really destructive imagery being produced, but also something I imagine artists may wind up bypassing in individual work. Since it is algorithmic and can ultimately censor some important expression, this will remain a discussion point. But yeah, you need to avoid any shared resource being used to create penises, Nazis, or Nazi penises. (One of the reasons I love the show Mythic Quest is how it nailed this very issue!)

The source is still the Internet. So before you imagine the AI is making up images, it’s not – this is not remotely related to artificial general intelligence or creativity. If the images are pleasing to “the Internet,” well, that’s who really made them. Stable Diffusion is “trained on image-text pairs from a broad internet scrape” – which in turn raises authorship questions of its own.

Yes, there’s a Colab! AI aficionados will want this Web-friendly hackable tool: “an optimized development notebook using the HuggingFace diffusers library:” [https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/stable_diffusion.ipynb]

How the model works is described in the model card.

The community is still on Discord: https://discord.gg/stablediffusion

Coming soon: here’s where things get most interesting – more GPU support, including Apple M1 and M2 (so yeah, you’ll be able to run this on a MacBook Pro), API access, animation (oh!), “multi-stage workflows” (right now it’s pretty flat), “and many more.”

But yeah, you can already use the Web tool, poke around the Colab, and, if you have an NVIDIA GPU, run it locally.
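
For the local route, here is a minimal sketch using the Hugging Face diffusers library that the Colab notebook is built on. Treat it as a starting point under stated assumptions, not the official recipe. The model ID `CompVis/stable-diffusion-v1-4` and the half-precision setting are my assumptions rather than details from the announcement, `has_enough_vram` and `generate` are hypothetical helper names, and the 6.9 figure is read here as decimal gigabytes. You will need `torch` and `diffusers` installed, and you may have to accept the license on the model page and log in to Hugging Face before the weights will download.

```python
MIN_VRAM_GB = 6.9  # requirement quoted in the article; read as decimal GB (assumption)


def has_enough_vram(total_bytes: int, required_gb: float = MIN_VRAM_GB) -> bool:
    """Check a GPU's total memory (in bytes) against the quoted requirement."""
    return total_bytes / 1e9 >= required_gb


def generate(prompt: str,
             model_id: str = "CompVis/stable-diffusion-v1-4",  # assumed model ID
             out_path: str = "out.png") -> str:
    """Generate one image from a text prompt and save it as a PNG."""
    # Imported lazily so the helper above works without the heavy dependencies.
    import torch
    from diffusers import StableDiffusionPipeline

    if not torch.cuda.is_available():
        raise RuntimeError("No CUDA-capable NVIDIA GPU found")

    total = torch.cuda.get_device_properties(0).total_memory
    if not has_enough_vram(total):
        raise RuntimeError("GPU has less VRAM than the quoted 6.9GB minimum")

    # Half precision roughly halves memory use (an assumption about your setup).
    pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)
    pipe = pipe.to("cuda")

    image = pipe(prompt).images[0]  # a PIL image
    image.save(out_path)
    return out_path
```

Calling `generate("a synthesizer made of coral, product photo")` would download the weights on first run and write `out.png` to the current directory. The pipeline call also accepts `num_inference_steps`, `height`, and `width` if you want to experiment with the steps and resolution settings.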

Details:

https://stability.ai/blog/stable-diffusion-public-release

Previously, for some of the more creative things you can do with text-to-image: