What does it mean to “master for iTunes”? Apple raised that question with the launch of a suite of utilities and sound-processing algorithms intended to master music for their codecs and software, rather than generically, as has been done for the CD. More significantly, what does it mean that a growing number of listeners experience all music through Apple’s software as the final gateway to their ears? In our first look at this issue, we welcome guest writer and producer Primus Luta (David Dodson). He tests the question the only way that really matters: with his ears.

There’s so much to say, in fact, that almost every one of David’s conclusions here is up for discussion and debate. To me, that isn’t a red flag for posting; quite the opposite, it’s an invitation. So consider this the beginning, not the end, of the conversation. -PK

The announcement of Apple’s new Mastered for iTunes suite caught me at a serendipitous time, as I was prepping the first release on my new label. In fact, it came right in the midst of my reviewing masters for that release. It’s an interesting situation for a compilation, in which styles range from ambient to muddy beats. Finding a balance that keeps them all flowing together is an art in and of itself. But it would seem Apple has solved all that with their Master for iTunes droplet: drag the high-quality files onto the droplet, and presto-chango, out come files that all play perfectly in iTunes.

Well, that’s the claim, but is it mastering or encoding? To their credit, Apple explains in the documentation that their 32-bit process encodes from high-resolution audio without leaving a dithered footprint. Ed.: “Dithering” is the addition of small amounts of noise to compensate for the errors that occur when reducing audio from a greater bit depth to a lesser one; the technique is used in image processing as well as in sound. According to Apple, using a greater bit depth for the intermediary file prevents aliasing and clipping, so no dithering is needed. -PK Apple’s tools aren’t the only way to do this; most pro audio editors can achieve the same result. But many people rip MP3s or AACs in their media players, so it is an important distinction. It still raises the question: why go down to CD specifications at all, especially while pointing out that the process yields quality better than CDs or CD rips? Ed.: The greater bit depth applies only to the intermediary file; final delivery is not only compressed, but at specifications set by the CD. The higher resolution and bit depth stay in the mastering files; they are not what the listener ultimately hears.
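The editor’s note above describes dithering in general terms. Here is a minimal Python sketch of one common form, TPDF (triangular) dither, applied while reducing float samples to 16-bit integers. It illustrates the generic technique only, not Apple’s 32-bit pipeline (which, per Apple, avoids dither); the function name `to_16bit` and the choice of TPDF are our assumptions.

```python
import random

def to_16bit(samples, dither=True, seed=None):
    """Requantize float samples (1.0 = full scale) to 16-bit integers.

    With dither=True, triangular (TPDF) noise spanning +/-1 LSB is added
    before rounding, so the rounding error no longer tracks the signal.
    """
    rng = random.Random(seed)
    out = []
    for x in samples:
        y = x * 32767  # scale to 16-bit integer steps (LSBs)
        if dither:
            # The sum of two independent uniform values in [-0.5, 0.5]
            # has a triangular distribution over [-1, 1] LSB.
            y += rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
        # Round to the nearest step and clamp to the signed 16-bit range.
        out.append(max(-32768, min(32767, round(y))))
    return out

# A signal sitting at only 0.2 LSB: plain rounding erases it entirely,
# while the dithered output still averages out to roughly 0.2 LSB.
quiet = [0.2 / 32767] * 20000
print(sum(to_16bit(quiet, dither=False)))                      # 0
print(sum(to_16bit(quiet, dither=True, seed=1)) / len(quiet))  # close to 0.2
```

The trade-off is a small, constant noise floor in exchange for low-level detail that survives the reduction in bit depth.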

The most important question, though, is how does it sound? When you send a song to be mastered, you generally expect to get back a song that sounds different from the one you started with. Usually the difference is in perceived overall volume, but it can also include changes to dynamics and other touches. So what changes does the Master for iTunes droplet make to your files? None: it just encodes them. Apple describes the process as follows:

The Droplet creates an AAC audio file from an AIFF or WAVE source file by first generating a CAF (Core Audio File) rendered with an iTunes sound check profile applied to the file. If the sample rate of the source file is greater than 44.1 kHz, it’s downsampled to 44.1 kHz using our mastering-quality SRC. Next, it uses this newly-rendered CAF to render a high-quality AAC audio file. Once the final AAC audio file is generated, the intermediary CAF is deleted.
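Apple doesn’t say how its “mastering-quality SRC” works. As a small illustration of why downsampling from, say, 96 kHz is more than simply dropping samples, this Python sketch computes the rational up/down factors a polyphase resampler would have to realize. The function `resample_factors` is hypothetical and says nothing about Apple’s actual filter design.

```python
from math import gcd

def resample_factors(src_rate, dst_rate):
    """Return (up, down) such that dst_rate == src_rate * up / down.

    A polyphase resampler conceptually upsamples by `up`, low-pass
    filters to remove anything above the new Nyquist frequency, then
    keeps every `down`-th sample.
    """
    g = gcd(src_rate, dst_rate)
    return dst_rate // g, src_rate // g

for rate in (48000, 88200, 96000, 192000):
    print(rate, "->", resample_factors(rate, 44100))
# 48000 -> (147, 160)
# 88200 -> (1, 2)
# 96000 -> (147, 320)
# 192000 -> (147, 640)
```

Only the 88.2 kHz source reduces to a simple 1:2 ratio; 48 and 96 kHz material needs the much less tidy 147/160 and 147/320 ratios.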

The key phrase for how your files will sound is “iTunes sound check profile applied to the file.” Rather than changing the gain of the audio itself, Sound Check writes metadata that tells the playback device how loudly to play it. What the documentation does not tell you is what that information is or how it is determined.

Reviewing masters involves listening on many different systems. I like to listen on studio monitors, a small boombox, a consumer surround sound theatre system, laptop speakers, a desktop computer with headphones and, of course, a portable media player with various headphones. I’ve also added a cloud-based stream to that mix, and doing that is what led to the experiment I conducted.

I uploaded a test master to the cloud and was comparing it there against iTunes when I noticed a rather huge discrepancy in volume. At first I figured the two were just set to different levels, but on checking, both were at their max. So I played my reference song, currently the title track from Monolake’s new album Ghosts (I try to keep my reference material relatively contemporary). On this track, the volume in the two applications was more or less the same. Meanwhile, my test master, which played pretty much on par with the Monolake track from the cloud, played significantly quieter in iTunes.

That was when I remembered Sound Check. I wasn’t on my normal listening computer and had never bothered to check whether Sound Check was enabled, but sure enough, when I looked, the preference was checked.

Ed.: I actually had some difficulty getting a solid answer, but after consulting with Apple-following journalist Jim Dalrymple of The Loop, we believe the default setting is off in iTunes for Mac and Windows and on iOS. If someone has a different answer, I’d love to hear it. What we can say is what Apple has documented in support document HT2425: Sound Check operates track by track, not album by album; it runs in the background; and it computes and stores non-destructive normalization information in ID3 tags. It works only with MP3, AAC, WAV, and AIFF files, and gain increases are applied before the built-in iTunes Limiter. That means you should consider the iTunes Limiter part of this process, too.

As soon as I disabled it, the volume was consistent across players. This inspired me to test how Sound Check was affecting other files, and so, going through my iTunes library, I built up a sample set of 25 songs to test the effects of Sound Check:

| Artist | Song | Sound Check |
| --- | --- | --- |
| Tori Amos | “Night of the Hunters” | null |
| Tori Amos | “Teenage Hustling” | – |
| Tori Amos | “Blood Roses” | – |
| Sun Ra | “Sea of Sound” | – |
| Stevie Wonder | “Superstition” (Live Bootleg) | – |
| Stellar OM Source | “The Oracle” | null |
| The Staple Singers | “I’ll Take You There” (Wattstax Live) | + |
| Sonnymoon | “Goddess” | – |
| SoiSong | “Jam Talay Say” | – |
| The Smiths | “The Queen is Dead” (Live) | – |
| Shigeto | “Huron River Drive” | – |
| Powell | “09” | – |
| PJ Harvey | “The Glorious Land” | – |
| Pharoah Sanders | “Harvest Time” (Vinyl Rip) | – |
| Oscar Pettiford | “Bohemia After Dark” | – |
| Pierre Schaeffer | “Bidule en ut” | + |
| Ojos de Brujo | “Zambra” | – |
| Nosaj Thing | “Us” | – |
| Nine Inch Nails | “The Great Destroyer” | – |
| Rotary Connection | “I Am The Black Gold of the Sun” | – |
| Muslimgauze | “Believers of the Blind Sheikh” | – |
| Muslimgauze | “Ramadan” | + |
| Moritz von Oswald | “Horizontal Structure 2” | – |
| Monolake | “Ghosts” | null |

– = Sound Check turned down the volume

+ = Sound Check turned up the volume

null = Sound Check had no effect on volume

This was all done by ear, and while my ears aren’t what they used to be, I’m willing to bet that if you ran the same test, your results would be similar. Ed.: You should also be able to inspect the actual ID3 data, but in this case perceived volume may be more interesting anyway, and the effect isn’t necessarily subtle.

About halfway through, I thought it’d be good to confirm these findings with numerical tests, but then I started noticing a pattern. Almost everything gets turned down, some tracks more than others; the most extreme example is the Nine Inch Nails track. The two tracks that get turned up are both archival recordings, so it makes sense that they sit at a lower volume. The Pharoah Sanders vinyl rip would likely have been turned up as well, were it not for the fact that vinyl rips are remastered to raise their volume. The same goes for the live Stevie Wonder bootleg.

The standouts are the tracks Sound Check has no effect on, each of which was released within the last two years. The Tori Amos track comes from her latest album, an orchestral one. Because of that result, I tested two other selections by her, from before and after the shift to digital technology, and both get turned down. “Blood Roses,” like “Night of the Hunters,” features no drums but still gets turned down, since the album’s mixing is definitely rock-influenced and the harpsichord sits on the loud side.

Stellar OM Source’s track is ambient drone, also without drums. The Monolake track, by contrast, is techno, full of drums and crunching distortion, yet Sound Check left it unaffected. (It’s also worth noting that the Powell track, which also has prominent drums, is only barely turned down.) Because “Ghosts” is one of my reference tracks, I had already analyzed it: despite peaking at the 0 dB maximum, its RMS averages only -14.5 dB. I’ve done this type of analysis on a number of modern tracks, and that is unusually low. Typically, drum- and bass-heavy tracks hit around -10 dB RMS, with some going as high as -6 dB RMS.
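For readers who want to reproduce this kind of analysis numerically, here is a minimal Python sketch that reports peak and RMS levels in dBFS for float samples where 1.0 is full scale. It uses plain (unweighted) RMS, under which a full-scale sine reads about -3 dBFS; some meters add 3 dB so that a sine reads 0, and the article doesn’t say which convention produced its figures, so compare numbers with care. Decoding samples from an actual audio file is left out, and the function names are ours.

```python
import math

def to_dbfs(value):
    """Convert a linear amplitude (1.0 = full scale) to dBFS."""
    return 20 * math.log10(value) if value > 0 else float("-inf")

def peak_and_rms_dbfs(samples):
    """Return (peak_dbfs, rms_dbfs) for a sequence of float samples."""
    peak = max(abs(x) for x in samples)
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return to_dbfs(peak), to_dbfs(rms)

# Ten whole cycles of a full-scale sine, 100 samples per cycle.
sine = [math.sin(2 * math.pi * k / 100) for k in range(1000)]
peak_db, rms_db = peak_and_rms_dbfs(sine)
print(f"peak {peak_db:.2f} dBFS, RMS {rms_db:.2f} dBFS")  # peak 0.00 dBFS, RMS -3.01 dBFS
```

The same 0 dB peak can sit over very different RMS values, which is exactly the gap between the -14.5 dB of “Ghosts” and the -6 to -10 dB of louder modern tracks.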

The results for the Monolake track led me to hypothesize that Sound Check was effectively applying an RMS limit of around -15 dB (with a tolerance I haven’t calculated yet). Anything below that gets turned up and anything above it gets turned down, with the precaution that turning up never pushes a track past the 0 dB max into clipping. This was confirmed when I normalized one of my test masters to an RMS of -15 dB: played in iTunes, that version came out at the same volume with Sound Check enabled as with it disabled.
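The hypothesis can be written down as a small rule. The Python sketch below is only that: a model of the author’s by-ear observations, not Apple’s algorithm, which is undocumented. The -15 dB target comes from the listening tests above, the cap on boosts models the “never clip” precaution, and the function name is hypothetical.

```python
def hypothesized_soundcheck_gain_db(rms_dbfs, peak_dbfs, target_rms_dbfs=-15.0):
    """Playback gain in dB under the '-15 dB RMS target' hypothesis.

    Tracks louder than the target are turned down in full; quieter tracks
    are turned up, but never by more than their headroom below 0 dBFS.
    """
    gain = target_rms_dbfs - rms_dbfs
    if gain > 0:
        # Headroom is how far the peak sits below 0 dBFS.
        gain = min(gain, -peak_dbfs)
    return gain

print(hypothesized_soundcheck_gain_db(-6.0, 0.0))    # -9.0: a loud master is cut
print(hypothesized_soundcheck_gain_db(-14.5, 0.0))   # -0.5: "Ghosts" barely moves
print(hypothesized_soundcheck_gain_db(-22.0, -4.0))  # 4.0: boost capped by headroom
```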

Where an object of mastering is to create a version of a song that plays at the optimum level across playback devices, where iTunes is rapidly becoming a primary application for playback, and where Sound Check is often enabled in iTunes preferences, it stands to reason that those producing masters today should aim for versions of songs whose levels Sound Check does not need to change. In that sense, mastering for iTunes can be understood as creating a quality master with an average RMS of -15 dB.

Before this, the primary constraint on a master’s levels was the 0 dB maximum, to prevent clipping. Below that, RMS levels could fall anywhere, and that freedom gave way to the loudness wars. The so-called “loudness wars” refer to the increasing use of compression to produce greater perceived loudness, tracked over the rise of big FM radio and the CD through the ’80s, ’90s, and today. Two songs that both peak at 0 dB can differ enormously in volume depending on their RMS. Production and mixing tricks, especially heavy use of dynamics processors like compressors, can squash a song so that its overall volume can be raised dramatically. Using these techniques, it’s entirely possible to create a mix (not a master) with a peak level of -4 dB but an RMS of -10 dB. If you master that track by raising its peak to 0 dB, the RMS rises by the same 4 dB, to around -6 dB. When this file is played in iTunes with Sound Check enabled, however, it will be turned down by about 9 dB to reach -15 dB RMS, leaving its peaks around -9 dB, below even the -4 dB peak the unmastered mix started with.
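The arithmetic in that example can be traced step by step. This Python sketch assumes, as the text does, that mastering applies a single static gain (so peak and RMS rise together) and that Sound Check brings the RMS to -15 dB; both are simplifications, and the function name is ours.

```python
def playback_levels(mix_peak_db, mix_rms_db, target_rms_db=-15.0):
    """Trace levels from mix, to master, to Sound Check playback (all in dB)."""
    master_gain = 0.0 - mix_peak_db            # raise peaks to 0 dBFS
    master_rms = mix_rms_db + master_gain      # RMS rises by the same amount
    soundcheck_gain = target_rms_db - master_rms
    played_peak = 0.0 + soundcheck_gain        # the master's peak was 0 dBFS
    return {
        "master_gain": master_gain,
        "master_rms": master_rms,
        "soundcheck_gain": soundcheck_gain,
        "played_peak": played_peak,
    }

print(playback_levels(-4.0, -10.0))
# {'master_gain': 4.0, 'master_rms': -6.0, 'soundcheck_gain': -9.0, 'played_peak': -9.0}
```

The played-back peak of -9 dB sits 5 dB below the -4 dB peak of the unmastered mix.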

The potential of adopting this as a standard is an end to the loudness wars as we’ve known them. As the above example shows, doing everything you can to push a song to the max ends up having the opposite effect. So rather than worry about loudness, producers and mixing engineers can return to focusing on getting good, clean mixes of songs. Mastering engineers can also worry less about pushing the volume to the max and focus on bringing the best out of the mixes.

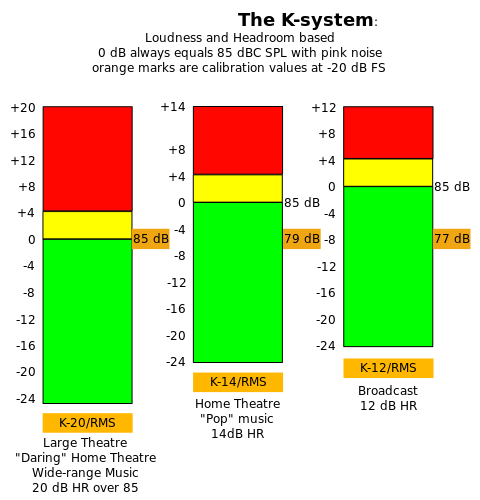

Incidentally, the tools for producing tracks that comply with this have long existed in the mastering world, thanks to Bob Katz and the K-System of level metering. Using K-14 metering for mastering (as well as producing and mixing) can ensure that engineers are not pushing their mixes too loud.
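To relate the K-System to the -15 dB figure, here is a small Python sketch converting an RMS level into a K-meter reading. It assumes, per our reading of Katz’s proposal, that 0 on a K-14 meter sits at -14 dBFS and that the meter’s RMS is sine-referenced (AES-17 style, about 3 dB above plain RMS). The article doesn’t say which RMS convention its -15 dB figure uses, so both cases are shown; the function and constant names are ours.

```python
import math

# A sine's peak is sqrt(2) times its plain RMS, about 3.01 dB.
SINE_REFERENCE_OFFSET_DB = 20 * math.log10(math.sqrt(2))

def k_meter_reading(rms_dbfs, k=14, input_is_plain_rms=True):
    """Reading on a K-k meter, whose 0 mark sits at -k dBFS (sine-referenced RMS)."""
    if input_is_plain_rms:
        # Convert plain RMS to the sine-referenced scale the meter assumes.
        rms_dbfs += SINE_REFERENCE_OFFSET_DB
    return rms_dbfs + k

print(round(k_meter_reading(-15.0, input_is_plain_rms=False), 2))  # -1.0
print(round(k_meter_reading(-15.0), 2))                            # 2.01
```

Under either convention, a -15 dB RMS master lands within a couple of dB of 0 on a K-14 meter.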

There are, however, some negatives that come with adopting such a standard. Because of the headroom afforded by digital, the creative use of volume has increased over the last decade. “Loud” has taken on new musical meaning, and the tools normally used to maximize loudness in mastering are being brought into production to create effects. One example is the pumping effect of side-chain compression on drums. This can be quite appealing creatively, even when (and perhaps because) it pushes into distortion. Creating this effect without clipping is easily managed with a limiter at the end of the signal chain. Creating it below -15 dB RMS, however, is not so straightforward, and the results won’t necessarily be the same.

For the mastering of multi-song projects there are other issues. Over the course of an album, dynamic shifts between songs can help to carry the mood of the project. One wouldn’t necessarily want all of the tracks to have the same -15 dB RMS; ideally, that would be reserved for the loudest song and the others mixed under that accordingly. It presents a challenge, but it is manageable. What’s nice about this type of limit is that, unlike the 0 dB max limitation, going over it does not necessarily result in destructive clipping, so there’s still a dynamic range within which to work. It’s also worth noting that the Sound Check process can be applied to an album to ensure consistency in listening.
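The album approach described here resembles the “album gain” idea in ReplayGain: one shared gain for the whole record instead of one per track. This minimal Python sketch assumes the same -15 dB RMS target and sets the shared gain from the loudest track; it ignores peaks and clipping, and the function names are hypothetical.

```python
def album_normalize(track_rms_db, target_rms_db=-15.0):
    """Apply one shared gain so the loudest track lands on the target.

    Quieter tracks keep their distance below the loudest, preserving the
    album's dynamic shape. Peaks and clipping are not considered here.
    """
    shared_gain = target_rms_db - max(track_rms_db)
    return [rms + shared_gain for rms in track_rms_db]

def track_normalize(track_rms_db, target_rms_db=-15.0):
    """Per-track normalization: every track is moved onto the target."""
    return [target_rms_db for _ in track_rms_db]

album = [-9.0, -12.0, -18.0]
print(album_normalize(album))  # [-15.0, -18.0, -24.0]
print(track_normalize(album))  # [-15.0, -15.0, -15.0]
```

Per-track normalization flattens the 3 dB and 9 dB steps between these songs; the shared gain keeps them.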

One has to hope that, should this become a standard, new creative ways of working within these parameters will be born. To be clear, -15 dB RMS, while not the loudest, can sound great on a great mix; just listen to the Monolake track if you want proof. Getting people to adopt it is a challenge, but I think the incentive will be there once artists realize that the more they push the volume, the more Sound Check, like their mothers, will turn it down.

As a footnote, I tested how Sound Check treats what was previously considered the most perfect album from a mixing and mastering perspective: Steely Dan’s Aja. In iTunes, Sound Check turns “Peg” down. So it’s not just your bass-heavy beats that could be affected. Nor is it just iTunes or just Sound Check: ReplayGain is a similar tool found in other media players, and Spotify applies similar volume normalization to its streaming service. These features will likely show up in more playback applications as time goes on, so adapting to this now is a pretty safe bet. Sure, your tracks may not sound the loudest when tested without these services, but with good mixes they will still sound good regardless. “Good” is far more important than “loud.”

I’m still on the fence, though. In general, I’m not a fan of automatic volume control, and adopting a mastering standard that caters to it just seems wrong, even if I am (for the most part) on the side of ending the loudness wars. And, again, on the creative side, I’m very concerned. A decade of loudness wars has in many ways changed our sense of sonic possibility, and signals pushed into the red, well, I kind of like those when they’re done creatively. People talking about the loudness wars usually mean traditional rock and pop music squashed and stripped of dynamics. But we’re now at a point where, for some genres, pushing into the red can be more valuable than dynamic range. It’s a completely different school of thought, and it need not be shut down (or turned down) because of an antiquated sense of the norm.

You can follow David Dodson on Twitter. http://twitter.com/#!/primusluta

We’re interested to hear what you think.