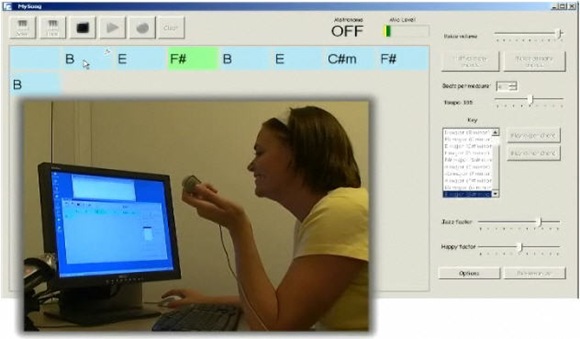

Microsoft Research has done some amazing work; it doesn’t always move me to tears, but there’s some fantastic stuff that deserves real recognition. And MySong is … well, technologically impressive, if musically painful. It’s a sort of collision between AutoTune and Band-in-a-Box: it recognizes a melody as input, then harmonizes that melody.

The vocal input works well, and it illustrates the range of inputs beyond the mouse you can expect in The Future. Here’s the problem: harmony is extraordinarily difficult to model on a computer because of the number of variables and how much of it is driven by instinct and art. And let’s be blunt: it doesn’t work right.

In short: if you’re planning to build a Jerome Kern robot, the technology may not be there just yet.

If you’ve got a strong stomach, you can watch the application lay waste to “The Way You Look Tonight.” Speaking of tears: composer Kern actually drove the lyricist, Dorothy Fields, to tears with the original. MySong might make you cry … in a different way. It chooses chords that fit a key and fit the melody, but completely unravels when it comes to making chords work horizontally, both with each other and with the melody. When you think about it, that isn’t all that easy even for an experienced musician. The funny thing is, the harmonic structure of the song isn’t that complex (well, until MySong gets cranked to its avant-post-bop setting later in the demo). Harmony may simply be harder than the technologists realize.

The researchers do compare their tool to Band-in-a-Box’s automatic harmony selection module, and MySong does work better than that, but that’s not saying much.

I also have to admit, I’m getting a little tired of all these tools that want to dumb down music, as if it were somehow music’s obligation to be push-button easy. Do we build giant robotic armatures so people can play basketball without practicing? Isn’t it the struggle that makes it fun? On this point, the researchers seem to have missed something: all those hours you spend sitting with an instrument working out chords are perhaps what music is about. There’s no musical secret the experts are keeping from everyone else. The songwriter with the guitar very likely received very little training. All of that tweaking of melody and harmony is part of the process that eventually yields things like, well, “The Way You Look Tonight.” Jerome Kern and Cole Porter and Richard Rodgers did it very quickly; amateurs may do it more slowly. But the process may not be reducible to rules in a way the current generation of computing intelligence can even understand, and even if it is, it may require more than one or two sliders to adjust.

The best part of the video is the editable parameters: sliders for Jazz factor and Happy factor settings. (Theory fans: the approach seems to be for Happy factor to lobotomize to major I/V chords and Jazz factor to eventually turn everything into sus13.) I’d like to suggest a few additional settings for reproducing a broader variety of music:

- Emo angst factor

- Tone deaf factor

- Pretentious techno chords factor

- Stoned factor

- Saccharine-sweet triteness factor

- Community theater audition accompanist factor

- Went to a liberal arts college where everyone on my floor played Ani DiFranco way too much factor

It’s well worth watching the demo. And, of course, this is the reason to tackle artificial intelligence: even if you’re unsuccessful, you’re learning. My guess is, we’ll need genuine AI before we can successfully harmonize melodies.

MySong, from Microsoft Research, makes your singing sound a lot better than it really does [istartedsomething]

MySong: Automatic Accompaniment for Vocal Melodies [Explanation, Demos, Academic Paper]