Everything has an “API” these days, but what that means in practice is often not so exciting. You can make little widgets for Facebook, or post recent Twitter messages, or do other simple developer tricks. Echo Nest’s “Musical Brain” API is more far-reaching: it’s an API for music. All music online. The first of a series of developer tools, “Analyze” is designed to describe music the way you hear it, figuring out tempo, beats, time signature, song sections, timbre, key, and even musical patterns. More developer tools will follow.
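"Tempo" and "beats" can sound abstract. In practice, a beat tracker usually reports a list of beat times, and the tempo in beats per minute follows from how far apart those beats are. Below is a minimal Python sketch. It assumes a hypothetical list of beat timestamps in seconds and is not the Echo Nest response format or the Analyze algorithm itself.

```python
from statistics import median

def estimate_bpm(beat_times):
    """Estimate tempo (beats per minute) from beat onset times in seconds.

    `beat_times` is a hypothetical list of beat timestamps, the kind of
    data a beat tracker produces; it is NOT the Echo Nest response format.
    """
    if len(beat_times) < 2:
        raise ValueError("need at least two beats to measure an interval")
    # Time between each pair of consecutive beats, in seconds.
    intervals = [b - a for a, b in zip(beat_times, beat_times[1:])]
    # The median shrugs off an occasional missed or doubled beat
    # better than the mean would.
    return 60.0 / median(intervals)

# Beats every half second correspond to 120 BPM.
print(estimate_bpm([0.0, 0.5, 1.0, 1.5, 2.0]))  # prints 120.0
```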

Twelve years in development, the Musical Brain is a bit like a digital music blogger. It’s been crawling the Web while you sleep, reading blog posts, listening to music to extract musically meaningful attributes, and even predicting music trends. It’s almost like a robotic, algorithmic Pitchfork. (And I’m serious: it may be April Fools’ Day, but this is real. That’s what the “brain” claims to do, backed by research at UC Berkeley, Columbia, and MIT.)

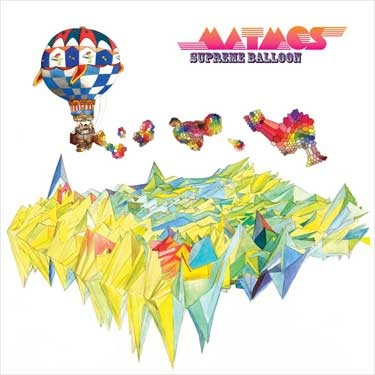

What does all of this mean? The Musical Brain may not be replacing your friendly local music blog any time soon, but it can infuse some musical intelligence into applications like music visualization. The Matmos image at top was made by using the Analyze algorithm to map the timbral profiles of songs on an upcoming Matmos album; an artist then rendered that graph in watercolor, translating the digital into traditional paint media.

The Analyze API could also enable everything from Web music apps and mash-ups to live audiovisual performance tools, or even smarter music games. That’s the reason co-creators Brian Whitman and Tristan Jehan, both with PhDs from the MIT Media Lab, chose to open up development to a broad audience. I got to speak to Brian a bit about the project.

Machines that Get Music

“We’ve had it internally in code for a long time — but we want other people to make cool stuff with this,” Brian explains. “We have friends who make visualizers, VJ-type things, music mash-ups. So some of the things we’ve seen are visualizers. Video games is another one. We have a lot of friends who make electronic music, making Max patches and stuff, making chop-up kind of things [mash-up tools] now.”

Brian’s background was in natural language processing; Tristan had worked in acoustic analysis. Both had followed research in automated music listening (machine listening), as well as new techniques for training computers to understand musical properties and human language. What’s been missing, says Brian, is a way to bring these research developments together, now mature after years of academic work, and put them in front of a broader audience: not only programmers, but artists as well. Echo Nest is their way of doing that.

Mash-up Machine

Right now, you can upload songs to the Musical Brain to try out the analysis, or read the documentation and experiment on your own.

But if you’re eager to see where this may be going, there’s also a first Web application that shows how these tools could work in online development. It’s called “thisismyjam”, and it uses the API to create “micromixes”, instant mixes of your music. Type in some music (it handled a wide range, though it missed some of the more obscure examples I threw at it), and it will mash the tracks together into a single, consistent mix.

Here’s my first experiment. I intentionally chose some disparate music I liked to see how it would cope, and let the algorithm choose the ordering automatically so the tracks would match one another.
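Echo Nest hasn’t said exactly how thisismyjam picks its ordering, so treat this as a hypothetical illustration only. One simple way to sequence tracks so neighbors "match" is a greedy nearest-neighbor chain on tempo: keep the first pick as the opener, then repeatedly append whichever remaining track has the closest tempo. The `(title, bpm)` pairs and the function name are assumptions made for the example.

```python
def chain_by_tempo(tracks):
    """Greedily order tracks so each one is followed by the closest tempo.

    `tracks` is a hypothetical list of (title, bpm) pairs. This is an
    illustrative nearest-neighbour heuristic, not thisismyjam's algorithm.
    """
    if not tracks:
        return []
    remaining = list(tracks)
    order = [remaining.pop(0)]  # keep the user's first pick as the opener
    while remaining:
        last_bpm = order[-1][1]
        # Pick the remaining track whose tempo is nearest the current one.
        nearest = min(remaining, key=lambda t: abs(t[1] - last_bpm))
        remaining.remove(nearest)
        order.append(nearest)
    return order

mix = chain_by_tempo([("track A", 128), ("track B", 90),
                      ("track C", 124), ("track D", 95)])
print([title for title, _ in mix])  # prints ['track A', 'track C', 'track D', 'track B']
```

A fuller version could also weigh key and timbre, both of which the Analyze API reports, by swapping the tempo difference for a combined distance. The greedy structure stays the same.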

Because thisismyjam knows something about music, it was able to match my tune to something related.

Now, thisismyjam is mostly a curiosity and demonstration for the moment. The attention-deficit-style mixing of tunes was intentional, says Brian. The project grew out of the team’s frustration with licensing rules, which limit the length of audio excerpts on sites like iTunes. “What we were trying to do is, if you want to hear an entire album in a minute, do it our way,” he says.

That said, it does suggest some of the possibilities of working with large-scale musical information. Unlike Pandora, whose musical information is entered manually, this is all generated by an algorithm. (See, by way of contrast, our interview with Pandora’s founder.) Even this basic example shows what machines may be able to do when given some additional human-style intelligence. Mash-ups aside, these analysis tools could go beyond the basic audio analysis and beat detection in current music software and open up new audio-processing possibilities, even with audio you’ve recorded yourself.

Get Developing; More Information

Of course, the nice thing about all of this is that, like Matmos, you can get started with happy music picture-making right away, for free, using tools like Flash and Processing.

Developer Site (you’ll want to request an API key)

And if you don’t want to brave coding, there are plenty of examples to play around with:

thisismyjam.com (free mash-up community site)

We’ll be watching this as it develops — and I’ll be messing with Processing, the free, artist-friendly coding tool for Mac, Windows, and Linux, so I hope to post some examples over on CDM Labs soon.

If you’re involved in related research, we’d love to hear about that, too.