In Robert Seidel’s “motion painting” Meander, digital video is fragmented into atomic textures, as sound and visuals alike crackle through streams and clouds. Don’t mention the word “glitch” or “datamosh”: it’s really more like digital action painting. Working with the visual development environment TouchDesigner, Robert collaborates with sonic artist Heiko Tippelt, as seen here in excerpts from a recent performance in São Paulo, Brazil. Materials are fractured and reconstructed, in granular fashion. I got to talk to Robert a bit about his work.

meander // live performance | Robert Seidel & Heiko Tippelt | ROJO NOVA @ MIS Brazil from Robert Seidel on Vimeo.

CDM: How do you conceive this work, artistically speaking?

In short – “meander” is an audio-visual live performance rearranging video fragments into a subconscious flow, freed from spatial and temporal constraints. The performance concept is based on abstract live motion paintings, which derive from ideas in my painterly, experimental films. The cinematic soundscape is added by a musician and friend of mine – Heiko Tippelt.

I started working with motion artefacts years ago, long before the fashionable term “data moshing”. When working on “_grau” (see below) in 2004, I experimented with a lot of visual techniques, like motion compression artefacts. I love their textural quality and the beautiful way they fuse different motion patterns together, creating a hybrid of different visual states. It seemed almost like a “metaphoric tool”, since _grau was all about creating and referring to structures that are close to the way our memory and perception work. Since I could not find a solution to control them more precisely, they became very subtle textures, almost unnoticeable.

_grau | 10:01 min | d 2004 from Robert Seidel on Vimeo.

With my work on “Futures” in 2006 (below), I used the technique to create very complex, undefined structures that take shape over time, revealing something of unexpected clarity. These seconds of uncertainty are one of my interests in developing abstract and abstracting systems, and were the next step towards developing a realtime motion painting system.

Futures – Zero 7 feat. José González | 3:58 min | d 2006 from Robert Seidel on Vimeo.

In 2007, I developed an audio-visual live performance with Max Hattler for Aurora Festival and I created software that allowed me to fuse different videos in a very painterly way, for the first time in realtime. My goal was to create a flow between different movements of animals using found footage material. This was the first iteration of the live software (the internal name is “tierschmier”, which could be translated to “animalsmear”) to create a constantly fusing flow of images.

While travelling to festivals and exhibitions in the same year I started to replace the animal footage successively with my own recordings. My “memory collection” grew with shots from a myriad of locations in Europe and Asia, adding moments with very specific textures and rituals from architecture or human behaviour as well as impressions of food and nature. And then, when touring Japan in 2008 with Max for 5 weeks I had the feeling of becoming part of a corrupted image flow. We had so many university talks and screenings in so many cities all over Japan that I lost track. When people sometimes revisited us at another location, I got very confused; it seemed like one place was melting into the next.

So after Japan I shaped my software around that experience, adding new material with every performance. Over the last few years I have created fluctuating, dream-like memory landscapes with artists like Rechenzentrum 2.0 with Ensemble Contemporánea, Shackleton, Michael Fakesch or No Accident in Paradise. Sometimes the motion paintings were very minimal, other times I added temporal layers until the captured perspectives of the material dissolved. All the sound artists played experimental or cinematic compositions, but the collaboration with musician Heiko Tippelt added a new layer since we have known each other for a very long time.

How have the two of you worked together, across those media of sound and visuals?

This year I got the invitation to ROJO NOVA in São Paulo to do a live performance with a musician of my choice. I went for Heiko Tippelt since we have worked on several projects together, starting with the score of “_grau” and my upcoming experimental movie “floating” (below).

floating | trailer | robert seidel from Robert Seidel on Vimeo.

Heiko had developed and captured several songs and sounds over the years, so we sat together and developed a loose choreography for “meander”. We talked more in abstract terms like density, detail or pacing, to create a system that was not too rigid and allowed changes with every new show. I find the spontaneity of performing live very inspiring, so we do not sync anything. Heiko develops the sound and I develop painterly compositions along its outlines. Sometimes the motion painting and the soundscape flow perfectly together, other times they drift away completely and meander into something unexpected. After working on a film or installation for many months, I really enjoy the contrast of creating new images along a loose score, having the freedom to improvise. As in my movies, this allows the viewer to search for secondary or even tertiary audio-visual correlations and not just simply follow a repetitive beat or visceral event.

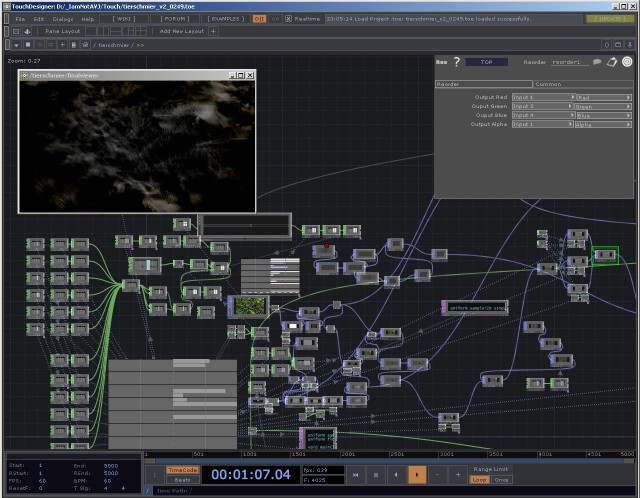

What’s it like working with the software TouchDesigner?

Being familiar with node trees from 3D software, I found it quite easy and inspiring to unleash the power of the graphics card for realtime purposes. But since the software is very complex, it was great to have the support of one of the developers from Derivative [TouchDesigner’s creators]. Markus Heckmann and I have known each other since Bauhaus University and I loved the possibilities of TouchDesigner back in the day, but not the old user interface. So the first version of my software was implemented in Visual Basic instead. But with the preparation of “meander” I migrated all functionality to the “new” Touch and added a couple of possibilities based on shaders or layering.

Overall, it is a very powerful and robust tool with a good node tree implementation. It has some peculiar ways of working and is not always logical or streamlined, but at the moment it is my favourite realtime tool. It has been free for non-commercial use for some time now and I hoped it would “explode” like Processing or vvvv with thousands of new users, but the community is still quite small given its possibilities.

How are you controlling it live? What are you using for input and to manipulate performance parameters?

Easily controlling the image flow has a very high priority, so after fighting with the mouse and keyboard for some time I finally settled on the KORG nanoKONTROL [MIDI controller], which is cheap and quite flexible. Since it is not very precise and has a life of its own, it sometimes influences the image generation process. So it behaves very much like an analog instrument by adding tiny variations.

The abstract tableaux and their visual flow can be controlled by several parameters like speed, direction, density and transparency. Since I am able to re-map the controller in Touch easily and add new functionality with every performance, the control of the “fractured memory perspectives” becomes more and more fluid.
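[For readers curious what this kind of controller mapping can look like in code, here is a minimal Python sketch. It is not Robert’s TouchDesigner patch. The CC numbers, parameter ranges, and the names `CC_MAP`, `scale_cc`, `Smoother` and `handle_cc` are all assumptions made for illustration. It scales 7-bit MIDI controller values (0 to 127) into the four parameters he names and smooths them slightly so that a jittery fader produces gradual changes rather than jumps.]

```python
from dataclasses import dataclass


@dataclass
class ParamRange:
    low: float
    high: float


# Hypothetical mapping: CC numbers and ranges are illustrative only and
# would need to match however the controller is actually configured.
CC_MAP = {
    0: ("speed", ParamRange(0.0, 4.0)),
    1: ("direction", ParamRange(-1.0, 1.0)),
    2: ("density", ParamRange(0.0, 1.0)),
    3: ("transparency", ParamRange(0.0, 1.0)),
}


def scale_cc(value: int, rng: ParamRange) -> float:
    """Scale a 7-bit MIDI CC value (0..127) linearly into rng."""
    value = max(0, min(127, value))  # clamp out-of-range input
    return rng.low + (value / 127.0) * (rng.high - rng.low)


class Smoother:
    """One-pole smoothing per parameter; amount near 1.0 reacts slowly."""

    def __init__(self, amount: float = 0.8):
        self.amount = amount
        self.state = {}

    def update(self, name: str, target: float) -> float:
        # The first value seen for a parameter is used as its starting point.
        prev = self.state.get(name, target)
        new = self.amount * prev + (1.0 - self.amount) * target
        self.state[name] = new
        return new


def handle_cc(cc: int, value: int, smoother: Smoother):
    """Return (parameter_name, smoothed_value), or None for unmapped CCs."""
    if cc not in CC_MAP:
        return None
    name, rng = CC_MAP[cc]
    return name, smoother.update(name, scale_cc(value, rng))
```

[Re-mapping the controller then amounts to editing one table, for example adding a new entry to `CC_MAP` before the next show. The smoothing amount is a matter of taste: a lower value follows the fader more tightly and keeps more of the hardware’s small variations in the picture.]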

Thanks to Robert for starting the conversation. Readers, feel free to add your own thoughts or questions in comments. More images below of Robert’s work…