Being a digital musician requires a new set of skills, a precise tack between the forces of engineering and creativity. Robert Henke, aka Monolake, is someone I always find thought-provoking, not only because he’s so open and articulate, but because he seems uniquely focused on balancing those two sides of his personality. As a media artist and producer, he relies heavily on his own technological inventions, yet he manages to stay true to his own aesthetic compass.

For acoustic evidence of where Robert’s mind has been exploring, listen to his full-length album Silence, released last month on his own Imbalance label; it reverberates with clarity. To my ears, its crystalline rhythms and finely honed, always-foreground timbres and textures recall the best of Monolake through the years, back to the early, pre-Ableton collaboration between Robert and Gerhard Behles (now Ableton’s CEO). (For an eloquent review, see Fact Magazine’s take.)

As for engineering in the sense of recording and production, Robert did a terrific interview with engineer/musician Caro Snatch for her blog; she gets some fascinating answers out of him, and they even discuss his technique of avoiding compression on electronic sources. But I was interested in how engineering can work in the compositional sense: with open-ended tools like Ableton Live and Max/MSP, how do you create compositional systems? How do you wrestle with the potential of Max inside Live? Where do you draw limits?

As always, Robert has some sharp ideas; whether they serve as fodder for inspiration or disagreement, I think you’ll find things worth talking about. And while technology figures prominently, many of these ideas are fundamentally about music: compositional intent, thinking about sound, and thinking about rhythm.

PK: It seems that you’ve always had a really particular approach to timbre, and that it’s especially focused and evolved on this record. There’s a certain purity of tone to which you tend to gravitate, as I hear it. Can you talk a bit about how you approach timbral color?

RH: I can only nail it down to personal taste. I enjoy timbres with inharmonic content, and I like the contrast between very sharp transients and very lush, airy sounds.

I know that Silence, like your other work, combines synthesized and found sounds. There is a sense, however, that you get to an almost atomic level with each: the synthesized sounds become organic, and the recorded sounds are deconstructed to the point that they become almost primitive and synthetic. Do you approach each of these differently, or is that something that happens naturally?

The ambiguity of sonic events always fascinates me. That border between ‘real’ and ‘synthetic’ is a very interesting one, not only in sound design but also in the visual arts. Working with synthetic sound generation sharpens my senses for the real sounds around me, and I am often surprised by how much they can blend. We are no longer talking about sound generation with a single square-wave oscillator and a lowpass filter, but about methods capable of creating highly complex and rich timbres. The sonic definition of those methods matches the complexity of real sounds, and this is where the fun starts. I like to place a recording of a metal thing next to a physical model of a metal thing, next to a processed sample, next to an FM timbre, and see how they become a nice ensemble of similar sounds.

What’s your workflow like now in Ableton Live? On some level, it’s a tool that does things that you have conceived or asked for, or that reworks things you’ve created. On another, of course, it’s also this commercial tool that has been adapted to a generalized audience. Are there areas of it that you tend to work in most? Are there areas or features you tend to ignore or even avoid?

I try to avoid ‘content’. I am not interested in ‘throwing beat loops together’. I do not use other people’s presets when it comes to synthesis; that is just not my way of thinking. Why should I leave that great part of composition, coming up with interesting timbres, to someone else? I also do not use time stretching / warping as a tool to match beats. I don’t like time-stretch artefacts, unless I push it to the very extreme as a special effect. I don’t need factory groove templates; in fact, I never use groove at all. If I want to achieve it, I move notes by hand.

Apart from that, I’d say I use everything Live has to offer. There is no typical workflow; it depends heavily on what I want to do. For me, the most significant difference from the old pre-Live days is that I can make lots of sketches without any special idea in mind, just let go, and save the result once I am bored with it. Much later I can open all those sketches and see if anything in there is of interest. Then I grab that element and continue working from there. I have a lot of complex tree structures of fragments on my hard disk, and this is a great source of material and inspiration.

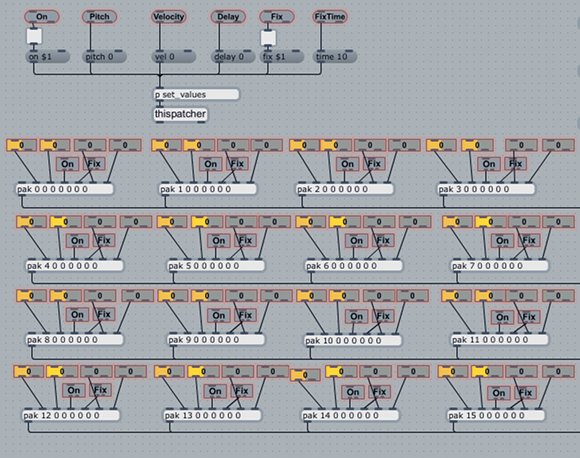

Recently, you shared some of your early, personal Max patches as Max for Live creations. Were any of these patches used on Silence?

I don’t mean to focus exclusively on the technology, but it seems that these Max patches – even more than any element of Live – really embody some of your aesthetic and taste, yes? They’re a bit like experiencing a Monolake album interactively. Do you conceive them in that way, as a sort of compositional thought formed into a tool?

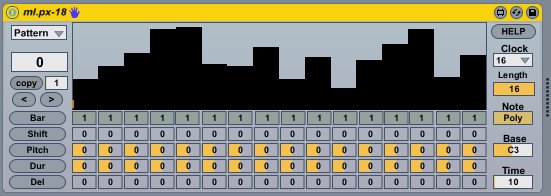

The tools have a strong influence on the result. Take the Monolake PX-18 sequencer. Its way of expanding a one-bar loop into something that repeats in longer cycles is based on such a rigid concept that it enforces a quite specific rhythmic approach. Some patterns are simply not possible; some are very easy to achieve. This is exciting, and it is very musical. A piano is an instrument that makes it very easy to treat all twelve notes of a well-tempered scale the same, and it makes it impossible to play any notes that do not fit in such a scale. This is exactly the same interesting tension between enabling and inhibiting expression as with the rhythmic limitation of the PX-18.

There is an interesting interaction going on between developing tools and achieving musical results. The whole process is far from linear and far from entirely result-oriented. The idea at the beginning is shaped by first results and by experience gained from playing with a simple prototype of part of the functionality; this drives the further development of the tool, but it also influences the musical idea. If I try to build a granular time freezer, and after initial tests I figure out that I need a lot of overlapping grains to get the sound I want, I can also start thinking in swarms of particles, and this might lead to musical ideas that shape how I try to improve the grain thing. Working this way often produces far more interesting results than sticking to an initial plan. As an interesting side note, this way of thinking is also finding its way more and more into general software/hardware development and interface/functionality design. The tools of the future need to _feel_ right. One cannot design a multi-touch screen application on a piece of paper, implement it, and expect it to work. It would, technically, but it might not be inspiring to use, and therefore would most likely not be a success in a competitive market.
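The interview doesn’t describe how Robert’s granular time freezer works. As a rough illustration of the general idea of freezing a moment with many overlapping grains, here is a minimal Python sketch of a generic granular freeze (not his patch): short Hann-windowed grains are all read from around one position in a sample and overlap-added. The function name `granular_freeze` and its parameters (`grain_len`, `overlap`, `jitter`) are hypothetical choices made for this example.

```python
import math
import random


def hann(n: int) -> list[float]:
    """Periodic Hann window; copies spaced n/k apart sum to a constant k/2."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def granular_freeze(source: list[float], freeze_pos: int, out_len: int,
                    grain_len: int = 1024, overlap: int = 8,
                    jitter: int = 256, seed: int = 0) -> list[float]:
    """Hypothetical sketch: 'freeze' one moment of `source` by overlap-adding
    many windowed grains read from around `freeze_pos`.

    `overlap` is how many grains sound at once; higher values give a denser,
    smoother, more swarm-like texture.
    """
    if len(source) < grain_len:
        raise ValueError("source must be at least one grain long")
    rng = random.Random(seed)
    window = hann(grain_len)
    hop = grain_len // overlap            # spacing between grain onsets
    out = [0.0] * out_len
    for start in range(0, out_len, hop):
        # Jitter the read position so grains are not identical copies.
        pos = freeze_pos + rng.randint(-jitter, jitter)
        pos = min(max(pos, 0), len(source) - grain_len)
        for i in range(min(grain_len, out_len - start)):
            out[start + i] += source[pos + i] * window[i]
    # Overlapping Hann windows sum to overlap / 2 (when grain_len is a
    # multiple of overlap and overlap >= 2), so rescale to unity gain.
    gain = 2.0 / overlap
    return [s * gain for s in out]
```

Raising `overlap` packs more grains into the same span, pushing the texture toward the dense, swarm-like sound he mentions; the `2.0 / overlap` gain keeps the level roughly constant as the density changes.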

A few years ago, when you were in New York, you made a couple of comments that stuck with me. One was that you thought the tech press sometimes wasn’t critical enough of technology; for instance, that they weren’t saying critical things about Ableton Live. Another was that you felt there was less need for Max/MSP, partly because of what Live itself does. Do you have any new thoughts on either of those?

I find myself doing a lot of things in Max these days, since the integration in Live has made it so easy and rewarding. When I made that Max statement in NYC, I felt that coding is a trap when it comes to actually creating music. One simply spends too much time on non-musical problems. In many ways, Max 5 and Max for Live have reduced the time needed to get results, and this makes the whole package very attractive again.

I started teaching sound design at the Berlin University of the Arts a year ago. I can show my students how to create a simple two-operator FM synthesizer with an interesting random modulation within fifteen minutes, and the result is a Live set, including the Max for Live part, which I can save and email to the students so they can open it again and continue working on it. If things can be done that fast, it leaves enough headroom to actually use them in a musical context. In retrospect, a lot of 90s IDM music was far too driven by exploring technology. At some point one has to step back and say: okay, now let’s actually look at the composition and not only at the technical complexity of the algorithm.
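Robert doesn’t spell out his classroom patch, but the textbook two-operator FM structure is simple enough to sketch. Below is a minimal Python version, assuming a sine modulator that phase-modulates a sine carrier, y = sin(2π·fc·t + I·sin(2π·fm·t)), with the “random modulation” interpreted (as an assumption) as a sample-and-hold that jumps the modulation index I to a new random value at a fixed rate. The function `fm_two_op` and its parameters are hypothetical; this is not his Max for Live device.

```python
import math
import random


def fm_two_op(duration_s: float, sr: int = 44100, carrier_hz: float = 220.0,
              ratio: float = 1.41, index: float = 2.0, rand_depth: float = 1.5,
              rand_rate_hz: float = 8.0, seed: int = 1) -> list[float]:
    """Hypothetical two-operator FM voice with sample-and-hold random modulation.

    y[n] = sin(2*pi*fc*t + I(t) * sin(2*pi*fm*t)),  fm = ratio * fc,
    where I(t) jumps to index + uniform(0, rand_depth) every 1/rand_rate_hz s.
    """
    rng = random.Random(seed)
    mod_hz = carrier_hz * ratio
    hold = max(1, int(sr / rand_rate_hz))   # samples between random jumps
    current_index = index
    out = []
    for n in range(int(duration_s * sr)):
        if n % hold == 0:
            # Sample-and-hold: pick a new modulation index, keep it until next jump.
            current_index = index + rng.uniform(0.0, rand_depth)
        t = n / sr
        modulator = math.sin(2 * math.pi * mod_hz * t)
        out.append(math.sin(2 * math.pi * carrier_hz * t + current_index * modulator))
    return out
```

A non-integer `ratio` such as the default 1.41 produces sidebands that are not harmonics of the carrier, the kind of inharmonic content he mentions enjoying earlier; an integer ratio would give a harmonic spectrum instead.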

So, what’s the role of the press in this? One thing I learn from reading the Ableton user forum and from talking with students is that there is a great deal of insecurity about which technology to use. It’s the abundance paradox. Which software sounds best? Which compressor do I need to use? Which plugins do I need for mastering housy dub music with a hint of pop and some acoustic guitar? Having the choice between 5000 compressor plugins while not understanding what really makes a compressor sound the way it does is pretty much my idea of hell. So often I have the impulse to tell the world: hey, you can use the sidechain input of the compressor you already have in Live, and you can feed that sidechain with a slightly delayed version of the original signal. You could also apply saturation, filtering, or even reverb, or yet another instance of the compressor, to that sidechain signal to shape its timing and its response to the input. This will affect the compression curve, which means you can build anything from a very normal compressor up to the most exotic effect you can imagine. And you can store those structures for later re-use. You can automate every single aspect of it. You can use ten or twenty instances of it in a song. Are you guys aware that you have more power right in front of you than the best music producers and hardware designers of just ten years ago would have dreamed of?
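The delayed-sidechain trick can be illustrated outside Live. The following Python sketch is a generic feed-forward compressor, not Live’s Compressor device: its level detector listens to a copy of the input delayed by `delay_ms`, so gain reduction arrives late and the start of each transient slips through uncompressed. The function name, the peak-envelope detector, and all default values are assumptions made for the example.

```python
import math


def compress_with_delayed_sidechain(signal: list[float], sr: int = 44100,
                                    delay_ms: float = 5.0,
                                    threshold_db: float = -20.0,
                                    ratio: float = 4.0, attack_ms: float = 1.0,
                                    release_ms: float = 100.0) -> list[float]:
    """Hypothetical feed-forward compressor keyed by a delayed copy of its input.

    Because the detector hears the signal `delay_ms` late, the gain drop lags
    the audio and the first milliseconds of each transient pass untouched.
    """
    delay = int(sr * delay_ms / 1000.0)
    # Sidechain = input shifted later in time by `delay` samples.
    sidechain = ([0.0] * delay + list(signal))[:len(signal)]
    a_att = math.exp(-1.0 / (sr * attack_ms / 1000.0))
    a_rel = math.exp(-1.0 / (sr * release_ms / 1000.0))
    env = 0.0
    out = []
    for x, s in zip(signal, sidechain):
        level = abs(s)
        coeff = a_att if level > env else a_rel
        env = coeff * env + (1.0 - coeff) * level        # peak envelope follower
        level_db = 20.0 * math.log10(max(env, 1e-9))
        over = level_db - threshold_db
        gain_db = -over * (1.0 - 1.0 / ratio) if over > 0 else 0.0
        out.append(x * 10.0 ** (gain_db / 20.0))         # gain applied to dry audio
    return out
```

To mimic the other ideas in the paragraph, you would process the `sidechain` list (saturate it, filter it, and so on) before it reaches the detector; the audio path itself is only ever scaled by the computed gain.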

I simply do not want to read any more articles about a new compressor, be it hardware or software, unless they provide insight into the amazing possibilities we already have. I don’t want to read any more sound-quality discussions about the last bit of a 24-bit file in a world where people listen to mp3s on mobile phones and enjoy those artefacts.
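For a sense of scale (a standard back-of-the-envelope figure, not something from the interview): each bit of linear PCM doubles the number of amplitude steps, worth about 6.02 dB, so in a signed 24-bit file the least significant bit sits roughly 138 dB below a full-scale peak:

$$
20\log_{10} 2 \approx 6.02\ \text{dB per bit}, \qquad
20\log_{10}\!\left(2^{-23}\right) = -23 \times 20\log_{10} 2 \approx -138.5\ \text{dBFS}
$$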

The most exciting new music comes from young kids running some audio software in a bedroom, listening to the result over a shitty hi-fi and using Melodyne all wrong. Those folks do not read gear magazines, and they could not care less about yet another mastering EQ, but they create the most stunning beauty. When people talk too much about gear, I usually do not expect much good music. I am often trapped in this twilight zone between engineer and composer myself, so I know what I am talking about here…

As far as your own music, do you find you need some critical distance from a tool as an artist? Or does that fall away once you’re in the process of actually making the record? (It seems, after all, we’re all a bit spoiled by the various excellent tools we have at our disposal.)

Deadlines help. If I know that a project needs to be finished, I simply stop investing time in technology at some point and use what’s there instead. It’s a question of discipline and experience too. I try to teach my students that if they are working on a technically challenging project, they need to define a deadline for the technical side. If not, they might work on technical stuff until the very last moment and lose focus on the artistic part. In the end, the result counts, not the beautiful Max patch that could possibly create a nice result.

And have you ever considered trying to return to just building something simple in, say, Max, and limiting yourself to that? Or are you able to find necessary formal limitations in the tools you have?

I am constantly limiting myself. I set up a multi-dimensional network of constraints and bounce off its walls. It’s exhausting, but it helps get stuff done. A typical constraint: no more patching in Max until that project is finished, or try to get all the Melodyne processing done in one afternoon and use those results.

I’m particularly interested in how you conceive rhythm. It seems like some of the ideas about sequencing rhythm in ATOM are also present here. Some of these rhythms are relatively symmetrical, pulse-like. Then you have these stuttering rhythms, as though a vibration has been set in motion and is naturally playing itself out in space. How do you work rhythmically?

I contrast totally straight 16th-note grooves with material that has its own rhythmic quality off that grid. On Silence, I obviously used a lot of gravity-driven processes, with their inherent accelerations. Or I played notes with an arpeggiator that is not synced to song time, controlling its rate with a slider instead. Gerhard already did that on the very first Monolake track, ‘Cyan’, in 1995; Silence offers quite a few hidden connections to Monolake history. My general approach to groove is simple: I shift things in time until it feels right.
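Robert doesn’t say how his gravity-driven processes are built. One common way to get that kind of accelerating, off-grid rhythm is a bouncing-ball model, sketched here in Python as an assumption rather than as his method: flight time between bounces is proportional to the launch speed, and each impact scales the speed by a coefficient of restitution, so each gap is a fixed fraction of the previous one. `bouncing_ball_onsets` and its parameters are hypothetical.

```python
def bouncing_ball_onsets(first_interval_s: float = 0.5, restitution: float = 0.75,
                         min_interval_s: float = 0.01) -> list[float]:
    """Hypothetical sketch: onset times (seconds) of a ball bouncing to rest.

    Each gap between impacts is `restitution` times the previous one,
    producing an accelerating rhythm that ignores any tempo grid.
    Stops once gaps get shorter than `min_interval_s`.
    """
    if not 0.0 < restitution < 1.0:
        raise ValueError("restitution must be between 0 and 1")
    if min_interval_s <= 0.0:
        raise ValueError("min_interval_s must be positive")
    onsets = [0.0]
    interval = first_interval_s
    while interval >= min_interval_s:
        onsets.append(onsets[-1] + interval)
        interval *= restitution      # each bounce is shorter than the last
    return onsets
```

The gaps form a geometric series, so all the bounces fit within `first_interval_s / (1 - restitution)` seconds (2 seconds with the defaults), however small `min_interval_s` is. Because the onsets are in seconds rather than beats, they sit freely against a straight 16th-note grid.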

What was your compositional process like, generally, for these works? Did they start with some of those sounds? With a rhythmic motive?

There is no general rule. I often just open Live to explore an idea and end up doing something else because I found an interesting detail along the way. Or I have to work on a highly specific project and discard a lot of the results because they do not work in that context. Instead of throwing them away, I keep them, and they might form the basis for another composition.

The title, “Silence,” certainly recalls John Cage. Was that intentional? Were there other meanings here? In an album that’s not silent, what is the role of silence?

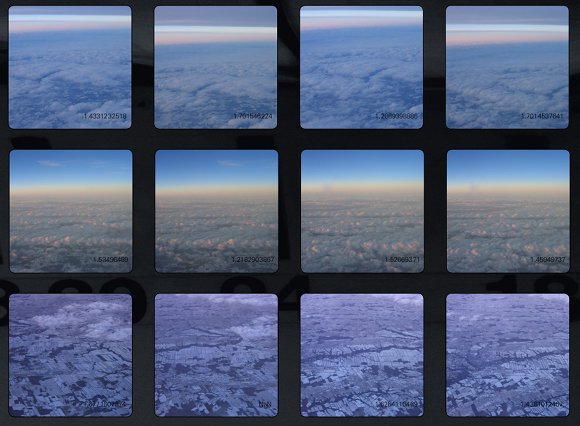

Silence is such a great concept. There is no silence except in a vacuum; it’s that great mystic world which cannot exist in ours. Also, in music the time between the musical events is as important as the events themselves. But I really leave it up to the listener’s associations to make sense of the title, and of the liner notes, the photographs, and the music. I think there is a lot of room for all sorts of connections and connotations.

When we talked at the end of last year, we got to reflect a bit about winter. I’m editing this as I watch a snowstorm here in Manhattan, having come from snowstorms in Stockholm. It seems that winter is again a thread on this record. How did winter play into the album?

I grew up in the Bavarian countryside. Winter there equals silence, introversion, deep thinking, and general inwards focus. I like this.

http://monolake.de/

Free Max for Live patch downloads: http://monolake.de/technology/m4l.html

Silence: http://monolake.de/releases/ml-025.html