There are cameras. There’s video and 3D. What happens when you create a futuristic mixed reality space that combines them, live? I headed to a cavernous northern New Jersey warehouse to find out.

With or without the pandemic crisis, our lives in the digital age straddle physical and imagined worlds, meatspace and electronic ones. XR represents a collection of current techniques to mediate between these. Whether you read the X as cross or mixed, XR is a way to play in the worlds between what’s on screen or video and what exists in physical space.

Now that webcasts and video conferencing have become the norm, the reality of mixing these media is thrown into relief in the mainstream public imagination. There’s the physical – you’re still a person in a room. Then there’s the virtual – maybe your appearance, and the appearance of your physical room, is actually not the thing you want to express. And between them lies a gap – even with a camera, the viewpoint is its own virtual version of your space, different from the way we see when we’re in the same space with another person. XR as a buzzword can melt away, and you begin to see it as a toolkit for exploring alternatives to the simple, single optical camera point of view.

To experience first-hand what this might mean for playing music, I decided to get myself physically to Secaucus (earlier in March, when such things were not yet entirely inadvisable). Secaucus itself lies in a liminal space of New Jersey, between the distant realities of Newark Liberty International Airport, the New Jersey Turnpike, and Manhattan.

Behind a small entrance to a nondescript, low-slung beige building, WorldStage hides one of the biggest event resources on the eastern seaboard. Their facility holds an expert team of AV engineers backed by a gargantuan treasure trove of lighting, video, and theatrical gear. Edgewater-based artist/engineer Ted Pallas and his creative agency Savages have partnered with WorldStage, using its uniquely advanced setup to realize new XR possibilities.

“Digital artists collaborating with this new technology pave the road for where xR can go,” says Shelly Sabel, WorldStage’s Director of Design. “Giving content creators like Savages opportunities to play on the xR stage helps us understand the potential and continue in this new direction.”

I was the guinea pig in experimenting with how this might work with a live artist. The mission: get out of a Lyft from the airport, minimizing social contact, unpack my backpack of live gear (VCV Rack, a mic, and a controller), and try jamming on an XR stage – no rehearsal, no excuses. It really did feel like stepping onto a Holodeck program and playing some techno.

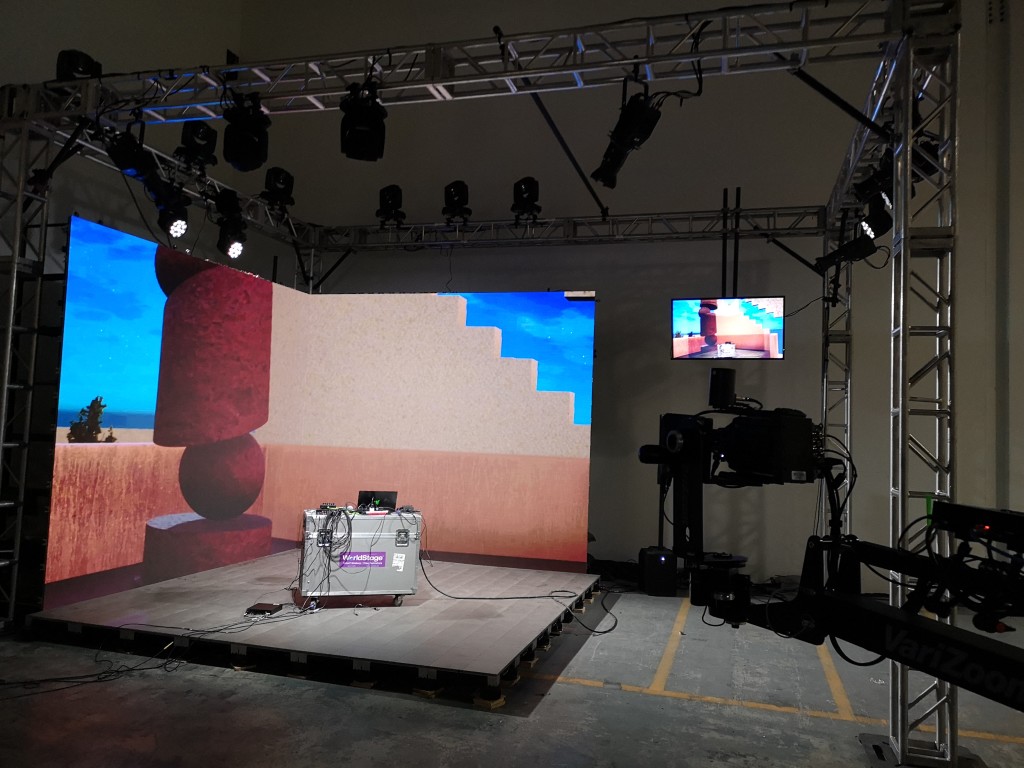

And I do mean stage. The first thing I found was a decent-sized surface, LEDs on the floor, a grid of moving head lights above, and over-sized fine-grade LED tiles as a backdrop on two sides. Count this as a seven-figure array of gear powering a high-end event stage.

The virtual magic is all about transforming that conventional stage with software. It’s nothing if not the latest digital expression of Neo-Baroque aesthetics and illusion – trompe-l’œil projection in real space, blended with a second layer of deception as that real-world LED wall imagery is extended in virtual space on the computer for a seamless, immersive picture.

It’s a very different feeling than being on a green screen or doing chroma key. You look behind you and you see the arches of the architecture Ted and his team have cooked up; the illusion is already real onstage. And that reality pulls the product out of the uncanny valley back into something your brain can process. It’s light years away from the weather reporter / 80s music video cheesiness of keying.

I’m a big believer in hacking together trial runs and proofs of concept, and fortunately, so are Ted and his team, which is how I became the first to try out this XR setup in this way. He tells CDM:

This was our first time having an artist in one of our xR environments, in a specific performance context – we’d previously had some come visit, but Peter is the first to bring his process into the picture. As such, we decided to keep things mellow – there was a lot of integration getting blessed as “stable” for the first time, and I wanted to minimize the potential for crashing during the performance – my strong preference is to do performances in one take.

The effects you’ll see in the video are pretty simple and subtle by design. Plus I was entirely improvising – I had no idea what I would walk onto in advance, really. But the experience already had my head reeling with possibilities. From here, you can certainly add additional layers of augmentation – mapping motion graphics to the space in three dimensions, for instance – but we kept to the background for this first experiment.

Just as in any layered illusion, there’s some substantial coordination work to be done. The Savages team are roping together a number of tools – tools which are not necessarily engineered to run together in this way.

The basic ingredients:

Stype – camera tracking

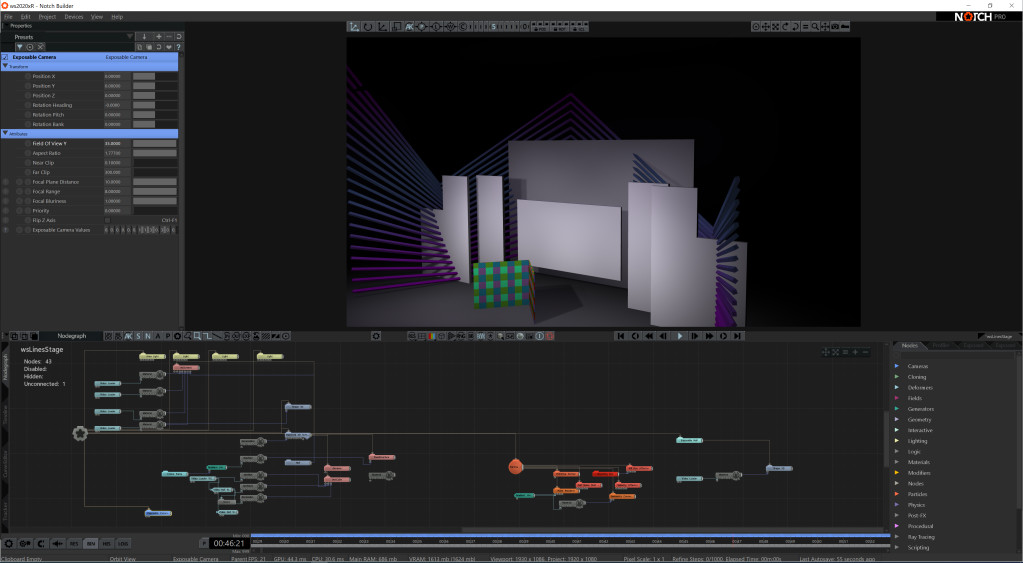

disguise gx 2c – media server (optimized for Notch)

Notch – real-time content hosted natively in disguise media software

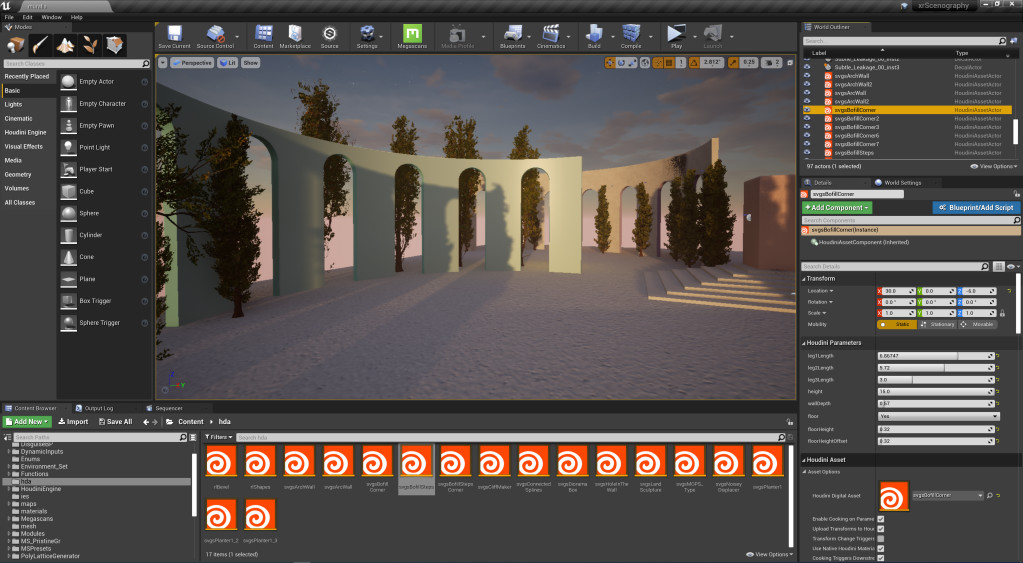

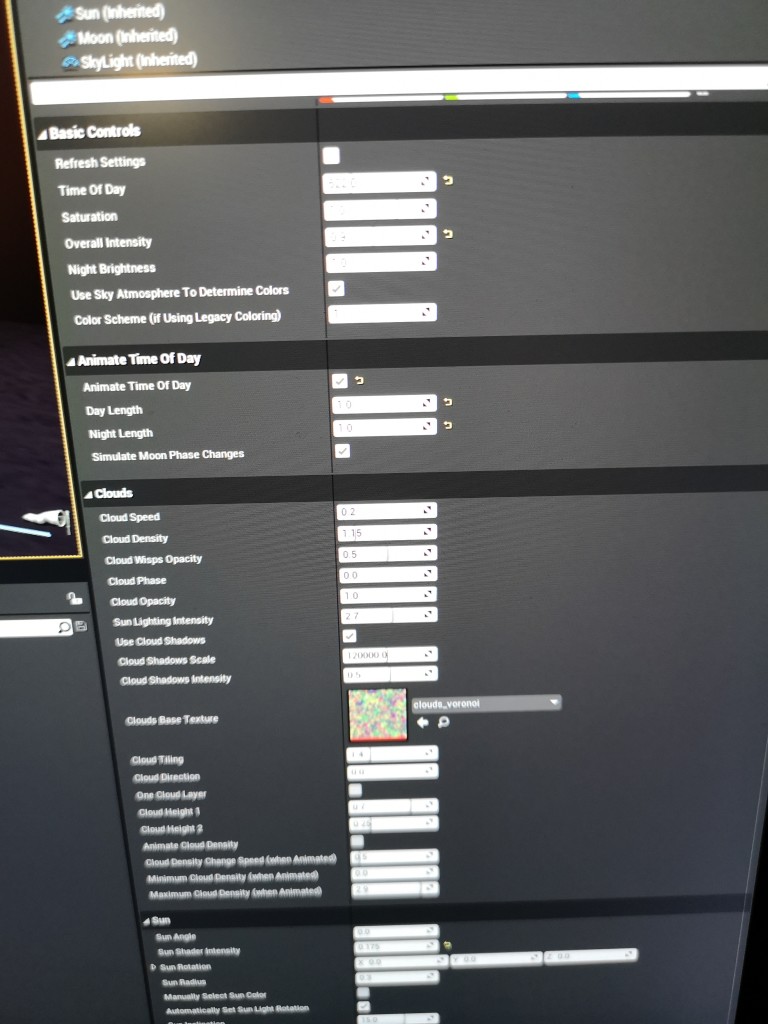

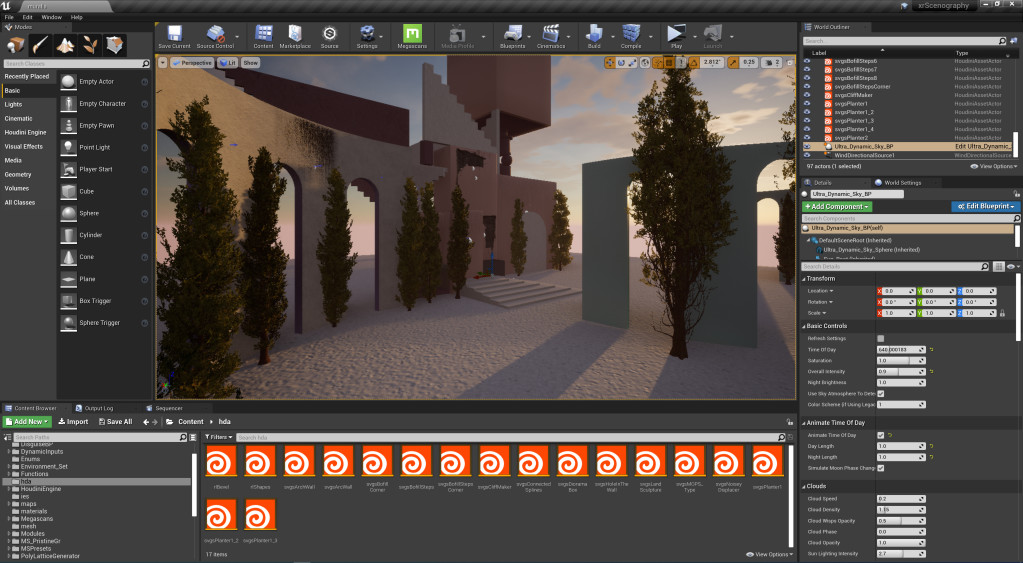

Unreal Engine – running on a second machine feeding disguise

BOXX hardware for Unreal, running RTX 6000 GPUs from NVIDIA

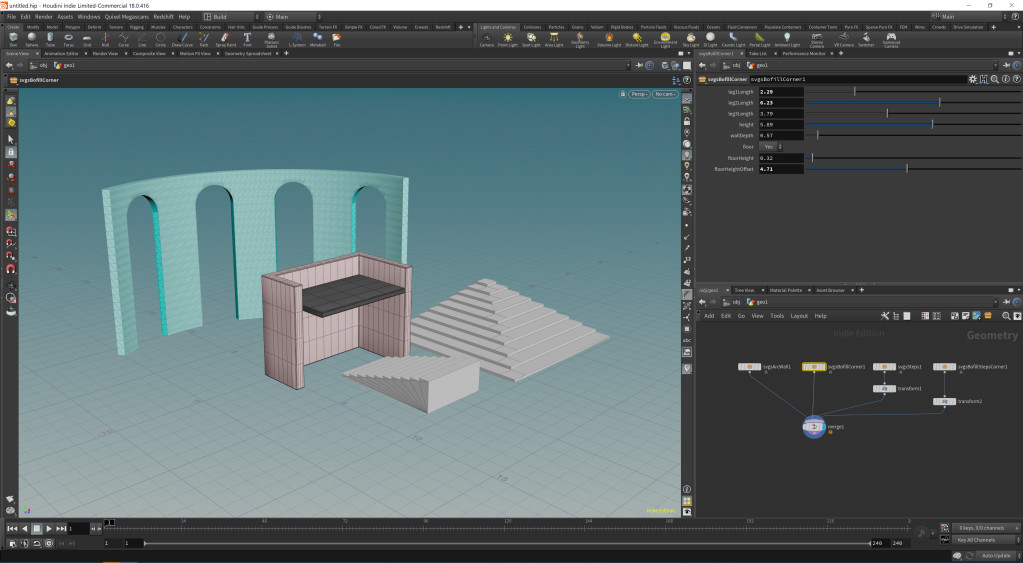

SideFX Houdini software for visual effects

Camera tracking is essential – in order to extend the optically-captured imagery with virtual imagery as if it were in-camera, it’s necessary for each tiny camera move to be tracked in real time. You can see the precision partly in things like camera vibrations – the tiniest quiver has a corresponding move in the virtual video. Your first reaction may actually be that it’s unimpressive, but that’s the point – your eye accepts what it sees as real, even when it isn’t.
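To make the tracking idea concrete, here is a minimal sketch of the geometry involved. It assumes a simple pinhole camera that can only slide and pan. It is not Stype’s data format, and it is not how disguise computes its output. `TrackedPose`, `project`, and the 1500-pixel focal length are hypothetical. What it shows is that virtual content has to be re-projected from the tracked pose on every frame, so even a tiny camera move shifts the virtual image.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedPose:
    """One camera-tracking sample (hypothetical format): position in metres, pan in radians."""
    x: float
    y: float
    z: float
    pan: float  # rotation about the vertical (y) axis; positive turns the camera toward +x


def project(point, pose, focal_px=1500.0, cx=960.0, cy=540.0):
    """Project a world-space point into the image of a pinhole camera at `pose`.

    Returns (u, v) pixel coordinates, or None if the point is behind the camera.
    focal_px, cx and cy are assumed values for a 1920x1080 image.
    """
    # Move the point into camera-relative coordinates.
    dx = point[0] - pose.x
    dy = point[1] - pose.y
    dz = point[2] - pose.z
    # Undo the camera's pan so the camera looks straight down +z.
    c, s = math.cos(-pose.pan), math.sin(-pose.pan)
    xc = c * dx + s * dz
    zc = -s * dx + c * dz
    if zc <= 0:
        return None
    # Perspective divide, then shift to pixel coordinates (v grows downward).
    u = cx + focal_px * xc / zc
    v = cy - focal_px * dy / zc
    return (u, v)
```

Take a virtual point 5 m in front of the camera. It lands at the image centre. Slide the camera 1 mm to the right and the same point moves about 0.3 px to the left. That is the scale of quiver the tracking has to follow for the virtual extension to stay locked to the physical LED wall.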

Media servers are normally tasked with just spitting out video. Here, disguise is processing data and output mapping at the same time as it is crunching video signal – hiding the seams between Stype camera tracking data and video – and then passing that control data on to Notch and Unreal Engine so they’re calibrated, too. It erases the gap between the physical, optical camera and the simulated computer one.
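The relay role described above can be sketched as a single calibration step followed by a fan-out. This assumes tracking arrives as simple pose samples tagged with a frame number. `TrackingHub`, `Pose`, and the origin-offset calibration are hypothetical stand-ins, not disguise’s actual API. A real system also has to handle lens data and latency, which this sketch ignores.

```python
from dataclasses import dataclass, replace
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Pose:
    x: float
    y: float
    z: float
    pan: float


class TrackingHub:
    """Hypothetical relay: calibrate each tracking sample once, then send the same pose to every renderer."""

    def __init__(self, origin_offset: Tuple[float, float, float] = (0.0, 0.0, 0.0)):
        # Offset from the tracker's coordinate origin to the stage origin (an assumed calibration).
        self.origin_offset = origin_offset
        self.renderers: List[Callable[[int, Pose], None]] = []

    def register(self, renderer: Callable[[int, Pose], None]) -> None:
        self.renderers.append(renderer)

    def on_sample(self, frame: int, raw: Pose) -> Pose:
        ox, oy, oz = self.origin_offset
        pose = replace(raw, x=raw.x - ox, y=raw.y - oy, z=raw.z - oz)
        # Every engine receives the identical, already-calibrated pose for this frame.
        for renderer in self.renderers:
            renderer(frame, pose)
        return pose
```

The design choice here is that the correction happens in exactly one place. If each render engine applied its own calibration, the engines could drift apart, and the seams between them would show up on camera.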

For those of you who follow this kind of setup, Ted notes that disguise is instancing Notch directly on its timeline, while Unreal is hosted on that outboard BOXX server. And the point, he says, is flexibility – because this is virtual, generative architecture. He explains:

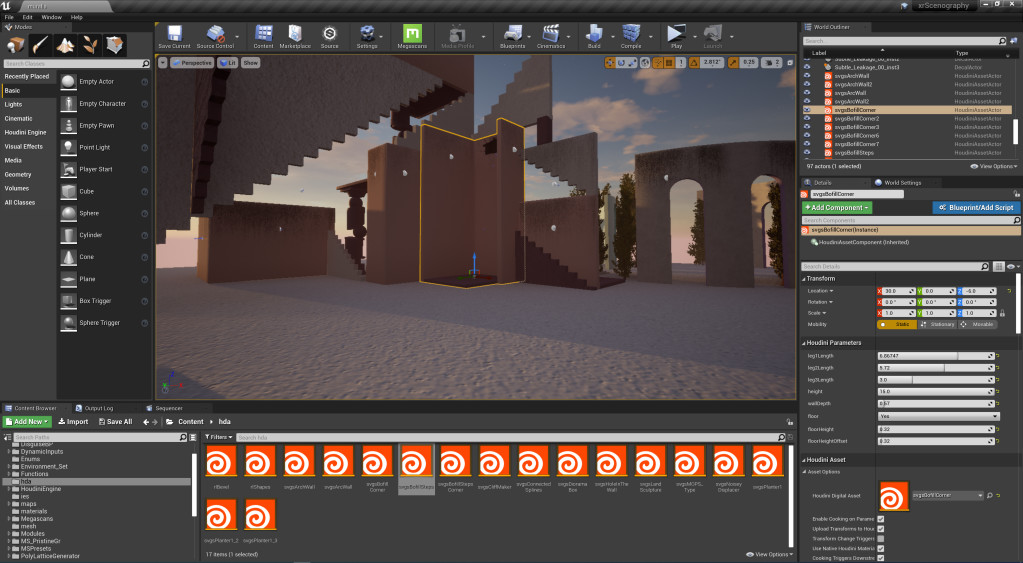

Apart from the screen surface in the first set, all geometry was instanced and specified inside of the Unreal Engine via studio-built Houdini Digital Assets. HDAs allow Houdini to express itself in other pieces of software via the Houdini Engine – instead of importing finished geometry, we import the concept of finished geometry and specify it within the project, usually looking through the point of view of the [virtual 3d] camera.

This is similar in concept to a composer writing a very specific score for an unknown synthesizer, and then working out a patch with a performer specific to a performance. It’s a very powerful way to think about geometry from the perspective of the studio. Instead of worrying about finishing during the most expensive part of our process time-wise — the part that uses Houdini — we buffer off worrying about finishing until we are considering a render. This is our approach to building out our digital backlot.

The “concept of finished geometry” means a parameterized model of what that geometry will be. There’s that Holodeck aspect again – you’re free to play around with what appears in virtual space.
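As a loose analogy for importing a parameterized model instead of finished geometry, here is a small generator for a stair outline. It is plain Python, not the Houdini Engine API, and `stair_profile` and `recipe` are hypothetical names. The parameters, the “concept,” are stored on their own. They only become points when a render needs them, so they can be tweaked while looking through the virtual camera.

```python
def stair_profile(steps: int, rise: float, run: float):
    """Return the 2D outline (x, y) of a flight of stairs as a list of corner points."""
    if steps < 1 or rise <= 0 or run <= 0:
        raise ValueError("steps must be >= 1; rise and run must be positive")
    points = [(0.0, 0.0)]
    x = y = 0.0
    for _ in range(steps):
        y += rise  # vertical riser
        points.append((x, y))
        x += run   # horizontal tread
        points.append((x, y))
    return points


# The "concept" of the geometry: a parameter set kept separate from any finished mesh.
recipe = {"steps": 4, "rise": 0.5, "run": 0.8}

# Only at render time is the recipe turned into actual points.
outline = stair_profile(**recipe)
```

Changing `recipe["steps"]` and calling `stair_profile` again gives a new staircase without re-importing anything. That is roughly the flexibility Ted describes when he talks about putting off finishing until render time.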

There are two set pieces here as a demo. I actually quite liked even the simple first set, onto which they mapped a Minimoog image on the fly – partly because it really looks like I’m on some giant synth conference stage in a world that doesn’t yet exist. Ted describes the set:

The first set is purposefully pedestrian – in as little time as possible, we took a screen layout drawing for an existing show, added a bit of brand-relevant scenic, and chucked it in a Notch block. The name of the game here was speed – start to finish production time was about three hours. On the one hand, it looks it. On the other hand, this is the cheapest possible path to authoring content for xR – treat it like you’re making a stage, and then map it from the media server like it’s a screen. What’s on the screen can even be someone else’s problem, allowing digital media people to masquerade as scenic and lighting designers.

The second piece is more ambitious – and it lets a crew transport an artist to a genuinely new location:

The second set design was inspired by architect Ricardo Bofill’s project La Muralla Roja. As the world was gearing up to shut down, we spent a lot of time discussing community. La Muralla Roja was built to challenge modern perspectives of public and private spaces. Our Muralla is intended to do the same. We see it as a set for multiple performers, each with their own “staged location,” or as a tool to support a single performer.

And yes, placing an artist in the set (that’ll be me, bear with me here) adds another layer to the process. Ted says:

[Bofill’s] language for the site is built out of plaster and the profile of a set of stairs, modulated by perpendicularity and level. An artist standing on [our] LED cube is modulating a perpendicular set of surfaces by adding levels of depth to the composition.

This struck me as a good peg for us all to use to hang our hats. Without you [Peter] standing there, the screens are very flat – no matter how much depth is in the image. Likewise, without the stairs, La Muralla Roja would be very flat. When I was looking for references, this is what struck me.

It may not be apparent, but there is a lot still to be explored here. Because the graphics are generative and real-time, we could develop entire AV shows that make the visuals as performative as the sound, or even directly link the two. We could use that to produce a virtual performance (ideal for quarantine times), but also extend what’s possible in a live performance. We could blur the boundary between a game and a stage performance.
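One simple way to link sound and visuals directly is an envelope follower. This assumes the visuals expose a 0-to-1 parameter, such as light intensity or a material’s glow. The follower measures the RMS level of each block of audio samples and smooths it into a control value. `rms`, `Follower`, and the smoothing value are illustrative choices, not part of the Savages setup.

```python
import math


def rms(block):
    """Root-mean-square level of one block of audio samples in the range [-1, 1]."""
    if not block:
        return 0.0
    return math.sqrt(sum(s * s for s in block) / len(block))


class Follower:
    """Smooths per-block levels into a 0..1 control value for a visual parameter (hypothetical mapping)."""

    def __init__(self, smoothing: float = 0.8):
        # 0 = follow each block instantly; values closer to 1 = slower, smoother response.
        self.smoothing = smoothing
        self.value = 0.0

    def update(self, block) -> float:
        level = min(1.0, rms(block))
        # One-pole low-pass filter on the block level.
        self.value = self.smoothing * self.value + (1.0 - self.smoothing) * level
        return self.value
```

The smoothing matters because raw per-block levels jump around too much to drive lighting or geometry without visible flicker.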

It’s basically a special effect as a performance. And that opens up new possibilities for the performer. Here I was pretty occupied just playing live, but having now dipped into these waters for the first time, I’m of course eager to reimagine the performance for this context – since the set I played here was really conceived as something that fits into a (real-world) DJ booth or stage area.

Ted and Savages continue to develop new techniques for combining software, including getting live MIDI control into the environment. So we’ll have more to look at soon.
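The article doesn’t say how that MIDI integration works. As a starting point, this sketch decodes a raw MIDI 1.0 Control Change message and scales its value to a render-parameter range. A Control Change has a status byte from 0xB0 to 0xBF, with the low nibble giving the channel, followed by two 7-bit data bytes. The function names are hypothetical, and routing the result into disguise, Notch, or Unreal is left out.

```python
def parse_control_change(message: bytes):
    """Decode a 3-byte MIDI Control Change message.

    Returns (channel 1-16, controller number, value), or None if the bytes
    are not a well-formed Control Change.
    """
    if len(message) != 3:
        return None
    status, controller, value = message
    if (status & 0xF0) != 0xB0:
        return None  # some other message type, e.g. Note On (0x9n)
    if controller > 127 or value > 127:
        return None  # data bytes must be 7-bit
    return (status & 0x0F) + 1, controller, value


def cc_to_param(value: int, lo: float = 0.0, hi: float = 1.0) -> float:
    """Scale a 7-bit CC value (0-127) linearly into the range [lo, hi]."""
    return lo + (hi - lo) * value / 127
```

For example, a fader sending CC 74 at full travel on channel 1 (`bytes([0xB0, 74, 127])`) decodes to `(1, 74, 127)`, and `cc_to_param(127)` maps it to 1.0.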

To me, the pandemic experience is humbling partly in that it reminds us that many audiences can’t physically attend performances. It also reveals how virtual a lot of our connections were even before they were forced to be that way – and reveals some of the weakness of our technologies for communicating with each other in that virtual space. So to sound one hopeful note, I think that doubling down on figuring out how XR technologies work is a way for us to be more aware of our presence and how to make the most of it. Our distance now is necessary to save lives; figuring out how to bridge that distance is an extreme but essential way to develop skills we may need in the future.

Full set:

Artist: Peter Kirn

Designer (Scenography, Lighting, VFX): Ted Pallas, Savages

Director of Photography: Art Jones

Creative Director: Alex Hartman, Savages

Technical Director: Michael Kohler, WorldStage Inc.

Footnote: If you’re interested in exploring XR, there’s an open call out now for the GAMMA_LAB XR laboratory my friends and partners are running in St. Petersburg, Russia. Fittingly, they have adapted the format to allow virtual presence, so the event itself can go on, and it will bring together some leading figures in this field. It’s another way worlds are coming together – including Russia and the international scene.

Gamma_LAB XR [Facebook event / open call information in Russian and English]

https://gammafestival.ru/gammalab2020 [full project page / open call]