The new iPhones and iOS are three-dimensional instruments with depth-sensing 3D cameras: pure magic. And one of Japan’s top studios has all but given away a tool that lets you unlock all of that, including in the powerful visual app TouchDesigner.

It’s a shame that smartphones have become objects for doomscrolling and depression. Inside, these are Star Trek-style pocket computers, packed with sensors whose miniaturization is a marvel of science and engineering.

Those sensors can become musical instruments, visual instruments, and art tools, or power work that blurs those very categories.

The iPhone recalls the do-it-all Tricorder. It’s full of sensors that give it awareness of its surroundings, from advanced versions of traditional devices (magnetometers and gyroscopes) to more futuristic additions like LiDAR (a laser range sensor).

This isn’t all the work of Apple. In the case of those LiDAR sensors, you can thank a vendor called Lumentum. Apple has added enough to Lumentum’s bottom line (by delivering around a quarter of that company’s revenue and, surely, via the Apple halo effect) that Lumentum is now blowing billions buying a laser company. Now that’s my kind of tech. Don’t talk to me about how fun it is having a beer tap and ping-pong table. Buy a damned laser company.

Anyway, since we can’t all go buy laser companies (or lasers), we can make use of LiDAR on new iPhones.

(Footnote: there’s a superb technical deep dive on the iPhone 12’s LiDAR sensor by Sabbir Rangwala for Forbes. Rangwala led an automotive LiDAR unit, so suffice it to say he knows his stuff. That’s why it ran in the transportation section: “The iPhone 12 – LiDAR At Your Fingertips”.)

There are plenty of apps that let you do fun stuff with the LiDAR sensor, but none quite like ZIG SIM.

The app is the work of uber-chic Kyoto design agency 1→10, Inc. And what a generous – and impressive – calling card, in case you hadn’t encountered their work. An earlier version for Android and iOS already had the power to bundle tons of onboard data and send it to a computer.

But the current ZIG SIM is a work of art. It accesses every sensor and then some: touch, Apple Pencil, battery, motion data, compass, GPS, pressure, proximity, mic level, remote control, and Beacon (a Bluetooth proximity technology), for starters. That alone should get the brain going.

But it’s also an insanely awesome tool for visuals and interactive music. You can use it with NDI (Network Device Interface), a protocol for sending video and audio over a network. You can directly access image detection (machine learning and algorithmic recognition of faces, QR codes, rectangles, and text). You can work with the augmented reality tools in ARKit, including position and rotation tracking.

The real trick here, though, is using NDI, which streams the camera’s video, with the depth image enabled on the rear-facing camera.

There’s a great written tutorial from the developers:

https://1-10.github.io/zigsim/getting-started.html

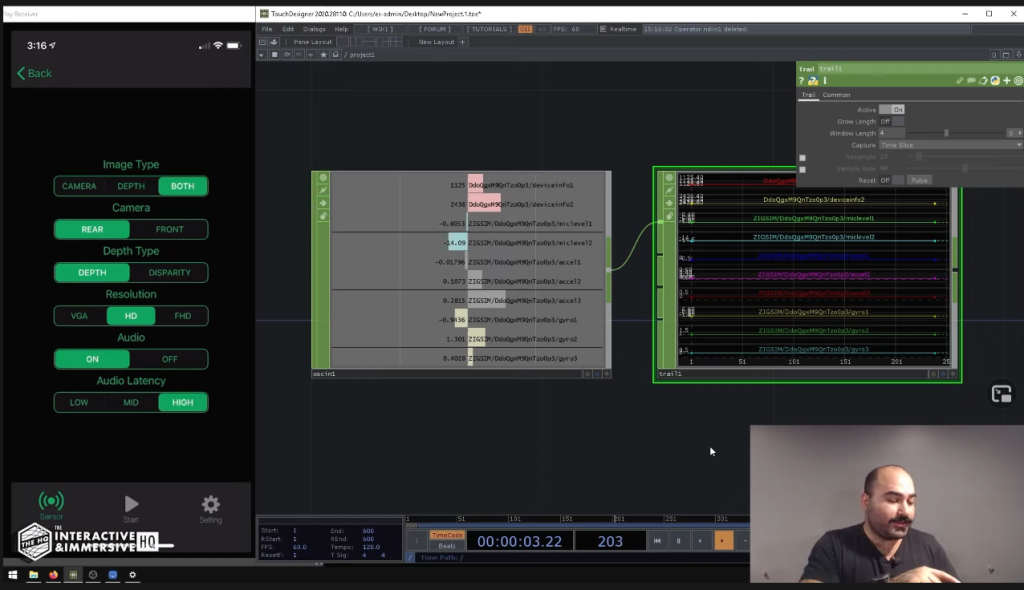

That tutorial already covers every available sensor, using TouchDesigner as the example.

But you should also check out the excellent video tips from The Interactive & Immersive HQ by Elburz and Matthew Ragan. Elburz leads the way:

About 10 minutes in, you get the depth-sensing trick. It’s not obvious (and not really detailed in the developer tutorial): he spotted that you can grab the depth-sensing camera from the NDI page, here:

TouchDesigner is a convenient endpoint, but it’s not the only one. Other NDI tools will work, too, and you can use the data streams with anything that works with OSC and JSON (like your own code, if you’ve got the chops).
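If you do want to roll your own, here’s a minimal Python sketch of the receiving end for JSON over UDP. It assumes ZIG SIM is set to send JSON via UDP to your computer’s IP address, that each datagram carries one complete JSON object, and that you’ve entered port 50000 in the app (that number is a placeholder; match whatever you set on the phone). It deliberately assumes nothing about the message layout and just prints the top-level keys, so you can see what your device actually sends.

```python
import json
import socket
from typing import Any

# Assumption: 50000 is a placeholder port; use the port you set in ZIG SIM.
HOST = "0.0.0.0"  # listen on every local network interface
PORT = 50000


def decode_packet(data: bytes) -> Any:
    """Decode one UDP datagram, assuming it holds a single UTF-8 JSON value."""
    return json.loads(data.decode("utf-8"))


def listen(host: str = HOST, port: int = PORT) -> None:
    """Receive datagrams forever and print the top-level keys of each JSON object."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        while True:
            data, addr = sock.recvfrom(65535)  # large enough for any IPv4 UDP datagram
            try:
                message = decode_packet(data)
            except (UnicodeDecodeError, json.JSONDecodeError):
                continue  # ignore anything that isn't valid UTF-8 JSON
            if isinstance(message, dict):
                print(addr[0], sorted(message))
```

Call `listen()` and move the phone around. Once you see which keys arrive, you can pick out the fields you care about and route them wherever you like.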

I’m really excited by the LiDAR depth-sensing camera capabilities of the iPhone, along with its other sensors. (In general I find Apple’s sensors more useful, apparently thanks to tight calibration across device, sensor, firmware, and OS.)

I’ll be explaining more of what LiDAR is and how to use it soon, in case that went over anyone’s head. I also have a Huawei smartphone to test the Android version of this software. (Hey, I’ve got two hands! Maybe these can work together.)

And Elburz, I’m subscribed to your channel and eager to hear part two.

Also from Japan, here’s a tutorial working with TouchDesigner and the iPhone camera – in this case, just the camera. It’s beautiful and poetic and easy for any TouchDesigner user to follow, regardless of which language you speak:

But while you’re grabbing camera images, one more time:

https://1-10.github.io/zigsim/

$4? Please. Dear 1-10 devs, can I, like, take you out to dinner in Kyoto on that beautiful day when I can return to Japan? Because, wow:

– Features other than NFC Reader are available on iPad Pro running iOS 12.3.1.

– Features other than Apple Pencil are available on iPhone X and iPhone 11 running iOS 12.3.1.

– The NDI Depth function cannot be used on models without a dual camera.

– The ARKit FaceTracking function cannot be used on models without a TrueDepth camera.

– The NFC Reader function cannot be used on models without an NFC chip.

This app supports the following sensors and functions.

Note that actual availability depends on the model and OS version.

• NDI (image type: camera, depth)

• ARKit (tracking type: device, face, marker)

• Image detection (detection type: face, QR, rect, text)

• NFC

• Apple Pencil

• battery

• acceleration

• gravity from accel

• gyro

• quaternion

• compass

• atmospheric pressure

• GPS

• touch coordinate

• touch radius

• touch pressure

• beacon

• proximity monitor

• mic level (mic volume)

• remote control (headset remote control)

[Communication]

The NDI function uses the NDI protocol.

It supports UDP and TCP (client) communication over the local network.

It can also save sensor data to a local file.

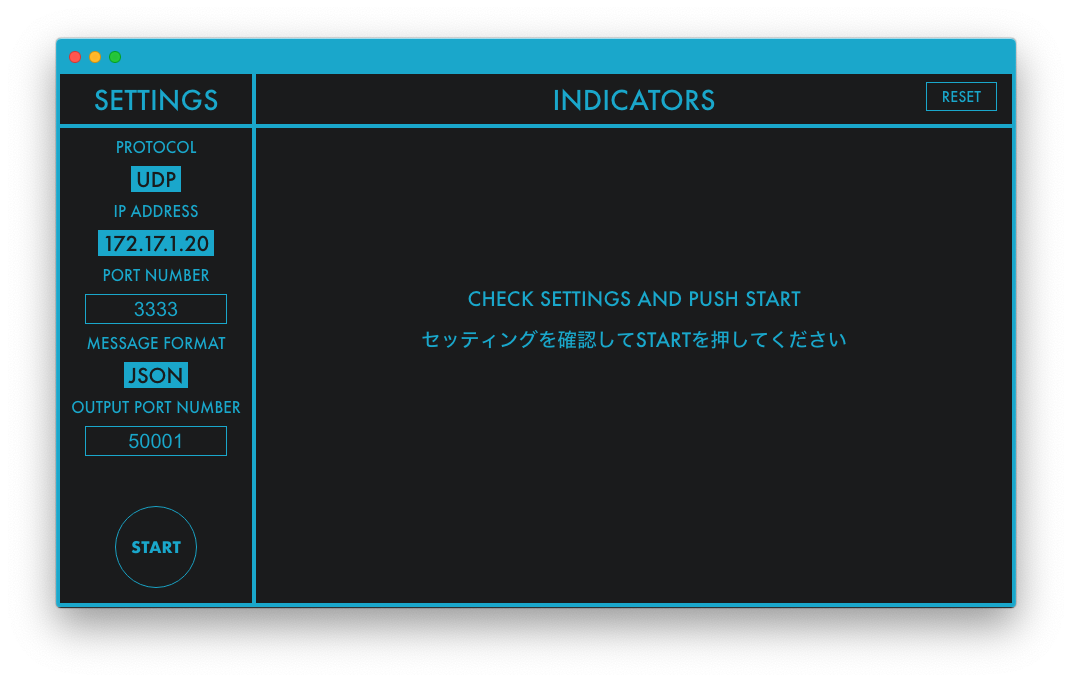

[Communication interval]

You can select from 1, 10, 30, or 60 FPS.

Note that the interval might vary depending on the sending device, the receiving device and the communication environment.
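To put those rates in perspective, the nominal gap between messages is 1000 ms divided by the frame rate: 1000 ms at 1 FPS, 100 ms at 10 FPS, about 33.3 ms at 30 FPS, and about 16.7 ms at 60 FPS. Since the real interval can drift, a small Python sketch like this one (hypothetical helpers, not part of ZIG SIM) can estimate the rate you’re actually getting from message arrival times, such as timestamps your own receiver records with `time.monotonic()`.

```python
from statistics import mean


def nominal_interval_ms(fps: int) -> float:
    """Gap between messages in milliseconds if the send rate were exact."""
    return 1000.0 / fps


def observed_fps(arrival_times: list[float]) -> float:
    """Estimate the actual message rate from arrival timestamps in seconds."""
    if len(arrival_times) < 2:
        raise ValueError("need at least two timestamps")
    # Gaps between consecutive arrivals; their average is the real interval.
    gaps = [later - earlier for earlier, later in zip(arrival_times, arrival_times[1:])]
    return 1.0 / mean(gaps)


for fps in (1, 10, 30, 60):
    print(f"{fps:>2} FPS -> {nominal_interval_ms(fps):.1f} ms")
```

Comparing `observed_fps()` against the setting you chose in the app is a quick way to tell whether your Wi-Fi or receiving machine is the bottleneck.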

[Message type]

It supports OSC (used in the music industry) and JSON (used in the web industry).
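If you go the OSC route without a library, decoding a message by hand isn’t much work. Below is a minimal Python sketch of the OSC 1.0 encoding: a null-terminated address string padded to a multiple of four bytes, a type tag string starting with a comma, then big-endian arguments. It handles only int32 (`i`), float32 (`f`), and string (`s`) arguments, and it doesn’t unpack OSC bundles (packets that begin with `#bundle`). Which addresses and types ZIG SIM actually sends isn’t specified here, so treat this as a starting point and check it against real packets.

```python
import struct


def _read_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read a null-terminated OSC string padded to a 4-byte boundary."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator plus padding so the next field starts on a multiple of 4.
    return text, (end + 4) & ~3


def parse_osc_message(data: bytes) -> tuple[str, list]:
    """Decode one OSC 1.0 message into (address, arguments).

    Assumption: the packet is a single message, not a '#bundle'.
    """
    address, offset = _read_string(data, 0)
    type_tags, offset = _read_string(data, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args = []
    for tag in type_tags[1:]:
        if tag == "i":    # 32-bit big-endian integer
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":  # 32-bit big-endian IEEE 754 float
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":  # padded OSC string
            value, offset = _read_string(data, offset)
            args.append(value)
        else:
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
    return address, args
```

You’d feed it the raw bytes from `recvfrom()` in a UDP receiver; for anything beyond quick experiments, an established OSC library (or TouchDesigner’s own OSC operators) will cover bundles and the extra types.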

ZIG SIM PRO [iOS App Store]

More hot TouchDesigner learning action – and a great place to start! (Now with video added, even if the talk was in the past.)