Digital: disposable, identical, infinitely reproducible. Recordings: static, unchanging.

Or … are they?

Icarus’ Fake Fish Distribution (FFD), a self-described “album in 1000 variations,” generates a one-of-a-kind download for each purchaser. Generative, parametric software takes the composition, by London-based musicians-slash-software engineers Ollie Bown and Sam Britton, and tailors the output so that each file is distinct.

If you’re the 437th purchaser in the limited run of 1000, in other words, you get a composition that is different from number 436 before you and number 438 after you. The process breaks two commonly understood notions about recordings: one, that digital files can’t be released as a “limited edition” in the way a tangible object can, and two, that recordings are identical copies of a fixed, pre-composed structure.

Happily, the music is evocative and adventurous, a meandering path through a soundworld of warm hums and clockwork-like buzzes and rattles, insistent rhythms and jazz-like flourishes of timbre and melody. It’s by turns moody and whimsical. The structure trickles over the surface like water, perfectly suited to the generative outline. At moments – particularly with the echoes of spoken word drifting through cracks in the texture – it recalls the work of Brian Eno. Eno’s shadow is certainly seen here, conceptually; his Generative Music release (and notions of so-called “ambient music” in general) easily predicted today’s generative experiments. But Eno was ahead of his time technically: software and digital distribution – both of files and apps – now make what was once impractical almost obvious. (See also: Xenakis, whom the composers talk about below.)

You can listen to some samples, though it’s just a taste of the larger musical environment.

Fake Fish Distribution – version 500 sampler by Icarus…

12 GBP buys you your very own MP3 (320 kbps). Details:

http://www.icarus.nu/FFD/

The creators weigh in on the project for Q Magazine:

Guest column – Electronic band Icarus on whether algorithms can be artists?

The conceptual experiment is all-encompassing. Just to prove the file is “yours,” you can even use it to earn royalties – in theory. As David Abravanel, Ableton community/social manager by day and tipster on this story, writes:

“As a sort-of justification for the price, all Fake Fish Distribution owners are entitled to 50% of the royalties should the music on that specific version ever be licensed. A very unlikely outcome, but at least it’s sticking to concept.”

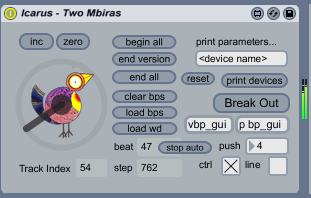

I asked Ollie and Sam to share a bit about how the mechanism of this musical machine operates. Using Ableton Live and Max for Live, each rendition is “conducted” from threads and variables into a sibling of the others. The pair talk about what that means compositionally, but also how it fits into a larger landscape of music and thought. Of course, you can also go and just experience your own download (first, or exclusively) to let the music wash over you, an experience I also find successful. But if you want to dive into the deep end as far as the theory, here we go.

CDM: How is the generative software put together? What sorts of parameters are manipulated?

Ollie: The basic plan to do the album came before any decision about how to actually realise it, and we initially thought we’d approach the whole thing from a very low level, such as scripting it all in the Beads Java library that has been a pet project of mine for some time. But although we love the creative power of working at a low level, the thought of making an entire album in this way was pretty unappealing. We looked at some of the scripting APIs that are emerging in what you might call the hacker-friendly generation of audio tools like Ardour, Audacity, and Reaper, but these also seemed like a too-convoluted way to go about it.

Even though Max for Live was in hindsight the obvious choice, it wasn’t so obvious at the time, because we weren’t sure how much top-down control it provided. (As a matter of fact, one of the hardest things turned out to be managing the most top-level part of the process: setting up a process that would continuously render out all 1000 versions of each track.) Although it was quite elementary and unstable (at the time), [Max for Live] did everything we wanted to do: control the transport, control clips, device parameters, mix parameters, the tempo … you could even select and manipulate things like MIDI elements, although we didn’t attempt that.

So we made our tracks as Live project files, as you might do for a live set (i.e., without arranging the tracks on the timeline), then set up a number of parametric controls to manipulate things in the tracks. Many of these were just effects and synth parameters, which we grouped through mappings so that one parameter might turn up the attack on a synth whilst turning down the compression attack in a compensatory way. So the parameter space was quite carefully controlled, a kind of composed object in its own right.
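The grouped, compensatory mapping Ollie describes can be pictured as one macro control fanning out to several targets. What follows is only a rough sketch of that idea, not the duo's actual Max for Live patch: the parameter names (`synth.attack_ms`, `compressor.attack_ms`), the ranges and the linear scaling are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mapping:
    """Maps a macro value in [0, 1] linearly onto one target parameter."""
    target: str      # hypothetical parameter name
    at_zero: float   # target value when the macro is 0
    at_one: float    # target value when the macro is 1

    def value(self, macro: float) -> float:
        m = min(1.0, max(0.0, macro))  # clamp to the macro's range
        return self.at_zero + m * (self.at_one - self.at_zero)


# Assumed example group: as the synth attack gets longer,
# the compressor attack gets shorter to compensate.
GROUP = [
    Mapping("synth.attack_ms", 5.0, 200.0),
    Mapping("compressor.attack_ms", 30.0, 1.0),
]


def apply_macro(macro, group=GROUP):
    """Return the value of every grouped parameter for one macro setting."""
    return {m.target: m.value(macro) for m in group}


if __name__ == "__main__":
    for macro in (0.0, 0.5, 1.0):
        print(macro, apply_macro(macro))
```

Because each target carries its own range, a single dial can push two parameters in opposite directions, which is what makes the parameter space feel like a composed object rather than a loose set of knobs.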

We also separated single tracks out into component parts so that they could be parametrically blended. For example, a kick drum pattern could be split into the 1 and 3 beats on the one hand, and a bunch of finer detail patterning on the other, so that you could glide between a slow steady pattern and a fast, more syncopated one. So loads of the actual parameterisation of the music could actually be achieved in Live without doing any programming. Likewise, for many of the parts on the track, we made many clip variations, say about 30, such as different loops of a breakbeat. The progression through those clips – quantised in Live, of course – could also be mapped to parameters.
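Both ideas in this paragraph, blending component layers and stepping through a bank of clip variations, come down to mapping a continuous parameter onto something in the Live set. Here is a minimal sketch under stated assumptions: the function names are hypothetical, the steady layer is assumed to stay at full volume while the detail layer fades in, and the clip bank is assumed to be divided evenly across the parameter range. None of this is confirmed by the interview.

```python
def clamp01(x):
    """Keep a control value inside [0, 1]."""
    return min(1.0, max(0.0, x))


def kick_layer_gains(blend):
    """Gains for the two kick layers at a given blend amount.

    blend = 0 leaves only the steady 1-and-3 pattern;
    blend = 1 adds the finer, syncopated detail at full level.
    """
    b = clamp01(blend)
    return {"steady": 1.0, "detail": b}


def clip_index(param, n_clips=30):
    """Pick one of n_clips variations from a parameter in [0, 1]."""
    p = clamp01(param)
    # int() floors for non-negative values; min() keeps p == 1.0 in range.
    return min(n_clips - 1, int(p * n_clips))


if __name__ == "__main__":
    for p in (0.0, 0.25, 0.5, 1.0):
        print(p, kick_layer_gains(p), clip_index(p))
```

In Live itself the clip change would be quantised by the host, so a function like `clip_index` only decides which clip comes next, not exactly when it starts.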

Finally, by parameterising track volumes and using diverse source material in our clips, we could ultimately parameterise the movement through high-level structures in the tracks. So we could do things like have a track start with completely different beginnings but end up in the same place. We did this in Two Mbiras, which is probably the track where we felt most like we were just naturally composing a single piece of music which just happened to be manifest in a multiplicity of forms. In that sense, this was the most successful track. Some of the other tracks involved more of an iterative approach where we didn’t have a clear plan for how to parameterise the track to begin with, but that fits with our natural approach to making tracks. At one point, we wondered if we could just drop a bank of 1000 different sound effects files into an Ableton track, to load as clips. To our glee, Live just crunched for a couple of seconds and then they were there, ready to be parametrically triggered. So each version of the track MD Skillz could end on a different sound effect.

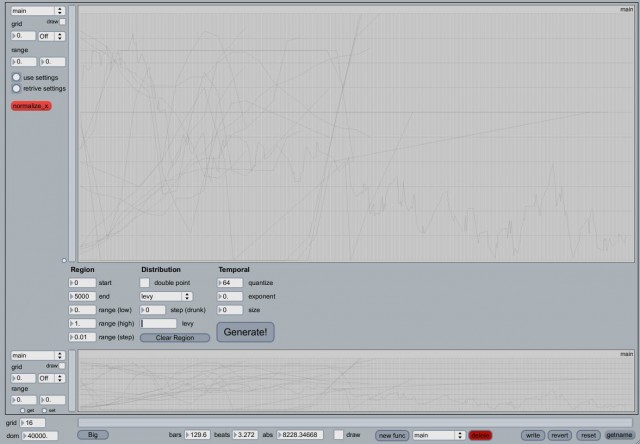

The Max software consisted of a generic parametric music manager and track-specific patches that farmed out parametric control to the elements that we’d defined in Live. The manager device centred around a master “version dial”, a kind of second dimension (along with time), so you could think of the compositional process as one of composing each track in time-version space.

We used Emmanuel Jourdan’s ej.function object, which is a powerful JavaScript alternative to the built-in Max breakpoint function object, and wrote some of our own custom function generators and function interpolation tools to interact with it. Using the ej.function object, we composed many alternative timelines to control the parameters, and then used the version dial to interpolate smoothly between these timelines, resulting in a very gentle transition between versions. I.e., versions 245 and 246 are going to be imperceptibly different, but versions 124 and 875 will be notably different (we quickly broke from our own rule and started to introduce non-smooth number sequences into some of the tracks, so for example in Colour Field two adjacent versions will actually have quite different structures). We spent some time making it well integrated into Live so that once we really got into the compositional process it would work smoothly and be generally applicable to all of the different ideas we wanted to throw at it. That said, it’s a few steps of refinement from being releasable software.
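To make "time-version space" concrete, here is a small sketch of interpolating between alternative breakpoint timelines with a version dial. It is written in Python rather than Max, it does not reproduce ej.function or the duo's custom tools, and the anchor versions, breakpoint data and linear interpolation are assumptions chosen for illustration.

```python
from bisect import bisect_right


def eval_breakpoints(points, t):
    """Piecewise-linear value of sorted (time, value) breakpoints at time t."""
    times = [p[0] for p in points]
    if t <= times[0]:
        return points[0][1]
    if t >= times[-1]:
        return points[-1][1]
    i = bisect_right(times, t)          # times[i - 1] <= t < times[i]
    (t0, v0), (t1, v1) = points[i - 1], points[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def value_at(timelines, version, t):
    """Parameter value at time t for a given version.

    timelines is a list of (anchor_version, breakpoints) sorted by anchor.
    Versions between two anchors get a linear mix of the two timelines.
    """
    anchors = [a for a, _ in timelines]
    if version <= anchors[0]:
        return eval_breakpoints(timelines[0][1], t)
    if version >= anchors[-1]:
        return eval_breakpoints(timelines[-1][1], t)
    j = bisect_right(anchors, version)
    (a0, bp0), (a1, bp1) = timelines[j - 1], timelines[j]
    w = (version - a0) / (a1 - a0)      # 0 at the lower anchor, 1 at the upper
    x0, x1 = eval_breakpoints(bp0, t), eval_breakpoints(bp1, t)
    return x0 + w * (x1 - x0)


# Hypothetical data: one parameter, two composed timelines over 60 seconds.
TIMELINES = [
    (1, [(0.0, 0.0), (60.0, 1.0)]),      # version 1 ramps up
    (1000, [(0.0, 1.0), (60.0, 0.0)]),   # version 1000 ramps down
]

if __name__ == "__main__":
    for v in (245, 246, 124, 875):
        print(v, round(value_at(TIMELINES, v, 0.0), 4))
```

With only two anchors, neighbouring versions differ by about a thousandth of the parameter range at any moment, while distant versions diverge widely. Adding more anchor timelines, or shuffling which version maps to which dial position, would give the less smooth behaviour mentioned for Colour Field.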

Pictured: the master controller device, very minimal, just a version dial and a few debug controls. Double clicking on bp_gui leads to the other figure, a multitrack timeline editor, with generative tools for automatically generating timeline data using different probability distributions.

CDM: How did you approach this piece compositionally, both in terms of those elements that do get generated, and the musical conception as a whole?

Sam: Since 2005, we had been working a lot in the context of performance, not only as Icarus, but with improvising musicians through our label / collective Not Applicable. This is reflected in the records we put out both as Icarus and individually during that time, which increasingly used generative and algorithmic compositional techniques as structural catalysts for live improvisations. (As Icarus: Carnivalesque, Sylt and All Is For The Best In The Best Of All Possible Worlds. Individually: Rubik Compression Vero, Five Loose Plans, Nowhere, Erase, Chaleur and The Resurfacing Of An Atavistic Trait). Our performance software was made using Max/MSP and Beads and we started by crafting various low level tools that would loop and sequence audio files in various different ways, giving us control parameters that were devised around musical seeds we were interested in exploring.

In many respects, our approach was very similar to, and partly inspired by, Xenakis’ writings in Formalised Music, although the context is obviously very different. These low-level tools were augmented by various hand-crafted MSP processing tools which used generated trajectories and audio analysis as a method of automating the various parameters that affected the sounds themselves, the logic being that an FX unit as a manipulator of sound is in some way loosely coupled to the musical scenario it is contextualised in. In both cases above, the idea was to step back from performance ‘knob twiddling’ by using the computer to simulate specific types of behaviour that would control these processes directly (hence the reason why we have never used controllers in performance).

Our search for different methods of coupling our increasing parameter space led us to develop various higher-level control strategies at Goldsmiths and IRCAM respectively, culminating in autonomous performance systems built in the context of the Live Algorithms for Music Group at Goldsmiths College. The autonomous systems we developed used a battery of different techniques to effect control, from CTRNNs and RBNs to analysis-based sound mosaicing, psychoacoustic mapping and pattern recognition. This work resulted in us being commissioned to put together a suite of pieces for autonomous software in collaboration with improvising musicians Tom Arthurs and Lothar Ohlmeier called “Long Division” for the North Sea Jazz Festival in 2010. The challenge of putting together a 45-minute programme of autonomous music really forced us to think more strategically about how it was possible to structure musical elements within a defined software framework and how they could vary not only within each individual piece, but also from piece to piece.

The most obvious inspiration for how we might do this ultimately came from reflecting on what it is we do when we perform live as Icarus. The experience of working up entirely new live material and touring it without formulating it as specific tracks or compositions proved to be an ideal prototype not only for Long Division, but also ultimately for FFD. Here, in a similar sense to the work of John Cage, large-scale structure and form became a contextually-flexible entity, which meant that for us it became to a far greater extent derived from the idiosyncrasies of the performance software we developed and keyed in by our own specific way of listening out for certain musical structures and responding to them in either a complementary or deliberately obstructive fashion (or perhaps even not at all). Creating these two pieces (‘Long Division’ and ‘All Is For The Best In The Best Of All Possible Worlds’) gave us the conviction that we could devise musical structures that were both detailed enough and robust enough to benefit positively from some level of automated control.

Therefore, when we came to start working on FFD, the main question we had to ask ourselves was: within the music-making practices we had already been working with, what were the tolerances for automation within which we were still ultimately in control of, and ultimately composing, the music we were creating? In the end, the framework we set up was comparatively restrained: the generative aspect of each track was always notated as a performance via a breakpoint function and therefore able to be rationalised by us; the variation between different versions of the same track was done using interpolation and is completely predictable and incremental; and finally, the entire space of variation is bounded to 1000 versions, meaning that the trajectories of the variation never extend into some extreme and unrealisable space.

More notes on the album:

Web: http://www.icarus.nu

RSS: feed://www.icarus.nu/wp/feed/

Last.FM: http://www.last.fm/music/Icarus

Discogs: http://www.discogs.com/artist/Icarus+(2)

SoundCloud: http://soundcloud.com/icaruselectronic

Twitter: http://twitter.com/#!/birdy_electric

Myspace: http://www.myspace.com/icaruselectronic

Facebook: http://www.facebook.com/pages/Icarus/132324596558

CREDITS

Music, Software, Scripting – Icarus (Ollie Bown and Sam Britton)

Mastering – Will Worsley, Trouble Studios

Artwork – Harrison Graphic Design

Icarus gratefully thank the following for their support of the FFD project:

The PRSF Foundation (UK)

STEIM (Netherlands)

Ableton (Germany)

The University of Sydney (Australia)

Emmanuel Jourdan (France)