Ed. Our friend Momo the Monster (aka Surya Buchwald) joins us for a guest column with a proposal: what if messages sent between music and visual software could be expressive? His idea is simple but powerful: musical semantics for live visual messages, starting with something as basic as knowing when there’s a bass drum hit. Momo introduces the concepts here; more audiovisual examples are coming shortly, so feel free to hit him up with questions. -PK

Last October, I was approached by the management of American Werewolf about creating some custom visuals for their show. This was the first time I’d been contacted to work directly with an artist for an upcoming show with a good lead time and some budget for creating something from scratch. That’s when I started developing a system to abstract their songs into more uniform data so I could build interchangeable synced visuals.

The video you see above shows the granularity of the system. For this little demo track, we can read the incoming kick drum, snare, hi-hat, bassline, and a special parameter I call intensity. First, we see a very straightforward example, where each instrument triggers its own dedicated animation. Then I change scenes to something I designed for an American Werewolf song, and we can see the modularity of the system in action.

I’d like to explain this system as it exists so far, and get some feedback. I’ll break it down into three parts: the Standard, which is a set of terms I use to abstract the music; the Protocol, which is how I mapped that language onto OSC; and the Implementation, which is the set of audio and video programs I used to make things happen. It’s important to view these separately, because the Implementation, for example, could be swapped for another system without affecting the Standard or the Protocol.

The Standard

The idea here is to go a step beyond MIDI and make use of OSC’s advantages. American Werewolf are an Electro House act, and as such there are many things that will be the same for each song. There will likely be a prominent Kick and Snare drum, a Bass line, some Vocals, and perhaps a Lead.

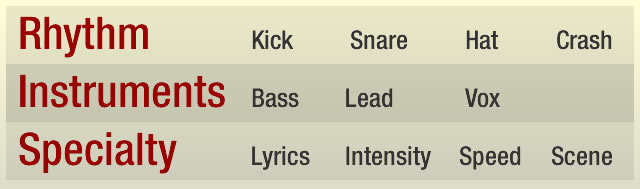

With this in mind, I started breaking it down thus:

Rhythm: Kick, Snare, Hat, Crash (or other large accent)

General Instruments: Bass, Lead, Vox

Specialty: Lyrics, Intensity, Speed, Scene
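To make the vocabulary concrete, here is a minimal Python sketch that stores the three groups as plain data. The term names come from the list above; the `STANDARD` dict and the `category_of` helper are hypothetical illustrations, not part of Momo's system.

```python
from typing import Dict, Optional, Tuple

# The Standard's vocabulary, grouped as in the list above.
# The dict layout itself is a hypothetical choice for illustration.
STANDARD: Dict[str, Tuple[str, ...]] = {
    "rhythm": ("kick", "snare", "hat", "crash"),
    "instruments": ("bass", "lead", "vox"),
    "specialty": ("lyrics", "intensity", "speed", "scene"),
}


def category_of(term: str) -> Optional[str]:
    """Return the group a term belongs to, or None if it isn't in the Standard."""
    for category, terms in STANDARD.items():
        if term in terms:
            return category
    return None


print(category_of("snare"))      # rhythm
print(category_of("intensity"))  # specialty
print(category_of("cowbell"))    # None
```

Keeping the vocabulary as data means a visual can check whether a song provides the terms it reacts to before it is loaded.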

Thinking this way enabled me to design visuals that reacted to a subset of musical data that was present in most songs. I could always create custom bits beyond that, but this basic framework lets me try visuals with different songs.

The Protocol

OSC seemed a natural fit. I wanted to keep the mapping simple, so I wound up with:

/instrument float

A kick drum played at half velocity would be /kick 0.5, for example. The argument is always a floating-point number between 0 and 1, and the address is simply the instrument’s natural name. When there is more than one of an instrument, like two different lead instruments (perhaps a synth and a guitar), the first is /lead/1, the second is /lead/2, and messages sent to /lead hit both channels.
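Here is a minimal sketch of those addressing rules, assuming a receiver that knows its channels up front. The channel list, the velocity/127 scaling, and the helper names (`velocity_to_float`, `resolve`, `deliver`) are assumptions for illustration; only the address layout and the 0 to 1 range come from the text above.

```python
from typing import Dict, List

# Hypothetical channels a visual app is listening to.
CHANNELS: List[str] = ["/kick", "/snare", "/lead/1", "/lead/2"]


def clamp01(value: float) -> float:
    """Keep an argument inside the protocol's 0..1 range."""
    return max(0.0, min(1.0, value))


def velocity_to_float(velocity: int) -> float:
    """Scale a MIDI velocity (0-127) to 0..1 (assumed mapping)."""
    return clamp01(velocity / 127.0)


def resolve(address: str, channels: List[str]) -> List[str]:
    """Return every channel an address reaches.

    An exact match hits that channel; a parent address such as /lead
    also hits its numbered children /lead/1, /lead/2, and so on.
    """
    prefix = address.rstrip("/") + "/"
    return [c for c in channels if c == address or c.startswith(prefix)]


def deliver(address: str, value: float) -> Dict[str, float]:
    """Map each reached channel to the (clamped) argument it receives."""
    return {c: clamp01(value) for c in resolve(address, CHANNELS)}


print(deliver("/kick", velocity_to_float(127)))  # {'/kick': 1.0}
print(deliver("/lead", 0.5))    # {'/lead/1': 0.5, '/lead/2': 0.5}
print(deliver("/lead/2", 0.5))  # {'/lead/2': 0.5}
```

Matching on the "/lead/" prefix rather than "/lead" keeps a hypothetical "/leader" address from being caught by /lead.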

Lyrics are especially useful this way. You pass along:

/lyrics “We Want Your Soul”

And your VJ software can read the actual words and integrate them into a dynamic visual.
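For the curious, this is roughly what such messages look like on the wire. The sketch below follows the OSC 1.0 encoding rules (null-terminated strings padded to a multiple of 4 bytes, a type tag string starting with a comma, big-endian 32-bit floats). It supports only the float and string arguments this protocol needs, and `encode_message` is a hypothetical name, not a library function.

```python
import struct


def _pad(data: bytes) -> bytes:
    """OSC strings end with a null byte and are padded to a multiple of 4."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_message(address: str, *args) -> bytes:
    """Encode an OSC message with float ('f') and string ('s') arguments only."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # big-endian float32
        elif isinstance(arg, str):
            tags += "s"
            payload += _pad(arg.encode("utf-8"))
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


kick = encode_message("/kick", 0.5)
lyrics = encode_message("/lyrics", "We Want Your Soul")
print(len(kick), len(lyrics))  # 16 32
```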

I also wound up using the abstraction ‘Intensity’ when I wanted to modify a variety of visuals at once. This is a human-readable aspect of a song: while you could possibly design a method to compare volume peaks, beat changes, frequency ramps, etc., it’s easiest just to send out data that reads ‘the song just jumped from an intensity of 0.5 to 0.7, and now it’s ramping up to 0.9.’ Then all of the visuals in your scene react as appropriate: getting bigger, more vibrant, shaking more, morphing, etc.
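Here is a sketch of both halves of that idea, under stated assumptions: the sender turns "ramping up to 0.9" into a series of /intensity values, and a hypothetical `Visual` class maps each value onto size and shake. The linear ramp, the step count, and the size and shake formulas are arbitrary choices for illustration.

```python
from typing import List


def ramp(start: float, end: float, steps: int) -> List[float]:
    """Linearly interpolate from start to end (inclusive) in `steps` values."""
    if steps < 2:
        return [end]
    return [start + (end - start) * i / (steps - 1) for i in range(steps)]


class Visual:
    """Hypothetical visual whose size and shake follow the song's intensity."""

    def __init__(self, base_size: float) -> None:
        self.base_size = base_size
        self.size = base_size
        self.shake = 0.0

    def on_intensity(self, value: float) -> None:
        # Bigger and shakier as intensity rises; the curves are arbitrary.
        self.size = self.base_size * (1.0 + value)
        self.shake = value ** 2


v = Visual(base_size=100.0)
v.on_intensity(0.5)
print(v.size, v.shake)  # 150.0 0.25

# A jump to 0.7 is one message; the ramp to 0.9 is a series of them.
for value in ramp(0.7, 0.9, 5):
    v.on_intensity(value)
```

Every visual in a scene can subscribe to the same /intensity stream and interpret it in its own way, which is what makes the single abstraction useful.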

The ‘scene’ command is very handy for narrative visuals. Some songs I designed have a large variety of scenes, which all react to the same musical data in different ways, and it’s important that they trigger whenever the music reaches a certain point. This command could also be used generically, however, by following existing song conventions:

- /scene intro

- /scene verse

- /scene chorus

- /scene verse

- /scene chorus

- /scene bridge

- /scene outro
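On the receiving end, a scene change can be as simple as a lookup table. The `SceneManager` class below is a hypothetical sketch; ignoring unknown scene names (rather than raising an error) is an assumption, chosen so an unexpected name leaves the current scene running.

```python
from typing import Callable, Dict, List, Optional


class SceneManager:
    """Hypothetical receiver that swaps visual scenes on /scene messages."""

    def __init__(self, scenes: Dict[str, Callable[[], None]]) -> None:
        self.scenes = scenes
        self.current: Optional[str] = None
        self.history: List[str] = []

    def on_scene(self, name: str) -> None:
        if name not in self.scenes:
            return  # unknown name: keep the current scene running
        self.current = name
        self.history.append(name)
        self.scenes[name]()  # start the new scene


manager = SceneManager({
    "intro": lambda: print("fade in"),
    "verse": lambda: print("calm visuals"),
    "chorus": lambda: print("full blast"),
})
for section in ["intro", "verse", "chorus", "verse", "chorus"]:
    manager.on_scene(section)
print(manager.current)  # chorus
```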

The Implementation

So theory is great, but it’s just theory. Here is how this all came together for me:

Ableton Live MIDI -> Max4Live Plugins (convert MIDI to OSC) -> Storyteller custom AIR Application.

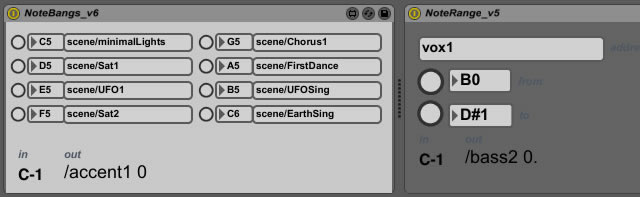

I developed a number of custom Max4Live plugins that would take MIDI information from within Live and turn it into logical music data:

- NoteBangs: Turns a MIDI note into an OSC Message, converting velocity to a floating-point argument

- NoteRange: Turns a range of notes into an OSC Message, converting the position in the range into the first argument, and the velocity into the second

- CC: Turns a MIDI CC into an OSC Message, converting the ‘amount’ into a floating-point argument

- Lyrics: Takes a text file and a MIDI note range, triggers lines from the text file as OSC Messages based on the incoming MIDI note, with a velocity of ‘0’ triggering a /lyrics clear message

- OSCOut: Takes all the messages coming from the various plugins and sends them out to an OSC receiver (in this case, set to localhost)
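The conversions these plugins perform can be sketched in a few lines of Python. This is not the Max4Live code: the divide-by-127 scaling, the `(address, args)` tuple format, and representing the lyrics clear message as `/lyrics` with a `"clear"` argument are all assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Message = Tuple[str, tuple]  # (OSC address, arguments)


def note_bang(address: str, velocity: int) -> Message:
    """NoteBangs: one MIDI note -> one message, velocity scaled to 0..1."""
    return (address, (velocity / 127.0,))


def note_range(address: str, note: int, low: int, high: int,
               velocity: int) -> Optional[Message]:
    """NoteRange: note position within [low, high] is arg 1, velocity arg 2."""
    if not low <= note <= high:
        return None
    position = (note - low) / (high - low) if high > low else 0.0
    return (address, (position, velocity / 127.0))


def cc(address: str, amount: int) -> Message:
    """CC: a controller amount (0-127) scaled to 0..1."""
    return (address, (amount / 127.0,))


def lyric(lines: List[str], note: int, low: int,
          velocity: int) -> Optional[Message]:
    """Lyrics: the note's offset from `low` picks a line; velocity 0 clears."""
    if velocity == 0:
        return ("/lyrics", ("clear",))  # assumed form of the clear message
    index = note - low
    if 0 <= index < len(lines):
        return ("/lyrics", (lines[index],))
    return None


print(note_range("/bass", 48, 36, 60, 127))  # ('/bass', (0.5, 1.0))
```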

These plugins are Max MIDI Effects, so they take MIDI on their channel and convert it in real time, passing the data along in the chain. For recorded MIDI data playing from Live, the logic is straightforward. There are many cases, however, when MIDI parts need to be rendered into audio clips for performance reasons (too many stacked effects), in which case the MIDI clip can be grouped with the audio clip so both launch simultaneously.

A big catch here, of course, is that Max4Live currently requires a substantial investment beyond just the purchase of Live. For the performance with American Werewolf, I had them just send me the MIDI notes, and I ran Live with the custom plugins on my end. These plugins could easily be recreated as standalone Max patches, though one of the huge conveniences of the method described above is that all the mapping is saved within the Live Project.

For the visuals, I wound up writing a basic VJ playback application in Adobe AIR, which can read data from UDP sockets as of AIR 2.0. The visuals are all written in ActionScript, using Flash libraries for the graphics, and the application reads an XML file which maps the music data (/kick, /snare) to methods in the Flash files (triggerLights(), blowUpAndSizzle()). I’ve got a modification to this system in the works which will allow the Flash files to play back in any supported software (VDMX, Modul8, Resolume, GrandVJ, etc.) while still receiving method calls from the master app. Realistically, that project is probably about 6 months away from launch.
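As a rough illustration of the XML mapping idea, here is a Python sketch. The `<map message="..." method="..."/>` schema is invented for this example (the article doesn't show the real file format), and the `Scene` class stands in for a Flash visual, reusing the method names mentioned above.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Hypothetical mapping file: message address -> method name.
MAPPING_XML = """
<mappings>
  <map message="/kick" method="triggerLights"/>
  <map message="/snare" method="blowUpAndSizzle"/>
</mappings>
"""


def load_mapping(xml_text: str) -> Dict[str, List[str]]:
    """Parse message -> method pairs; one message may map to several methods."""
    mapping: Dict[str, List[str]] = {}
    for node in ET.fromstring(xml_text.strip()).iter("map"):
        message, method = node.get("message"), node.get("method")
        if message and method:
            mapping.setdefault(message, []).append(method)
    return mapping


class Scene:
    """Stand-in for a Flash visual; it just records the calls it receives."""

    def __init__(self) -> None:
        self.calls: List[str] = []

    def triggerLights(self, value: float) -> None:
        self.calls.append(f"triggerLights({value})")

    def blowUpAndSizzle(self, value: float) -> None:
        self.calls.append(f"blowUpAndSizzle({value})")


def dispatch(mapping: Dict[str, List[str]], target: object,
             address: str, value: float) -> None:
    """Call every method mapped to this address; unmapped addresses are ignored."""
    for method_name in mapping.get(address, []):
        getattr(target, method_name)(value)


scene = Scene()
mapping = load_mapping(MAPPING_XML)
dispatch(mapping, scene, "/kick", 0.5)
dispatch(mapping, scene, "/snare", 1.0)
dispatch(mapping, scene, "/hat", 0.3)  # not mapped, so nothing happens
print(scene.calls)  # ['triggerLights(0.5)', 'blowUpAndSizzle(1.0)']
```

Keeping the mapping in a data file means the same visual can be rewired to a different song without touching its code.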

Looking Ahead

I want to stress that the implementation above is just that: one implementation. You could run the music from Ableton Live and have it control visuals in OpenFrameworks, Processing, Quartz Composer, what have you. You could run the music from Max/MSP or Logic or Cubase if you could figure out how to do the MIDI-to-OSC translation. I’d love to get feedback on this system as I’m developing it, because I think it could be useful for others, and the more of us who use a common language, the easier collaboration will be in the long run.
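To show how little a receiving environment needs, here is a minimal Python sketch of an OSC listener. It decodes only float and string arguments, ignores OSC bundles, and listens on port 9000, which is an assumed value; the article only says the messages go to localhost.

```python
import socket
import struct
from typing import List, Tuple, Union

Arg = Union[float, str]


def _read_string(data: bytes, offset: int) -> Tuple[str, int]:
    """Read a null-terminated, 4-byte-padded OSC string starting at offset."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("utf-8")
    # Skip the terminator plus padding up to the next multiple of 4.
    return text, end + 1 + (-(end + 1) % 4)


def decode_message(data: bytes) -> Tuple[str, List[Arg]]:
    """Decode one OSC message with float ('f') and string ('s') arguments."""
    address, offset = _read_string(data, 0)
    tags, offset = _read_string(data, offset)
    if not tags.startswith(","):
        raise ValueError("missing OSC type tag string")
    args: List[Arg] = []
    for tag in tags[1:]:
        if tag == "f":
            (value,) = struct.unpack_from(">f", data, offset)
            args.append(value)
            offset += 4
        elif tag == "s":
            text, offset = _read_string(data, offset)
            args.append(text)
        else:
            raise ValueError(f"unsupported type tag: {tag}")
    return address, args


def listen(port: int = 9000) -> None:
    """Print every message arriving on localhost:port (port is an assumption)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("127.0.0.1", port))
        while True:
            data, _ = sock.recvfrom(4096)
            print(*decode_message(data))


print(decode_message(b"/kick\x00\x00\x00,f\x00\x00\x3f\x00\x00\x00"))  # ('/kick', [0.5])
# listen()  # blocks forever; uncomment to watch messages arrive
```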

Imagine creating visuals that react to a Kick Drum or Bassline and knowing that they’ll sync effortlessly to the variety of acts you’ll be playing with over the next year. It could happen.