The gaming industry has made its bet, and it’s that touchscreens go better with tactile controls. Might digital musicians reach the same conclusion?

A funny thing has happened on the way to the touch era. The vision of a device like the iPad is minimalist to the extreme: an uninterrupted, impossibly slim metal slate, as impenetrable as some found alien sci-fi object. The notion is that by reducing physical controls, the software itself comes to the fore. It’s beautiful conceptually … and then you find yourself tapping and stroking a piece of undifferentiated glass. For navigating interfaces – and even, I’d argue, for exploring sound design and composition – it works brilliantly. But for live digital performance (what game lovers call “gaming”), and for anything that wants tactile feedback, it can be imprecise, unsatisfying, or both.

Watching this shake out as a design problem is fascinating, especially from the perspective of music. Digital musicians have been exploring alternative interfaces since before it was cool. Given the ability to make any sound we can imagine, the question of how to design an interface around sound is compositional, philosophical, essential.

Whatever winds up working in the marketplace, there are some fascinating ideas for combining touch with tactile. Since both are good at certain tasks, why not do both?

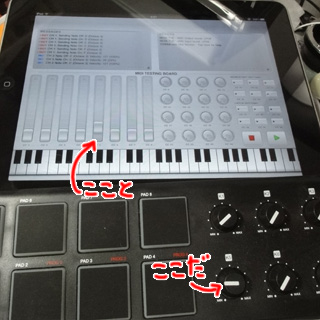

We’ve seen several examples among musicians and researchers exploring how to augment the touchscreen with physical input:

Michael Knuepfel’s research at NYU’s ITP program, in the video at top, investigates adding physical inputs to the touchscreen. See: Extending the Touchscreen.

We saw that kind of extensibility in an iPad dock concept by Livid Instruments.

Two other docks skip additional tangible controls but focus on I/O extensibility: an as-yet-unreleased “pro” dock by Alesis and, most recently, a DIY dock by circuit-bending pioneer Reed Ghazala.

Now, game vendors are moving in the same direction – even with prototypes that look quite a lot like the research project above. (Sometimes, arriving at the obvious conclusion is necessary for a great design.)

Sony’s PlayStation Vita, successor to the PSP handheld game platform, augments touch input with tactile controls in much the same way Michael Knuepfel’s work does. Notably, it also proposes how these inputs can coexist in a form factor that’s larger than a phone but smaller than a tablet, sized roughly to a comfortable span between your two hands. (Microsoft and Apple each unveiled built-in split keyboards, in Windows 8 and iOS 5 respectively. The era of thumb ergonomics is now fully underway.)

Nintendo’s Wii U controller combines many sensing capabilities in one device. Like Sony’s effort, its centerpiece is the combination of an interactive touch display with analog controls. But true to its Wii heritage, Nintendo is packing in other sensing technology, too. The same is true of the Xbox 360 in the Kinect era, though its evolution has been more piecemeal. Kinect is really a bundle of sensors (a microphone array, a color camera, and an infrared depth sensor) paired with software for motion analysis and even speech recognition. And while Kinect is touchless, the conventional gamepad still plays a role.

What’s the relevance of all this evolution to music? Digital music’s demands parallel those of gaming: precision, accessibility, scalability from beginners to hardcore experts, and real-time interaction. Music research has also often been at the forefront of experiments in translating sensor data into expression. And since musical practice is roughly as old in human evolution as language, if not older, it offers a key glimpse of how ubiquitous interfaces can become meaningful.

Let me put that another way: the stuff game companies are doing now looks a heck of a lot like what computer musicians have been doing for years.

While much of the acclaim for platforms like the iPad has focused on their transparency and unadorned interfaces – and while I believe those are valuable concepts – bundles of capabilities for interacting with the world can be powerful, too. That means efforts like Apple’s addition of USB MIDI connectivity to the iPad, or Google’s nascent work to standardize USB host mode and open hardware development (based on Arduino), take on new meaning. Add connectivity via Bluetooth and Wi-Fi, and it may be that we only really see what these platforms can do when, like the PC, they start getting sociable with a range of other gear.

This could also mean that communities like the music community have a chance to prove that the “post-PC era” is a little different from how it’s been described in the mainstream press, and perhaps less of a radical departure. The “post-PC era,” we’re told, is less about the device acting as a hub for lots of hardware. But as people look for tactile feedback, some of the coolest applications of these platforms may lie not in mainstream use as “consumption” devices, but at the fringe.

I’ve just come from the launch of the Samsung Galaxy Tab 10.1 in New York. You’re not missing much; there were a handful of people snapping up the tablets. (I think the 10.1, and a few other Honeycomb-based tablets, do have a bright future, though their growth may be a bit slow at first as developers get their hands on them and give people a reason to buy them.)

What was most compelling to people at the launch, though, was a planned appearance by pop star Ne-Yo (at least according to some staffers to whom I spoke).

But the connection between a pop star and a tablet launch was tenuous at best. It may be only when devices like these tablets become more viable for musicians onstage that the connection starts to make sense. And that may mean Apple, Google, and Android vendors alike need to think more aggressively about the larger ecosystem and hardware applications. Remember all those futuristic promises from Apple about hardware accessories? Right now, the most significant accessory is the Square payment add-on, and it relies on a hack to work through the audio jack. Both Apple and Google can do more to open up hardware development.

It’s all well and good for tablets to be “post-PC” devices: to be different from PCs, to be better. But they may also need some of the openness to other gadgets that made the PC age so revolutionary.