Get ready: from one more-than-human musical cyborg, a robotic horde of beatjazz artists.

Onyx Ashanti isn’t satisfied just augmenting his own body and musical expression with 3D-printed, sensor-laden prostheses. He’s extending that solo performance with bots that crawl around and gesture for feedback, then – inspired by the organic beauty of fractal geometry – binding performers together with his system in a network of nodes.

Just don’t call it a jam session. Call them patternists.

If this sounds crazy, it is: crazy in just the way we like. But amidst this hyper-futuristic vision of performance, Onyx also writes for CDM the story of how he came to use the free, open source patching tool Pure Data for his music. He waxes poetic about his frustration with sync and live “jams,” and what he wanted from networking and playing with other humans. (Really – humans.) And in the end, some of that “jazz” in beatjazz makes its way back. I think the technical and artistic concerns will sound familiar – even as robo-spiders crawl around your floor extending your Wi-Fi. Here’s Onyx with a story that’s part narrative, part manifesto. It comes as he’s in the midst of a crowd-funded campaign to get the idea going, as development of the enabling technologies proceeds at a furious pace.

Get ready to enter the Sonic Fractal Matrix. -PK

Making the Leap to All Pd

It was at a Pd convention in Nantes, France, in April 2012. I was one of the presenters, part of a lineup that included Pd all-stars like Katja Vetter, Andy Farnell (Obiwannabe), and even [Pd and Max creator] Miller Puckette himself. I wanted to present my idea properly in front of these people. My performance system at the time consisted of the beatjazz controller, connected wirelessly to Pure Data as a control interface for my VST rack: Native Instruments Kore 2 (discontinued), NI’s Guitar Rig 2 for looping, and a few choice VST synths, including Minimonsta, [NI] Pro-53 and [NI] FM7 for that “chordpad” preset I couldn’t live without at the time. There were workshops and presentations during the day and performances at night. It’s not often that I get to hear a whole night of Pd-based performances, so I was excited.

One thing that was immediately clear as I listened to the performances was that every person onstage was using Pd as their sole source of sound. The sounds were so varied and interesting; some were rough and jagged and others were thin and textured and others were precise and methodical. It was inspiring. After seeing two or three of these performances, I began to feel like I was cheating somehow. My rig consisted of VST synths. Everyone else’s rig was self-built or at least self-actuated in Pure Data. I allowed this feeling to spur me into digging deeper into Pd, past the belief that I couldn’t grasp the DSP necessary to create my own sounds.

The following July, I accidentally destroyed my hard drive, so it got replaced with an SSD, and when reinstalling software, the only music software I loaded was Pd-extended 0.43.1. There was a basic system concept already, but I had avoided interacting with it because the VST-based system “just worked.” Two and a half months of development commenced, s-l-o-w-l-y, especially as I tried to wrap my head around the concepts of manipulating signals as opposed to messages. Signals are “quantum mechanics” to messages’ “calculus.” [Ed.: I don’t know if all patchers and DSP coders would agree with that one, but at the very least working with signals adds a level of abstraction and complexity.] Messages were easy to configure into logic systems, but signals were a different beast. Many things were not working together: the button presses were prone to “sticking,” the sounds were very linear (i.e., straight lines from lowest to highest for controlling things), and sometimes the system would just shut off or freeze for no reason, amongst other things.

Transformative Transform

The bright point came in September of that year in the form of a new type of channel (element) for the beatjazz system. I added a “Transform” element that would take all seven loopers and flatten them down to a file I could transform by way of gestural control of a granular synth engine and a filter array, while simultaneously initializing the previous seven looper states.

On this day, the Transform Element had a simple pitch-shifter patch in it. I was able to “play” my arrangement in a different way. From that point, the Transform channel has become the new signature sound of the system itself. I might even say that the Transform idea has become the point. The system is no longer restrained by tempo or key. It could go in any direction at any time. Previously, I was completely restrained by both the starting tempo and the harmonic range of the loop I was building on. Now, there was no such restraint. The expression could go in any direction, instantly.

Into Fractals

There was a need to contextualize this capability. I needed something that could put this concept into a musically-relevant frame. I came across Benoit B. Mandelbrot who, in the 70s, championed a new branch of mathematics called Fractals. He proposed an algorithm of division and self similarity that [he argued] formed a basis for most naturally-occurring systems like trees and the human nervous system. Those algorithms are used extensively throughout the computer industry to manage complex systems and as the basis for some CGI in movies. At first, I thought about directly interpreting his equation into a Pd patch, but, besides the fact that I had no idea how to do that, it also felt like that approach was too “linear” for what I was seeking. But the idea of fractals imposed itself on the idea of this system: create a system that is an origin point for sonic exploration.

The fractal construct that fit most readily was that of a snowflake. That a structure so small could express itself in so many ways with such a limited rule set was interesting as a thought project. Imagine being at the center of a snowflake-like structure, and the sonic intent can go in the direction of any of its “branches.” Each of these branches consisted of a singular sonic element, like drums, bass, voice, etc.; the focus then became developing each branch logically, so as to feed the Transform process more texturally-rich source material.

The Transform process has now evolved to a point where it is a permanent and evolving part of the sound. It gains more expression vectors daily now. Transforming repurposes the performance audio as raw materials for the next sonic concept, endlessly, or until the batteries die, whichever comes first. It is so crazy and so obvious at the same time. Playing it is exciting in a way I haven’t experienced in years. The permutations of sound in between sample grains are so abstract and different from one another that sometimes I stop in mid set and just laugh at the audacity of the system allowing such a sound to come out of the speakers. I haven’t had this much fun in a long time.

(fast forward to 3:46 to see what transforming looks and sounds like currently; will play from that location automatically)

As such, I find that my desire to “jam”, in the traditional sense of the word, has all but disappeared. I used to make a living playing with DJs and DJing and playing simultaneously. I have tried MIDI sync, which, when it does work, locks the systems too directly and in too linear a fashion; only one person is master of the tempo. Changing this in performance, dynamically, had been a pain in the ass for me. Then, what about my beloved new Transform ability? Once you can “flip” everything into a completely new unpredictable state, controllably, you never want to not do it again.

Beyond Jamming: Synchronized Patternists

So, a few months ago I started playing with an idea, mainly for all the guys that already own one of the beatjazz systems; a simple synchronization system that would allow our systems to synchronize so we could play together. This system was more dynamic but still did not have an idea construct for Transforming that “fit”. I could squeeze the idea in; they could transform or I could or we both could, but at the time, it was harder to synchronize the XBee [wireless]-based system. Moving the system away from XBee and serial communication to Wi-Fi and UDP communication solved this. The system was finally fast enough for low-latency performance, and was now on a Wi-Fi-based network system rather than the closed and less expandable XBee system and its associated serial bus.
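For readers curious what a loose-sync message over UDP might look like, here is a minimal Python sketch. It is not the beatjazz implementation (which lives in Pd): the message layout, the function names, and the idea of sending the node index alongside the loop length are all assumptions made for illustration.

```python
import socket
import struct
from typing import Tuple

# Hypothetical wire format (an assumption, not the beatjazz format):
# unsigned 16-bit node index followed by the loop length in milliseconds
# as a 64-bit float, both in network byte order.
LOOP_MSG = struct.Struct("!Hd")


def encode_loop_length(node_index: int, loop_ms: float) -> bytes:
    """Pack a loop-length announcement into a single small datagram payload."""
    return LOOP_MSG.pack(node_index, loop_ms)


def decode_loop_length(payload: bytes) -> Tuple[int, float]:
    """Unpack a payload produced by encode_loop_length."""
    node_index, loop_ms = LOOP_MSG.unpack(payload)
    return node_index, loop_ms


def send_loop_length(sock: socket.socket, address: Tuple[str, int],
                     node_index: int, loop_ms: float) -> None:
    """Send one announcement over UDP; there is no connection and no retry."""
    sock.sendto(encode_loop_length(node_index, loop_ms), address)
```

Because UDP is connectionless, a sender never waits on a slow or missing receiver, which suits a setup where only loop length is shared and nothing is quantized. The trade-off is that a datagram can be lost, so a real system would presumably resend the current value periodically.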

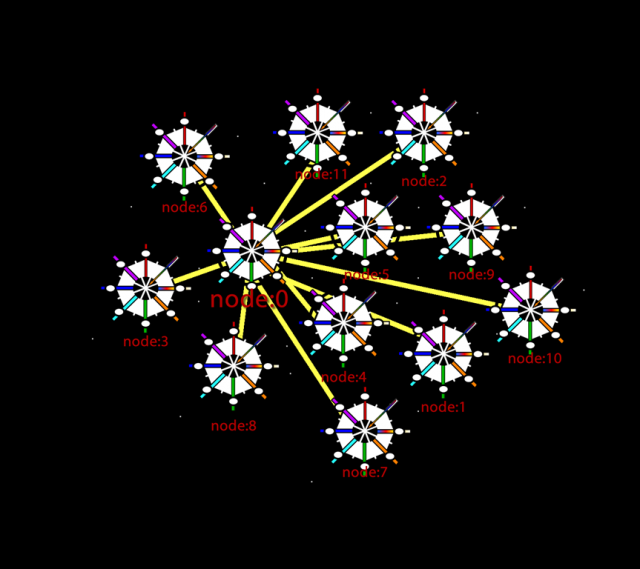

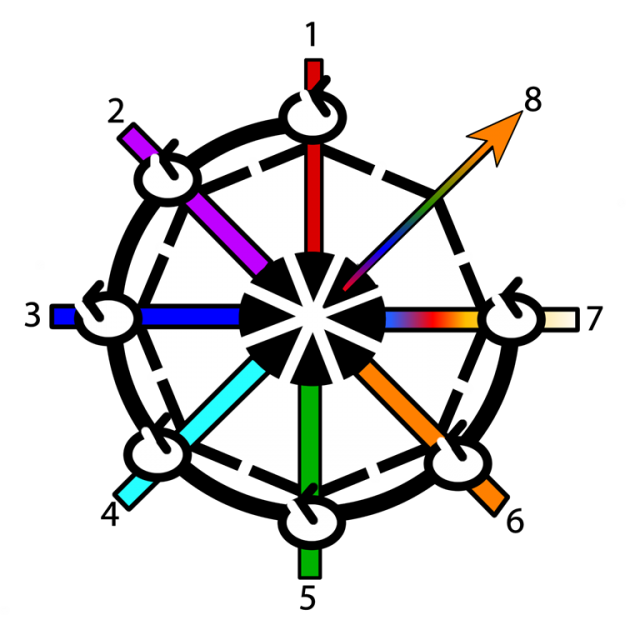

Connecting multiple beatjazz systems together was a networking logic issue. A dozen control systems could share the same router if necessary. Let’s say I am synchronized with another “patternist,” i.e., another person with a beatjazz system. Both of us have the same capabilities. The first person in the network is the pattern master. They broadcast their tempo information to all other beatjazz systems on the network, each of which is referred to as a node. The pattern master is node:0. Both are able to play together. Since the systems are designed to manipulate sound parameters gesturally, they evolve their sounds to co-exist. They are synched loosely, meaning only loop length information is shared, not [any form of] quantization, so they can concentrate on sculpting a co-independent sound construct. But when the pattern master so chooses, he/she may transform not just their own audio but the audio of every node in the system! Any other patternist may transform their own audio at any time, but it does not affect any other system and doesn’t change the tempo. Only the pattern master can do those things. The pattern master can create a new loop with the transformed audio, and as this new loop length is transmitted to the rest of the “pattern,” he/she is demoted from node:0 to last in the list of connected nodes, and the previous node:1 is now node:0 and thus pattern master. Their indicator lights flash to let them know that they’re now in control, and the cycle repeats.
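The rotation described above can be sketched in a few lines of Python. This is only a model of the rule set as stated in the text, not code from the beatjazz system; the `Pattern` class and its method names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque


@dataclass
class Pattern:
    """Hypothetical model of a group of synchronized patternists."""
    nodes: Deque[str] = field(default_factory=deque)  # index 0 is node:0
    loop_ms: float = 0.0                              # the shared loop length

    def join(self, name: str) -> None:
        """New patternists are added to the end of the list."""
        self.nodes.append(name)

    @property
    def master(self) -> str:
        """The pattern master is whoever currently sits at node:0."""
        return self.nodes[0]

    def can_transform_all(self, name: str) -> bool:
        """Only the pattern master may transform every node's audio."""
        return name == self.master

    def commit_new_loop(self, name: str, loop_ms: float) -> str:
        """The master sets a new loop length, then drops to the end of the list.

        Returns the name of the new pattern master (the former node:1).
        """
        if name != self.master:
            raise PermissionError(f"{name} is not the pattern master")
        self.loop_ms = loop_ms
        self.nodes.rotate(-1)  # node:0 moves to the back, node:1 becomes node:0
        return self.master
```

With three patternists joined in the order "a", "b", "c", a call to `commit_new_loop("a", 4000.0)` leaves the list as b, c, a, so every patternist reaches node:0 in turn without any central coordinator choosing who goes next.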

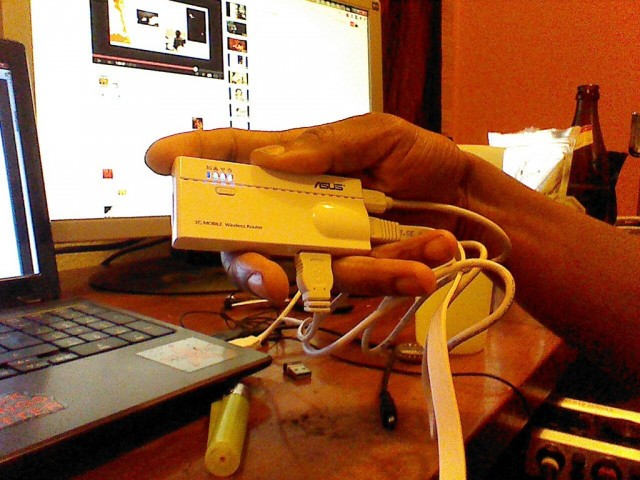

Each patternist has a node that includes the wireless router/relay/interface for the prosthetic portion of the beatjazz system, the part that’s attached to the body. It intercepts the serially-formatted Wi-Fi signals coming from the prosthesis. The RN-XV Wi-Fi transceiver formats its data streams for use with [open physical computing platform] Arduino, which communicates primarily over its serial bus. The data streams come out at 115,200 bps, which is fast enough to transmit the data at a rate that could be considered realtime. Over distance, though, the signal integrity of these streams nosedives below what reliable professional use requires. Putting the wireless router as close as possible remedies this.
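To put a rough number on “realtime,” here is a back-of-the-envelope Python sketch. It reads the serial rate above as the standard 115,200 baud and assumes 8N1 framing (one start bit, eight data bits, one stop bit); the 20-byte sensor frame is a made-up size for illustration, not a measured beatjazz packet.

```python
BAUD_RATE = 115_200          # standard serial rate, in bits per second
BITS_PER_BYTE_ON_WIRE = 10   # assumption: 8N1 framing (start + 8 data + stop)


def bytes_per_second(baud: int = BAUD_RATE,
                     bits_per_byte: int = BITS_PER_BYTE_ON_WIRE) -> float:
    """Payload bytes the serial link can carry each second."""
    return baud / bits_per_byte


def frame_time_ms(frame_bytes: int, baud: int = BAUD_RATE,
                  bits_per_byte: int = BITS_PER_BYTE_ON_WIRE) -> float:
    """Time in milliseconds needed to clock one frame onto the wire."""
    return 1000.0 * frame_bytes * bits_per_byte / baud


if __name__ == "__main__":
    hypothetical_frame = 20  # bytes; illustrative only
    print(f"{bytes_per_second():.0f} bytes/s")
    print(f"{frame_time_ms(hypothetical_frame):.2f} ms per frame")
```

Under those assumptions the link carries 11,520 bytes per second, and a 20-byte frame takes under 2 ms to send, so the serial hop itself is unlikely to be the bottleneck; the radio link over distance is the weaker part.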

The first node (revision1) will send a high speed, properly-composed Wi-Fi stream to the computer, which benefits from the increased bandwidth of a full-size 802.11g [Wi-Fi] pipe while keeping the prosthesis super-close to the router interface (this is what is being created currently). Revision2 then would network the router/relay to an embedded computer system.

Revision3 will have a hexapod body whose legs will give kinetic parameter feedback. In addition to the benefit of having one’s instrument “walk” out onstage with you as a robot avatar, I can confirm from my own experiments that people interact with robots as living things and not as servo motors and plastic, which makes it another expression vector I am looking forward to investigating further.

Every patternist eventually becomes pattern master and controls the Transform of every other patternist in rotation. There is no permanent king or hierarchy. Since designing this system, I built a recorder that automatically records everything I play. Synchronization will be the same: automatic. Having played with a couple of other gesturally-enabled artists, I found a definite sense of non-linearity to the sound and interaction. There is not so much emphasis on song form or linear structures. The narrative vectors out in the directions of timbral concept, layering, tempo and pattern variation, and the transform “plot twist” concept. The two (or more) sonic hypotheses presented combine into an answer that neither entity could arrive at alone, and those interactions with other gesturally-active performers were without synchronization. Synchronized, multi-elemental systems would create patterns of infinite variety. It’s like walking through 1,000 variations of the same groove, created by each patternist, based on their place in a dynamically updating, decentralized list.

I call this system a Sonic Fractal Matrix. Synchronizing gesturally-active sonic systems automatically will create a new type of soundscape that evolves and mutates. Imagine events that have no main performer, DJ, or band, just one or more patternists, creating a fractal array of sonic patterns. How they sound depends on the individual patternist. But as more and more patternists synchronize with them, the overall sonic construct mutates and becomes communal, a piece of collaborative sonic architecture. And each patternist takes away a more detailed, intricate mental pattern concept with each gathering because, unlike the Borg or other hivemind concepts, real humans like to express their individuality. This is no different. Within the network of the Sonic Fractal Matrix are 2 or 10 or 100 expression vectors, projecting in individual directions, drawing from a mutating core concept.

A detailed explanation of that core concept

We’d love to hear your thoughts – and ways that this kind of networked performance can work, even beyond the beatjazz system. -Ed.

Crowd-funding campaign, in case you’d like to be part of this patternist business yourself:

Node:0 of the Sonic Fractal Matrix-A vision of a new model for music