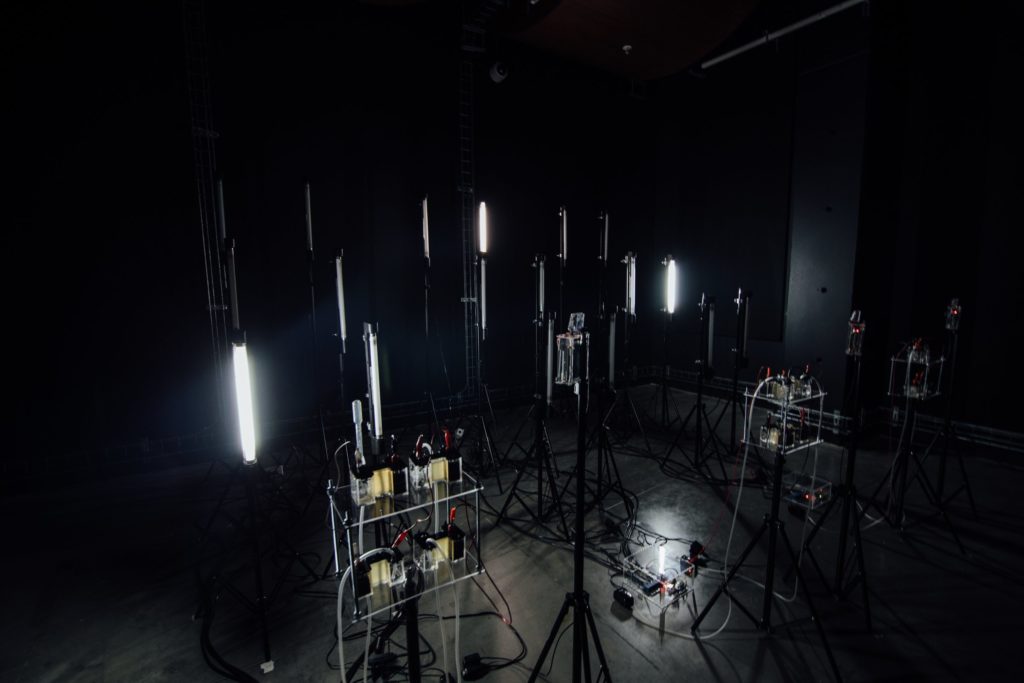

In the patterns generated by bacterial cells, Micro-ritmos discovers a new music and light.

From the Mexican team of Paloma López, Leslie García, and Emmanuel Anguiano (aka Interspecifics), we get yet another marvel of open source musical interface with biological matter.

Micro-ritmos from LessNullVoid on Vimeo.

The raw cellular matter itself is Geobacter, a genus of anaerobic bacteria found in sediment. And in a spectacular and unintentional irony, this particular genus was first discovered in the riverbed of the Potomac in Washington, D.C. You heard that right: if you decided to literally drain the swamp in the nation’s capital, this is actually what you’d get. And it turns out to be wonderful stuff, essential to the world’s fragile ecosystems and now finding applications in technology like fuel cells. In other words, it’s a heck of a lot nicer to the planet than the U.S. Congress.

So if composers like Beethoven made music that echoed bird tweets, now electronic musicians can actually shine a light on some of the materials that make life on Earth possible and our future brighter.

Leslie, Paloma, and Emmanuel don’t just make cool performances. They also share the code for everything they’ve made under an open source license, so you can learn from them, borrow some sound synthesis tricks, or even try exploring the same stuff yourself. That’s not just a nice idea in theory: good code, clever hardware projects, and clear documentation have helped them to spread their musical practice beyond their own work.

Check it out here – in Spanish, but fairly easy to follow (cognates are your friend):

https://github.com/interspecifics/micro-ritmos

The basic rig:

Raspberry Pi B+

RasPi camera module

Micro SD cards

Arduino

Bacterial cells

Lamps

SuperCollider for sound synthesis

The bacteria act as a kind of sophisticated architectural sonic spatializer. Follow along – the logic is a bit Rube Goldberg, mixed with machine learning.

The bacteria trigger the lights, with variations in the cells generating patterns.

Machine learning coded in Python then “watches” the patterns, and feeds that logic into both sound and spatialization. Sound is produced from SynthDefs in the open source SuperCollider sound coding environment and positioned in the multichannel audio system, all driven by control signals that the machine learning algorithm sends over OSC (Open Sound Control).
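If you want a feel for that last hop, here is a minimal sketch of how a Python process might hand a control value to SuperCollider over OSC. It is not taken from the Micro-ritmos repository: the address `/microritmos/pattern` and the `cluster` and `brightness` arguments are hypothetical stand-ins for whatever the machine learning stage actually outputs, and the project's own code may well use an OSC library rather than packing bytes by hand. The one piece that isn't invented is port 57120, the default port on which the SuperCollider language listens for OSC.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Build a single OSC message from int, float, and str arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("bool arguments are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)  # 32-bit big-endian integer
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)  # 32-bit big-endian float
        elif isinstance(arg, str):
            tags += "s"
            payload += osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    return osc_string(address) + osc_string(tags) + payload


def send_pattern(cluster: int, brightness: float,
                 host: str = "127.0.0.1", port: int = 57120) -> bytes:
    """Send one hypothetical pattern update over UDP and return the raw packet."""
    # "/microritmos/pattern" is an assumed address, not one from the project.
    packet = osc_message("/microritmos/pattern", cluster, brightness)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return packet
```

Calling `send_pattern(3, 0.5)` fires a single UDP packet at a SuperCollider instance on the same machine. On the SuperCollider side, a responder registered for the same address would pick up the two values and could map them to a SynthDef parameter or an output channel.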

Imagine the bacteria are live coding performers. They generate a kind of autonomous, real-time graphical score for the system.

In some way, this unstable system is a modern twist on the experiments of the likes of Cage and Tudor. But whereas they found these sources in the I Ching and unpredictable circuitry and feedback systems, respectively, here there’s a kind of grounding in some ecological, material microcosm.

It’s funny, the last day I was in Mexico City, I saw an exhibition of organic architecture by the Mexico City native Javier Senosiain. Senosiain built homes that found some harmony with their natural environment, forms from organic material. Here, there’s a similar relationship, scaled from microcosm to macrocosm in unpredictable ways. But that means that this is not sound synthesis that establishes some dominion over nature; it allows these cells some autonomy to produce the composition of the piece. And I don’t just mean that in some lofty philosophical sense: the sonic results are radically different.

Beautiful work, presented for the first time in Medellín, Colombia.

More soon from this trio, as we worked together in Mexico City last month with MUTEK.mx.