In glitching collisions of faces, percussive bolts of lightning, Lorem has ripped open machine learning’s generative powers in a new audiovisual work. Here’s the artist on what he’s doing, as he’s about to join a new inquisitive club series in Berlin.

Machine learning that derives gestures from System Exclusive MIDI data … surprising spectacles of unnatural adversarial neural nets … Lorem’s latest AV work has it all.

And by pairing producer Francesco D’Abbraccio with a team of creators across media, it brings together a serious think tank of artist-engineers pushing machine learning and neural nets to new places. The project, as he describes it:

Lorem is a music-driven multidisciplinary project working with neural networks and AI systems to produce sounds, visuals and texts. In the last three years I had the opportunity to collaborate with AI artists (Mario Klingemann, Yuma Kishi), AI researchers (Damien Henry, Nicola Cattabiani), video artists (Karol Sudolski, Mirek Hardiker) and musical instrument designers (Luca Pagan, Paolo Ferrari) to produce original materials.

Adversarial Feelings is the first release by Lorem: a 22-minute AV piece, 9 music tracks and a book. The record will be released on April 19 on Krisis via Cargo Music.

And what about achieving intimacy with nets? He explains:

Neural networks are nowadays widely used to detect, classify and reconstruct emotions, mainly in order to map users’ behaviours and to affect them in effective ways. But what happens when we use machine learning to perform human feelings? And what if we use it to produce autonomous behaviours, rather than to affect consumers? Adversarial Feelings is an attempt to inform non-human intelligence with “emotional data sets”, in order to build an “algorithmic intimacy” through those intelligent devices. The goal is to observe the subjective/affective dimension of intimacy from the outside, to speak about human emotions as perceived by non-human eyes. Transposing them into a new shape helps Lorem to embrace a new perspective, and to recognise fractured experiences.

I spoke with Francesco as he made the plane trip toward Berlin. Friday night, he joins a new series called KEYS, which injects new inquiry into the club space – AV performance, talks, all mixed up with nightlife. It’s the sort of thing you get in festivals, but in festivals all those ideas have been packaged and finished. KEYS, at a new post-industrial space called Trauma Bar near Hauptbahnhof, is a laboratory. And, of course, I like laboratories. So I was pleased to hear what mad science was generating all of this – the team of humans and machines alike.

So I understand the ‘AI’ theme – am I correct in understanding that the focus to derive this emotional meaning was on text? Did it figure into the work in any other ways, too?

Neural networks and AI were involved in almost every step of the project. On the musical side, they were used mainly to generate MIDI patterns, to deal with SysEx from a digital sampler and to manage recursive re-sampling and intelligent timestretch. Rather than generating the final audio, the goal here was to simulate a musician’s behaviors and creative processes.

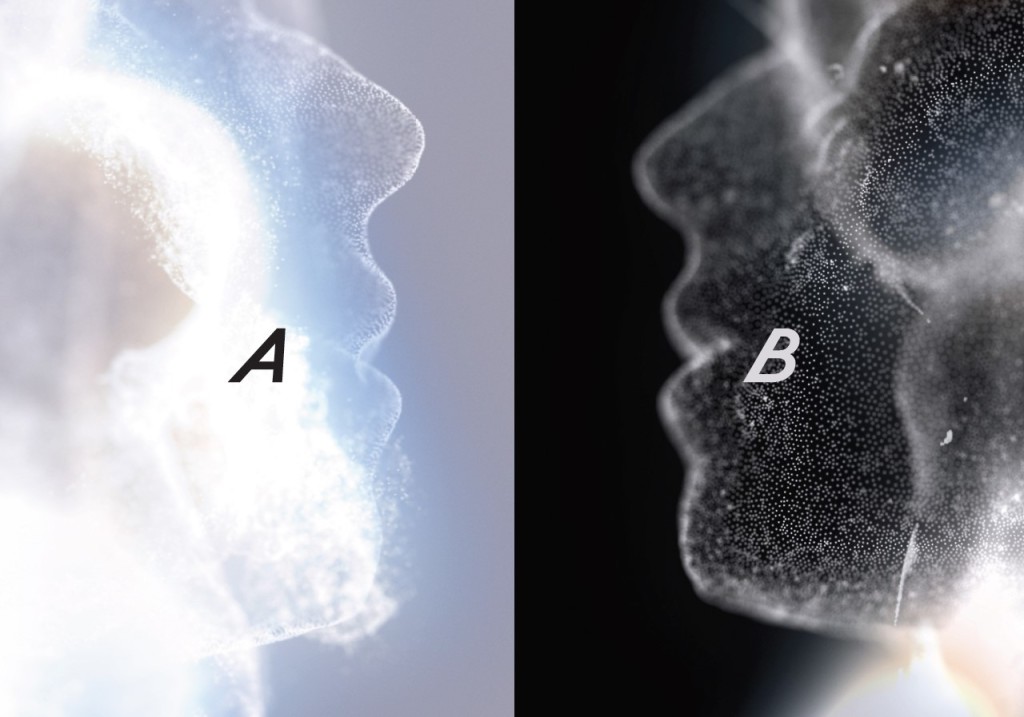

On the video side, [neural networks] (especially GANs [generative adversarial networks]) were employed both to generate images and to explore the latent spaces through custom-tailored algorithms, in order to let the system edit the video autonomously, according to the audio source.
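For readers wondering what exploring a latent space "according to the audio source" might look like in practice, here is a minimal sketch. It is not Lorem's algorithm: it assumes a simple linear interpolation between two latent vectors, with the step per video frame proportional to the loudness (RMS) of the matching audio frame. The function names `rms_envelope`, `lerp` and `audio_driven_walk` are hypothetical, and the GAN that would turn each latent vector into an image is left out entirely.

```python
import math

def rms_envelope(samples, frame_size):
    """Return one RMS loudness value per non-overlapping audio frame."""
    envelope = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        envelope.append(math.sqrt(sum(s * s for s in frame) / frame_size))
    return envelope

def lerp(a, b, t):
    """Linear interpolation between two equal-length vectors."""
    return [x + (y - x) * t for x, y in zip(a, b)]

def audio_driven_walk(z_start, z_end, envelope):
    """Travel from z_start to z_end, moving further on louder frames.

    Assumption for illustration: distance covered per frame is
    proportional to that frame's loudness.
    """
    total = sum(envelope)
    if total == 0:
        # Silence: stay at the starting point for every frame.
        return [list(z_start) for _ in envelope]
    path, travelled = [], 0.0
    for level in envelope:
        travelled += level
        path.append(lerp(z_start, z_end, travelled / total))
    return path

# Toy example: a silent frame followed by a loud one.
envelope = rms_envelope([0.0, 0.0, 1.0, 1.0], frame_size=2)
path = audio_driven_walk([0.0, 0.0], [1.0, 2.0], envelope)
```

In a real setup each vector in `path` would be fed to a trained generator to render one video frame, so quiet passages hold the image nearly still and loud ones push it quickly through the latent space.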

What data were you training on for the musical patterns?

MIDI – basically I trained the NN on patterns I create.

And wait, SysEx, what? What were you doing with that?

Basically I record every change of state of a sampler (e.g. the automation on a knob), and I ask the machine to “play” the same patch of the sampler according to what it learned from my behavior.
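As a rough illustration of the first half of that process, capturing a sampler's state changes, here is a minimal sketch that pulls System Exclusive messages out of a raw MIDI byte stream. It relies only on the MIDI framing rules (a SysEx message starts with 0xF0 and ends with 0xF7, with 7-bit data bytes in between). The function name `extract_sysex` and the example payloads are hypothetical, and nothing here reflects the actual sampler or tooling used for Adversarial Feelings.

```python
SYSEX_START = 0xF0  # status byte that opens a System Exclusive message
SYSEX_END = 0xF7    # status byte that closes it

def extract_sysex(stream):
    """Collect the payload of every complete SysEx message in a byte stream."""
    messages = []
    current = None  # None means we are not inside a SysEx message
    for byte in stream:
        if byte == SYSEX_START:
            current = []
        elif byte == SYSEX_END:
            if current is not None:
                messages.append(bytes(current))
            current = None
        elif current is not None:
            if byte >= 0xF8:
                continue  # real-time bytes (e.g. clock) may be interleaved
            if byte < 0x80:
                current.append(byte)  # ordinary 7-bit data byte
            else:
                current = None  # any other status byte aborts the message
    return messages

# Toy stream: a note-on, then two SysEx messages (0x7D is the
# non-commercial manufacturer ID), with a clock byte in the first one.
stream = bytes([0x90, 60, 100,
                0xF0, 0x7D, 0x01, 0xF8, 0x40, 0xF7,
                0xF0, 0x7D, 0x02, 0xF7])
recorded = extract_sysex(stream)
```

A sequence of payloads like `recorded`, paired with timestamps, is the kind of data a network could then be trained on to imitate a player's knob movements.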

What led you to getting involved in this area? And was there some education involved just given the technical complexity of machine learning, for instance?

I have always tried to express my work through multidisciplinary projects. I am very fascinated by the way AI approaches data, allowing us to work across different media with the same perspective. Intelligent devices are really a great tool to melt languages. On the other hand, the emergence of AI raises political questions we have been trying to face for some years at Krisis Publishing.

I started working through the Lorem project three years ago, and I was really a newbie on the technical side. I am not a hyper-skilled programmer, and building a collaborative platform has been really important to Lorem’s development. I had the chance to collaborate with AI artists (Klingemann, Kishi), researchers (Henry, Cattabiani, Ferrari), digital artists (Sudolski, Hardiker)…

How did the collaborations work – Mario I’ve known for a while; how did you work with such a diverse team; who did what? What kind of feedback did you get from them?

To be honest, I was very surprised by how open and responsive the AI community is! Some of the people involved are really huge points of reference for me (like Mario, for instance), and I didn’t expect to really get them on Adversarial Feelings. Some of the people involved prepared original content for the release (Mario, for instance, realized a video on “The Sky would Clear What the …”, Yuma Kishi realized the girl/flower on “Sonnet#002” and Damien Henry did the train hallucination on the “Shonx – Canton” remix). With other people involved, the collaboration was more based on producing something together, such as a video, a piece of code or a way to explore latent spaces.

What was the role of instrument builders – what are we hearing in the sound, then?

Some of the artists and researchers involved realized videos from the audio tracks (Mario Klingemann, Yuma Kishi). Damien Henry gave me the right to use a video he made with his Next Frame Prediction model. Karol Sudolski and Nicola Cattabiani worked with me on developing, respectively, “Are Eyes invisible Socket Contenders” + “Natural Readers” and “3402 Selves”. Karol Sudolski also realized the video part of “Trying to Speak”. Nicola Cattabiani developed the ELERP algorithm with me (to let the network edit videos according to the music) and GRUMIDI (the network working with my MIDI files). Mirek Hardiker built the data set for the third chapter of the book.

I wonder what it means for you to make this an immersive performance. What’s the experience you want for that audience; how does that fit into your theme?

I would say Adversarial Feelings is an AV show totally based on emotions. I always try to prepare the most intense, emotional and direct experience I can.

You talk about the emotional content here and its role in the machine learning. How are you relating emotionally to that content; what’s your feeling as you’re performing this? And did the algorithmic material produce a different emotional investment or connection for you?

It’s a bit like when I was a kid and I was listening to my recorded voice… it was always strange: I wasn’t fully able to recognize my voice as it sounded from the outside. I think neural networks can be an interesting tool to observe our own subjectivity from external, non-human eyes.

The AI hook is of course really visible at the moment. How do you relate to other artists who have done high-profile material in this area recently (Herndon/Dryhurst, Actress, etc.)? And do you feel there’s a growing scene here – is this a medium that has a chance to flourish, or will the electronic arts world just move on to the next buzzword in a year before people get the chance to flesh out more ideas?

I messaged Holly Herndon online a couple of times… I’ve been really into her work since her early releases, and when I heard she was working on AI systems I was trying to finish the Adversarial Feelings videos… so I was so curious to discover her way of dealing with intelligent systems! She’s a really talented artist, and I love the way she’s able to embed conceptual/political frameworks inside her music. Proto is a really complex, inspiring device.

More generally, I think the advent of a new technology always discloses new possibilities in artistic practices. I directly experienced the impact of the internet (and of digital culture) on art, design and music when I was a kid. I’m thrilled by the fact that at this point new configurations are not yet codified in established languages, and I feel working on AI today gives me the possibility to be part of a public debate about how to set new standards for the discipline.

What can we expect to see / hear today in Berlin? Is it meaningful to get to do this in this context in KEYS / Trauma Bar?

I am curious too, to be honest. I am very excited to take part in such a situation, alongside artists and researchers I really respect and enjoy. I think the guys at KEYS are trying to do something beautiful and challenging.

Live in Berlin, 7 June

Lorem will join Lexachast (an ongoing collaborative work by Amnesia Scanner, Bill Kouligas and Harm van den Dorpel), N1L (an A/V artist and producer/DJ based between Riga, Berlin, and Cairo), and a series of other tantalizing performances and lectures at Trauma Bar.

KEYS: Artificial Intelligence | Lexachast • Lorem • N1L & more [Facebook event]

Lorem project lives here: