Put some of the best brains in music and sound together in a room. Give them a deadline. Tell them to invent the future as quickly as they can.

What results is crazy, from better ways of teaching music production to composing inside Minecraft to strapping displays on your wrist to simulate the Apple Watch before anyone’s even able to get one. So, we sent one of the smartest brains we know to find the best stuff – that’ll be Gina Collecchia, engineer, technologist, and data scientist as well as writer/musician, the kind of person who studies acoustics in Peru and “conditional entropy” to help you navigate music. Pictures by Alex Park.

On September 20, 2014 in San Francisco, CA, over 70 of the top creative minds in music production, software, and product design came together for the first Audio Hack SF: a one-day hackathon dedicated to prototyping new music technologies and software. Participants were given the freedom to work on any topic in audio, with several projects proposed at the beginning of the day. Here’s a recap of the standouts.

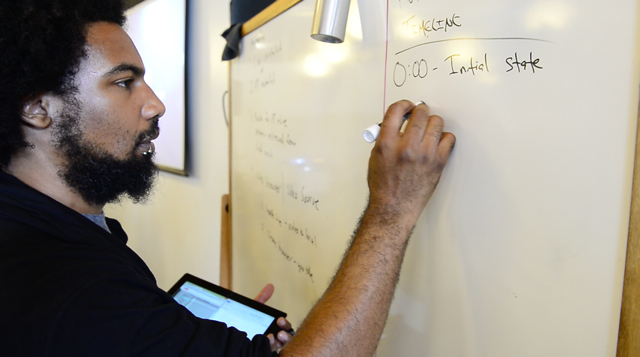

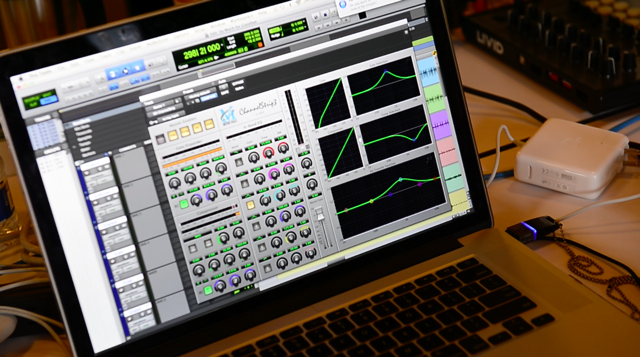

1. Ableton and YouTube tutorial syncing

Yeuda Ben-Atar, Thavius Beck, Peter Nyboer, Neal Riley, Andrew Stern, Michael McConnell, Chuck Knowledge, Andrew Hall

Flipping between applications during something as time-sensitive as making music can really hinder learning. This group, made up of educators from Dubspot and Ableton and engineers from Roger Linn Instruments, Gobbler, and Livid Instruments, used a JSON file to mark cue points linking moments in one of Thavius Beck’s tutorial videos to states of the Ableton session. They downloaded the Ableton sessions with Gobbler, a service designed specifically for music and media project files. Another version embedded the video directly in Ableton and used Max for Live to replicate the tutorial’s actions in the working session. This gave the tutorial a native feel, as if it were included with the DAW.
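The article doesn’t describe the cue file’s format, so here is a minimal sketch of how timestamped cue points can be matched against a video playhead. The field names (`time`, `action`, `target`) and the example actions are assumptions for illustration only; whatever actually applies an action to the Ableton session (for example a Max for Live device) is not shown.

```python
import bisect
import json

# Hypothetical cue file. The source says only that a JSON file marked cue
# points; the "time", "action", and "target" fields are assumptions.
CUES_JSON = """
[
  {"time": 0.0,  "action": "load_set",      "target": "lesson.als"},
  {"time": 42.5, "action": "solo_track",    "target": "Drums"},
  {"time": 97.0, "action": "enable_device", "target": "Reverb"}
]
"""


def load_cues(text):
    """Parse the cue list and sort it by video timestamp (seconds)."""
    return sorted(json.loads(text), key=lambda cue: cue["time"])


def active_cue(cues, playhead):
    """Return the latest cue at or before the video playhead, or None."""
    times = [cue["time"] for cue in cues]
    index = bisect.bisect_right(times, playhead) - 1
    return cues[index] if index >= 0 else None


cues = load_cues(CUES_JSON)
for t in (10.0, 42.5, 120.0):
    cue = active_cue(cues, t)
    print(f"{t:>6.1f}s -> {cue['action']} {cue['target']}")
```

A binary search keeps the lookup cheap even when the player reports its position many times per second, and seeking backward in the video simply lands on an earlier cue.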

2. Using the (future) Apple Watch to make music

Alex Oana, Curtis Wang, Brett Clark, Tommy Hass, Lev Perrey

Using only a small square of the screen, the team built an Apple Watch prototype on an iPhone strapped to the wrist. The app made heavy use of the gyroscope and accelerometer to control two different effects on an audio file: tapping the interface switched between the effects, while rolling the wrist forward and backward controlled a parameter of the active effect. It was a clever way to start prototyping for the Apple Watch and exploring its potential for music creation.
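The interaction described above (tap to switch effects, roll to set a parameter) can be sketched as a small state machine. This assumes the motion sensors already deliver a fused roll angle in radians and that roughly ±90° is the usable range; the effect names are hypothetical, since the source says only “two different effects.”

```python
import math

# Hypothetical effect names: the source says only "two different effects".
EFFECTS = ("filter", "delay")


class WristController:
    """Tap to switch effects; roll the wrist to set the active effect's parameter."""

    def __init__(self, min_roll=-math.pi / 2, max_roll=math.pi / 2):
        # Assumed usable roll range of +/-90 degrees, in radians.
        self.min_roll = min_roll
        self.max_roll = max_roll
        self.active = 0  # index into EFFECTS
        self.params = {name: 0.5 for name in EFFECTS}

    def tap(self):
        """Cycle to the next effect and return its name."""
        self.active = (self.active + 1) % len(EFFECTS)
        return EFFECTS[self.active]

    def roll(self, angle):
        """Map a roll angle linearly onto 0..1 for the active effect, clamping at the ends."""
        value = (angle - self.min_roll) / (self.max_roll - self.min_roll)
        value = min(1.0, max(0.0, value))
        self.params[EFFECTS[self.active]] = value
        return value


controller = WristController()
controller.roll(0.0)          # level wrist: filter parameter at the midpoint
controller.tap()              # switch to the delay
controller.roll(math.pi / 4)  # rolled 45 degrees: delay parameter at about 0.75
print(controller.params)
```

Each effect keeps its last value when you tap away, so switching back does not cause the parameter to jump until the wrist moves again.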

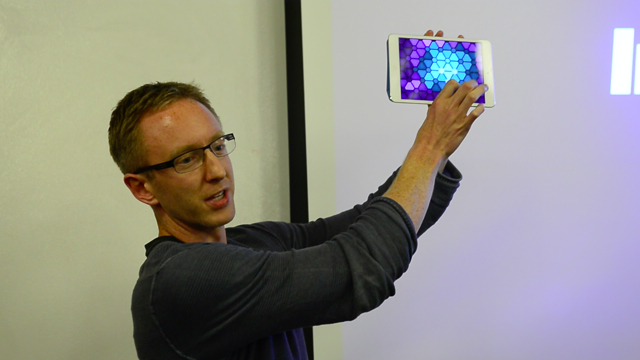

3. A wireless MIDI isomorphic keyboard for iPad

Johan Ismael, Paul Neyrinck, Luke Bradford, Adam Kleczenski, Michael McConnell

Targeted at users who are inspired by chord progressions, this group created an isomorphic keyboard interface that rivals the Musix Pro app, and built it in a single day. “We started with only a Max patch that Adam had created, which was very helpful for designing the logic,” said Johan Ismael, a Stanford CS student. The end result was a glowing, stained-glass soft synth that sends MIDI messages wirelessly over a Wi-Fi network.
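On an isomorphic keyboard, each step in a given direction always adds the same interval, so a chord has the same shape on every root. A minimal sketch of the pad-to-note logic follows; the intervals (+2 semitones per column, +5 per row) and the base note are assumptions, since the team’s actual layout isn’t given. The wireless transport is left out; only the raw MIDI Note On bytes are built.

```python
# Hypothetical layout: each column to the right adds a whole tone (+2 semitones),
# each row up adds a perfect fourth (+5). The team's real intervals aren't stated.
COL_STEP = 2
ROW_STEP = 5
BASE_NOTE = 48  # MIDI note for the bottom-left pad (C3 when 60 is called C4)


def note_at(col, row):
    """MIDI note number for the pad at (col, row)."""
    note = BASE_NOTE + col * COL_STEP + row * ROW_STEP
    if not 0 <= note <= 127:
        raise ValueError(f"pad ({col}, {row}) is outside the MIDI note range")
    return note


def chord(col, row, shape):
    """Notes for a chord shape given as (dcol, drow) offsets from a root pad."""
    return [note_at(col + dc, row + dr) for dc, dr in shape]


def note_on(note, velocity=100, channel=0):
    """Raw 3-byte MIDI Note On: status 0x90 ORed with the channel, then note and velocity."""
    return bytes([0x90 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])


# Root, major third (+4 = two pads right), fifth (+7 = one right, one up).
MAJOR_TRIAD = [(0, 0), (2, 0), (1, 1)]

for root in [(0, 0), (1, 2)]:
    notes = chord(*root, MAJOR_TRIAD)
    print(root, notes, [note_on(n).hex() for n in notes])
```

Because the same three-pad shape yields a major triad from any root, a player who learns one chord shape can transpose it by sliding a finger, which is the main appeal of the layout for chord-driven users.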

4. Composing in Minecraft through OSC messages

Brian Chrisman, Aaron Higgins, David O’Neil, Rob Currie

Moving around a virtual landscape and flipping switches here and there inspired this group to design musical moments around those actions in Minecraft, a game known for its largely user-generated content. Using Open Sound Control (OSC) messages, these engineers from Universal Audio, Gobbler, and Native Instruments built a train yard in which cars moved around several independent tracks, with audio events placed along the tracks. The cars (acting as audio playback heads) could be switched onto other tracks on the board with mouse-activated switches, and their course could also be paused and reversed, à la Isle of Tune.

Check out this repository from Rob Hamilton for more about using OSC with Minecraft: https://github.com/robertkhamilton/osccraft
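For readers new to OSC, here is a minimal encoder for the OSC 1.0 message format, using only the Python standard library. It supports int32, float32, and string arguments. The `/train/event` address, its arguments, and the host and port are hypothetical; the messages the team (or osccraft) actually sent are not described here.

```python
import socket
import struct


def _osc_string(text: str) -> bytes:
    """OSC string: UTF-8 bytes, a null terminator, then nulls up to a multiple of 4."""
    data = text.encode("utf-8") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message whose arguments are int32, float32, or string."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(type_tags) + payload


def send(packet: bytes, host: str, port: int) -> None:
    """OSC is commonly carried over UDP; send one message as one datagram."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


# Hypothetical event: car 2 reached the midpoint of a track segment holding a kick sample.
packet = osc_message("/train/event", 2, 0.5, "kick")
print(len(packet), packet.hex())
# send(packet, "127.0.0.1", 9000)  # host and port are placeholders
```

Every field is padded to a 4-byte boundary, which keeps parsing simple on the receiving end, whether that is Max, SuperCollider, or a game mod.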

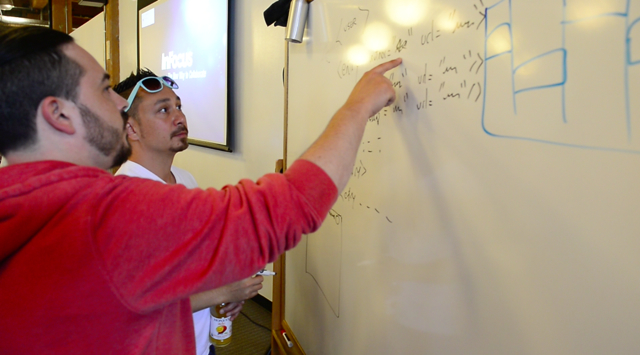

5. Algorithmic loop design from variable-order Markov models

Burkay Gur, Juan Hernandez, Joe Gallo, Stanislav Nikolav, Shawn Trail

Richie Mendelsohn and Andrew Braunstein

Two groups worked independently on algorithmic composition using Markov chains: the first on drum loops, and the second on chords and melodies. It was interesting to compare their relative successes and failures with respect to rhythm and composition: perhaps unsurprisingly, the drum-based algorithm did a better job with rhythm, while the minor-chord-based algorithm told more of a story. Because the kick, snare, and hi-hat models were implemented independently, however, the voices of the percussive composition sounded relatively disjointed.
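A variable-order Markov model predicts the next step from the longest recent context it has seen before, falling back to shorter contexts when a long one is new. The sketch below uses a simple back-off scheme, one of several ways to get variable order, and is not either team’s code. The training patterns (`x` = hit, `.` = rest) are hypothetical. Training each voice separately mirrors the independent kick, snare, and hi-hat models, and shows why the voices don’t coordinate with one another.

```python
import random
from collections import Counter, defaultdict


def train(sequence, max_order):
    """Count which symbol follows each context of length 0..max_order."""
    table = defaultdict(Counter)
    for i, symbol in enumerate(sequence):
        for order in range(min(i, max_order) + 1):
            context = tuple(sequence[i - order:i])
            table[context][symbol] += 1
    return table


def next_symbol(table, history, max_order, rng):
    """Use the longest context seen in training, backing off to shorter ones."""
    for order in range(min(max_order, len(history)), -1, -1):
        context = tuple(history[len(history) - order:])
        counts = table.get(context)
        if counts:
            symbols, weights = zip(*counts.items())
            return rng.choices(symbols, weights=weights)[0]
    raise ValueError("model is empty")


def generate(table, seed, length, max_order, rng):
    """Extend the seed pattern one step at a time until it reaches `length`."""
    steps = list(seed)
    while len(steps) < length:
        steps.append(next_symbol(table, steps, max_order, rng))
    return steps


# Hypothetical 16-step training patterns: "x" = hit, "." = rest.
# Each voice gets its own model, so the voices know nothing about each other.
patterns = {
    "kick":  "x.......x.x.....",
    "snare": "....x.......x...",
    "hihat": "x.x.x.x.x.x.x.xx",
}
rng = random.Random(2014)
for voice, pattern in patterns.items():
    model = train(list(pattern * 4), max_order=3)
    print(voice.ljust(5), "".join(generate(model, pattern[:2], 16, 3, rng)))
```

Conditioning the snare model on the kick’s recent steps (for example, by training on combined per-step symbols) is one way to make the voices less disjointed.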

Other notables:

One team designed AfterHours, an app that lets artists sell their merchandise at live events. The app sent notifications only to users inside a geofenced area, giving them access to exclusive content. Another team used the Leap Motion infrared sensing device to control samples in Max/MSP, mapping gesture velocity to the loudness of the sample. Producer Andy Scheps led a team seeking to make a patchable, open-source modular synthesizer for Android, and they nearly got there (save the Android part).
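The source doesn’t say how AfterHours implemented its geofence, and mobile platforms offer built-in region monitoring for this. Still, the underlying check for a circular fence is short: compute the great-circle (haversine) distance from the venue and compare it to a radius. The venue coordinates, user positions, and radius below are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def recipients(users, venue, radius_m):
    """IDs of users whose last known position lies inside the circular fence."""
    return [
        user_id
        for user_id, (lat, lon) in users.items()
        if haversine_m(lat, lon, *venue) <= radius_m
    ]


# Hypothetical venue and user positions (latitude, longitude in degrees).
venue = (37.7749, -122.4194)
users = {"fan_at_show": (37.7758, -122.4190), "fan_across_town": (37.8044, -122.2712)}
print(recipients(users, venue, radius_m=150))
```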

Gina Collecchia is a freelance writer and author of the book Numbers and Notes: An Introduction to Musical Signal Processing. She holds a master’s degree from Stanford University’s Center for Computer Research in Music and Acoustics and a bachelor’s degree in mathematics from Reed College.