Digital music can go way beyond just playback. But if performers and DJs can remix and remake today’s music, why should music from past centuries be static?

An interactive team collaborating on the newly reopened Museum im Mendelssohn-Haus wanted to bring those same powers to average listeners. Now, of course, there’s no substitute for a real orchestra. But renting orchestral musicians and a hall is an epic expense, and the first thing most of those players will do when an average person gets in front of them and tries to conduct is, well – get angry. (They may do that with some so-called professional conductors.)

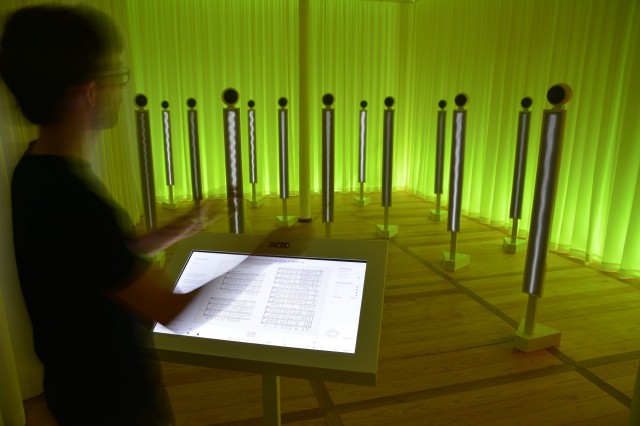

Instead, a museum installation takes the powers that allow on-the-fly performance and remixing of electronic music and applies them to the Classical realm. Touch and gestures let you listen actively to what’s happening in the orchestra, wander around the pit, compare different spaces and conductors, and even simulate conducting the music yourself. Rather than listening passively as the work of this giant flows into their ears, visitors are encouraged to get directly involved.

We wanted to learn more about what that would mean for exploring the music – and just how the creators behind this installation pulled it off. Martin Backes of aconica, who led the recording and interaction design, gives us some insight into what it takes to turn an average museum visitor into a virtual conductor. He shares everything from the nuts and bolts of Leap Motion and Ableton Live to what happens when listeners get to run wild.

First, here’s a look at the results:

Mendelssohn Effektorium – Virtual orchestra for Mendelssohn-Bartholdy Museum Leipzig from WHITEvoid on Vimeo.

Creative Direction, GUI and Visuals by WHITEvoid

Interior Design by Bertron Schwarz Frey

Creative Direction, Sound, Supervision Recording, Production, Programming by aconica

CDM: What was your conception behind this piece? Why go this particular direction?

Martin: We wanted to communicate classical music in new ways, while keeping its essence and original quality. The music should be an object of investigation and an experimental playground for the audience.

The interactive media installation enables selective, partial access to the Mendelssohn compositions. The audience also has the opportunity to get in touch with conducting itself. They can easily conduct this virtual orchestra without any special knowledge — of course, in a more playful way.

It was all about getting in touch with Mendelssohn as a composer, while leaving his music untouched.

The idea for the Effektorium originated in the cooperation between Bertron Schwarz Frey Studio and WHITEvoid. WHITEvoid worked on the implementation.

What was your impression of the audience experience as they interacted with the work? Any surprises?

Oh yes, people really loved it.

The audience during the reopening was pretty mixed, from young to old. Most people were very surprised by what they were able to do with the interactive installation. I mean, everything works in real time, so you get direct feedback on whatever you’re doing. The audience could be an active part; I think that’s what people liked the most about it.

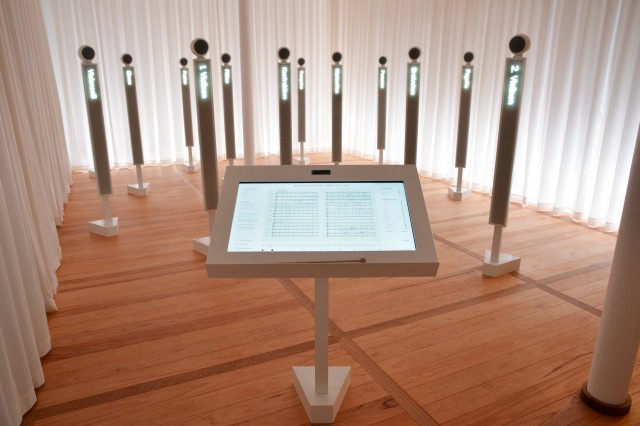

Visitors can also just move around within the “orchestra pit” in order to listen to the individual instruments through their respective speakers. This creates the illusion of walking through a real orchestra. Normally you are not allowed to do that in a real concert situation. So this was also a big plus, I would say.

I saw a lot of happy faces as people played around with the interactive system and as they walked around within the installation room.

Can you explain the relationship of tracking/vision and sound? How is the system set up?

There are basically two computers connected to each other and communicating via Ethernet to run the whole system. The first computer runs custom-made software, built in Java and OpenGL, for the touchscreen, Leap Motion control (via its Java SDK), and the whole room’s lighting and LED/loudspeaker sculptures. Participants can navigate through various songs of the composer and control them.

The second computer is equipped with Ableton Live and Max for Live. Ableton Live is the host for all the audio files we recorded at the MDR Studio in Leipzig, with some 70 people and lots of help. We had specific needs for that installation, for both the choir and the orchestra sounds. So everything had to be very isolated and very dry for the installation, which was very unusual for the MDR Studio and their engineers and conductor.

Within Live, we are using some EQs, the Convolution Reverb Pro, and some utility plug-ins. That’s it, more or less. Then there is a huge custom-made Max Patch/Max for Live Patch … or a couple of patches, to be exact.

We decided to just work exclusively with the Arrange view within Live. So this made it easy to arrange all the orchestral compositions within Live’s timeline, and to read out these files for controlling and visualisation.

Both computers need to know the exact playback position at the same time in order to control everything fluently via touchscreen and Leap. For the light visualisation, we also needed this data to keep the LEDs in sync with the music.

We basically read out the time of the audio files — we’re basically tracking the time and the position within Ableton’s timeline.
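As a rough illustration of how a timeline position could travel between two machines over OSC, here is a minimal sketch that encodes a single-float OSC 1.0 message using only the Python standard library. The address `/timeline/position` and the choice of seconds as the unit are assumptions made for the example; the interview does not document the installation's actual message names or its patches.

```python
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    padding = (-len(data)) % 4
    return data + b"\x00" * padding


def osc_float_message(address: str, value: float) -> bytes:
    """Build an OSC 1.0 message with one big-endian float32 argument."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


# Hypothetical address and value: playback position in seconds.
packet = osc_float_message("/timeline/position", 12.5)
```

A packet like this would typically be sent as a UDP datagram, for example with `socket.sendto`, so that the receiving machine can update its own view of the playback position.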

How does the control mechanism work – how is it that visitors are able to become conductors, in effect – how do their gestures impact the sound?

The Leap Motion influences the tempo only (via OSC messages). One has to conduct in time to get playback at the right tempo. There’s also a visualisation of the conducting, so visitors can see whether they are too slow or too fast. You have two options when you enter the installation: playback audio with conducting or playback audio without conducting. If you choose “playback audio with conducting” you have to conduct all the time; otherwise the system will stop and ask you kindly to continue.

For the audio, we are working heavily with the warp function in Live to keep the right pitch. But we scaled it a bit to stay within a certain value range. The sound of the orchestra was very important, so we had to make sure that everything sounded normal to the ears of an orchestral musician. Extreme tempo changes, and of course very slow and very fast tempos, were a no-go.
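The idea of keeping the conducted tempo inside a safe range can be sketched as follows. This is only an illustration: the function name, the averaging over recent beats, and the 25% limit are assumptions, since the interview gives neither the actual range nor the smoothing used.

```python
def tempo_from_beats(beat_times, nominal_bpm, max_deviation=0.25):
    """Estimate BPM from beat timestamps (seconds) and clamp it around the nominal tempo.

    max_deviation is a hypothetical limit: 0.25 allows 75% to 125% of nominal_bpm.
    """
    if len(beat_times) < 2:
        return nominal_bpm  # not enough gestures yet, keep the recorded tempo
    intervals = [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]
    mean_interval = sum(intervals) / len(intervals)
    if mean_interval <= 0:
        return nominal_bpm  # guard against unordered or duplicate timestamps
    raw_bpm = 60.0 / mean_interval
    low = nominal_bpm * (1 - max_deviation)
    high = nominal_bpm * (1 + max_deviation)
    return max(low, min(high, raw_bpm))
```

With a nominal tempo of 120 BPM, beats arriving every half second leave the tempo unchanged, while frantic or very slow conducting is held at the upper or lower limit instead of distorting the recording.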

And you’ve given those visitors quite a lot of options for navigation, too, yes – not only conducting, but other exploration options, as well?

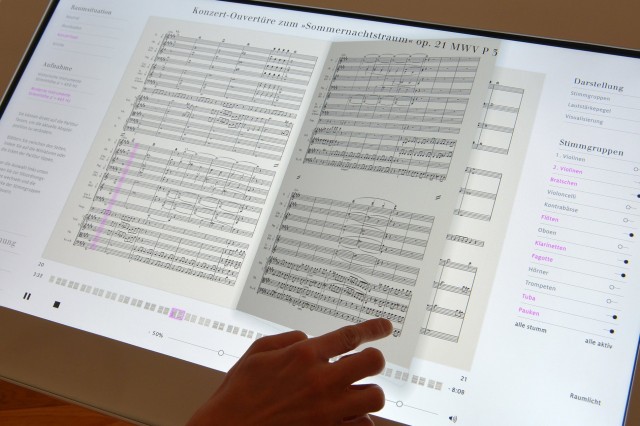

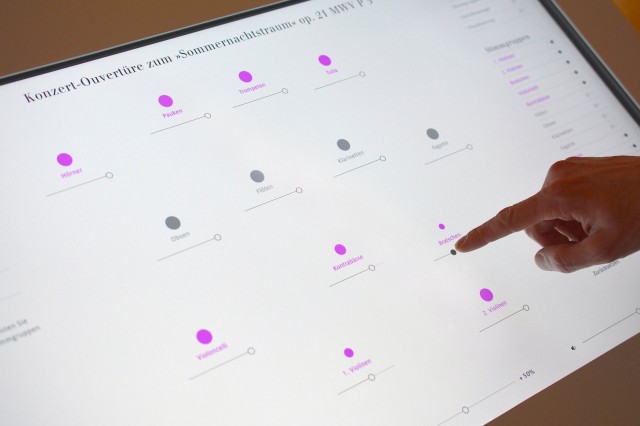

The touch screen serves as an interactive control center for the following parameters:

- position within the score

- volume for single orchestral or choral groups

- selective mute to hear individual instruments

- visualization of score and notes

- the ability to compare a music piece as directed by five different conductors

- room acoustics: dry, music salon, concert hall, church

- tuning: historical instruments (pitch 430 Hz) and modern instruments (pitch 443 Hz)

- visualization of timbre and volume (LEDs)

- general lighting
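To put the two tuning options in perspective, the gap between the reference pitches can be expressed in cents (hundredths of an equal-tempered semitone). The snippet below is plain arithmetic on the two frequencies from the list; it makes no claim about how the installation itself switches between tunings.

```python
import math


def cents_between(f_low: float, f_high: float) -> float:
    """Interval between two frequencies in cents (1200 cents per octave)."""
    return 1200.0 * math.log2(f_high / f_low)


# Historical (430 Hz) versus modern (443 Hz) reference pitch.
offset = cents_between(430.0, 443.0)
```

The result is a little over 50 cents, so the two settings sit roughly a quarter tone apart, which is half of a semitone.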

So all these features could be triggered by the visitor. The two computers communicate via OSC. Every time someone hits one of the features via touchscreen, Max for Live gets an OSC message to jump position within the score or to change the room acoustics (Convolution Reverb Pro) on the fly, for example.
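The touchscreen-to-audio control flow described here amounts to routing incoming messages to handlers by address. A minimal sketch of that pattern follows; the addresses, the state fields, and the use of beats as the position unit are all hypothetical, and in the real installation this logic lives in Max for Live patches rather than Python.

```python
ROOMS = {"dry", "music salon", "concert hall", "church"}


class InstallationState:
    """Hypothetical stand-in for the state the audio computer keeps."""

    def __init__(self):
        self.position_beats = 0.0
        self.room = "dry"


def set_position(state, beats):
    state.position_beats = float(beats)


def set_room(state, room):
    if room not in ROOMS:
        raise ValueError(f"unknown room acoustics: {room!r}")
    state.room = room


# Assumed address names; the installation's actual namespace is not documented.
HANDLERS = {
    "/score/position": set_position,
    "/room/acoustics": set_room,
}


def dispatch(state, address, *args):
    """Route one decoded message to the handler registered for its address."""
    handler = HANDLERS.get(address)
    if handler is None:
        raise KeyError(f"no handler for {address}")
    handler(state, *args)
    return state
```

For example, `dispatch(state, "/room/acoustics", "church")` would update the selected room, and an unknown address fails loudly instead of being silently ignored.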

Right, so you’re actually letting visitors change the acoustic of the environment? How is that working within Live?

We had to come up with a properly working signal routing to be able to switch room acoustics fluently with the IR-based plug-in. The room acoustics in particular were a big problem in the beginning. We really wanted to use the built-in Convolution Reverb, but figured out that we couldn’t use ten or more instances of that plug-in at the same time without latency problems. So now we are basically using three of them at the same time for one setting (room acoustics: concert hall, for example). All the other reverbs are turned off or on automatically while switching settings. Everything runs very fluently now and without any noticeable latency. I would say that this wouldn’t have been possible five years ago. So we are happy that we made it 🙂
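The switching logic can be pictured as a table of reverb instances in which only the selected room's devices are enabled. In the sketch below, the three-per-room figure comes from the interview, while the naming scheme and the assumption that the dry setting uses no reverb at all are illustrative guesses.

```python
REVERBS_PER_ROOM = 3  # the interview mentions three instances active per setting

# Assumption: the "dry" setting bypasses every reverb, so it has no entry here.
REVERB_ROOMS = ["music salon", "concert hall", "church"]


def reverb_states(selected_room):
    """Map each (room, index) reverb instance to True if it should be enabled."""
    return {
        (room, index): room == selected_room
        for room in REVERB_ROOMS
        for index in range(REVERBS_PER_ROOM)
    }


states = reverb_states("concert hall")
active = [key for key, enabled in states.items() if enabled]
```

Only three of the nine instances are ever enabled at once, which matches the point about keeping the number of simultaneously running convolution reverbs low.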

How did you work with Leap Motion? Were there any challenges along the way; how happy were you with that system?

The Leap Motion is very limited when it comes to its radius: it’s only sensitive within about 60 cm. That isn’t much, so we had to deal with it. Some of the testers, conductors and orchestra musicians among them, were of course complaining about the limited radius. If you look at how conductors work, you can see that they use their whole body. So one of our challenges was to satisfy everyone with the given technology despite this limitation.

We could’ve used Kinect, but we went for Leap, because it allowed us to monitor and track the conductor’s baton. The Kinect is not able to monitor and track such a tiny little thing (at least not the [first-generation] Kinect). This was much more important for us than to be able to monitor the whole body, and it was also part of the concept.

I would say that we were kind of happy with the Leap Motion, but the radius could be bigger. Maybe this will change with version 2, but I don’t know what they have planned for the future.

Another problem was the feature list. We had a lot more features in the beginning, but we found out very quickly that one feature would be enough for the Leap Motion tracking, especially when you think of the audience who will visit this kind of museum. It has to be easy to understand and more or less self-explanatory. So by gesturing up and down, one influences the tempo of Mendelssohn’s music only – that’s basically it. In terms of functionality, all the other features were given to the touchscreen. So we have basically two interactive components for the installation setting.
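One simple way to turn an up-and-down gesture into beats is to look for the bottom of each downward stroke in the tracked height of the baton tip. The sketch below is an assumption about how such detection could work, not the installation's actual algorithm; the sample format and the `min_drop` threshold in millimetres are hypothetical.

```python
def detect_beats(samples, min_drop=20.0):
    """Return the times at which a downward stroke bottoms out.

    samples: iterable of (time_seconds, height_mm) pairs in time order.
    min_drop: hypothetical threshold; smaller dips are treated as jitter.
    """
    beats = []
    peak = None      # highest point seen since the last turn-around
    prev = None      # previous (time, height) sample
    falling = False
    for t, y in samples:
        if prev is not None:
            prev_t, prev_y = prev
            if y < prev_y:
                falling = True
            elif y > prev_y and falling:
                # Down-to-up reversal: the previous sample was the bottom of the stroke.
                if peak - prev_y >= min_drop:
                    beats.append(prev_t)
                falling = False
                peak = prev_y  # measure the next stroke from this bottom
        peak = y if peak is None else max(peak, y)
        prev = (t, y)
    return beats
```

The timestamps this returns are the kind of input a tempo estimate needs, and the threshold keeps a trembling hand from registering as a stream of tiny beats.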

Your studio was one among others. How did you collaborate with these other studios?

Bertron Schwarz Frey and WHITEvoid were basically the lead agencies. Bertron Schwarz Frey is the agency that was responsible for the whole redesign of the Mendelssohn-Haus museum in Leipzig, while we and WHITEvoid worked on the centerpiece of the newly reopened museum: the interactive media installation “Effektorium”. So we worked directly with WHITEvoid from the very beginning, and our part was mainly the sound part of the project.

I am very happy that Christopher Bauder from WHITEvoid asked us to work with him on this project. We are actually good friends, but this was the first project we worked on together. So I am glad that it ended up very well. Then there were a lot of other people to deal with.

I would also like to mention the core implementation and production team, Patrick Muller and Markus Lerner, who did a really great job here. Big up, guys!

There were also the sound engineers from the MDR studio, the technicians, the conductor David Timm, the orchestra and choir, and of course the people from the Mendelssohn-Haus museum itself.

Thanks, Martin! I was a Mendelssohn fan before ever even spotting a computer, so I have to say this tickles my interest in technology and Classical music alike. Time for a trip to Leipzig. Check out more:

“Mendelssohn Museum – Effektorium”