Imagine the powers of motion effects – but with the ability to control all of them, parameter by parameter, and use assets dynamically without only rendering video. From artists and VJs to big events, that’s significant. CDM’s Ted Pallas breaks down Notch in a review for the real world. -Ed.

I’ve been wanting to write this article for a long time.

My creative life as a video designer has historically been driven by access to computer hardware. My process is like a liquid; after a period of splashing around, it fills as much of its container as it can. Typically, the considerations for this kind of artmaking are threefold:

- How many cores you got, and how fast are they? Not enough, and kind of eh, I use laptops.

- How much RAM? Rarely enough to hold all the videos I want to play.

- How about hard drive space? Also rarely enough for those videos.

In the past, traditional video design for live music and events has overwhelmingly relied on rendered video content. The VJ or designer cues up a video, and at the appropriate moment, it gets played back. There might be some effects put on top, and maybe the effects are driven live with input controls or audio reaction. But at the end of the day, there’s a reliance on pre-baked frames.

These frames don’t just represent content, they represent weight – they are often huge, they can take a long time to render, and they can take a lot of horsepower even for playback. As resolutions like 1080p or 4K or greater have become the norm, render times have grown. Before I started using Notch in December of 2017, I had an especially hard time putting out 3D renders fast enough to keep up with making what I wanted to make. Being able to see a finished frame sequence goes a long way towards helping to bridge a lot of difficult-to-onboard 3D knowledge.

Notch gives me the ability to see finished frames very, very quickly, in the most flexible ways imaginable. Before I dive into why Notch is an irreplaceable pipeline tool, though, I want to spend a bit of time framing what Notch is all about.

Notch is a real-time visual effects authoring and rendering engine, with a compositing engine neatly fitted on top of each to help glue them together. Its use cases vary as widely as you could imagine. And there’s a lot inside. Notch boasts:

- a 3D engine with import for all major formats, notably FBX [Autodesk Filmbox] and [Sony Pictures/Lucasfilm] Alembic.

- a built-in system for generating primitives.

- a set of deformers for manipulating the primitives and imported 3D assets.

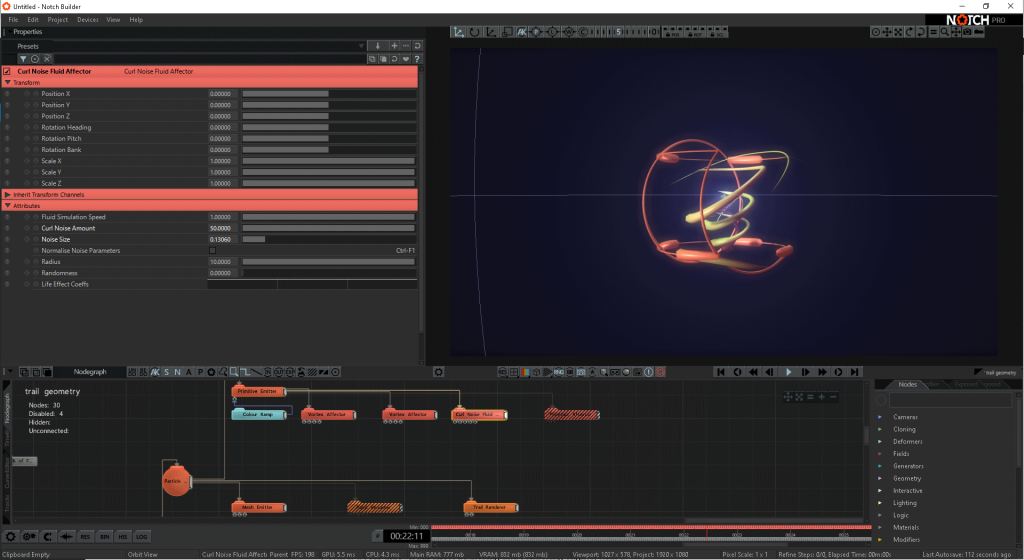

- a particle engine — and you can do a very wide range of effects with it, from paint smears to holograms.

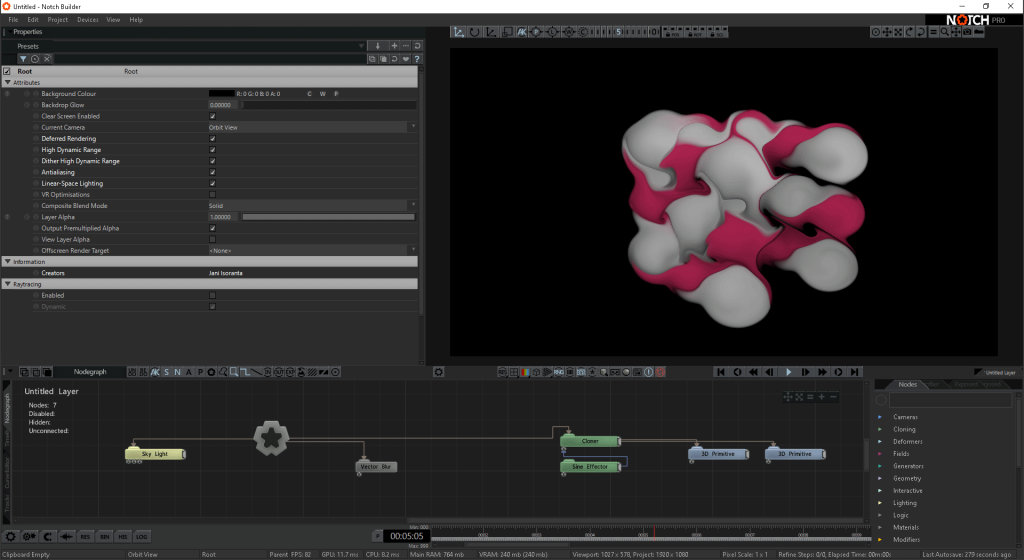

- a cloner system, one that rivals Cinema 4D’s [Maxon] MoGraph suite, with a similar cloner-effector system for manipulating up to hundreds of thousands of geometry instances.

- a node Notch calls a field, which is a grid of voxels; used in a larger system of nodes, fields can make volumes that resemble clouds or smoke.

- a procedural geometry system with bools and a variety of renderers, for when you need to get very detailed about how you generate geometry in real-time. Here you can make even more detailed clouds.

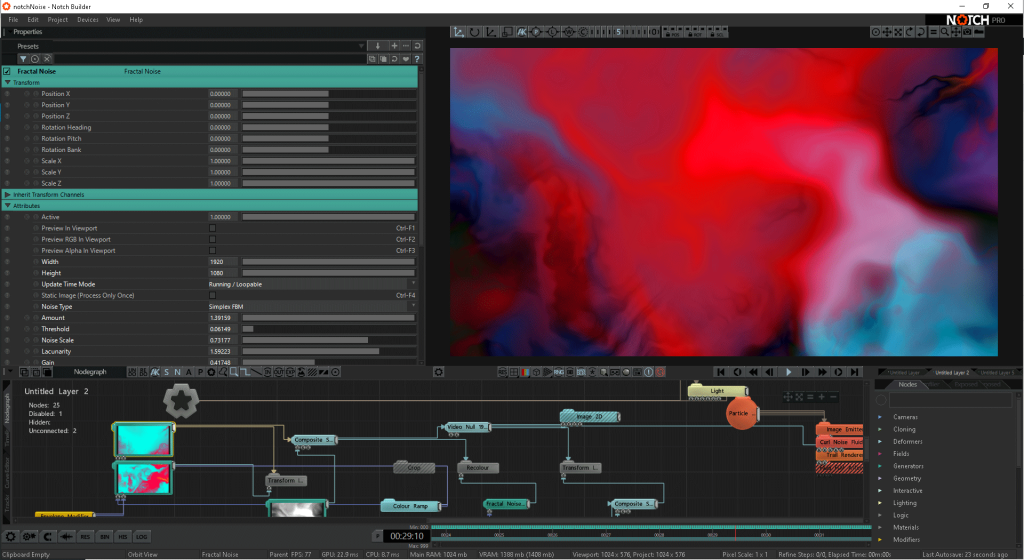

- a full compositing engine, ready for all of the traditional comping techniques you’d use in [compositing tools like] Flame or Nuke, as well as more radical processing techniques like you’d find in a VJ set.

All of the items listed above can be hooked up to a full set of modifiers for parametrized control, allowing for essentially unlimited arbitrary control scenarios to be fulfilled with minimal stress.

Wait, I’m going to repeat that last bit: all of the items listed above can be hooked up to a full set of modifiers for parametrized control, allowing for essentially unlimited arbitrary control scenarios to be fulfilled with minimal stress. And then it can output that work in a wide variety of formats – not only file formats but different kinds of results. In addition to outputting finished video from Notch Builder, the software can produce a finished application or a block for a media server. The application is what it sounds like – it’s an EXE that outputs your Notch file to the screen you choose at launch, at a resolution you also set at launch. Input modifiers can be exposed to Art-Net, DMX, MIDI, or OSC. My preference, though, is the media server block.
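To make the idea of an exposed input concrete, here is a minimal sketch of what driving one over OSC could look like from the outside. It hand-encodes an OSC 1.0 message with Python's standard library and sends it over UDP. The address `/text_color`, the host, and the port are hypothetical placeholders, not names Notch defines; whatever your own project exposes is what you would actually target.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    raw = text.encode("ascii") + b"\x00"
    return raw + b"\x00" * (-len(raw) % 4)


def osc_message(address: str, *values: float) -> bytes:
    """Build an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)  # e.g. ",fff" for three floats
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian float32
    return osc_string(address) + osc_string(type_tags) + payload


def send_osc(host: str, port: int, address: str, *values: float) -> None:
    """Send one OSC message over UDP (fire-and-forget, no reply expected)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, *values), (host, port))
```

Calling `send_osc("127.0.0.1", 8000, "/text_color", 1.0, 0.5, 0.0)` would push an orange-ish RGB triple at whatever is listening on that (assumed) port. In practice a lighting desk, a MIDI controller, or the media server itself usually plays this role.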

Media server blocks are magic. You know what sucks? When you’ve rendered out a video for a client, it’s almost showtime, and they decide they want a small change. If this were a PowerPoint presentation or VJ set, this change would be no big deal – changing the text to a client’s favorite color, for example. But if you’re going to have to render a few thousand frames of high-res video, you have to calculate how much time you’ve got before your show and how long it’ll take to render, and then make a call. Sometimes you’ll wind up with a whole stack of change requests, and need to incorporate all of them, re-render … and wait. Everything stops while that render is chugging along.
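That "make a call" moment is just arithmetic, and it can be sketched in a few lines. This is a back-of-the-envelope helper, not anything from Notch or a render farm: the per-frame render time and the safety margin are numbers you would have to measure or choose yourself.

```python
def render_minutes(frame_count: int, seconds_per_frame: float) -> float:
    """Estimated wall-clock render time in minutes for an offline render."""
    return frame_count * seconds_per_frame / 60.0


def can_rerender(
    frame_count: int,
    seconds_per_frame: float,
    minutes_until_show: float,
    safety_margin_minutes: float = 10.0,  # assumed buffer for transfer and checks
) -> bool:
    """True if the re-render (plus margin) fits in the time left before the show."""
    needed = render_minutes(frame_count, seconds_per_frame) + safety_margin_minutes
    return needed <= minutes_until_show


# Hypothetical numbers: 3,000 frames at 2 seconds each is 100 minutes of rendering.
print(render_minutes(3000, 2.0))    # 100.0
print(can_rerender(3000, 2.0, 90))  # False: 110 minutes needed, 90 available
```

With a stack of change requests the frame count stays the same but the clock keeps running, which is why the answer is so often "no."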

With Notch blocks, literally none of this baloney is an issue. I can expose the text color – and literally any other parameter, in what are so far limitless quantities (I’ve never topped it out). This means when someone asks for a change, I can author it inside the media server. It’s the same as being able to reach inside a finished video and poke its After Effects file. If a parameter is not exposed, it rarely takes more than twelve minutes to bake an exceptionally large block and another seven to slurp it into the server. (Shoutout to Raul Herrera at Worldstage, Inc for learning this with me on-site in Vegas last summer.)

This “maximum of half an hour” to slurp in a new block once the change is authored transforms the entire production process. You can check a frame and see how it will look in its final onstage context. Anything you see in a given moment you can imagine as final.

With Notch, it’s very reasonable to think about what’s in your frame at any given moment as a potential final frame, and then if you have the equipment on hand, go review the frame in the context of its final look on stage. For instance, Notch is terrific at ingesting files from [SideFX] Houdini and letting me turn those assets into finished content — without stopping for offline rendering.

To me, Notch would be worth it for this functionality alone (authoring elsewhere, then adapting assets for onstage use), even if it did nothing else. But it’s also a very expressive authoring engine itself.

Everybody loves particles, for example. They are the analog synth of visual effects; they can look like many many many things, and are often fun to drive. Notch makes it uncommonly easy to break down a particle system into frames in your viewport. It’s all right there, and running in real-time, in a way that’s hard to match in other software. While something like Houdini’s FLIP [fluid simulation] or smoke simulation isn’t quite in the cards, you can get very close to many of these same looks with the same set of algorithms that underpins these larger simulations. If you’ve always wanted to make crazy particle effects, but you hate waiting for renders and you didn’t get into TouchDesigner, you need to check out Notch as soon as you can.

If you want to make clones, or field volumes, or procedural geometry, or deformed arrangements of primitives, or composites in 2D space from combinations of generators and video inputs, assume the systems are just as thoughtful as the ones designed for particles. Notch really is all about finished work – the systems themselves are transparent, and require less schooling before you get started than any similarly capable tool.

I’d like to talk a bit more about getting external geometry into Notch. For my VFX people – “it can slurp in Alembics, and deal with UVs that change per frame.” For everyone else, this means Notch is capable of hosting effects that are traditionally rendered with cinema-grade techniques. I’ve gotten 12 minutes of animation, distributed over four simultaneous files for a total of 64ish GB of data in the scene, to play back at 60 FPS, lit and textured, on an [NVIDIA] GTX 1080.
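For a rough sense of scale, those figures can be turned into an average data load. This is only a sketch under loud assumptions: that the 64 GB (treated as decimal gigabytes) is consumed evenly across 12 minutes of playback, which says nothing about how Notch actually caches or streams geometry.

```python
def average_load(total_gb: float, minutes: float, fps: float) -> tuple[float, float]:
    """Return (MB per second, MB per frame), assuming data is consumed evenly."""
    seconds = minutes * 60
    frames = seconds * fps
    total_bytes = total_gb * 1e9  # decimal gigabytes (assumption)
    return total_bytes / seconds / 1e6, total_bytes / frames / 1e6


mb_per_second, mb_per_frame = average_load(total_gb=64, minutes=12, fps=60)
print(f"{mb_per_second:.1f} MB/s, {mb_per_frame:.2f} MB per frame")
# 88.9 MB/s, 1.48 MB per frame
```

That is 43,200 frames, each with roughly a megabyte and a half of fresh animated geometry behind it, on a single consumer GPU.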

Notch can also take in FBX files (if textures are available to the system they will be associated on import), and animate skeletal FBX rigs. You can, for example, hook Notch up to a motion capture suit and animate your character that way. It’s even easy to do – now all that is stopping you is money.

There’s no getting around it – a Notch-based lifestyle requires a certain amount of commitment. It’s not cheap software – £99 a month to render finished video, £189/month to output media server blocks, £50/week to run finished applications (Playback Leasing license, which lets you move between jobs on a USB license).

See the full pricing below. There’s also a limited-time £99, non-production artists’ “Learning” license, which for now is on sale for £59. (A free trial is also available.)

https://www.notch.one/pricing/

However, let me be clear on one thing. My studio, Savages, is as indie as it gets, and even we find Notch to be the most valuable asset in our toolkit. There’s nothing like being able to render video fast, there’s nothing like being able to change content in a server, and there’s nothing like being able to quickly and elegantly prototype interactive visual effects. For all of these features to exist in one piece of software is intense, and the value even at $250/month is honestly unmet by any other software tool I’m aware of.

If the triple-threat (render, change, author) isn’t valuable to you, that’s ok – Notch might not be for you. If you make a living pushing pixels, or want to make a living pushing pixels, or just want to push pixels very quickly, it’s hard to compete with the level of service on offer with Notch. Even as I’m writing this during COVID-19 and all my jobs are canceling, it’s hard to imagine going without the dongle.
