Imagine a real-time Web for 3D, complete with ray-tracing and xR/AR support, based on open standards from Pixar and others. That’s the basic notion of Omniverse, the latest in NVIDIA’s campaign this year to both wow us and make us buy their GPUs.

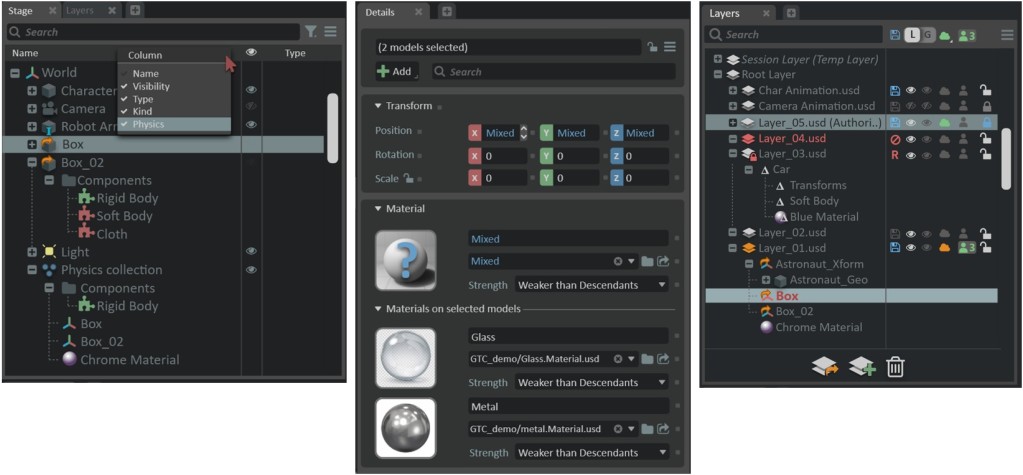

The key to Omniverse is that open standards for describing a scene – including Pixar’s Universal Scene Description (USD, which sounds like money to me) – could be used to construct a shared 3D world that multiple parties can update in real-time. That means the early pitch for this thing is all about sharing a space for production. But given who some of the parties are, it could open up new creative and artistic applications, too.
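Part of what makes USD a fit for multiple parties editing one world is that a scene can be assembled from a stack of layers: each contributor’s changes can live in a separate layer, and opinions in stronger layers override weaker ones. The Python below is a deliberately simplified toy model of that idea, not Pixar’s or NVIDIA’s actual API. Each hypothetical "layer" is just a dict from (prim path, attribute) to a value, and real USD composition has many more rules than "strongest layer wins."

```python
from typing import Dict, List, Tuple

# Toy stand-in for a USD layer (hypothetical, NOT the real pxr API):
# maps (prim path, attribute name) to this layer's opinion about the value.
Layer = Dict[Tuple[str, str], object]


def compose(layers_strongest_first: List[Layer]) -> Layer:
    """Flatten a layer stack into one resolved scene.

    Layers are ordered strongest first, as in a USD subLayers list. For each
    attribute, the strongest layer that has an opinion about it wins.
    """
    resolved: Layer = {}
    # Apply weakest to strongest so stronger opinions overwrite weaker ones.
    for layer in reversed(layers_strongest_first):
        resolved.update(layer)
    return resolved


# Three hypothetical collaborators editing the same ball, each in their own layer.
base = {("/World/Ball", "radius"): 1.0, ("/World/Ball", "color"): "grey"}
modeler = {("/World/Ball", "radius"): 2.0}
shading = {("/World/Ball", "color"): "red"}

scene = compose([shading, modeler, base])
print(scene)
# {('/World/Ball', 'radius'): 2.0, ('/World/Ball', 'color'): 'red'}
```

Because nobody overwrites anyone else’s layer, the base asset stays untouched; reordering the list changes whose opinion wins.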

There are five parts to this project:

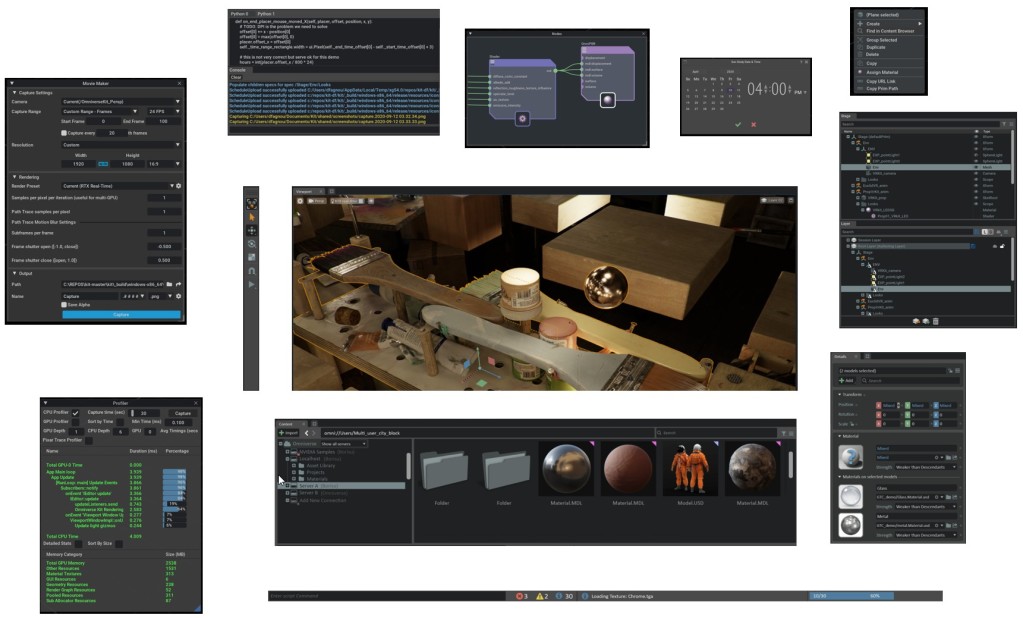

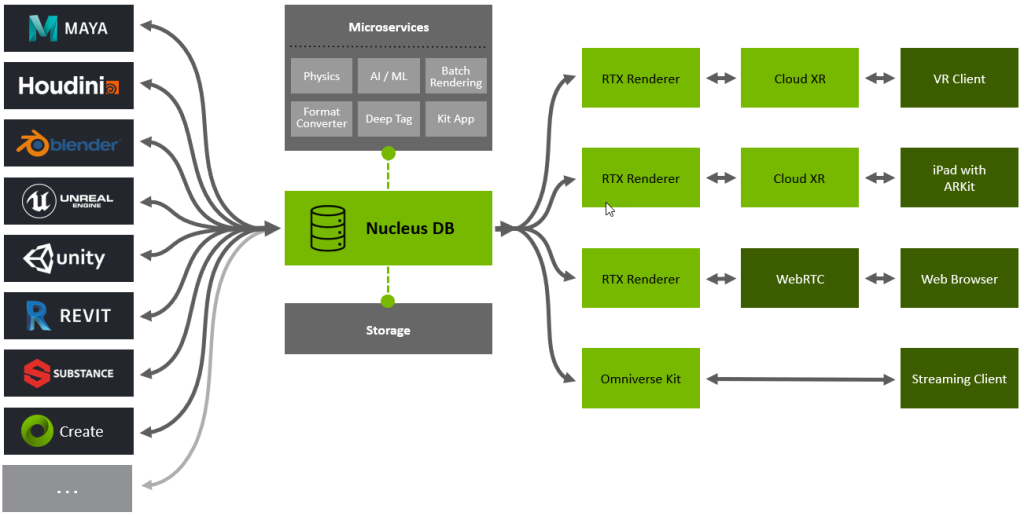

- Connect = the bit that glues together other content tools (from Unreal Engine to Rhinoceros to Photoshop)

- Nucleus = an online set of database-powered services that enable real-time interaction and sharing of this world

- Kit = a set of developer blocks for building your own tools

- Simulation = built on a number of existing technologies, this adds physics, body and fluid dynamics, and so on to the standardized schema for interchange

- RTX = the really NVIDIA-ish bit, which lets you use their GPUs for high-quality rendering

The shared production need is clear. Collaborating on an audio project is a mess of shared files. In 3D, it’s a straight-up nightmare – a tangled mob of assets from different tools that you have to slowly export, then import, then render… making actual collaboration a royal pain. And collaboration is essential, given the complexity of working in 3D worlds.

Here’s a perhaps left-field prediction about that. Even though this is focused on industry for now, I can imagine that more collaborative spaces would make 3D more democratic and allow more collective action by artists and other groups. Not to mention, a lot of these tools are free.

Connectivity is the key with Omniverse. There’s the scene description (Pixar’s open source USD), the materials that define what that scene is made of (NVIDIA’s open source Material Definition Language, MDL), and then the ability to connect a ton of apps live, in real-time. That includes artist-friendly tools like the free or free-ish Unity, Unreal, and Blender, plus Maya and Houdini.
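For a sense of what "scene description" means in practice, USD has a human-readable text form, usually saved with a .usda extension. The sketch below uses only the Python standard library to emit a minimal one: a single sphere under a /World transform, with a display color. The prim names and values are arbitrary examples; the MDL material side isn’t shown, and nothing here is specific to Omniverse.

```python
from typing import Tuple


def sphere_scene_usda(name: str, radius: float, rgb: Tuple[float, float, float]) -> str:
    """Return a tiny USD scene in .usda text form: one sphere under /World."""
    r, g, b = rgb
    lines = [
        "#usda 1.0",
        "",
        'def Xform "World"',
        "{",
        f'    def Sphere "{name}"',
        "    {",
        f"        double radius = {radius}",
        # displayColor is a built-in primvar USD viewers use for a simple color.
        f"        color3f[] primvars:displayColor = [({r}, {g}, {b})]",
        "    }",
        "}",
        "",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(sphere_scene_usda("Ball", 2.0, (1.0, 0.0, 0.0)))
```

Saved as, say, ball.usda, a file like this should open in USD-aware tools such as Pixar’s usdview, assuming those tools are installed.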

Or one way to think of it: it’s Git for 3D artists.

But I think this could have potential beyond production, as we begin to work in collaborative spaces in 3D. Of course, Omniverse supports NVIDIA’s own RTX renderer. But it can also work with WebRTC in a browser, on an iPad (with ARKit), or with a VR client.

And NVIDIA are hoping artists use it, too. They’re already using Omniverse to show off Omniverse Machinima, which turns the whole platform into a storytelling tool for creative folks.

Machinima is a word I haven’t heard for a while. Nice that it’s come back. See more:

https://www.nvidia.com/machinima/

You might not see Omniverse yourself as an artist or consumer, but it could enable the applications you use. (Anyone remember those old BASF ads? We don’t make the VR tools you use; we make the VR tools you use better.) These toolkits tackle some of the more difficult problems in moving 3D from the individual client model to something shared – essential both to the people making 3D worlds and to the people using the games and tools that inhabit them.

Here’s the 2019 keynote that introduced the idea:

And here’s the new 2020 keynote – as this project has come a long way and now nears release:

Plus their talk from SIGGRAPH 2020:

https://developer.nvidia.com/siggraph/2020/video/sigg05

For users, there’s an open beta with a download coming “this fall” – no other application needed:

https://www.nvidia.com/en-us/design-visualization/omniverse/#ominiverse-notify-me

For developers, NVIDIA promises an SDK it calls Omniverse Kit, with support for Python and C++. Then again, you might not even need that, since both Unity and Unreal Engine are on board as partners. For now, you’ll have to wait – NVIDIA is teasing us a bit, with only an online application for limited early developer access. It’s unclear how far along the SDK is; I don’t have it yet. Nicely enough, there’s even a UI framework built on Dear ImGui, which lets you describe inputs using breezy code and styles.

All of this means that not only are 3D worlds connected, but they also become agnostic about UI – working with xR, VR, AR, and various inputs. That isn’t just acronym compliance; it looks increasingly likely that we’ll interact with machines via various controllers (conventional and 3D), hands, and eyes. While some of Facebook’s AR work raises privacy concerns, I’d hasten to add that this could also vastly improve accessibility for people who can’t use traditional computer inputs. Our computer world is horribly ableist.

And at a time when musicians are crowding into weird hacked Minecraft servers, yeah, maybe shared 3D environments could look futuristic. Maybe they don’t have to range in quality from blocky pixels up to Second Life rip-offs.

Of course, if you suspect there must still be some hook for NVIDIA to use this to convince you to buy one of their GPUs, you’re right. So while they do support the iPad, you also get multi-GPU render support, Pixar’s Hydra architecture, and big RTX rendering with ray tracing. (Translation: use NVIDIA cards and get pretty pretty results.) It’s not just that, though – NVIDIA’s PhysX is there, too, so fluid dynamics and the like are included as well. I wonder if we’ll see competing microservices if this catches on; now that AMD is besting Intel in the CPU area and Apple is making all its own silicon, being platform-agnostic has a lot of appeal.

What fascinates me is that high-end architecture and simulation are starting to overlap with underground indie games and art. That’s exciting, if we can work out how to take advantage of it without melting our brains. At least now we can do it at the price of what had been mid-range gaming enthusiast hardware, which is totally mind-blowing. Just a couple of short years ago, any of this stuff required $10,000+ boxes.

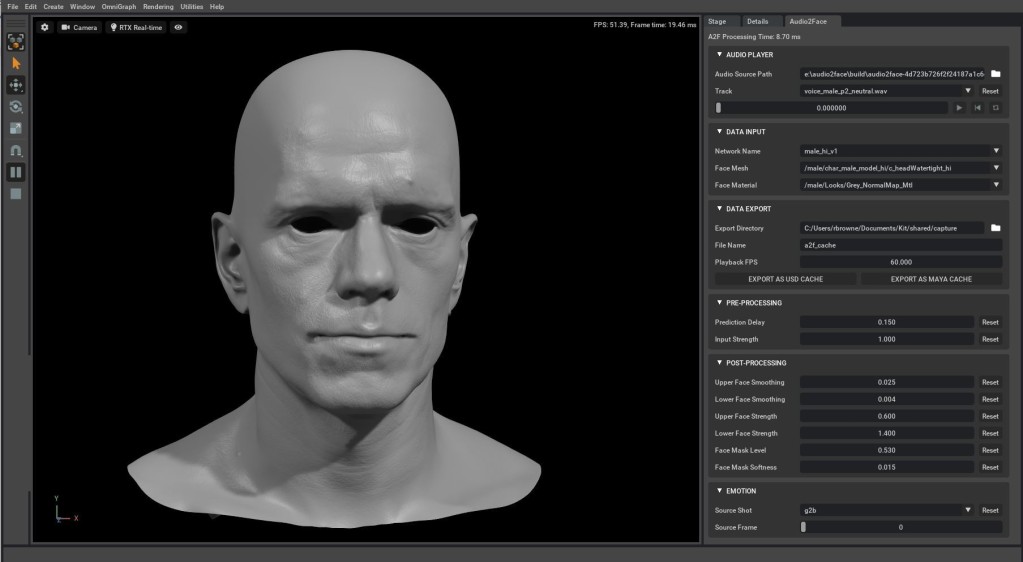

But yes, this also means you can hook up audio to a face.

Project page:

https://developer.nvidia.com/nvidia-omniverse-platform

News: