Microsoft may be running flashy ads that show imagined applications of 3D computer vision, but using technology like Microsoft’s own Kinect, hackers are making sci-fi reality, right now. Art && Code, held in Pittsburgh, Pennsylvania, was an epic gathering of artists and hackers, hackers-become-artists and artists-become-hackers. It was an extraordinary convergence of learning and making, workshop and hacklab, technical brain dumps and creative experimentation. Special to CDM, Sofy Yuditskaya brings us a number of highlights. For her part, Sofy herself presented how to make the Kinect camera work with the free software graphical multimedia environment Pd, something I hope we’ll look at more soon. Here’s what Sofy brought back from the conference, so you can see what people are doing and learn yourself. -PK

Last month, Carnegie Mellon University held its now-annual conference/festival Art && Code, and this one was in 3D!

Lovingly organized by Golan Levin and a core group of administrators, the gathering created a supportive and productive space for artists, students, hackers, makers, researchers & educators, and corporate developers to have a conversation. Props to everyone involved.

I came in on Thursday at the end of the hackathon preceding the main event. The power of structured light scanning was part of Elliot Woods’ and Kyle McDonald’s Calibrating Projectors and Cameras workshop, documented on an extensive wiki:

4A: Calibrating Projectors and Cameras: Practical Tools

There was a very universal performance by Jason Levine using Kinect tracking, beatboxing and throat singing:

jasonlevine.ca

A media archeology foray into the mysteries of the Maréorama by Erkki Huhtamo was another highlight. Live piano accompaniment by Stephen L. I. Murphy incorporated some little-known and totally enchanting compositions made specifically for the installation by Henry Kowalski. (There’s a private video, available directly via the University of Chicago’s Film Studies Center site.)

Even Jonathan Minard’s documentation used a hack, shot with a rig set up for merging Kinect tracking and DSLR footage, as constructed by James George. [Photo above.]

The presentations were broadcast live, in anaglyphic 3D as point cloud data, set up by Joel Gethin Lewis and other festival-as-laboratory participants. [Photo above.] Ed.: I hear this wound up being a major focus of the hack sessions, getting this working – so creators, would love to talk to you more about this! -PK

Of those available online, here are my favorite speed presentations:

Kyle Machulis

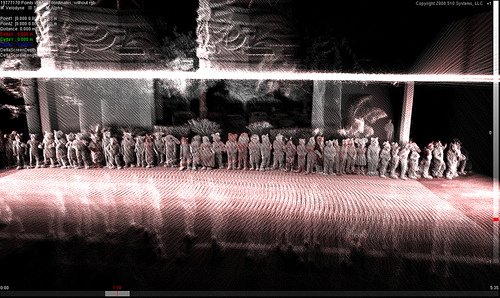

FC 2009 Point Cloud

Using 3D scanning equipment originally meant for massive geographic scale (cities and larger) surveying to take a scan of 512 fursuits during the Further Confusion 2009 convention. Resulting data released as open source.

Toby Schachman

Time Travelers

Time Travelers is an interactive video mirror installation.

A monitor shows a still image. As the user approaches the piece, her three dimensional form emerges on the screen as a subtle distortion in the image. Upon further exploration, we discover that the image is a time-lapse video, and that the user’s distance from the piece is reflected as a time warp in the video.

Time Travelers uses the Kinect 3D camera to capture a depth image of the viewer. This depth data is mapped pixel-by-pixel to time on a source video. The closer the viewer is to the camera, the later the moment in the video that is shown.

Time Travelers was created in openFrameworks. Source code is available on GitHub.
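To make the depth-to-time idea concrete, here is a minimal sketch in plain Python. It is not taken from the project's openFrameworks source. The function names, the working range of 500 to 4000 mm, and the list-of-lists image layout are all assumptions made for illustration.

```python
def depth_to_frame_index(depth_mm, near_mm, far_mm, frame_count):
    """Map one depth reading to a frame index: nearer means later in the video."""
    # Clamp so readings outside the assumed working range still give a valid frame.
    clamped = max(near_mm, min(far_mm, depth_mm))
    # 0.0 at the far limit, 1.0 at the near limit.
    closeness = (far_mm - clamped) / (far_mm - near_mm)
    return round(closeness * (frame_count - 1))


def time_warp(depth_image, frames, near_mm=500, far_mm=4000):
    """Build one output image, taking each pixel from a depth-selected frame.

    depth_image: rows of depth readings in millimetres (hypothetical layout).
    frames: list of images with the same dimensions as depth_image.
    """
    output = []
    for y, depth_row in enumerate(depth_image):
        out_row = []
        for x, depth_mm in enumerate(depth_row):
            t = depth_to_frame_index(depth_mm, near_mm, far_mm, len(frames))
            out_row.append(frames[t][y][x])
        output.append(out_row)
    return output
```

With a still background at the far limit, every pixel reads from the first frame, so the monitor shows a still image. As someone steps closer, only the pixels covering their body jump ahead in the time-lapse, which is why their form appears as a distortion.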

Asa Foster and Caitlin Rose Boyle

We Be Monsters

Created in Processing for Golan Levin’s Interactive Art & Computational Design 2011 Spring Semester course at Carnegie Mellon University

by Caitlin Rose Boyle :: www.sadsadkiddie.com :: twitter.com/#!/rattusRose

& Asa Foster :: www.fosterthree.com :: twitter.com/#!/foster_three

Music :: “Chocolate Milk” – The Two Man Gentleman Band, from “Dos Amigos, Una Fiesta!”, Serious Business Records, 2010

Christopher Coleman

w3fi

The W3FI is a social movement, a philosophy, a path to responsible connectivity between our online/offline lives and to each other.

Every day we find more and more of our lives integrated in the digital world, no longer able to lead separate lives, one virtual and one real. This means that we have to take control of and responsibility for how others see and relate to us in the digital world. We are proposing a new philosophy, a new strategy for our online interactions. It is called “W3FI,” a combination of WiFi, the word “we” and the slang use of the number 3 in place of the letter “e” to reference the digital parts of our lives. Currently people already consider WiFi to be a sort of invisible shared connection that is all around us and shifting it to “We” indicates a new awareness of how interconnected we really are online. The W3FI project is much more than an awareness campaign, it is a movement in social activism to ask a new set of questions for each of us every time we click, text, or share a photo.

http://digitalcoleman.com/#1689034/W3FI

Caitlin Morris

ofxOpenCV + Grasshopper

Frame differencing in openFrameworks, based on ofxOpenCv and ofxOsc add-on examples. Amount of motion from frame to frame is translated to aperture size in a basic Grasshopper model. Ed.: Heh: for the 99% of you who didn’t get that, watch the video! -PK

http://a.parsons.edu/~morrc963/canopy.html
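For readers who want the gist of frame differencing without opening openFrameworks, here is a rough sketch in plain Python. It is not the project's code. The threshold, the aperture range, and both function names are assumptions, and the sketch stops before the step where the value is sent on to Grasshopper.

```python
def motion_amount(prev_frame, curr_frame, threshold=30):
    """Return the fraction of pixels whose brightness changed by more than threshold.

    Frames are rows of grayscale values (0-255); the threshold is an assumed value.
    """
    changed = 0
    total = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for prev_px, curr_px in zip(prev_row, curr_row):
            total += 1
            if abs(curr_px - prev_px) > threshold:
                changed += 1
    return changed / total if total else 0.0


def aperture_from_motion(motion, min_aperture=0.1, max_aperture=1.0):
    """Linearly map a motion fraction in [0, 1] to an aperture size (assumed range)."""
    motion = max(0.0, min(1.0, motion))
    return min_aperture + motion * (max_aperture - min_aperture)
```

Each new camera frame is compared with the previous one, so a still scene keeps the aperture at its minimum and more movement opens it further.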

Nick Hardeman

Follow This

Follow This! is a Twitter-based first-person shooter game utilizing the Microsoft Kinect camera. The game gives you Gatling gun arms that allow you to pulverize Twitter birds.

Thanks for all the help from the OF community!

http://nickhardeman.com/460/follow-this/

Molmol Natura

Building a sculpture with Kinect IR structured light

with openFrameworks and meshlab

CNC routing

Ed.: Translation – a scan from the 3D real world is translated to digital and translated to the 3D real world. Replicators have arrived. -PK

http://www.mamanatura.tv/blog/?p=749

[warning: that Vimeo video autoplays, and the drill is loud. Do you know how often “autoplay” is the right choice? Never amount of the time. -Ed.]

James George

DepthEditorDebug

In mid-2005, six weeks after the tragic subway and bus bombings in London, New York’s Metropolitan Transportation Authority (MTA) signed a contract with the high-tech defense and military technology giant Lockheed Martin. Lockheed Martin promised the MTA a high-tech surveillance system driven by computer vision and artificial intelligence systems. The security system turned out to be vaporware and the contract collapsed under lawsuits. As a result, thousands of security cameras in the New York subway stations sit unused.

It is in this technological atmosphere that we chose to collaborate. We soldered together an inverter and motorcycle batteries to run the laptop and Kinect sensor on the go. We attached a Canon 5D DSLR to the sensor and plugged it in to a laptop. The entire kit went into a backpack.

We spent an evening in the New York Union Square subway capturing high-resolution stills and archiving depth data of pedestrians. We wrote an openFrameworks application to combine the data, allowing us to place fragments of the two-dimensional images into three-dimensional space, navigate through the resulting environment and render the output.

http://www.jamesgeorge.org/works/deptheditordebug.html

Eric Mika

Überbeamer

The überbeamer is a means of making any surface writeable. It was created by Eric Mika as an ITP Thesis project in the Spring of 2011. Development is ongoing.

David Stolarsky

SwimBrowser is a freehand web browser, powered by Kinect.

The following features are implemented:

– Clicking hyperlinks

– Zooming in and out

– Panning

– Scrolling

– Choosing a text field

– Opening a new tab

– Opening a menu (e.g. favorites) and selecting from it

– Going back/forward

Shawn Lawson

Operation Human Shield

Operation Human Shield is a re-humanization of Missile Command. The original game had players shoot down missiles that were on a trajectory to destroy the player’s cities or bases. Subsequent iterations of Missile Command have changed styles, thematic opponents, and attacking/retaliation technologies. My variation puts the player into the game and forces them to use their body to deflect/swat away incoming missiles. The integration of the body references the real-world use of civilian human shields. While this war tactic is illegal for any nation that is party to the Fourth Geneva Convention (approximately 195 countries), the practice of human shields is still in use today.

http://www.shawnlawson.com/#operationhumanshield.html

Shawn Sims

roboScan

roboScan is a 3D modeler + scanner that uses a Kinect mounted on an ABB4400 robot arm. Motion planning and RAPID code are produced in Robot Studio and Robot Master. This code sends movement commands and positions to the robot as well as the 3D position of the camera. C++ and openFrameworks are used to plot the depth data of Kinect in digital 3D space to produce an accurate model of the environment.
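As a rough illustration of how depth pixels can be plotted in 3D once the camera position is known, here is a sketch in plain Python using a simple pinhole camera model. It is not the roboScan code, which is C++ and openFrameworks. The intrinsic values (fx, fy, cx, cy) are placeholders, not calibrated Kinect figures, and the sketch assumes the camera stays aligned with the world axes, so only its position is applied. A camera on a real robot arm would also need its rotation.

```python
def depth_pixel_to_world(u, v, depth_m, camera_position,
                         fx=580.0, fy=580.0, cx=320.0, cy=240.0):
    """Back-project pixel (u, v) with depth in metres, then offset by camera position.

    fx, fy, cx, cy are assumed placeholder intrinsics, not measured values.
    """
    # Pinhole model: offset from the image centre scales with depth.
    x_cam = (u - cx) * depth_m / fx
    y_cam = (v - cy) * depth_m / fy
    z_cam = depth_m
    # Translation only: the camera is assumed not to rotate relative to the world.
    px, py, pz = camera_position
    return (x_cam + px, y_cam + py, z_cam + pz)


def scan_to_points(depth_image_m, camera_position, **intrinsics):
    """Convert one depth image into a list of world-space points."""
    points = []
    for v, row in enumerate(depth_image_m):
        for u, depth_m in enumerate(row):
            if depth_m > 0:  # skip pixels with no reading (assumed to be encoded as 0)
                points.append(
                    depth_pixel_to_world(u, v, depth_m, camera_position, **intrinsics)
                )
    return points
```

Because every scan is expressed in the same world coordinates, points captured from different arm positions can be appended to a single list to build up a model of the environment.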

This work was done by Shawn Sims and Karl Willis as the first project of the research group Interactive Robotic Fabrication. This project was also presented in Golan Levin’s sp2011 course Interactive Art + Computational Design. The facilities of the Digital Fabrication Lab in the School of Architecture at Carnegie Mellon were used in the making of this project.

Karl Willis http://www.darcy.co.nz/

CMU-dFab http://cmu-dfab.org/

CoDe Lab http://code.arc.cmu.edu/

IACD sp2011 Golan Levin http://golancourses.net/2011spring/

http://sy-lab.net/#1102351/roboScan

Thanks to Sofy for sharing this. If you were at Art && Code and want to share more of your own work, tips, code, art, or favorite moments from the event, get in touch.