Even as NVIDIA has a keynote with simulated robots making the rounds of a BMW factory, some of the GPU giant’s latest news brings industry- and enterprise-grade tools to artists, too. That could also prove relevant as the pandemic has folks looking for work.

Music and live visual work mean one thing – stuff has to happen live. And so that makes these chips more interesting. It means that fundamentally what musicians and artists do, which is to work with materials live in time, now matches up with the way graphics (and AI) chips work. Since they’re crunching numbers faster, that opens up the ability to create “liquid” interfaces. (That was the concept interactive visionary and legend Joy Mountford introduced years ago in a talk we had together on the South by Southwest stage, and it’s stuck with me.)

Now, I won’t lie, some of this is awaiting GDC, the game dev event. That’s because when you don’t have a BMW factory-sized budget, the punk-style approach of gaming has a ton of appeal. (You can actually see a bunch of gamers complaining on YouTube, I think misunderstanding that this is not a gaming keynote, that the gaming news is coming later, and that NVIDIA has always had workstation customers. But we know gaming trolls are not the most reasonable folks, and I for one enjoy watching Mercedes-Benz simulate the Autobahn. It’s the Kraftwerk in me. GTA: Normal German Life Simulator. The Edeka parking lot is off the chain.)

But having listened to NVIDIA talk about their new offerings, I think there’s more here than just pro and enterprise applications and the usual workstation / gaming PC divide.

Follow along as there is a ton of geeky machine learning, metaverse, Omniverse, and 3D artistry stuff coming – https://gtc21.event.nvidia.com/

Omniverse

The big pillar here that impacts audiovisual creation is Omniverse. I wrote previously about this connected platform for collaboration and exchange of all things 3D, built on open tools like Pixar’s very own USD file format (also a subtle hint that y’all can make bank with this stuff).

This week, we get a lot of the questions answered about where NVIDIA was going strategically.

But yeah, if you’re wondering if this could allow audiovisual artists and musicians to connect to big-budget projects – at a time when even the shows you watch at night (Mandalorian, cough) are made with these tools? You bet.

First, the most exciting detail for me was a commitment that Omniverse for individuals and artists will always be free – meaning anyone can get at this platform. That also means that individual 3D artists and AV creators can play with big industry – so it’s a source of gigs.

Also, the Omniverse pricing is not astronomical for those “enterprise” use cases. A small team can buy into it at a per-seat license of $1,800 a year, plus a $25,000 cost for a Nucleus server. That’s within reach of interactive and design shops, and it seems NVIDIA may work to accommodate even smaller use cases beyond that.
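To put those numbers in studio terms, here is a rough back-of-the-envelope sketch. It uses only the two figures quoted above; the function name is made up for illustration, and treating the $25,000 server cost as a single charge added on top of one year of seats is my assumption, since the pricing details beyond those two numbers aren't spelled out here.

```python
# Rough budgeting sketch using the two figures quoted in the article.
# Assumption: the server cost is counted once, alongside one year of seats.
SEAT_PRICE_PER_YEAR = 1_800    # USD per seat per year (from the article)
NUCLEUS_SERVER_COST = 25_000   # USD (from the article)


def first_year_cost(seats: int) -> int:
    """Hypothetical helper: total for one year of seats plus one server."""
    if seats < 1:
        raise ValueError("need at least one seat")
    return seats * SEAT_PRICE_PER_YEAR + NUCLEUS_SERVER_COST


if __name__ == "__main__":
    for seats in (3, 5, 10):
        total = first_year_cost(seats)
        print(f"{seats:>2} seats: ${total:,} total, ${total / seats:,.0f} per seat")
```

The per-seat figure drops quickly as the team grows, because the server cost dominates for very small shops.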

I expect NVIDIA may even be underestimating the demand from those individuals – partly because as their tools and partner tools get massively more powerful and easier to use, it may not even take an entire team to do great work.

Now, the wait is just on for connectors. 3ds Max, Photoshop, Maya, Substance, and awesomely, Unreal Engine are all supported. But keep an eye out for Blender, Marvelous Designer, Solidworks, and Houdini for even more signs this is on.

It’s an open beta; keeping an eye on the convention is a chance to stay posted:

https://www.nvidia.com/omniverse/

And yes, yes Unreal:

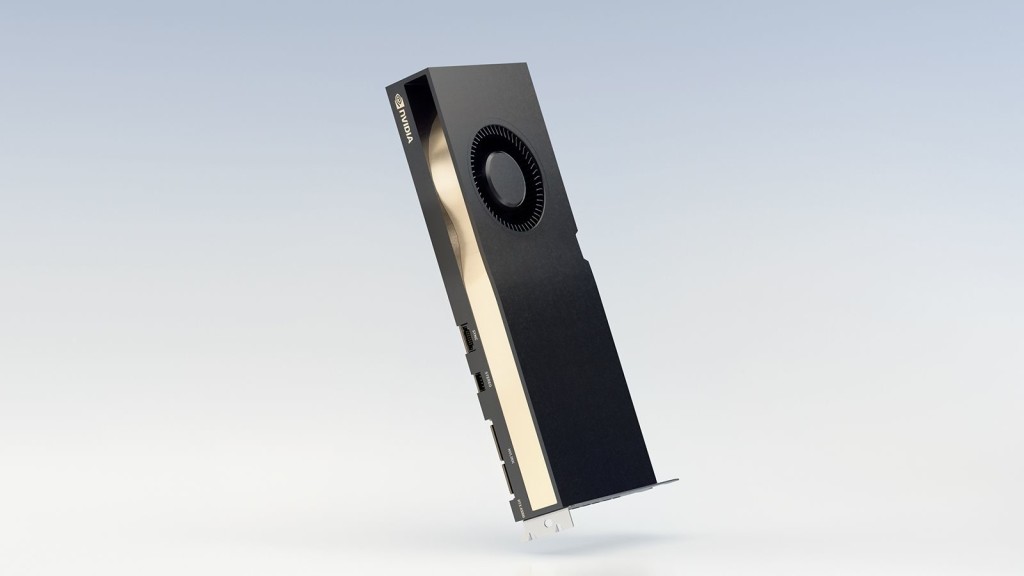

The new RTX stuff is here

It’s tough to mention anything to do with semiconductors right now, given the global shortage that’s on. But yeah, the new pro RTX architecture looks predictably insanely great, for anyone doing real-time visuals, rendering, graphics, and AI.

So, if you’re planning to make your proud reentry into music festivals in 2022 with that fully immersive 3D opera involving live artificial intelligence, you’ll want to go ahead and write these into the grant application.

Desktops get the A5000 and A4000. Laptops get A2000, A3000, A4000, and A5000.

Either way, you get all the new technology for tons of creative use. Also, even though there’s the mention of “pro,” these laptop chips fit in low-power, thin and light machines. You could wind up buying them in a reasonably inexpensive notebook computer and using it to run a live stream.

Even apart from all the utterly essential graphics applications, that’s good news for music, because the ongoing pandemic ripples are likely to disrupt at least some international travel for the foreseeable future. Oh yeah, and it also means thin-and-light PCs with NVIDIA architectures to compete with Apple’s own silicon solutions. (I wouldn’t rule out possible future pro interoperability between Apple and these architectures, either.)

But the performance gains are huge – in short:

- RT cores with up to 2X the previous generation’s throughput (for tracing your rays, shading your whatever, all that jazz)

- Third-generation Tensor Cores, also up to 2X throughput (so you can make a HAL that might open the pod bay doors even before someone has to ask)

- CUDA cores (2.5X FP32 throughput) for … everything (and possibly even some audio/music applications, but certainly anything that uses the word ‘render’ in it)

Specific to desktop:

- Up to 24GB GPU memory (or even 48GB with a two-GPU NVLink rig)

- Virtualization (tell the server admin)

- PCIe Gen 4 (twice the previous bandwidth – yeah, you might want your actual data transfer to catch up with the chip specs above, so this is essential)
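For a sense of what "twice the previous bandwidth" means in numbers, here is a small sketch of the theoretical math. None of these figures come from NVIDIA's announcement: the per-lane transfer rates (8 GT/s for Gen 3, 16 GT/s for Gen 4), the 128b/130b line encoding, and the x16 slot width are taken from the PCIe specs, and the function name is just for illustration. Real-world throughput will be lower.

```python
# Theoretical one-direction PCIe bandwidth, ignoring protocol overhead
# beyond line encoding. Rates and encoding are PCIe spec values, not
# figures from the article; x16 is assumed as the typical GPU slot width.
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b encoding used by Gen 3 and Gen 4


def pcie_bandwidth_gb_s(gigatransfers_per_s: float, lanes: int = 16) -> float:
    """Hypothetical helper: usable GB/s per direction for a given link."""
    usable_gbit_per_lane = gigatransfers_per_s * ENCODING_EFFICIENCY
    return usable_gbit_per_lane * lanes / 8  # 8 bits per byte


gen3 = pcie_bandwidth_gb_s(8.0)   # Gen 3: 8 GT/s per lane
gen4 = pcie_bandwidth_gb_s(16.0)  # Gen 4: 16 GT/s per lane

if __name__ == "__main__":
    print(f"Gen 3 x16: {gen3:.2f} GB/s")
    print(f"Gen 4 x16: {gen4:.2f} GB/s")
    print(f"Ratio: {gen4 / gen3:.1f}x")
```

The same doubling logic applies to the memory line above: two 24GB cards bridged over NVLink is where the 48GB figure comes from.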

Specific to laptop:

- Third-gen Max-Q – so it doesn’t sound like you’re vacuuming the carpet any more (“whisper” quiet is the phrase you want)

- Up to 16 GB GPU memory

Also specific to pretty high-end workstation laptops, the NVIDIA T1200 and NVIDIA T600 refresh of the Turing architecture is out. (That either means something to you because you use multiple-application workflows, or nothing to you and is a cool name.)

I might note, too, that these don’t look quite like the specs of that Apple Silicon stuff – not at the M1 level. I think it’s safe to say that for now, these are different use cases. But I also wouldn’t worry about it – the general scene is that working with 3D, video, AI, and streaming gets substantially easier in 2021 industry-wide, once chips get out there.

I also can imagine making an investment this year that lasts a good while, which is what happens when you do make a generational leap.

AI on the cloud

Without going into too much detail (I’ll leave that to NV), there is also a bunch of news this week for delivering GPU acceleration and (crucially for servers) AI computation via the cloud. There are a lot of “cool demo!” capabilities – machine translation, speech recognition, face recognition, eye contact, and live video processing continue to evolve through machine learning techniques from NVIDIA. (Yes, that also means more uncanny valley stuff and questions about the fabric of society, surveillance, and reality.)

But it means the ability to do stuff with big volumes of data, and in a way that doesn’t actually require you to be a huge enterprise to use.

This also deals with science – meaning artists who do understand machine learning now can make these topics relatable to the public. That’s potentially important, as we live in a world that demands more scientific understanding. (NVIDIA included an AstraZeneca chemistry example – and suddenly our lives are all revolving around that chemistry.)

I’ve been critical of some of the very examples NVIDIA uses here – like Spotify making playlist personalization more “efficient.” That’s nothing new – automating music based on trends and profit is basically as old as the music industry. But to really be able to criticize these things, I think it matters that musicians can understand, re-engineer, explain, and advocate with a solid grounding in the science and technology behind the topic. In the case of music, it’s now more complex to talk about the impact of playlists when they’re AI-driven than when you could point to something as intuitive as “payola.”

But science? Yeah, you can do genome analysis on your laptop.

And they continue to advance the state of the art in machine learning – even with smaller data sets.

Watch this space

I realize this was a very niche look at this stuff and will cause anyone not familiar with the area to have their eyes glaze over.

But AI + graphics + 3D + collaboration capabilities will pour into more recognizable use cases soon, powered by this tech.

And watch this space for what this might mean for artists, musicians, and creativity using tools like Unreal. Because there is no question in my mind that Unreal and Blender might well be mentioned in the same breath as Ableton Live and a Eurorack rig more frequently in the coming months and years.

But hey, at the very least, maybe tonight you’ll dream about standing on top of a surrealist skyscraper, gazing up at a King Kong-sized graphics card, and shouting at it “when are you shipping? why do these crypto people keep buying you? I just want to play some video games!”

Giant video card is listening. (Cue 2001-style Ligeti soundtrack… aeeeeeeeeeeeeeeee……)

Also, this is super cute – and great to see what young people are doing with this stuff, especially knowing this is a tough time for them and they deserve some fresh opportunities.