I’ve just gotten lost making my computer sing. And now I can’t stop.

You see, a funny thing happened on the way to the future. As speech synthesis vastly improved, it also became vastly more boring. Intelligibility robbed synthesized words and singing of their alien quality, which was what made them sound futuristic in the first place.

Chipspeech takes us back to speech synthesis as many of us remember it growing up. It’s weird-sounding, to be sure, to the point that you sometimes can’t understand the words. But it’s also loaded with character.

And there’s a history here. To fans of robotic baritones and sopranos, a particular chip can represent the Stradivarius or Steinway of machine song. These are the bots that sang Daisy Bell in the first-ever computer serenade, that have been featured in classic electro and techno records – and, perhaps, that inhabited your toy bin or represented your first encounter with the computer age, intoned in clicking, chirping magic.

But in a revolutionary transformation, you can make them do more than just speak. You can make them sing. And the result is one of the most enjoyable digital instruments to play you’ll see this year.

Montreal’s independent plug-in maker Plogue Art et Technologie embarked on a somewhat ludicrous labor of love, curating a collection of the greatest chips of yore and then painstakingly recreating them in software. Now, the chips themselves are great fun to work with for hardware geeks, whether directly or in circuit-bent form. But historical chips are a non-renewable resource; some are plentiful, others rare.

And there are advantages to reimagining these in virtual form. CDM has had world-exclusive early press access to Chipspeech, our chance to roam the possibilities of the software emulations. And the software allows these chips to perform whatever you ask them. You can type in lyrics, and immediately hear the results sung back to you, something the original chips couldn’t do in tune. You can mix sounds and transform the chips’ output in ways that would be impractical (or at least challenging) on the original hardware. And, in a unique touch, you can even make software modifications that are the equivalent of circuit-bending the hardware.

Here’s an in-depth look at what that means, how it sounds, and how it came to life.

Chipspeech, The Singing Robot Plug-in

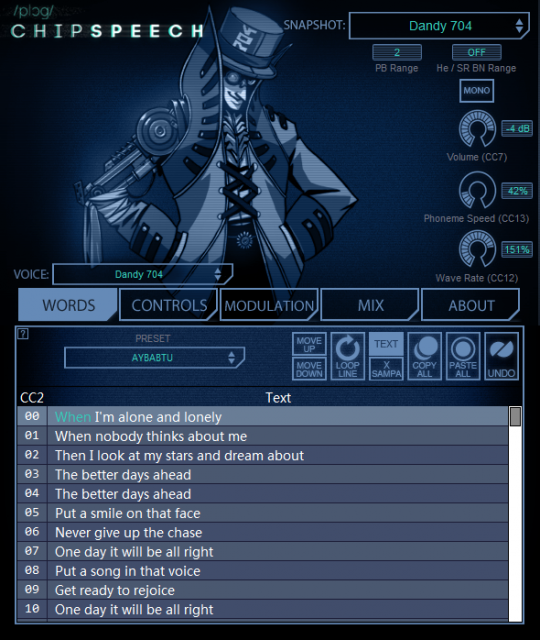

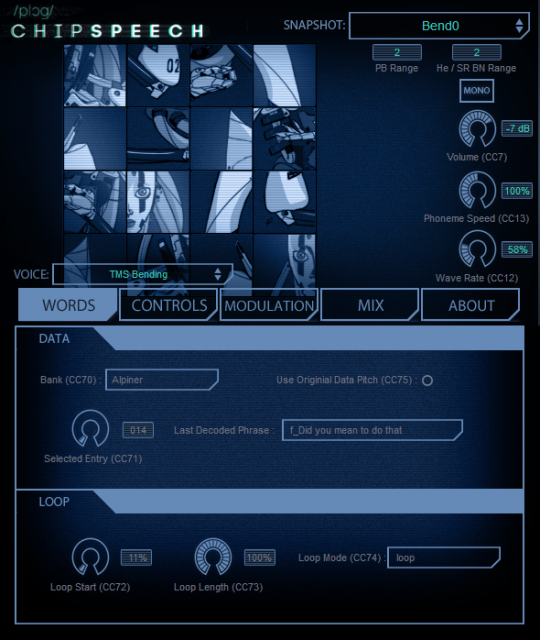

Like Yamaha’s Vocaloid synth, the basic arrangement is a pretty simple MIDI affair. Drop in a plug-in, add some text you want the machine to “sing,” and then trigger pitches with MIDI notes. Each MIDI note advances to the next syllable, with additional layered control available via MIDI Control Change (CC) message or plug-in automation.
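To make that note-to-syllable arrangement concrete, here is a minimal Python sketch. It is not Plogue's code: the `SyllableSequencer` class, its wrap-around behavior at the end of the lyric, and the example syllables are all assumptions made for illustration. The only standard piece is the equal-tempered conversion from MIDI note number to frequency (A4 = note 69 = 440 Hz).

```python
from dataclasses import dataclass


def midi_note_to_hz(note: int) -> float:
    """Equal-tempered frequency for a MIDI note number (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2.0 ** ((note - 69) / 12.0)


@dataclass
class SyllableSequencer:
    """Hypothetical model: every note-on sings the next syllable at that note's pitch."""

    syllables: list[str]
    position: int = 0

    def note_on(self, note: int) -> tuple[str, float]:
        # Pick the current syllable, then advance to the next one.
        # Wrapping back to the start of the lyric is an assumption of this sketch.
        syllable = self.syllables[self.position % len(self.syllables)]
        self.position += 1
        return syllable, midi_note_to_hz(note)


# Four notes step through four (made-up) syllables, one per note.
seq = SyllableSequencer(["dai", "sy", "dai", "sy"])
events = [seq.note_on(n) for n in (74, 71, 67, 62)]
```

Each entry in `events` pairs a syllable with the pitch it would be sung at; in the real plug-in, CC messages and automation would then shape that note further.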

The results are not always straightforward and predictable, but that’s part of the fun. (Black MIDI plus Chipspeech, anyone?) You can either enter text directly or, for greater control, type in specific syllables’ sounds – ideal for those obsessed with getting the pronunciation right (and allowing you to correct the odd pronunciation mistake). Japanese and English are each supported directly; I hope to follow up once I’ve used the tool with a native Japanese speaker. (Other languages could be “hacked” with direct entry of syllables.) But despite the inclusion of Japanese, this isn’t a Yamaha engine: it’s something new, and it sounds amazing.

What’s surprising, in fact, is how expressive the instrument can be: with some of the voices and the addition of vibrato, you can get humanlike expression but with timbral qualities that are unmistakably more machine than man. And that’s partly owing to the range of classic voices. With these, there’s a little something for everyone. There are poetic descriptions on the Plogue site that seem cribbed from a vintage computer game, but here’s what you need to know:

Dandy704 is an emulation of the IBM 704-based voice that first sang “Daisy Bell.” That work, by John Kelly and Carol Lochbaum (working at Bell Labs with Max Mathews, the same place that birthed sound synthesis itself), inspired Arthur C. Clarke’s 2001. And you know it for its strange, raspy vowels.

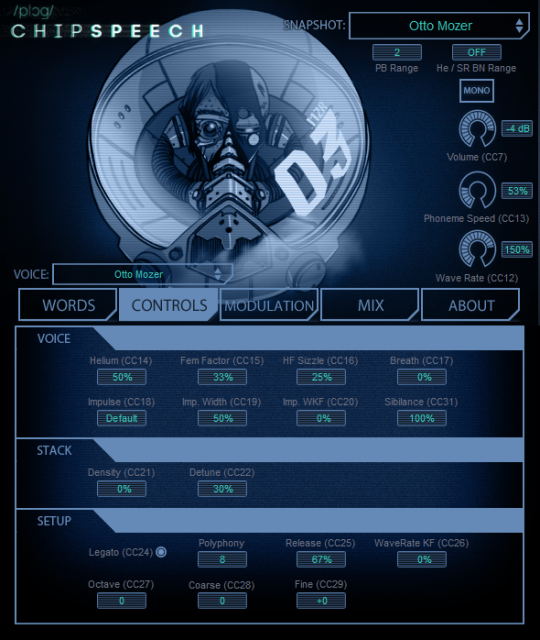

Otto Mozer is a recreation of the first speech chip, as seen in a feature video. You probably know it from some talking calculators or, more likely, Commodore 64 games like Desert Fox and Impossible Mission. It’s surprisingly realistic.

Lady Parsec is one of the toughest to understand, but also the most beautiful – a high, warbly female voice featured in the TI game Parsec. (Probably listening to this voice was actually better than playing the game…)

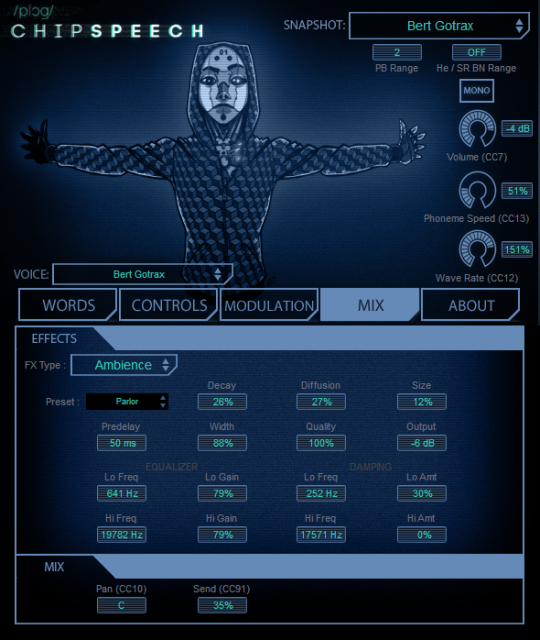

Bert Gotrax is the chip I heard the most as a kid; it’s the analog Votrax chip that was all over the place. It sounds absolutely, completely horrible – in the best possible way. It was in arcade machines from pinball to Gorf and connected to various desktop computers like the TRS-80. And perhaps most famously, it voiced Q*bert in the arcade.

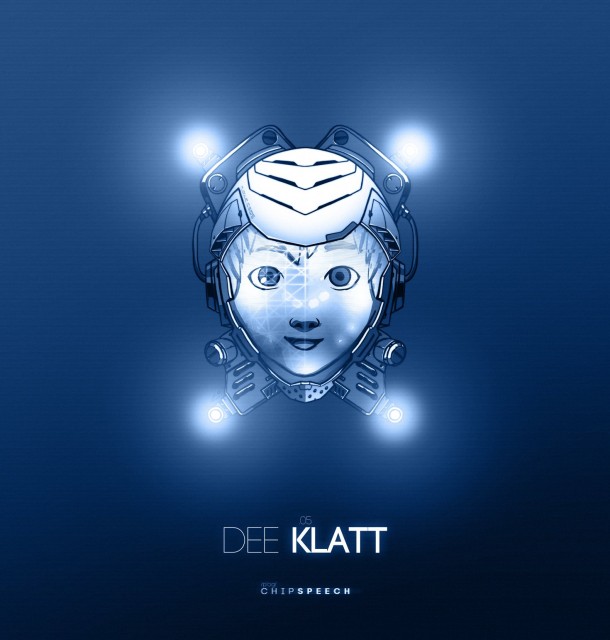

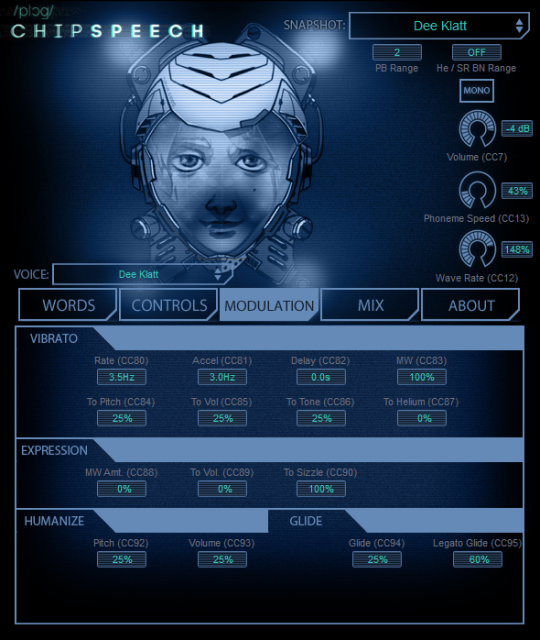

Dee Klatt is Stephen Hawking’s voice. It was also (formerly) the voice of the National Weather Service. Dennis Klatt at MIT built this implementation that became the DECtalk from DEC. It’s crisp and clear and versatile, and out of this whole bunch perhaps the best antecedent to today’s voice synths. But it’s still loaded with personality, a sort of distant robot character who has crawled from the depths of the uncanny valley even as it’s understood.

Spencer AL2 is the General Instrument SP0256 – truly alien-sounding and perhaps the most like a pure musical instrument. You could certainly apply this chip and use it more as abstract timbre. But it should also sound familiar to anyone who encountered a TRS-80 with an adapter, or an Intellivision or Magnavox Odyssey game console.

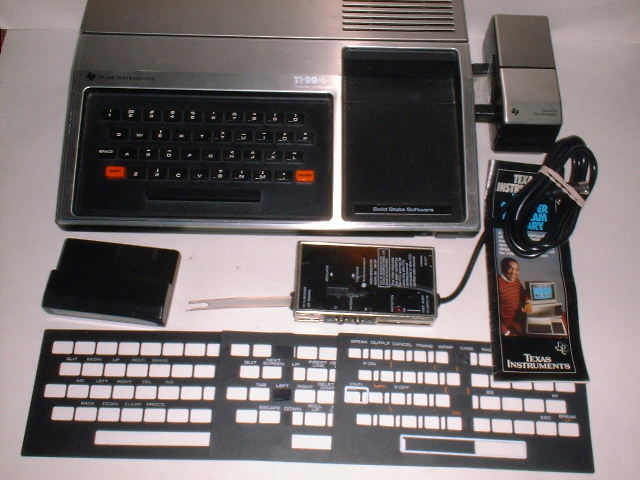

Terminal 99 is the voice chip I heard in Kindergarten, circa 1984, speaking from inside a TI-99. It … probably changed my life. I think I might as well have been beamed into a science fiction movie. This thing was the devil’s voice whispering into my ear, “you will forever be in love with computers.” Ironically, now all of the chips that work better sound nowhere near as cool. So, those of you with kids, maybe play this for your kids. Or cats – they probably like it, too. (I got it too late in the beta to play with it, but hope to do so.)

Photo (CC-BY-SA) User Yiddophile on en.wikipedia.

Using Chipspeech

Anyone familiar with Plogue’s amazingly good, “we’re just completely obsessed lunatics” emulations of chip effects and instruments will feel right at home with Chipspeech. The interface itself is nothing special – you get mostly a series of knobs and number boxes. And sometimes lyric entry is clumsy because of differences in plug-in hosts – on the Mac, for instance, paste shortcuts don’t work in Ableton Live, though pasting via right-click does. But that said, everything you need is there, and there are enough options that you could easily spend months doing nothing but subtly tweaking sound parameters and syllable entry.

Fortunately, if you just want to start playing, that’s possible, too. There are loads of presets, including pre-entered text and circuit-bent options. And if you do make modifications, there’s a robust system for saving snapshots and presets as well as lyrics.

Best of all, this isn’t system intensive. I went nuts adding instances and couldn’t slow down my 13″ MacBook Pro; I just kept playing everything live. Operating system requirements are light, and you don’t need much storage or memory – a welcome change of pace from most plug-ins lately.

My only real complaint is that the interface could be friendlier to navigate. Plogue’s chip line can get overly geeky; it’s a shame the extensive descriptions on the product page aren’t available in the plug-in, and it can be hard to work out what the presets mean if you’re not in the know. But that’s a small complaint, because trial and error is a ridiculous amount of fun when you have robots singing your words for you.

You get nearly every format imaginable, including Windows and OS X, VST, AU, and Pro Tools AAX and RTAS, plus standalone. (The Windows formats can work on Linux.) There’s not any intrusive copy protection scheme; you license your plug-in with the aid of a drag-and-drop image file. And you support a product whose emulations were created in loving, respectful collaboration with the original makers and license holders.

The price is an absurdly reasonable US$75 (€63 before VAT – and yes, they’re prepared to charge VAT for downloads).

What it Sounds Like

For the best sense of Chipspeech, here’s a compilation record released alongside the software, with contributions from the likes of Future Sound of London, joined by chip artists like GOTO80 and XC3N, all on the chip-focused label Toy Company.

I also contributed a track to that compilation. Here’s my own first experiment with the plug-in – a combination of Chipspeech and (the blippy arpeggiated bits) Chipsounds. What struck me was how much fun I could have playing from a keyboard, which allowed these chips to sing, opera style. And I only began to scratch the surface of the possibilities here.

Talking to the Creator

I spoke to David Viens more about the process behind the plug-in.

CDM: How did this project come about in the first place?

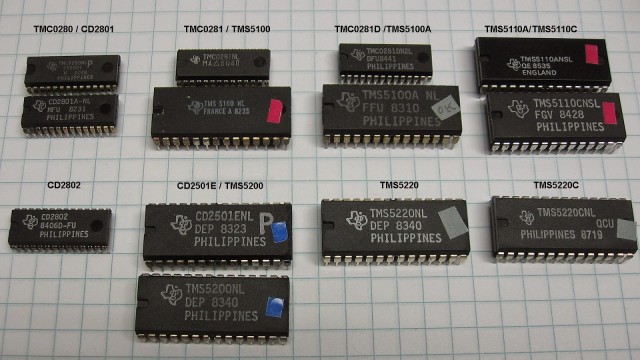

When the research behind chipsounds was at its peak (mid-2000s), I kept on stumbling into speech chips. I bought them even if they didn’t fit my current obsession. Something about ‘I might not get the chance to get them again’ and probably some Pokemon tendencies. Votraxes, SP0256s, TSI S14001A chips, and others…

I knew at some point I would do something with them, but I don’t think I was ready for the complexity of such a project yet.

Speech chips are also a favorite topic of a good friend of mine, Jonathan Gevaryahu (Lord Nightmare) – a regular MAME/MESS [arcade emulator] contributor. We both share the same fascination with the magic of those tiny squares of silicon that generate sound. Over our conversations, I often reminded myself how cool it would be to make a followup to chipsounds with speech chips.

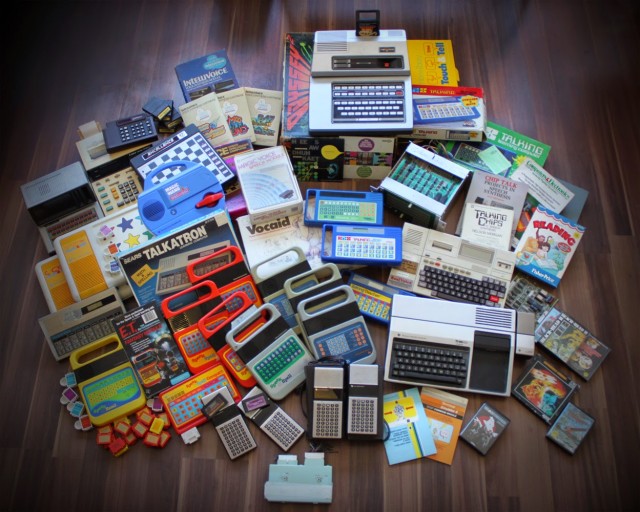

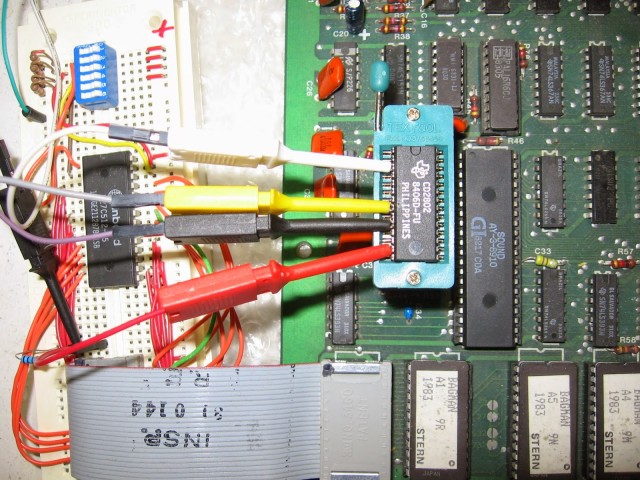

Since I work on those projects over periods of years, it’s hard for me to keep track of how much time exactly was spent on the hacking, protoboard making, probing, and reverse engineering of speech chips, but let’s say that last year alone, it accounted for virtually all my evening time and kid’s nap times over the weekend. I also probably invested way over $10K of my personal money just in talking devices. You can judge from this picture (see chipspeech Diary part1).

Now I’ve got this emulation core that I know could sound better if it had greater DAC bit depth, and more pitch bits, and … if you go through that, don’t you make an inaccurate emulator?

Thus, I added tons of switches here and there to make things more musically useful, without breaking the case where you just want 100% emulation for regression tests with hardware. Remember we want a singing product, not a text-to-speech product. You expect to be able to have expression, dynamics, vibrato, and EXACT tuning, EVEN in the high pitch range, without adding aliasing… well that wasn’t there to start with.

Also, and this is a little bit hard to understand without having done DSP/synths, with source data like this, it’s often hard to have sample rates and pitch be two TOTALLY independent variables. The source data is linked to the sample rate it was made for. Typically 8kHz or 10kHz. In chipspeech, you can link and unlink them at will. So you can do typical samplerate drops where the formants drop with the pitch, or just the pitch, or just the formants (what we call the Helium factor), all using the pitch bend wheel. Helium is one of the cool things about this, and can be abused to do some very cool alien tones. It scales the formants, kind of like when you dolly zoom with a camera.
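(A quick aside from the editor to illustrate those three behaviors. The Python sketch below is only a toy model, not how chipspeech works internally: the function names, the mode labels "linked", "pitch", and "helium", and the example formant values are all assumptions. It treats a voice as a fundamental pitch plus a list of formant frequencies and applies a bend, given in semitones, to one or both.)

```python
def bend_ratio(semitones: float) -> float:
    """Frequency ratio for a bend in semitones (12 semitones doubles the frequency)."""
    return 2.0 ** (semitones / 12.0)


def apply_bend(pitch_hz, formants_hz, semitones, mode):
    """Return (new_pitch, new_formants) under one of three hypothetical modes.

    "linked": pitch and formants move together, like changing the sample rate.
    "pitch":  only the pitch moves; the formants stay where they were.
    "helium": only the formants move; the pitch stays where it was.
    """
    if mode not in ("linked", "pitch", "helium"):
        raise ValueError(f"unknown mode: {mode!r}")
    ratio = bend_ratio(semitones)
    pitch_scale = ratio if mode in ("linked", "pitch") else 1.0
    formant_scale = ratio if mode in ("linked", "helium") else 1.0
    return pitch_hz * pitch_scale, [f * formant_scale for f in formants_hz]


# Made-up voice: 100 Hz fundamental with two formants, bent up one octave.
linked = apply_bend(100.0, [500.0, 1500.0], 12, "linked")
pitch_only = apply_bend(100.0, [500.0, 1500.0], 12, "pitch")
helium = apply_bend(100.0, [500.0, 1500.0], 12, "helium")
```

In "linked" mode everything doubles, which is the classic sample-rate effect; "helium" leaves the note in tune while shifting the vocal character.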

But then I got stuck!

I was truly, totally clueless about phonetics; I barely knew different languages had different phonemes.

Then something truly unique happened: we received Hubert Lamontagne (MadBrains)’s resume, which on top of what you’d expect we would be interested in as a company (chipmusic, synthesizers), also mentioned “phonetics.” So I immediately invited him over.

What was supposed to be a 45-minute interview transformed into a whole afternoon of name-dropping chips, features, FM operator structures, etc. Then I asked him if he would be interested in working on a vintage singing speech synthesizer, and lucky for us, he was.

On my own, I would have made a sample+bending library, better than others, but still only a library.

But being able to type any English or Japanese words and phrases and directly play that on the keyboard, combined with all the modulation he helped design, made for something else entirely. Something far from static.

How did you manage to recreate all of these chips – how did you get the legal right to do so?

What makes a speech chip unique? Two things.

1) Its internal workings

2) The data you feed it.

1) → for a guy like me, it’s the fun stuff. I have enough experience nowadays to know what to do in what order. Which battery of tests to run, what pitfalls to avoid, what tools to use, etc.

2) → The data is easy to extract (again for me). I’ve personally archived nearly all of the early TI line of products, including rare ones (for instance, the Japanese Speak & Spell – which speaks ‘easy’ English, for the education market teaching English to Japanese people), the Language Translators (used by Kraftwerk), and even Chrysler’s Electronic Voice Alert (EVA – car warnings).

But I respect IP owners too much. This is a major issue right there – I need to be able to look at myself in the mirror. I can’t just steal someone else’s IP, however old it is!

Edit: added for clarification. So, even though I have all those precious data files archived, not everything is cleared yet, so no Language Translator, EVA or Speak&Spell yet (my hopes are relatively high for the future).

In the meantime, we did get the rights to the speech data from three TI-99/4A games (Alpiner, Parsec and Moon Mine), and the internal vocabulary of the TI Speech Device.

You already have lots of cool Texas Instruments words to bend in there, and lots of Speak&Spell phrases like “Spell” and “That is incorrect” are in there already, so I’m very happy overall!

The tech, you can emulate, but not the data. It’s ultimately data derived from someone’s voice, thus a recording. Some people say it’s just a few synth parameters, but where do you draw the line? An mp3 is really a bunch of parameters to a function, but it’s the end result that counts. Is an LPC10 recording that different from an mp3? I don’t think so.

Lots of phone calls and emails trying to find who owns this, bouncing emails, dead ends, no answer, bankruptcy, 404s: you get the idea. I don’t think it would have been possible to do this in 10 years; it would have been even harder… unless I completely disregarded the copyrights.

You then have to explain to them why. Musicians? Singing? People want that? How does it work?

Me: The voices are UNIQUE, they sound cool! They have texture, they are musical in their way.

Them: Well, really? We just did the best we could at the time with the cpu/memory constraints, much better things exist now.

Me: No, yeah! Sure, but we do not WANT them to be perfect!

I feel there is so much more aural history to preserve.

I had great exchanges with the likes of Dr Forrest Mozer (ESS) and Gene Frantz (formerly of TI). These guys are retired, and guess what? They still do research, they teach, they mentor, they code, they write technical articles.

I admire these guys immensely and hope that I’ll be fortunate enough to be able to do the same thing in 30 years’ time.

For more on how Chipspeech was made, read:

Chipspeech Diary, Part 1

Chipspeech Diary, Part 2

The Plug-in

Find more here:

http://www.plogue.com/store/

http://www.plogue.com/products/chipspeech/

Bonus: here’s what happens when a Linnstrument meets Chipspeech…