Wade in the water, indeed. Set the Irish duo Lakker loose in a Dutch film archive, and what you get is a dense, heavy experimental techno album and a live show exploring the Netherlands’ ongoing battle with the sea.

It’s a 2016 album, but even if you caught it before, now we get some insight into its evolution into a live audiovisual show.

Even before you get the sense of the historical narrative behind it, the music itself is evocative, dark, and rich. I actually like that we’re calling all this music “techno” now – this isn’t in the four-on-the-floor sense, yet the influence of that music on its futuristic sounds and bass-heavy spectrum is clear. And now, with adventurous clubs and festivals having cultivated the audience for it, it is something you could hear booked overnight on a dance floor. Crowds have an appetite for dark and even nightmarish ear spelunking. And woven in there are the rhythms and movement that the club experience can provide. With Struggle & Emerge (R&S Records), you get a wonderful sound world – and the basis of a perfect live soundtrack to an exploration of the deeper meaning of Dutch water. You can give the album a listen on Spotify for a taste:

But there is a narrative behind that.

Whereas for so long tech had been about an endless, sometimes superficial pursuit of the new and novel, now media archaeology is an increasingly present aspect of artistic practice. That is, you can mine the old to produce something new, folding together past, present, and future.

Collaborations with institutions are essential to making that a success. In this case, the Netherlands Institute for Sound and Vision offers its RE:VIVE Initiative, which opens up archives to electronic music. They’ve done releases, performances, and even sound packs you can download:

Lakker (Dara Smith and Ian McDonnell a.k.a. Eomac) have a long-standing practice of deep commitment to creative sound design, and in the case of Dara Smith, to visual work. So, with RE:VIVE, they dug deep into the archives to construct a new album and performance. And they found in their theme the history of a country that has managed to survive for centuries below sea level.

There’s a fifteen-minute documentary on the project:

The duo tell us that the project led them to talk even with top engineers and academics to understand what it means for a society to contend with water levels. (The relevance to those of us outside of the Netherlands in the age of climate change needs no explanation, of course.)

And they hope the result, in their words, will “capture humanity’s ongoing struggle with nature’s devastating power, our militaristic counterforce and the serenity found somewhere in between as we move towards an uncertain future.”

But how do you get from archives to new work?

From a text by the duo:

During the album writing process, one analogy that kept surfacing was that of the “Sonic magnifying glass” and how Lakker could use various audio processes to dig deeper into the archival material and reveal hidden sounds.

You’ll hear those murky sounds all over the record, producing landscapes of howling seas and powerful weather. There’s a detailed deconstruction at this minisite, track by track:

https://beeldengeluid.atavist.com/lakker

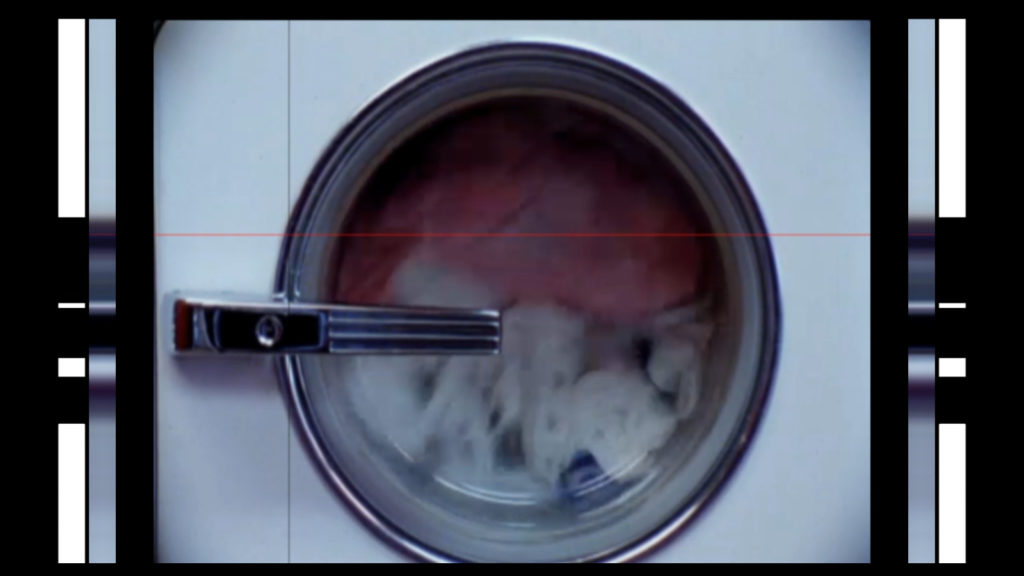

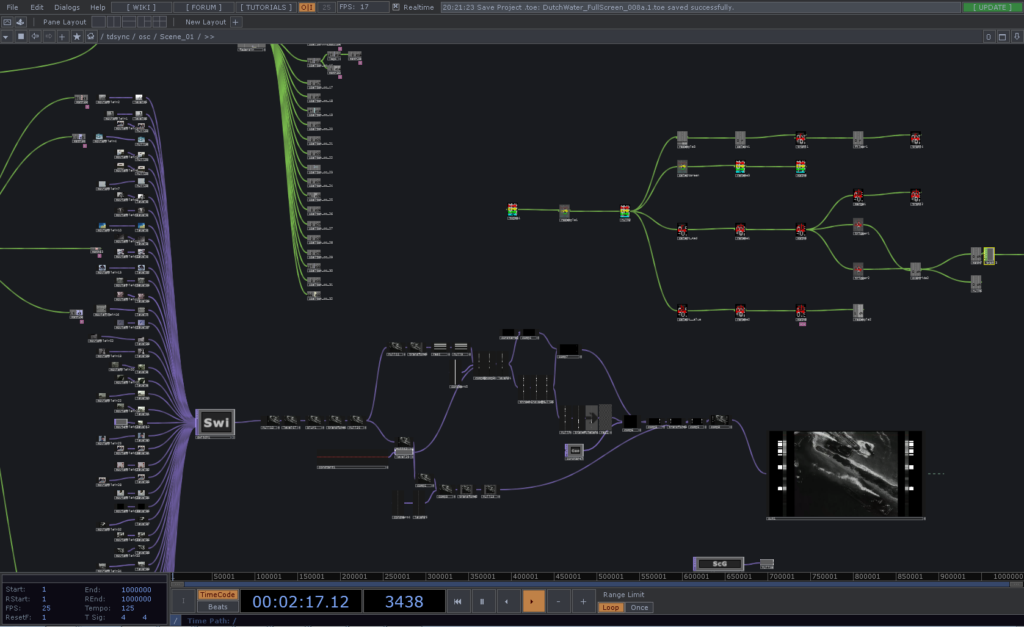

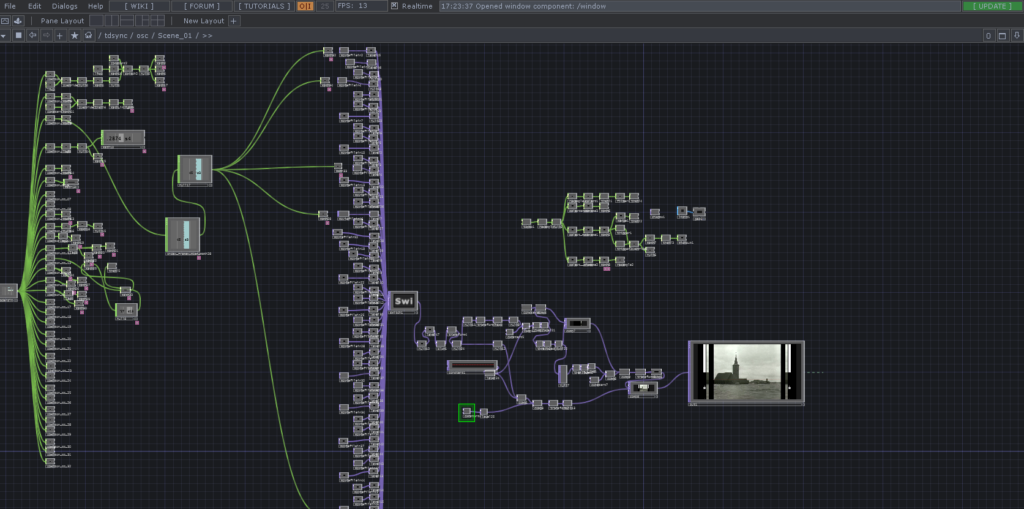

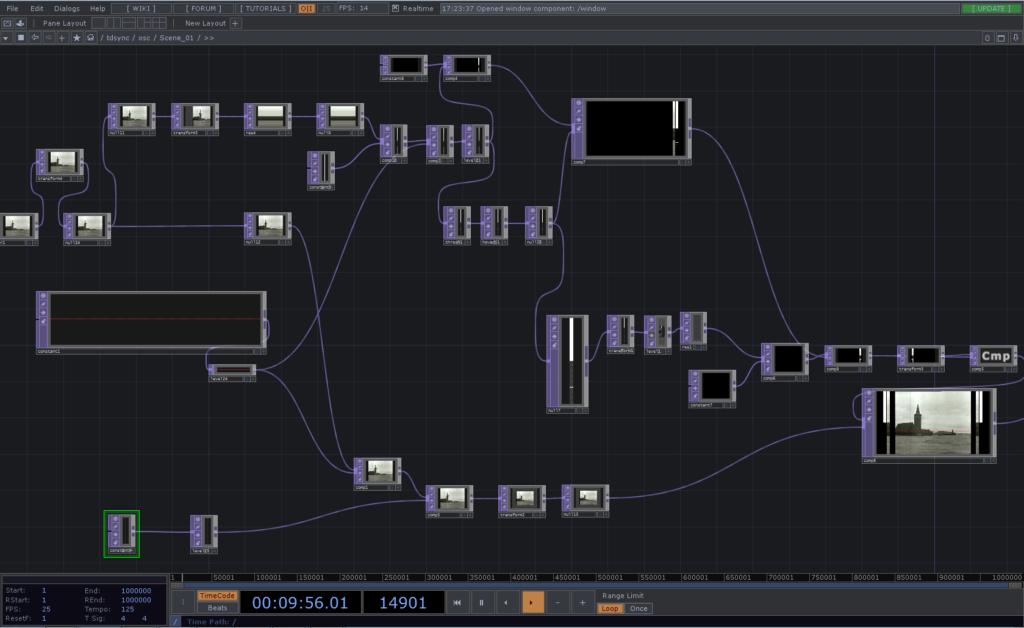

But there is a visual aspect, too: a system that scans through the archives and algorithmically processes the footage into the visual show, triggering music and sonic performance in the process. They’re working with Derivative’s TouchDesigner, a graphical development environment for Windows and macOS designed for patching together visuals. Watch the results:

To exploit this with the films, Smith created a real-time video editing system using Ableton Live and TouchDesigner that allowed him to search through the video footage and create synced loops that emphasize the underlying music. As with sounds, in films, the real interesting material is sometimes obfuscated which can only be revealed by isolating and accentuating. For the films, Smith conceived a way of focusing on small loops of time and also zooming in on specific areas of each frame, drawing attention to minutiae that the eye misses.
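To make the idea of beat-synced loops and zooming into a frame a bit more concrete, here is a minimal Python sketch. It is not Smith’s actual system; the function names, the 25 fps frame rate, and the 120 BPM tempo are assumptions chosen purely for illustration. It works out how many video frames a loop needs in order to last a whole number of beats, and which sub-rectangle of the frame to crop for a given zoom factor.

```python
def loop_length_frames(bpm: float, beats: float, fps: float) -> int:
    """Number of video frames that span `beats` beats at tempo `bpm`."""
    seconds = beats * 60.0 / bpm
    return round(seconds * fps)


def zoom_rect(width: float, height: float, cx: float, cy: float, zoom: float):
    """Crop rectangle (left, top, w, h) centred near (cx, cy).

    `zoom` of 2 means the crop covers half the frame in each dimension.
    The rectangle is clamped so it never leaves the frame.
    """
    if zoom < 1:
        raise ValueError("zoom must be >= 1")
    w = width / zoom
    h = height / zoom
    left = min(max(cx - w / 2, 0), width - w)
    top = min(max(cy - h / 2, 0), height - h)
    return (left, top, w, h)


# Hypothetical values: a one-bar loop (4 beats) at 120 BPM in 25 fps footage,
# zoomed 4x into a detail near the middle of a 1920x1080 frame.
frames = loop_length_frames(bpm=120, beats=4, fps=25)
detail = zoom_rect(1920, 1080, cx=960, cy=540, zoom=4)
```

In a real setup the tempo would come from the music software rather than being hard-coded, so the loop length follows any tempo change in the set.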

A scanning system is reading pixel information from the archive video footage and this data through Touch Designer and then Ableton is creating and triggering samples, sounds and notes that are then integrated into the musical live set.

This data that is being transferred between the two programs via OSC [Open Sound Control] can be used to manipulate the music in any number of ways. The System is also set up that either preset video clips are used or else the video from the archive can be scanned through live and edited on the fly into loops and clips that sync with the music. Therefore, we have added a musical entity to the soundscape that is directly linked to the archival video footage.
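The pipeline described above (read pixels, turn them into notes, pass them between programs over OSC) can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the duo’s patch: the `/scan/note` address, the brightness threshold, the note range, and the host and port are all hypothetical. The message layout follows the OSC 1.0 encoding (null-terminated strings padded to four bytes, big-endian 32-bit integers).

```python
import socket
import struct


def scan_column(frame, x):
    """Brightness values (0-255) down one column of a frame.

    `frame` is assumed to be a list of rows of grayscale values.
    """
    return [row[x] for row in frame]


def brightness_to_note(value, low=36, high=84):
    """Map a 0-255 brightness onto a MIDI note between `low` and `high`."""
    return low + round(value / 255 * (high - low))


def triggered_notes(frame, x, threshold=128):
    """Notes for every pixel in column `x` at or above `threshold`."""
    return [brightness_to_note(v) for v in scan_column(frame, x) if v >= threshold]


def osc_string(text):
    """OSC string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *ints):
    """Encode an OSC message whose arguments are all 32-bit integers."""
    type_tags = "," + "i" * len(ints)
    payload = b"".join(struct.pack(">i", n) for n in ints)
    return osc_string(address) + osc_string(type_tags) + payload


def send_osc(data, host="127.0.0.1", port=9000):
    """Send one OSC packet over UDP (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(data, (host, port))


# A tiny made-up 2x2 "frame": only the bright pixels produce notes.
frame = [[0, 255], [128, 64]]
notes = triggered_notes(frame, x=0)
packet = osc_message("/scan/note", *notes)
```

Calling `send_osc(packet)` would deliver the notes to whatever is listening on that port; on the receiving side, the values could drive samples, pitches, or effect parameters.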

Now, a lot of people tend to think in terms of “generative” works and VJing or “video mixing” broadly. This demonstrates that video can be generative — not only in the sense of living inside an interactive, graphical development environment like TouchDesigner, but also in the way in which video is manipulated live as a dynamic medium. That contrasts with the conventional approach to VJing with videos via two-channel mixing and timeline slicing.

I know Dara and Ian have been working on this in their live shows for some time, building a language by which visuals and sound can relate. (Both work on the music, then Dara programs the visuals, and the two play both elements live onstage together, meaning it’s necessary to ensure the two relate during that performance.) I even got a peek at this when we played a show together – it’s not so much about automation as it is about strengthening an aesthetic connection.

Now, in this piece, that’s bound up with the content itself in what seems a beautiful way.

I really hope to get to see the full show live.

Thanks to Dara for providing CDM with this text and images, including an exclusive look inside their TouchDesigner patch!