The Glitch Mob is one of the hot summer tickets for electronic music, and they’re fortunate enough to stage a massive live visual spectacle alongside the music. This week is a perfect time to consider all that visual goodness, with the release of their latest original music, the EP “We Can Make the World Stop.”

So how does a real-time performance on that scale come together? We find out from the guy running the show live. He walks us through everything, from the technical setup to the performance elements to the team that brings it to life, in the kind of detail you normally only get touring the stage. -Ed.

This is Momo the Monster checking in from the road, on tour with The Glitch Mob for the summer of 2011. I’m here to spill the beans on who’s involved in making the show, and how it all works.

The Team

The man with the vision is Martin Phillips of Bionic League, who, along with John McGuire, designed the Daft Punk ‘Alive’ Tour, Deadmau5’s Cube, and tours for Wolfgang Gartner, Kanye West, Nine Inch Nails, Weezer, and many more. He worked with the band to design a set that would take their stage presence to the next level, creating three separate pods for the band members, using their existing BrightStripe lights in a new configuration, and adding an LED wall behind them to bind all the elements into one cohesive stage.

With the stage design in place, Martin worked with Mike Figge and his crew at Possible to create visuals for each song in The Glitch Mob’s current repertoire. Both the LED wall and the BrightStripes on stage are driven by the videos that Possible created. More on that in a bit.

The last piece of the puzzle is yours truly, Momo the Monster, the final cog in the great visual machine of the tour. With the stage designed and most of the visuals created, my roles are:

- Pre-Tour: Build a playback system to control the wall (BasicTech F-11 Tiles) and tubes (Mega-Lite BrightStripes).

- Rehearsal: Help the team make the whole system work together and practice the songs with the band.

- Before First Show: Design visuals for songs that don’t already have visuals from Possible.

- Tour: Oversee setup and teardown of the system for each show, troubleshoot issues, run video during the show, and work with the house lighting director at each venue to make the best use of the in-house lighting system to supplement our stage.

The Hardware

The wall is made up of seven columns, each of which has three BasicTech F-11 LED tiles. These tiles take a signal from a proprietary BasicTech processor, and each one displays its section of the overall video on its LED array. Each tile has an address, set on the back, that describes its place on the grid: for example, (0,0) for the top-left tile, (0,1) for the tile just to the right of it, and so on. The processor takes a 1600×1200 image and splits it up among all the tiles. Each tile has one video input connector and two outputs, so a coaxial cable carries the signal into the first tile, which sends the signal up its column as well as over to the next column. Coaxial works well because it can run long distances: in this case we have a 300-foot loom of two cables, so we can accommodate the largest venues we play (like Red Rocks, Colorado).
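To make the addressing scheme concrete, here is a minimal Python sketch of how a tile address could map to a region of the 1600×1200 input. It assumes the 7×3 grid is spread evenly over the whole frame and reads each address as (row, column), as the "(0,1) is just to the right" example suggests. The real BasicTech processor's mapping may well differ, and `tile_rect` is a hypothetical helper, not part of any BasicTech software.

```python
# Hypothetical sketch: which part of the 1600x1200 processor input a tile
# would show, ASSUMING the 7x3 wall is spread evenly over the full frame.
# The actual BasicTech processor mapping is not described in the article.

FRAME_W, FRAME_H = 1600, 1200
COLS, ROWS = 7, 3


def tile_rect(row: int, col: int) -> tuple[int, int, int, int]:
    """Return the half-open rectangle (x0, y0, x1, y1) for tile (row, col).

    Address (0, 0) is the top-left tile and (0, 1) the tile to its right.
    """
    if not (0 <= row < ROWS and 0 <= col < COLS):
        raise ValueError(f"address ({row}, {col}) is outside the {ROWS}x{COLS} wall")
    # Integer boundaries: neighbouring tiles share edges with no gaps or
    # overlaps, even though 1600 is not evenly divisible by 7.
    x0 = col * FRAME_W // COLS
    x1 = (col + 1) * FRAME_W // COLS
    y0 = row * FRAME_H // ROWS
    y1 = (row + 1) * FRAME_H // ROWS
    return x0, y0, x1, y1
```

Because 1600 isn't divisible by 7, the integer boundaries give columns 228 or 229 pixels wide, while each row gets exactly 400 pixels; `tile_rect(2, 6)`, the bottom-right tile, covers x from 1371 to 1600 and y from 800 to 1200.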

Each tile has a custom backplate made by Flix FX, with clamps that attach to a pole at each show; the poles are then inserted into heavy metal bases. Mounting each tile column on its own pole, instead of connecting everything as one flat, contiguous wall, lets us shrink or expand the wall to fit stages of various sizes.

The three band stations are made up of risers with custom fittings and tables, built by Accurate Staging. The lights are Mega-Lite BrightStripes, which The Glitch Mob used in a different configuration on their previous tour. Each stripe is mounted on aluminum piping that comes apart in two sections, leaving most of the cabling intact. Each stripe is controlled by DMX commands and can be thought of as a 1×16-pixel screen.
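Treating each stripe as a 1×16-pixel screen makes the channel math simple. The sketch below assumes three channels (R, G, B) per pixel, which the article does not state, so one stripe takes 48 channels and ten whole stripes fit in a 512-channel universe. `patch_address` is a hypothetical helper; the real BrightStripe channel layout should come from the fixture's own documentation.

```python
# Hypothetical DMX patch for stripes treated as 1x16-pixel screens.
# ASSUMPTIONS (not from the article): 3 channels per pixel (R, G, B), and
# whole stripes are packed into universes without splitting a stripe.

PIXELS_PER_STRIPE = 16
CHANNELS_PER_PIXEL = 3                                      # assumed RGB
UNIVERSE_SIZE = 512                                         # channels per DMX universe
STRIPE_FOOTPRINT = PIXELS_PER_STRIPE * CHANNELS_PER_PIXEL   # 48 channels
STRIPES_PER_UNIVERSE = UNIVERSE_SIZE // STRIPE_FOOTPRINT    # 10 stripes


def patch_address(stripe: int, pixel: int) -> tuple[int, int]:
    """Return (universe, first_channel) for one stripe pixel.

    Universes count from 0; DMX channels are 1-based, and the pixel's
    R, G, B values occupy first_channel .. first_channel + 2.
    """
    if stripe < 0:
        raise ValueError("stripe index must be non-negative")
    if not 0 <= pixel < PIXELS_PER_STRIPE:
        raise ValueError("pixel index must be 0-15")
    universe, slot = divmod(stripe, STRIPES_PER_UNIVERSE)
    channel = slot * STRIPE_FOOTPRINT + pixel * CHANNELS_PER_PIXEL + 1
    return universe, channel
```

Under these assumptions, the number of universes a rig needs is the stripe count divided by ten, rounded up; with a different channels-per-pixel figure the constants change but the arithmetic stays the same.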

At front of house, I’m using a MacBook Pro with an APC40, an Akai LPK25, and a MidiFighter as my controllers. The LED processors live with my rig, and we run coaxial and Cat6 cables all the way to the stage. The Cat6 carries ArtNet signals to a PRG Virtuoso Node, which converts them to six DMX universes to run the stripes on each station.
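For reference, each ArtNet frame that reaches the node is a small UDP packet. Here is a minimal sketch of building an ArtDmx packet following the Art-Net specification (the "Art-Net" ID string, OpCode 0x5000, protocol version 14, a 15-bit port-address, and a big-endian data length). It is not the code MediaMaster or OLA actually use, and the helper names are hypothetical; the node's IP address and how it maps port-addresses to its DMX outputs are site-specific.

```python
import socket
import struct

ARTNET_PORT = 6454  # UDP port used by Art-Net


def artdmx_packet(universe: int, data: bytes, sequence: int = 0) -> bytes:
    """Build one ArtDmx packet carrying DMX channel data for a universe.

    `universe` is the 15-bit Art-Net port-address; a `sequence` of 0
    tells the receiver that sequencing is disabled.
    """
    if not 0 <= universe < 2**15:
        raise ValueError("port-address must fit in 15 bits")
    data = bytes(data)                 # copy, so a caller's bytearray is untouched
    if len(data) > 512:
        raise ValueError("a DMX universe holds at most 512 channels")
    if len(data) % 2:
        data += b"\x00"                # the spec requires an even data length
    if len(data) < 2:
        data = b"\x00\x00"             # ...and at least 2 bytes
    header = (
        b"Art-Net\x00"                              # packet ID
        + struct.pack("<H", 0x5000)                 # OpCode OpDmx, little-endian
        + struct.pack(">H", 14)                     # protocol version, big-endian
        + bytes([sequence & 0xFF, 0])               # sequence, physical input
        + bytes([universe & 0xFF, universe >> 8])   # SubUni (low byte), Net (high 7 bits)
        + struct.pack(">H", len(data))              # data length, big-endian
    )
    return header + data


def send_universe(sock: socket.socket, host: str, universe: int, data: bytes) -> None:
    """Send one universe of DMX data to an Art-Net node over UDP."""
    sock.sendto(artdmx_packet(universe, data), (host, ARTNET_PORT))
```

With a UDP socket from `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)`, calling `send_universe` once per universe for every video frame, for universes 0 through 5, would cover a six-universe setup like the one described here.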

The Software

The main playback engine is VDMX, whose new beta8 release includes Syphon integration, a major key to this show. It handles playback of the synced videos and sends out a copy of the main video mixdown via Syphon.

Arkaos MediaMaster Express sees the VDMX mixdown via a handy little trick. MediaMaster doesn’t support Syphon directly, but it does support Quartz Composer, so I created a simple Quartz Composer patch that takes the Syphon image and displays it on a full-screen Billboard; in this way, MediaMaster easily runs a Syphon input. MediaMaster does what is called pixel mapping (not to be confused with projection mapping), which translates the video image into the DMX commands that drive the BrightStripes on stage.

Originally, I’d rolled my own pixel mapping program, using Cinder to read the Syphon input and translate pixels to DMX values. It worked great in my studio, where, due to some faulty logic, I’d planned on four DMX universes. Unfortunately, it turned out that we needed at least five, and the ArtNet server my program depended on (OLA, which is mostly fantastic) relies on LibArtNet, which currently only supports four universes. Major props to Arkaos for a last-minute save with MediaMaster when I was beginning to tear my hair out over getting the lights up and running.
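Pixel mapping boils down to sampling the video frame where each light sits and copying those colours into DMX channels. The sketch below illustrates that idea only; it is not the Cinder program described above or MediaMaster's implementation. It assumes frames arrive as plain lists of (r, g, b) rows, treats each stripe's on-screen position as a hypothetical line segment in frame coordinates, and assumes three channels per pixel.

```python
from dataclasses import dataclass

# A frame is a list of rows; each row is a list of (r, g, b) tuples, 0-255.
Frame = list[list[tuple[int, int, int]]]


@dataclass
class Stripe:
    """Hypothetical placement of one stripe in frame coordinates."""
    x0: float
    y0: float
    x1: float
    y1: float
    start_channel: int      # 1-based DMX channel of the stripe's first pixel
    pixels: int = 16        # a BrightStripe behaves like a 1x16 screen


def sample(frame: Frame, x: float, y: float) -> tuple[int, int, int]:
    """Nearest-pixel lookup, clamped to the frame edges."""
    xi = min(max(int(x), 0), len(frame[0]) - 1)
    yi = min(max(int(y), 0), len(frame) - 1)
    return frame[yi][xi]


def map_stripe(frame: Frame, stripe: Stripe, dmx: bytearray) -> None:
    """Write one stripe's colours into a 512-byte DMX buffer (assumed RGB)."""
    for i in range(stripe.pixels):
        t = (i + 0.5) / stripe.pixels      # centre of pixel i along the stripe
        x = stripe.x0 + t * (stripe.x1 - stripe.x0)
        y = stripe.y0 + t * (stripe.y1 - stripe.y0)
        base = stripe.start_channel - 1 + i * 3
        dmx[base:base + 3] = bytes(sample(frame, x, y))
```

Running `map_stripe` for every stripe in a universe's buffer, once per frame, produces the channel data that would then be sent out over ArtNet. Sampling at pixel centres keeps the result stable when a stripe's segment is exactly as long as its pixel count.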

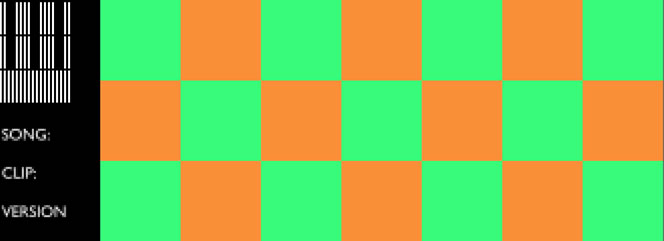

Rounding out the software is a custom openFrameworks application with the working name GlitchLights. Here is what a final video from Possible looks like:

Using this template as a starting point, I created an application that draws boxes on each tile. I can draw them individually or trigger a random number of them (mirrored on the Y axis for prettiness). Once I had a little more time, I upgraded the program to draw a mini version of the wall inside each box, resulting in a space-invader-like creature instead of a solid box.

The MidiFighter turned out to be a pretty perfect controller for this show. I’ve got a 7×3 grid to work with, and because I always mirror on Y, the four left-hand columns (including the centre column) determine the whole wall. So I use a 4×3 array of buttons on the MidiFighter to control the squares, which still leaves a row free along the top for modifier buttons. I put the mini keyboard to use controlling the BrightStripes: each key lights up half a station, and another set of three keys lights up a full station each. This makes it easy to jam along with the band while still looking deliberate (I found that controlling columns of lights, or even individual lights, looked too messy given the limited practice time I had).
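Mirroring on Y is why a 4×3 button block is enough for a 7×3 wall: column c and column 6 - c always light together, and the centre column maps onto itself. Below is a hypothetical Python sketch of that mapping, plus a generator for random left-right-symmetric patterns of the kind that could drive both the random triggers and the mini space-invader creatures. GlitchLights itself is an openFrameworks (C++) app, and none of these names come from it.

```python
import random

COLS, ROWS = 7, 3
HALF = (COLS + 1) // 2      # 4 columns: 0-2 plus the centre column 3


def mirror_col(col: int) -> int:
    """Column reflected across the wall's vertical centre line."""
    return COLS - 1 - col


def button_to_tiles(bx: int, by: int) -> list[tuple[int, int]]:
    """Map a button in the 4x3 block to the (row, col) tiles it lights."""
    if not (0 <= bx < HALF and 0 <= by < ROWS):
        raise ValueError("button is outside the 4x3 block")
    # A set collapses the centre column, which mirrors onto itself.
    return sorted({(by, bx), (by, mirror_col(bx))})


def random_mirrored_pattern(density: float = 0.5, rng=random) -> list[list[bool]]:
    """Random 3x7 on/off grid that is symmetric left to right."""
    grid = [[False] * COLS for _ in range(ROWS)]
    for r in range(ROWS):
        for c in range(HALF):
            on = rng.random() < density
            grid[r][c] = on
            grid[r][mirror_col(c)] = on
    return grid
```

Only the left half (plus the centre) of each random pattern is chosen, so every result is symmetric by construction, which is what gives the "creature" look when a 7×3 pattern is drawn inside a single tile.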

A final bit worth mentioning: I use the APC-40-22 MIDI Remote script, with some custom changes, to light up the buttons on my APC40, which I use for triggering videos, changing layer opacity, adding FX, and jumping to cue points in the videos.

The Performance

For the many songs that have perfectly synced videos, my job is very simple. Each video has its song’s audio embedded, so I trigger the video on the first hit of the song and slip the timeline forward or back as necessary to match the audio in my headphones with the audio in the venue. Once it’s locked in, I mostly nod my head, pump my fist, and watch to make sure nothing goes wrong, checking my headphones every minute or so to make sure we’re still in sync. If needed, I can jump to any of eight cue points set at key parts of the song via buttons on my APC40. For the few songs that weren’t designed by the good folks at Possible, I have one or two background clips that I’ve created (mostly by modifying videos by the amazing Beeple), and I trigger visuals from my GlitchLights app in time with the band. I’ve also found that Memo’s BadTV Quartz Composer plugin fits the show quite well, with its parameters mapped to four knobs on my APC40.

The Future

I continue to work on GlitchLights as time allows, adding new animations and objects to my live playback repertoire. I’d like to get a MIDI-to-Cat5 extender so I can receive live data from the band as they play and have them control the wall directly. I’ve also been inspired by their Lemur playback style, and I’m working on an iPad app, influenced by The Glitch Mob, that’s both a controller and a game. The band is amazingly receptive to new ideas, so I’m sure the show will continue to grow and change as we travel.

Thanks for reading, and come see the show! It’s a great spectacle, and an amazing thing to be a part of. I’m happy to chat before or after the shows, and you’re welcome to look over my shoulder during – just don’t try to talk to me while we’re running, and for the love of FSM – don’t put your beer on my table.