From the extraordinary first digital breakthroughs of the 70s, when lightbulbs stood in for LEDs, to what may have been the first use of the word “plug-in,”* we meet the inventors of Eventide’s classics, who now have a Grammy nod of their own.

Rock and pop have their heroes, their great records. But an engineering hero’s work is realized behind the scenes in all that music, in hits and obscurities alike. And then it can find its way into your work, too.

These inventions have already indirectly won plenty of Grammy Awards, if you care about that sort of thing. But at the beginning of this year, the pioneers at Eventide got a Lifetime Achievement Award, putting their technical achievements alongside the musical contributions of Tina Turner, Emmylou Harris, and Queen, among others.

Why are these engineers smiling? Because they got a Grammy for their inventions. Tony Agnello (left) and Richard Factor (right) at Eventide headquarters.

Electrical engineers and inventors are rarely household names. But you’ve heard the creations of Richard Factor and Tony Agnello, who remain at Eventide today (as do those inventions, in various hardware and software recreations, including for the Universal Audio platform). For instance, David Bowie’s “Low,” Kraftwerk’s “Computer World” and AC/DC’s “Back In Black” all use their H910 harmonizer, the gear called out specifically by the Grammy organization. And that’s before even getting into Eventide’s other harmonizers, delays, the Omnipressor, and many others.

1974 radio advertising:

Here’s the thing – whether or not you care about sounding like a classic record or lived through all of the 1970s (that’s, uh, “not so much” for me on both of those, sorry), the story of how this gear was made is totally fascinating. You’d expect an electrical engineering tale to be dry as dust, but – this is frontier adventure stuff, like, if you’re a total nerd.

Here’s the story of the DDL1745 from 1971, back when engineers had to “rewind the f***ing tape machines” just to hear a delay.

Eventide founder Richard Factor started experimenting with digital delays while holding a day job in the defense industry at the height of the Vietnam War, where he worked with shift registers, circuits that store data and pass it along one bit at a time.

Their advice from the 70s still holds. What do you do with a delay? “Put stuff in it!” Do you need to know what the knobs are doing? No! (Sorry, I may have just spoiled potentially thousands of dollars in audio training. My apologies to the sound schools of the world.)

Susan Rogers of Prince fame (whom we’ve been talking about lately) also talks about how she “had to have” her Eventide harmonizer and delays. I’ve come to feel that way about their software recreations in my plug-in folder, because they let you dial up unexpected possibilities.

Or, there’s the Omnipressor, the classic early 70s gear that introduced the very concept of the dynamics processor. Here, inventor Richard Factor explains how its creation grew out of the Richard Nixon tapes. No – seriously. I’ll let him tell the story:

Tony takes on those philosophical questions of imaginative possibility perhaps most eloquently, in a way only an engineer can. Let’s get to it.

* I should note the plug-in reference is probably a first with regard to audio gear; other domains may have used the term earlier.

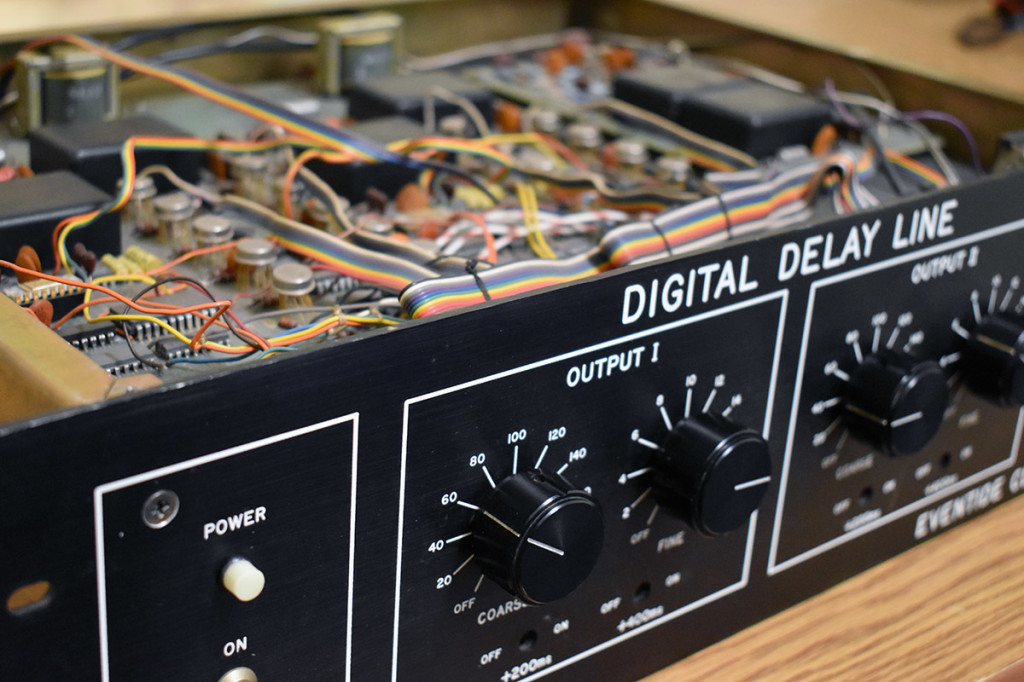

The first commercial digital delay looked like… this. DDL1745, 1971.

So you’ve already told this amazing story of the Omnipressor. Maybe you can tell us a bit about how the H910 came about?

When I joined Eventide in early 1973, the first model of the Digital Delay Line, the DDL1745, had just started shipping. At that time, there were no digital audio products of any kind in any studio anywhere.

The DDL was a primitive box. It predated memory (no RAM), LEDs (it had incandescent bulbs), and integrated Analog-to-Digital Converters [ADCs]. It offered 200 msec of delay for the price of a new car: US$4,100 in 1973, which is equivalent to ~$22,000 today! The fact is that DDLs were expensive and rare and only installed in a few world-class studios. They were used to replace tape delay.
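To make the idea concrete: a digital delay just stores samples and plays them back later. The sketch below is a minimal Python illustration, not the DDL’s actual circuit. It uses a circular buffer, the RAM-era way of doing what a shift register does by physically moving every bit along a chain, plus a helper that converts a delay time into a buffer length. The 48 kHz sample rate in the example is an assumption chosen for round numbers; the DDL1745’s real sampling scheme isn’t described here.

```python
class DelayLine:
    """Fixed digital delay built on a circular (ring) buffer."""

    def __init__(self, delay_samples: int) -> None:
        if delay_samples < 1:
            raise ValueError("delay must be at least one sample")
        self.buffer = [0.0] * delay_samples
        self.index = 0  # slot holding the oldest stored sample

    def process(self, x: float) -> float:
        y = self.buffer[self.index]  # sample written delay_samples calls ago
        self.buffer[self.index] = x  # overwrite it with the newest input
        self.index = (self.index + 1) % len(self.buffer)
        return y


def samples_for_delay(delay_ms: float, sample_rate_hz: int) -> int:
    """Number of stored samples needed for a given delay time."""
    return round(delay_ms * sample_rate_hz / 1000)


if __name__ == "__main__":
    # 200 ms at an assumed 48 kHz sample rate needs 9,600 samples of storage.
    print(samples_for_delay(200, 48_000))
    line = DelayLine(3)
    print([line.process(x) for x in [1.0, 2.0, 3.0, 4.0, 5.0]])
```

Only the index moves; each sample stays where it was written until it is read back and overwritten.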

At the time, studios were using tape delay for ADT (automatic double tracking) and, in some cases, as a pre-delay to feed plate reverbs. Plate reverbs had replaced ‘echo chambers’ but fell short in that, unlike a real room, a plate reverb’s onset is instantaneous.

I don’t believe that any recording studio had more than one DDL installed because they were so expensive. I was lucky. On the second floor of Eventide’s building was a recording studio – Sound Exchange. I was able to use the studio when it wasn’t booked to record my friends and relatives. And I had access to several DDLs! I remember carrying a few DDLs up to the studio, patching them into the console, and having fun (à la Les Paul) varying the delay and playing with the console’s faders and feedback. By 1974 Richard Factor had designed the 1745M DDL, which used RAM and had an option for a simple pitch change module.
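The console trick described above, sending some of a delay’s output back into its input, produces a train of repeating, decaying echoes. Here is a minimal sketch, assuming a single fixed delay and a feedback gain below 1; the function and parameter names are illustrative, not the DDL’s controls.

```python
def feedback_echo(signal, delay_samples, feedback, mix=1.0):
    """Echo with feedback: each repeat is `feedback` times the level of the last."""
    if delay_samples < 1:
        raise ValueError("delay must be at least one sample")
    if not 0.0 <= feedback < 1.0:
        raise ValueError("feedback must be in [0, 1) so the echoes die away")
    buffer = [0.0] * delay_samples
    index = 0
    out = []
    for x in signal:
        delayed = buffer[index]                 # what went in delay_samples ago
        buffer[index] = x + feedback * delayed  # feed part of the echo back in
        index = (index + 1) % delay_samples
        out.append(x + mix * delayed)           # dry signal plus echoes
    return out


if __name__ == "__main__":
    impulse = [1.0] + [0.0] * 6
    print(feedback_echo(impulse, delay_samples=2, feedback=0.5))
    # -> [1.0, 0.0, 1.0, 0.0, 0.5, 0.0, 0.25]
```

Raising `feedback` toward 1 lengthens the tail; at 1 or above the loop would never decay, which is why the sketch rejects those values.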

At that point, I became convinced that I could create a product that combined delay, feedback, and pitch change that would open up a world of possible effects. I also thought that a keyboard would make it possible to ‘play’ a harmony while singing. In fact, my prototype had a 2-octave keyboard bolted to the top. Playing the keyboard was unorthodox in that center C was unison, C# would shift the voice up a half step, B down a half step, etc.
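The keyboard mapping described here is easy to express: each key is some number of semitones away from center C, and in equal temperament a shift of n semitones multiplies the pitch by 2^(n/12). The sketch assumes a 25-key layout with center C at index 12 (the prototype’s exact key count isn’t stated); the function names are hypothetical.

```python
CENTER_C_INDEX = 12  # assumption: 25 keys, C to C, with the middle C as unison


def semitone_offset(key_index: int, center_index: int = CENTER_C_INDEX) -> int:
    """Keys above center C shift the voice up, keys below shift it down."""
    return key_index - center_index


def pitch_ratio(semitones: int) -> float:
    """Equal-tempered frequency ratio for a shift of `semitones`."""
    return 2.0 ** (semitones / 12)


if __name__ == "__main__":
    for name, key in [("B", 11), ("C", 12), ("C#", 13), ("C (+1 oct)", 24)]:
        ratio = pitch_ratio(semitone_offset(key))
        print(f"{name:>10}: x{ratio:.4f}  (220 Hz voice -> {220 * ratio:.1f} Hz)")
```

So holding C# while singing a 220 Hz note would produce a harmony at roughly 233 Hz, a half step up.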

The H910 – tagline: “F@*ks with the Fabric of Time.” (Cool – kind of like me and deadlines, actually.)

Now you can “f***” (to use the technical term) with the H910 in plug-in form, which turns out to be f***ing fun, actually.

Squint at this outboard gear shot for Michael Jackson’s “Thriller” and you can see the H910 – essential.

I liked in particular the idea of trying things out from an engineering perspective – as you put it, from what you think might sound interesting, rather than guessing in advance what the musical application would be. So, how do you decide something will sound interesting before it exists? How much is trial and error; how much do you envision how things will sound in advance?

Hmmm. First off, it starts with a technical advance. Integrated circuits made digital audio practical, and every advance in technology makes new techniques and things possible, and new capabilities ensue.

At the dawn of digital audio, the mission was clear and simple from my perspective. I had studied DSP in grad school and read about the work being done at places like Bell Labs. At the time, the researchers couldn’t experiment with real-time audio, which was a huge limitation.

It was obvious that if you could digitize audio, you could delay it. It was also somewhat obvious that you should be able to play the audio back at a different rate than it was recorded (sampled). The question was, how can you do that without changing duration? In retrospect, splicing is obvious and that’s what I did in the H910. Splicing resulted in glitches, however (I’m pretty sure that we introduced that word into the audio lexicon). So, my next challenge: I needed to come up with a method for splicing without glitches.
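Here is a minimal sketch of pitch change by splicing. It assumes a delay-line formulation, which is a common way to describe the technique and not a reconstruction of the H910’s circuitry: input is written at the normal rate while a read head moves through the stored audio at `ratio` times that speed. Because the read head drifts relative to the write head, it eventually runs out of room and has to jump by a whole window. That jump is the splice, and the abrupt discontinuity it leaves in the waveform is the glitch. Function and parameter names are illustrative.

```python
import math


def splice_pitch_shift(signal, ratio, window):
    """Pitch-shift `signal` by `ratio` without changing its length.

    The read head sits `delay` samples behind the write head. Reading at
    `ratio` times normal speed changes that delay by (ratio - 1) per sample;
    when it leaves its allowed range, the head jumps by `window` samples
    (a hard splice with no crossfade, so it clicks).
    Returns the output samples and the number of splices made.
    """
    if window < 2:
        raise ValueError("window must be at least 2 samples")
    out = []
    delay = window / 2  # start the read head half a window behind
    splices = 0
    for n in range(len(signal)):
        pos = n - delay  # fractional read position, never ahead of sample n
        i = math.floor(pos)
        frac = pos - i
        a = signal[i] if 0 <= i < len(signal) else 0.0
        b = signal[i + 1] if 0 <= i + 1 < len(signal) else 0.0
        out.append(a + frac * (b - a))  # linear interpolation between samples
        delay -= ratio - 1.0
        if ratio > 1.0:
            while delay < 1.0:  # caught up with the write head: jump back
                delay += window
                splices += 1
        elif ratio < 1.0:
            while delay > window:  # fell too far behind: jump forward
                delay -= window
                splices += 1
    return out, splices
```

A longer `window` means fewer splices (and fewer clicks) at the cost of more delay; smoothing over the jump itself is the de-glitching problem.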

My design of the H949 was the first de-glitched pitch changer. With that project behind me, the next obvious challenge was digitally simulating a room – reverb. At Bell Labs, Manfred Schroeder had done some preliminary work, and I tried implementing his approach, but the results were awful. I came to the conclusion that I needed a programmable array processor to meet this challenge. This was before DSP chips became available. I designed the SP2016 and developed reverb algorithms that are now available as plug-ins and still highly regarded.
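For reference, the structure Schroeder proposed is usually described as parallel feedback comb filters (which build up a dense train of decaying echoes) followed by series all-pass filters (which smear those echoes without changing the overall frequency balance). Below is a minimal Python sketch of that textbook structure. The delay lengths and gains are illustrative assumptions, not Schroeder’s published values or anything from Eventide, and given Tony’s experience you shouldn’t expect it to sound great.

```python
class Comb:
    """Feedback comb filter: y[n] = x[n - D] + g * y[n - D]."""

    def __init__(self, delay: int, gain: float) -> None:
        self.buf = [0.0] * delay
        self.i = 0
        self.g = gain

    def process(self, x: float) -> float:
        y = self.buf[self.i]
        self.buf[self.i] = x + self.g * y
        self.i = (self.i + 1) % len(self.buf)
        return y


class Allpass:
    """Schroeder all-pass: w[n] = x[n] + g*w[n-D], y[n] = w[n-D] - g*w[n]."""

    def __init__(self, delay: int, gain: float) -> None:
        self.buf = [0.0] * delay
        self.i = 0
        self.g = gain

    def process(self, x: float) -> float:
        delayed = self.buf[self.i]
        w = x + self.g * delayed
        self.buf[self.i] = w
        self.i = (self.i + 1) % len(self.buf)
        return delayed - self.g * w


def schroeder_reverb(signal, combs=None, allpasses=None):
    # Illustrative delay lengths in samples (assumed; roughly 25-31 ms at 44.1 kHz).
    if combs is None:
        combs = [Comb(1116, 0.84), Comb(1188, 0.84), Comb(1277, 0.84), Comb(1356, 0.84)]
    if allpasses is None:
        allpasses = [Allpass(225, 0.7), Allpass(556, 0.7)]
    out = []
    for x in signal:
        y = sum(c.process(x) for c in combs) / len(combs)  # combs in parallel
        for ap in allpasses:  # all-passes in series
            y = ap.process(y)
        out.append(y)
    return out
```

The all-pass stages pass every frequency at equal gain, so the impulse response of a single stage carries exactly the input’s energy; the coloration comes from the combs.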

The “de-glitched” classic, the H949, also in plug-in form (thanks to Eventide Anthology).

Given that the SP2016 was general purpose, I had some other ideas that seemed obvious. For instance, Band Delays — create a set of band pass filters and delay their outputs differentially. Suzanne Ciani famously used Band Delays on her ground-breaking “Seven Waves” composition.
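The one-line description of Band Delays translates directly into a signal flow: split the input into frequency bands, delay each band by a different amount, and sum. The sketch below assumes standard biquad band-pass filters (the formulas from Robert Bristow-Johnson’s widely used Audio EQ Cookbook) and example band frequencies and delay times; it illustrates the idea and is not the SP2016 algorithm.

```python
import math


def bandpass(signal, fs, f0, q):
    """Biquad band-pass (0 dB peak gain at f0), direct form I."""
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    a0 = 1 + alpha
    b0, b2 = alpha / a0, -alpha / a0  # b1 is zero for this filter type
    a1, a2 = -2 * math.cos(w0) / a0, (1 - alpha) / a0
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in signal:
        y = b0 * x + b2 * x2 - a1 * y1 - a2 * y2
        out.append(y)
        x2, x1 = x1, x
        y2, y1 = y1, y
    return out


def band_delays(signal, fs, bands, q=4.0):
    """Sum of band-passed copies, each delayed by its own time.

    bands: list of (center_hz, delay_ms) pairs (illustrative values).
    """
    out = [0.0] * len(signal)
    for f0, delay_ms in bands:
        d = round(delay_ms * fs / 1000)
        filtered = bandpass(signal, fs, f0, q)
        for n in range(d, len(signal)):
            out[n] += filtered[n - d]
    return out


if __name__ == "__main__":
    fs = 44_100
    click = [1.0] + [0.0] * (fs - 1)
    # Hypothetical setting: lows arrive first, highs trail behind.
    smeared = band_delays(click, fs, [(250, 0), (1000, 60), (4000, 120)])
    print(max(range(len(smeared)), key=lambda n: abs(smeared[n])))
```

A single click comes out as a little frequency sweep, because each band’s copy of it arrives at a different time.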

I also developed vocoders, timescramble, and gated reverb for the SP2016. The SP2016 had a complete development system that allowed third parties to create their own effects. The effects were stored in EPROMs (Erasable Programmable Read Only Memory) that plugged into sockets. We called them ‘plug-ins’ back in 1982, long before anyone else in the audio community used that phrase.

Did I think that these effects would be musical? Yes! For example, while my goal with reverb was to create a convincing simulation of a real room, I mindfully brought out user controls to allow the algorithm to sound unreal. I was never concerned that an artist would have a ‘failure of imagination.’ I simply strove to create new and flexible tools.

On that same note, I wonder if maybe what made these inventions – and hopefully future inventions – useful to musicians is that they were simply a new sound. Do you get the sense that this makes them more useful in different musical applications, more novel? Or maybe you just don’t know in advance?

I think that novel is good in that it broadens the acoustic palette. Music is a uniquely human phenomenon. It conveys emotion in a rich and powerful way. Broadening the palette broadens the impact. We don’t create a single static effect; we create a tool that can be manipulated. Our recent breakthrough with Physion is a wonderful example. We’re now able to surgically separate the tonal and transient components of a sound – what the artist does with those pieces of the puzzle is up to them.

It’s funny in that a sound is a sound. Its tonal and transient components are simply how we perceive the sound. I find it amazing that our team has developed software that perceives these components of sound the way that we humans do and has figured out how to split sounds accordingly.
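Physion’s method isn’t described here, and it is Eventide’s own. A much older, textbook way to illustrate the tonal/transient idea is median-filtering harmonic/percussive separation, described by Derry FitzGerald in 2010. On a spectrogram, sustained tones look like horizontal lines across time and transients look like vertical lines across frequency. Median-filtering along each direction estimates the two components, and each cell goes to whichever estimate is larger. The toy below works on a tiny hand-made magnitude grid rather than real audio; the names are illustrative.

```python
from statistics import median


def median_smooth(values, size):
    """Sliding median; windows shrink at the edges."""
    half = size // 2
    n = len(values)
    return [median(values[max(0, i - half):min(n, i + half + 1)]) for i in range(n)]


def split_tonal_transient(spec, size=5):
    """spec[t][f] is a magnitude spectrogram: rows are time frames, columns are bins.

    Returns (tonal, transient) grids that sum back to `spec`.
    """
    n_t, n_f = len(spec), len(spec[0])
    # Tonal estimate: smooth each frequency bin across time (keeps horizontal lines).
    columns = [median_smooth([spec[t][f] for t in range(n_t)], size) for f in range(n_f)]
    # Transient estimate: smooth each frame across frequency (keeps vertical lines).
    rows = [median_smooth(spec[t], size) for t in range(n_t)]
    tonal = [[0.0] * n_f for _ in range(n_t)]
    transient = [[0.0] * n_f for _ in range(n_t)]
    for t in range(n_t):
        for f in range(n_f):
            # Binary mask: each cell goes to whichever estimate is stronger.
            if columns[f][t] >= rows[t][f]:
                tonal[t][f] = spec[t][f]
            else:
                transient[t][f] = spec[t][f]
    return tonal, transient


if __name__ == "__main__":
    # Toy 9x9 grid: a steady tone in bin 2 plus a click in frame 4.
    spec = [[1.0 if f == 2 or t == 4 else 0.0 for f in range(9)] for t in range(9)]
    tonal, transient = split_tonal_transient(spec)
    print("tonal, bin 2 over time:", [row[2] for row in tonal])
    print("transient, frame 4:    ", transient[4])
```

Real systems work on short-time Fourier transforms of actual audio and usually use soft masks instead of this all-or-nothing split.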

We’re really fortunate to have all these reissues. Your Grammy nomination referred mainly to seminal, big-selling records. Do you think there’s special significance to that – or have you found interest in more experimental applications? What about your users, are they largely looking to recreate those things, or to find new applications – or is it a balance of those two things?

Well, the H910 was used not only because it did something new but because it had a particular sound. In the same sense that artists prefer different mics or EQs or amps, a device like the H910 has a certain characteristic. The digital portion of the H910 was simple – most of the audio path was analog and the analog portion was tuned to sound good to me! Recreating the analog subtleties (and not-so-subtleties) was quite the challenge, but I think we nailed it. The Omnipressor is another case in point. That product deserves a lot more respect and attention than it gets, and the plugin emulation is excellent. On the other hand, our emulation of the Instant Phaser isn’t even close. That’s why we don’t offer it as a standalone plugin. In fact, we’re working on a much improved version of it and are getting pretty darn close. Stay tuned…

On the third hand, our Stereo Room emulation of the original reverb of the SP2016 is very close, but even so, we’re not satisfied so we’re busily measuring it in fine detail with the hope of improving it. In fact, there are a couple of other SP2016 reverbs that were popular and we’ve taken a look at emulating those.

The Stereo Room plug-in recreates the Eventide SP2016 reverb. And while it’s really good, Tony says they’re still thinking how to make it better – ah, obsessive engineers, we love you.

And, yes while there’s a balance between old and new, our goal is always to take the next step. The algorithms in our stompboxes and plugins are mostly new and in a few cases ground-breaking. Crushstation, PitchFuzz and Sculpt represent advances in simulating the non-linearities of analog distortion.

[Ed.: This is a topic I’ve heard many, many times from DSP engineers. If you’re curious why software sounds better than it used to, and why it can now pass for outboard gear whereas in the past it very much couldn’t, the ability to recreate analog distortion is a big key. And it turns out our ears seem to like those kinds of non-linearities, with or without a historical context.]
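As a rough illustration of why non-linearity matters (and emphatically not how Crushstation, PitchFuzz or Sculpt work, since their algorithms aren’t described here), the simplest memoryless model of analog saturation is a tanh waveshaper. A symmetric curve adds only odd harmonics; biasing it so it clips asymmetrically, often used as a stand-in for tube-style behavior, adds even harmonics too. The `drive` and `bias` parameters and both function names are illustrative.

```python
import math


def soft_clip(x: float, drive: float = 3.0, bias: float = 0.0) -> float:
    """tanh waveshaper; a nonzero bias makes the curve asymmetric."""
    # Subtracting tanh(drive * bias) keeps silence mapped to silence.
    return (math.tanh(drive * (x + bias)) - math.tanh(drive * bias)) / math.tanh(drive)


def harmonic_amplitude(period, k):
    """Amplitude of the k-th harmonic of one period of a waveform (a single DFT bin)."""
    n = len(period)
    re = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(period))
    im = sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(period))
    return 2 * math.hypot(re, im) / n


if __name__ == "__main__":
    sine = [math.sin(2 * math.pi * i / 256) for i in range(256)]
    for bias in (0.0, 0.2):
        shaped = [soft_clip(s, bias=bias) for s in sine]
        levels = [harmonic_amplitude(shaped, k) for k in (1, 2, 3)]
        print(f"bias={bias}: " + ", ".join(f"h{k}={v:.4f}" for k, v in zip((1, 2, 3), levels)))
```

Serious analog models go much further (frequency-dependent behavior, circuit memory, oversampling to keep the new harmonics from aliasing), but even this toy shows how a curve’s shape decides which harmonics appear.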

What’s the relationship you have with engineers and artists? What kind of feedback do you get from them – and does it change your products at all? (Any specific examples in terms of products we’d know?)

We have a good relationship with artists. They give us ideas for new products and, more often, help us create better UIs by explaining how they would like to work.

One specific example is our work with Tony Visconti. I am honored that he was open to working with us to create a plug-in, Tverb, that emulated his 3 mic recording technique used on Bowie’s “Heroes.” Tony was generous with his time and brilliant in suggesting enhancements that weren’t possible in the real world. The industry response to Tverb has been incredibly gratifying – there is nothing else like it.

https://www.eventideaudio.com/products/plugins/visconti-reverb/tverb

Eventide’s Tverb plug-in, which allows you, impossibly, to say “I wish I had Tony Visconti’s entire recording studio rig from ‘Heroes’ on this channel in my DAW.” And it does still more from there. Visconti himself was a collaborator.

We are currently exploring new ways to use our structural effects method and having discussions with engineers and artists. We also have a few secret projects.

How would you relate what something like the H9 or the H9000 [Eventide’s new digital effects platforms] is to the early history like the H910 and Omnipressor? What does that heritage mean – and what do you do to move it forward? Where do recreations fit in with the newer ideas?

The consistent thread over all these years is ‘the next step.’ As technology advances, as processing power increases, new techniques and new approaches become possible. The H9000 is capable of thousands of times the sheer processing power of the H910, plus it is our first network-attached processor. Its ability to sit on an audio network and handle 32 channels of audio opens up possibilities for surround processing.

Ed.: I tried out the H9000 in a technical demo at AES in Berlin last year. It’s astonishingly powerful – and also represents the first Eventide gear to make use of the ARM platform instead of DSPs (or native software running on Intel, etc.).

One major difference, obviously, is that you now have so many plug-in users – and so many more hardware users than before. What does it mean for Eventide to have a global culture where there are so many producers? Is that expanding the kind of musical applications?

As I said earlier, there is no fear of a failure of imagination in our species. Art and music define us, enrich us. The more the merrier.

What was your experience of the Grammys – obviously, nice to have this recognition; did anything come out of it personally or in terms of how this made people reflect on Eventide’s history and present?

The ‘lifetime achievement’ aspect of the Grammy award is confirmation that I’m old.

Ha, well you just have to achieve more after, and you’re fine! Thanks, Tony – as far as I’m concerned, your stuff always makes me feel like a kid.

Eventide’s Richard Factor and Tony Agnello Join Queen, Tina Turner, Neil Diamond, Bill Graham and Others Named as Grammy Honorees [Eventide Press Release]

Check out Eventide’s stuff at their site:

https://www.eventideaudio.com/

Including the Anthology bundle:

https://www.eventideaudio.com/products/plugins/bundle/anthology-xi

Also, because I know that bundle is out of reach of beginning producers or musicians on a budget, it’s worth checking out Gobbler’s subscription plans. That gives you all the essentials here, including my personal must-haves, the H3000 band delays, Omnipressor, Blackhole reverb, and the H910, plus, well, a lot of other great ones, too:

https://www.gobbler.com/subscription-plan/eventide-ensemble-bundle/

This is both cheaper than and way more fun than many of the Adobe subscription bundles. Just sayin’.