Reimagine pixels and color, melt your screen live into glitches and textures, and do it all for free on the Web – as you play with others. We talk to Olivia Jack about her invention, live coding visual environment Hydra.

Inspired by analog video synths and vintage image processors, Hydra is open, free, and collaborative, and it runs entirely as code in the browser. It’s the creation of US-born, Colombia-based artist Olivia Jack. Olivia joined our MusicMakers Hacklab at CTM Festival earlier this winter, where she presented her creation and its inspirations, and jumped in as a participant – spreading Hydra along the way.

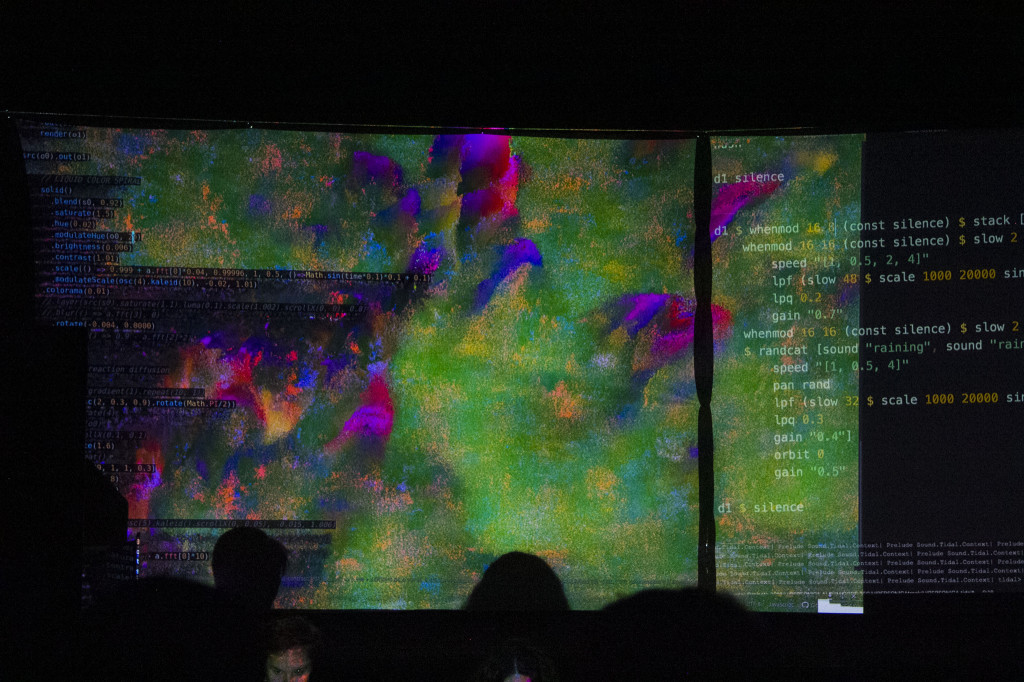

Olivia’s Hydra performances are explosions of color and texture, where even the code becomes part of the aesthetic. And it’s helped take Olivia’s ideas across borders, both in the Americas and Europe. It’s part of a growing interest in the live coding scene, even as that scene enters its second or third decade (depending on how you count), but Hydra also represents an exploration of what visuals can mean and what it means for them to be shared between participants. Olivia has rooted those concepts in the legacy of cybernetic thought.

Oh, and this isn’t just for nerd gatherings – her work has also lit up one of Bogota’s hotter queer parties. (Not that such things need be thought of as a binary, anyway, but in case you had a particular expectation about that.) And yes, that also means you might catch Olivia at a JavaScript conference; when I last saw her, she was just back from making Hydra run off solar power in Hawaii.

Following her CTM appearance in Berlin, I wanted to find out more about how Olivia’s tool has evolved and its relation to DIY culture and self-fashioned tools for expression.

Olivia with Alexandra Cárdenas in Madrid. Photo: Tatiana Soshenina.

CDM: Can you tell us a little about your background? Did you come from some experience in programming?

Olivia: I have been programming now for ten years. Since 2011, I’ve worked freelance — doing audiovisual installations and data visualization, interactive visuals for dance performances, teaching video games to kids, and teaching programming to art students at a university, and all of these things have involved programming.

Had you worked with any existing VJ tools before you started creating your own?

Very few; almost all of my visual experience has been through creating my own software in Processing, openFrameworks, or JavaScript rather than using existing software. I have used Resolume in one or two projects. I don’t even really know how to edit video, but I sometimes use [Adobe] After Effects. I had no intention of making software for visuals, but started an investigative process related to streaming on the internet and also trying to learn about analog video synthesis without having access to modular synth hardware.

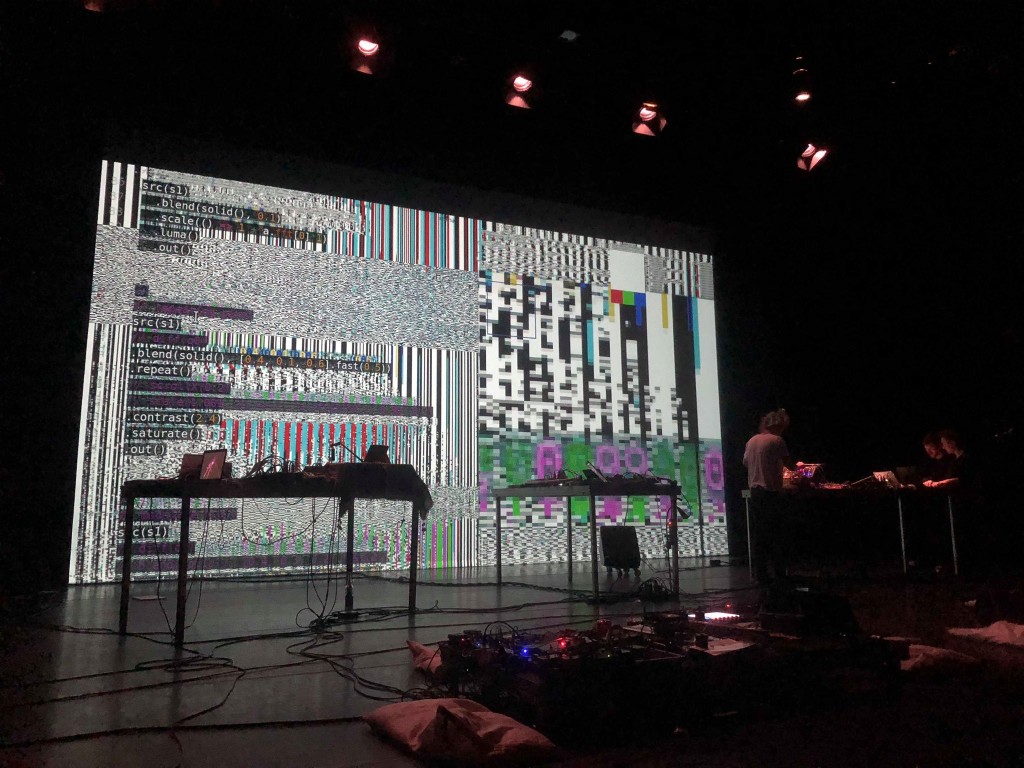

Alexandra Cárdenas and Olivia Jack @ ICLC 2019:

In your presentation in Berlin, you walked us through some of the origins of this project. Can you share a bit about how this germinated, what some of the precursors to Hydra were and why you made them?

It’s based on an ongoing investigation of:

- Collaboration in the creation of live visuals

- Possibilities of peer-to-peer [P2P] technology on the web

- Feedback loops

Precursors:

- Satellite Arts project by Kit Galloway and Sherrie Rabinowitz http://www.ecafe.com/getty/SA/ (1977)

- Internet as a live immersive place

- Sandin image processor, analog synthesizer: https://en.wikipedia.org/wiki/Sandin_Image_Processor

- My work with CultureHub starting in 2015 creating browser-based tools for network performance https://www.culturehub.org/

A significant moment came as I was doing a residency in Platohedro in Medellin in May of 2017. I was teaching beginning programming, but also wanted to have larger conversations about the internet and talk about some possibilities of peer-to-peer protocols. So I taught programming using p5.js (the JavaScript version of Processing). I developed a library so that the participants of the workshop could share in real-time what they were doing, and the other participants could use what they were doing as part of the visuals they were developing in their own code. I created a class/library in JavaScript called pixel parche to make this sharing possible. “Parche” is a very Colombian word in Spanish for a group of friends; this reflected the community I felt while at Platohedro, the idea of just hanging out and jamming and bouncing ideas off of each other. The tool clogged the network and I tried to cram too much information in a very short amount of time, but I learned a lot.

I was also questioning some of the metaphors we use to understand and interact with the web. “Visiting” a website is exchanging a bunch of bytes with a faraway place and routed through other far away places. Rather than think about a webpage as a “page”, “site”, or “place” that you can “go” to, what if we think about it as a flow of information where you can configure connections in realtime? I like the browser as a place to share creative ideas – anyone can load it without having to go to a gallery or install something.

And I was interested in using the idea of a modular synthesizer as a way to understand the web. Each window can receive video streams from and send video to other windows, and you can configure them in real time using WebRTC (realtime web streaming).

Here’s one of the early tests I did:

hiperconectadxs from Olivia Jack on Vimeo.

I really liked this philosophical idea you introduced of putting yourself in a feedback loop. What does that mean to you? Did you discover any new reflections of that during our hacklab, for that matter, or in other community environments?

It’s processes of creation, not having a specific idea of where it will end up – trying something, seeing what happens, and then trying something else.

Code tries to define the world using a specific set of rules, but at the end of the day it ends up chaotic. Maybe the world is chaotic. It’s important to be self-reflective.

How did you come to developing Hydra itself? I love that it has this analog synth model – and these multiple frame buffers. What was some of the inspiration?

I had no intention of creating a “tool”… I gave a workshop at the International Conference on Live Coding in December 2017 about collaborative visuals on the web, and made an editor to make the workshop easier. Then afterwards people kept using it.

I didn’t think too much about the name but [had in mind] something about multiplicity. Hydra organisms have no central nervous system; their nervous system is distributed. There’s no hierarchy of one thing controlling everything else, but rather interconnections between pieces.

Ed.: Okay, Olivia asked me to look this up and – wow, check out nerve nets. There’s nothing like a head, let alone a central brain. Instead, the aquatic creatures in the genus Hydra have senses and neurons essentially as one interconnected network, with cells that detect light and touch forming a distributed sensory awareness.

Most graphics abstractions are based on the idea of a 2d canvas or 3d rendering, but the computer graphics card actually knows nothing about this; it’s just concerned with pixel colors. I wanted to make it easy to play with the idea of routing and transforming a signal rather than drawing on a canvas or creating a 3d scene.

This also contrasts with directly programming a shader (one of the other common ways that people make visuals using live coding), where you generally only have access to one frame buffer for rendering things to. In Hydra, you have multiple frame buffers that you can dynamically route and feed into each other.
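Ed.: To make the routing idea concrete, here is a toy model in plain JavaScript of two buffers that read from each other on every frame. It is only a sketch under stated assumptions: the names (`makeBuffer`, `shiftRight`, `blend`, `step`, `o0`, `o1`) are hypothetical, the "buffers" are one-dimensional arrays of brightness values, and none of this is Hydra's actual implementation.

```javascript
// Toy model of frame buffers feeding into each other.
// NOT Hydra's implementation; all names here are hypothetical.
const WIDTH = 8;

// A "frame buffer" is just an array of brightness values in [0, 1].
const makeBuffer = () => new Array(WIDTH).fill(0);

// Source signal: a single bright pixel at position 0.
const source = makeBuffer();
source[0] = 1;

// Shift every pixel one step to the right (wrapping around).
const shiftRight = (buf) => buf.map((_, i) => buf[(i - 1 + WIDTH) % WIDTH]);

// Mix two buffers; `amount` is how much of `b` is blended in.
const blend = (a, b, amount) =>
  a.map((v, i) => v * (1 - amount) + b[i] * amount);

// One frame: o0 mixes the source with the previous frame's o1,
// and o1 is a shifted copy of the new o0. Each buffer reads the other.
function step({ o0, o1 }) {
  const nextO0 = blend(source, o1, 0.5);
  const nextO1 = shiftRight(nextO0);
  return { o0: nextO0, o1: nextO1 };
}

let state = { o0: makeBuffer(), o1: makeBuffer() };
for (let frame = 0; frame < 3; frame++) {
  state = step(state);
}

// Print o0 after three frames: the bright pixel leaves a fading trail.
console.log(state.o0.map((v) => v.toFixed(3)).join(" "));
```

The trail comes only from the loop between the two buffers; the source itself never moves. With a single render target and no access to a previous frame, that kind of feedback has nowhere to live.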

Livecoding is of course what a lot of people focus on in your work. But what’s the significance of code as the interface here? How important is it that it’s functional coding?

It’s inspired by [Alex McLean’s sound/music pattern environment] TidalCycles — the idea of taking a simple concept and working from there. In Tidal, the base element is a pattern in time, and everything is a transformation of that pattern. In Hydra, the base element is a transformation from coordinates to color. All of the other functions either transform coordinates or transform colors. This directly corresponds to how fragment shaders and low-level graphics programming work — the GPU runs a program simultaneously on each pixel, and that receives the coordinates of that pixel and outputs a single color.
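Ed.: A rough sketch of that model in plain JavaScript, for readers who want to see it spelled out. The function names (`stripes`, `scrollX`, `invert`) are made up for illustration and are not Hydra's API; the loop stands in for the GPU, which would evaluate the same function for every pixel in parallel.

```javascript
// Sketch only: hypothetical names, not Hydra's source code.
// A "source" maps coordinates (x, y in 0..1) to a color [r, g, b].
const stripes = (frequency) => (x, y) => {
  const v = 0.5 + 0.5 * Math.sin(2 * Math.PI * frequency * x);
  return [v, v, v];
};

// Coordinate transform: change WHERE the source is sampled.
const scrollX = (amount) => (source) => (x, y) => source(x + amount, y);

// Color transform: change the COLOR the source returns.
const invert = () => (source) => (x, y) => source(x, y).map((c) => 1 - c);

// Compose the pieces into one coordinates-to-color function.
const patch = invert()(scrollX(0.25)(stripes(1)));

// Evaluate it once per pixel along a 4-pixel row.
const width = 4;
const row = [];
for (let px = 0; px < width; px++) {
  const [r] = patch(px / width, 0);
  row.push(Math.round(r * 100) / 100); // round for readable output
}
console.log(row);
```

Every stage has the same shape (coordinates in, color out), which is why the stages can be stacked in any order.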

I think immutability in functional (and declarative) coding paradigms is helpful in live coding; you don’t have to worry about mentally keeping track of a variable and what its value is or the ways you’ve changed it leading up to this moment. Functional paradigms are really helpful in describing analog synthesis – each module is a function that always does the same thing when it receives the same input. (Parameters are like knobs.) I’m very inspired by the modular idea of defining the pieces to maximize the amount that they can be rearranged with each other. The code describes the composition of those functions with each other. The main logic is functional, but things like setting up external sources from a webcam or live stream are not at all; JavaScript allows mixing these things as needed. I’m not super opinionated about it, just interested in the ways that the code is legible and makes it easy to describe what is happening.
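Ed.: The point about not having to track variables can be illustrated with a tiny chainable API in which every "module" returns a new patch instead of modifying the old one. This is a minimal sketch with hypothetical names (`makePatch`, `gain`, `offset`, `invert`, `run`) operating on a single brightness value; it is not how Hydra is built internally.

```javascript
// Hypothetical mini-API for illustration; not Hydra's real internals.
// A patch wraps a pure function from an input level to an output level.
const makePatch = (fn) =>
  Object.freeze({
    // Each module returns a NEW patch; the original is never modified.
    gain: (amount) => makePatch((v) => fn(v) * amount), // amount is the knob
    offset: (amount) => makePatch((v) => fn(v) + amount),
    invert: () => makePatch((v) => 1 - fn(v)),
    run: (v) => fn(v),
  });

const input = makePatch((v) => v);
const dimmed = input.gain(0.5);
const dimmedInverted = dimmed.invert();

// Earlier patches are untouched by later chaining,
// and the same input always gives the same output.
console.log(input.run(0.75)); // 0.75
console.log(dimmed.run(0.75)); // 0.375
console.log(dimmedInverted.run(0.75)); // 0.625
```

Because nothing is mutated, any line of a patch can be re-evaluated mid-performance without worrying about what state earlier edits left behind.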

What’s the experience you have of the code being onscreen? Are some people actually reading it / learning from it? I mean, in your work it also seems like a texture.

I am interested in it being somewhat understandable even if you don’t know what it is doing or that much about coding.

Code is often a visual element in a live coding performance, but I am not always sure how to integrate it in a way that feels intentional. I like using my screen itself as a video texture within the visuals, because then everything I do – like highlighting, scrolling, moving the mouse, or changing the size of the text – becomes part of the performance. It is really fun! Recently I learned about prepared desktop performances, and, in line with the live-coding mantra of “show your screens,” I like the idea that everything I’m doing is a part of the performance. And that’s also why I directly mirror the screen from my laptop to the projector. You can contrast that to just seeing the output of an AV set, and having no idea how it was created or what the performer is doing. I don’t think it’s necessary all the time, but it feels like using the computer as an instrument and exploring different ways that it is an interface.

The algorave thing is now getting a lot of attention, but you’re taking this tool into other contexts. Can you talk about some of the other parties you’ve played in Colombia, or when you turned the live code display off?

Most of my inspiration and references for what I’ve been researching and creating have been outside of live coding — analog video synthesis, net art, graphics programming, peer-to-peer technology.

Having just said I like showing the screen, I think it can sometimes be distracting and isn’t always necessary. I did visuals for Putivuelta, a queer collective and party focused on diasporic Latin club music and wanted to just focus on the visuals. Also I am just getting started with this and I like to experiment each time; I usually develop a new function or try something new every time I do visuals.

Community is such an interesting element of this whole scene. So I know with Hydra so far there haven’t been a lot of outside contributions to the codebase – though this is a typical experience of open source projects. But how has it been significant to your work to both use this as an artist, and teach and spread the tool? And what does it mean to do that in this larger livecoding scene?

I’m interested in how technical details of Hydra foster community — as soon as you log in, you see something that someone has made. It’s easy to share via twitter bot, see and edit the code live of what someone has made, and make your own. It acts as a gallery of shareable things that people have made:

https://t.co/DsbfBSPtfq by @daltonsaffe

(based on https://t.co/LMeP3HFWu0) pic.twitter.com/8kzJYKQjR8

Posted by hydra patterns (@hydra_patterns), February 11, 2019

https://twitter.com/hydra_patterns

Although I’ve developed this tool, I’m still learning how to use it myself. Seeing how other people use it has also helped me learn how to use it.

I’m inspired by work that Alex McLean and Alexandra Cárdenas and many others in live coding have done on this. To me, just the idea that you’re showing your screen and sharing your code with other people opens a conversation about what is going on, so that as a community we learn and share knowledge about what we are doing. Also I like online communities such as talk.lurk.org and streaming events where you can participate no matter where you are.

I’m also really amazed at how this is spreading through Latin America. What has the scene been like there for you – especially now living in Bogota, having grown up in California?

I think people are more critical about technology and so that makes the art involving technology more interesting to me. (I grew up in San Francisco.) I’m impressed by the amount of interest in art and technology spaces such as Plataforma Bogota that provide funding and opportunities at the intersection of art, science, and technology.

The press lately has fixated on live coding or algorave but maybe not seen connections to other open source / DIY / shared music technologies. But – maybe now especially after the hacklab – do you see some potential there to make other connections?

To me it is all really related, about creating and hacking your own tools, learning, and sharing knowledge with other people.

Oh, and lastly – want to tell us a little about where Hydra itself is at now, and what comes next?

Right now, it’s improving documentation and making it easier for others to contribute.

Personally, I’m interested in performing more and developing my own performance process.

Thanks, Olivia!

Check out Hydra for yourself, right now:

https://hydra-editor.glitch.me/

Previously:

Inside the livecoding algorave movement, and what it says about music

Magical 3D visuals, patched together with wires in browser: Cables.gl