Even before Max for Live was available, hackers had found ways to tap into “secret” APIs inside Live, letting them customize Live’s behavior and make it work more seamlessly with hardware. That included something Ableton themselves had not provided: real, native control of Live via OSC, which offers more flexibility than MIDI alone. I was assured such hacks would continue to work, and sure enough, they have. Here’s how to get started.

You may wonder, of course, why bother now that Max for Live is available. Max for Live is a powerful environment for creating instruments, effects, sequencers, and other devices within Ableton Live, and via its access to the Live API, it can even be a tool for customizing how Live works. But it adds another layer of abstraction, it is somewhat limited in how deeply it can shape interaction with hardware, and anyone who wants to use your creations will need to own Max for Live, not just Ableton Live. On top of that, some people will simply prefer scripting in a language like Python to visual patching. (There’s still reason to consider M4L, too; see the link to its Live “API” documentation below. But we do have multiple options.)

So, with that out of the way, here are the current solutions:

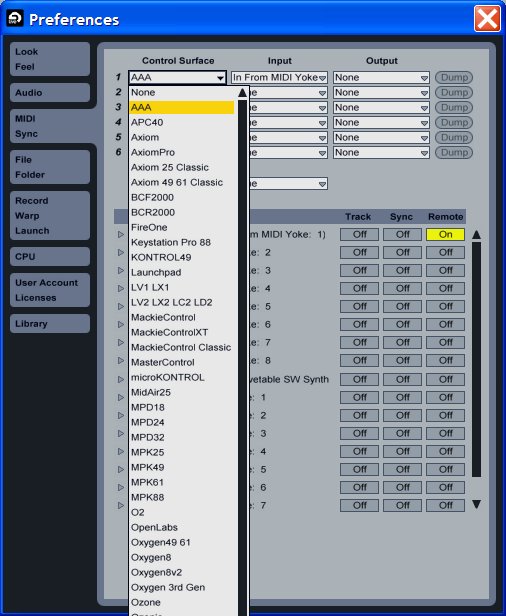

Make your own MIDI remote scripts

Hanz Petrov has written an in-depth introduction to creating your own MIDI remote scripts in Python using the new Framework classes:

Introduction to the Framework Classes

http://remotescripts.blogspot.com/

Use OSC, via the Live OSC API Hack (or MIDI)

Ableton doesn’t have native support for OSC, which is unfortunate given that OSC is now a feature of major visual applications (Resolume, VDMX, GrandVJ, Modul8, and others). But while we keep bugging Ableton to put OSC on equal footing with MIDI, you can use a special Python hack that provides an OSC API to Live.

If the scripting above seems intimidating (and I can certainly see why it might), the LiveOSC API is refreshingly simple. Because you send OSC messages directly to Live, controlling it from iPhone apps, Processing sketches, or even hardware becomes comparatively easy, and yes, easier than working in Max for Live. If you only have MIDI, there’s even a MIDI API, too. Here’s where to start:

Complete documentation of the LiveAPI project [assembla]

http://monome.q3f.org/wiki/LiveOSC

Why it’s nice: you can send something as simple as /live/play/clip (track, clip) and trigger a clip. That’s even more direct than the usual MIDI interface.

Most importantly, this now works with Live 8.1. See the video below for an example of this in action:

mlrV4live tutorial (&casio madness) from StevieRaySean on Vimeo.

Check out his Arduinome build documentation, too. (Arduinome is an authorized clone of the monome using readily available parts.)

The Max for Live way: Live Object Model

Complete LOM documentation at Cycling ’74

And yes, it makes my head spin a little, too. (Or perhaps the word is “oscillate.”)

Max4Live.info (Michael Chenetz) has done a great job of making this a bit more manageable. In the video below, he explains how Max for Live and Live interact; there’s also a tutorial on sending messages to a control surface like the Launchpad. But note that some of this can actually be more complex, and more hardware-specific (APC/Launchpad-only), than the hacks above. It’s a case in which the hacked version actually works a little better than (cough) the official version.

Max For Live Paths, Objects, and Observers from Michael Chenetz on Vimeo.
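For comparison with LiveOSC’s (track, clip) addressing, the sketch below spells out how the Live Object Model addresses the same things. In Max for Live you would send these messages to the live.path and live.object objects; this Python version only formats the strings so the path structure is easy to see. The helper names are hypothetical, and indices are zero-based, as in the LOM documentation.

```python
"""Format Live Object Model (LOM) messages as plain strings.

These are the messages you would send from Max message boxes to
live.path (to resolve an object) and live.object (to act on it).
This script does not talk to Live; it only builds the text.
"""


def lom_path(*parts) -> str:
    """Join path components into a live.path 'path ...' message."""
    return "path " + " ".join(str(p) for p in parts)


def clip_slot_path(track: int, slot: int) -> str:
    # Song -> Track (zero-based) -> ClipSlot (zero-based)
    return lom_path("live_set", "tracks", track, "clip_slots", slot)


def device_parameter_path(track: int, device: int, parameter: int) -> str:
    # Song -> Track -> Device -> DeviceParameter
    return lom_path("live_set", "tracks", track,
                    "devices", device, "parameters", parameter)


def fire_message() -> str:
    # Calls ClipSlot's fire function, launching the clip in that slot
    return "call fire"


def set_value_message(value: float) -> str:
    # Sets DeviceParameter.value; the valid range depends on the parameter
    return f"set value {value}"
```

So triggering the first clip on the first track means sending `path live_set tracks 0 clip_slots 0` to live.path, passing the resulting id to live.object, and then sending it `call fire`. That is three steps where LiveOSC needs a single message, which is part of why the hacked route can feel simpler.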

My own challenge: make the Launchpad intelligently control device parameters, something it currently doesn’t do. I’ll let you know how it goes.

Thoughts on the merits of these different approaches? Projects you’ve made using one or another? We’d love to see them.