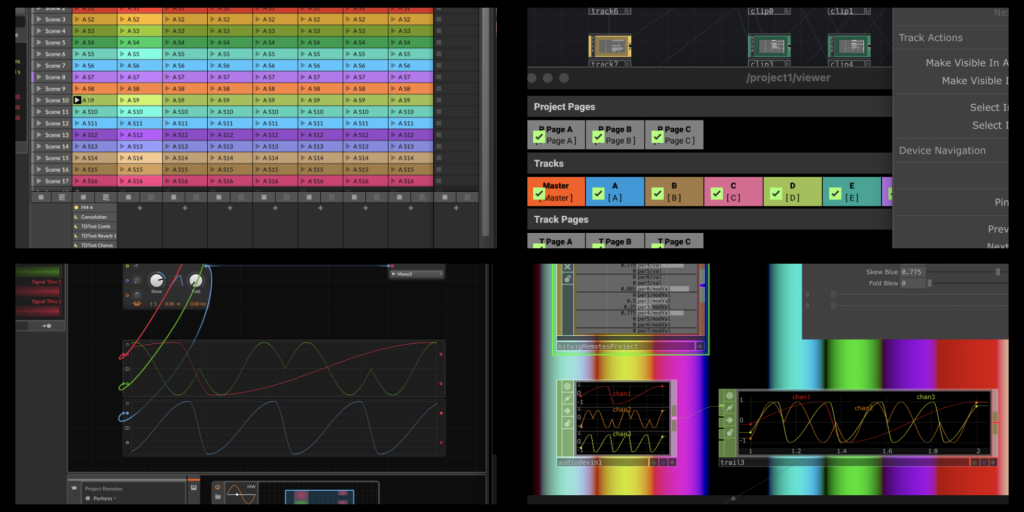

Welp, now that we’ve been putting modular environments inside modules inside modular rigs, next we get to make TouchDesigner play Bitwig Studio and Bitwig Studio play TouchDesigner – and have Bitwig’s modular environment, The Grid, interact with TouchDesigner’s modular UI. It’s all arriving on the eve of the TouchDesigner Roundtable in Berlin – and it’s even part of the official Touch build. 3D and generative visuals, meet the DAW.

This isn’t just nerdy for the sake of it. The integration means you can use Bitwig as your sound, music, and audio mixing engine – complete with its own modular engine – and TouchDesigner for your visuals. Or you can work with controllers more easily via Bitwig Studio while using them to control your Touch patch.

Here it is in action, featuring our friend Stas – and hey, while we’re adding Things to Other Things, let’s add in MediaPipe (AI-powered pose estimation):

In this example I’m using MediaPipe Pose Estimation and sending control channels to the Poly Grid device. Then I capture the generated sound back in TouchDesigner and use the sound analysis to control the video effect created with the custom GLSL shader.

Let’s skip ahead and break this down, because if you’ve read this far (or, like, at all) I expect you know these environments reasonably well. So here’s what’s included in TDBitwig – part of the official 2022 TouchDesigner build:

- bitwigSong for transport/arranger communication (including timeline cues)

- bitwigTrack for bidirectional volume/mute/solo controls and control rate amplitude envelopes

- bitwigClip and bitwigClipSlot for bidirectional access to color, loop length, quantization, plus launching ability, playback control, and clip events with callbacks.

- bitwigNote which turns your note events into individual CHOP channels – like, one channel per note

- bitwigRemotesDevice and bitwigRemotesTrack for remote control pages and global track control, respectively. That in turn lets you control any parameter – 8 controls per page.

- bitwigRemotesProject for global project control.

- bitwigSelect essentially lets you filter through particular channels, etc.

It’s all built on bidirectional OSC. A Python extension communicates using OSC with the Java-based Controller Extension API in Bitwig Studio.
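For a sense of what travels over that kind of link, here’s a minimal sketch of a single OSC 1.0 message built and parsed by hand in Python, using only the standard library. The address `/track/1/volume` is hypothetical and chosen purely for illustration; it is not TDBitwig’s actual address scheme.

```python
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate a byte string and pad it to a multiple of 4 bytes (OSC 1.0 rule)."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, value: float) -> bytes:
    """Encode one OSC message carrying a single 32-bit float argument."""
    return (
        osc_pad(address.encode("ascii"))  # address pattern, e.g. "/track/1/volume"
        + osc_pad(b",f")                  # type tag string: one float argument
        + struct.pack(">f", value)        # big-endian float32 payload
    )


def read_osc_string(packet: bytes, offset: int):
    """Read a padded OSC string at a 4-byte-aligned offset; return (text, next_offset)."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    return text, (end + 4) & ~3  # jump past the terminator to the next 4-byte boundary


def parse_osc_float(packet: bytes):
    """Decode a message produced by osc_message(); return (address, value)."""
    address, offset = read_osc_string(packet, 0)
    type_tags, offset = read_osc_string(packet, offset)
    if type_tags != ",f":
        raise ValueError("this sketch only handles a single float argument")
    (value,) = struct.unpack(">f", packet[offset:offset + 4])
    return address, value


# Hypothetical address, for illustration only.
packet = osc_message("/track/1/volume", 0.75)
print(len(packet), parse_osc_float(packet))
```

OSC messages like this typically travel as UDP packets. TDBitwig handles all of that for you, so the point here is only how little is actually inside a message: an address, a type tag, and a value.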

One more thing, though. All of the above are control-rate interactions, but you can also pass audio-rate signals – both audio content and audio-rate control signals – via the Bitwig Grid. I’m a bit unclear on how that part works, since it’s not part of TDBitwig, but it sounds promising. In fact, it might even be worth dumping content created in other DAWs into Bitwig Studio just to take advantage of this audio interaction.
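To make the control-rate versus audio-rate distinction concrete, here’s a rough Python sketch that reduces an audio-rate signal to a control-rate amplitude envelope by taking one RMS value per control frame. The 48 kHz sample rate and 60 Hz control rate are assumptions for illustration, and this is the general idea only, not how Bitwig or TDBitwig computes its envelopes.

```python
import math


def rms_envelope(samples, sample_rate=48000, control_rate=60):
    """Collapse audio-rate samples into one RMS amplitude value per control frame."""
    block = sample_rate // control_rate  # audio samples per control frame (800 here)
    envelope = []
    # Step through whole blocks only; a trailing partial block is dropped.
    for start in range(0, len(samples) - block + 1, block):
        chunk = samples[start:start + block]
        envelope.append(math.sqrt(sum(s * s for s in chunk) / block))
    return envelope


# One second of a 440 Hz sine wave at audio rate (assumed 48 kHz).
audio = [math.sin(2 * math.pi * 440 * n / 48000) for n in range(48000)]
control = rms_envelope(audio)
print(len(audio), "audio samples ->", len(control), "control values")
```

That’s 48,000 numbers a second going in and 60 coming out, which is plenty for driving a visual parameter but useless for passing along the sound itself. Hence the appeal of a separate audio-rate path.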

Full docs are on the Derivative side:

https://docs.derivative.ca/TDBitwig

And from Isabelle at Derivative, a great overview:

INTRODUCING TDBITWIG – BRIDGING TOUCHDESIGNER AND BITWIG

This one looks really great. Watch this space.

By the way, if you’re wondering “what about Ableton Live” – and “doesn’t it have something with OSC” – yes. And so sure enough, there’s also TDAbleton in TouchDesigner:

https://docs.derivative.ca/TDAbleton

But scroll to the bottom and you get straight to the catch, which also speaks to a shortcoming in Live’s current architecture as far as its API goes:

“Because TDAbleton is linked to Ableton Live’s Python Remote Scripts via OSC and the Python Remote Scripts are linked to the Ableton app through a lower-level system, troubleshooting can be a bit tricky.”

So, right – that’s effectively what Live is missing (something this site has been observing via much smarter readers for many, many years). Especially as there are many reasons to love Max for Live, it’d be great to see an updated API for interacting with Live and working with controllers. I’m still rooting for that.

That being said, there are many, many ways to skin this cat as far as AV integration – and for some of those use cases, both TDAbleton and TDBitwig are overkill. But they’re interesting ways of looking into how you work with software, far beyond just taking your mouse and pointing at stuff.