3D, spatialized sound is part of the future of listening – both privately and in public performances. But the question is, how?

Right now, there are various competing formats, most of them proprietary in some way. There are cinema formats (hello, Dolby), meant mainly for theaters. There are research installations, such as those in Germany (TU Berlin, Fraunhofer) and Switzerland (ZHDK), to name a few. And then there are specific environments like the 4DSOUND installation, which I performed on and where CDM hosted an intensive weekend hacklab – beautiful, but only in one place in the world, and served up with a proprietary secret sauce. (To my knowledge, 4DSOUND has two systems, but one is privately owned and not presenting work, and spatial positioning information is stored in a format that, for now, only 4DSOUND’s system of Max patches can read.)

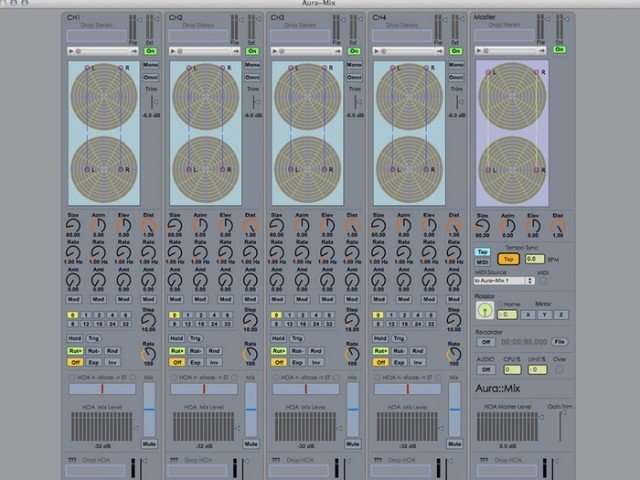

Now, we see a different approach: crowdfunding to create a space, and opening up tools in Ableton Live, Max for Live, and Lemur. The result looks quite similar to 4DSOUND’s in its speaker configuration and tooling, but differs in how people access those tools and how the project is funded.

Artist Christopher Willits has teamed up with two sound engineers / DSP scientists and someone researching the impact of sound on the body to produce ENVELOP – basically, a venue/club for performances and research. It, too, will be in just one place, but they’re promising to open the tools used to make it, as well as to use a standard format for positioning data (Ambisonics). We’ll see whether that’s enough to get this approach more widely used.

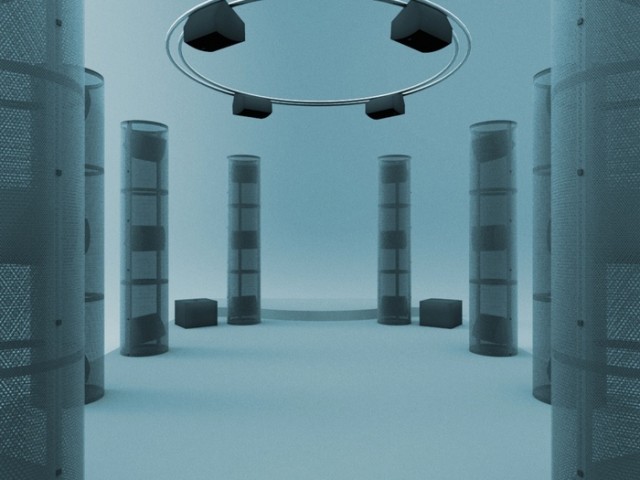

The speaker diffusion system is relatively straightforward for this kind of advanced spatial sound. You get a sphere of speakers to produce the immersive effect – 28 in total, plus 4 positioned subwoofers. (A common misconception is that bass isn’t spatialized; in fact, I’ve heard researchers demonstrate that you can hear where low frequencies come from, just as with high frequencies.) Like the 4DSOUND project (and, incidentally, less like some competing systems), the speakers are built into a set of columns.

And while the crowdfunding campaign is largely meant to finish building the physical venue, the goal is wider. Beyond creating the system, they say they want to host workshops, hackathons, and courses in immersive audio.

You can watch the intro video:

The key technical difference between ENVELOP and the 4DSOUND system is that ENVELOP is built around Ambisonics. The idea with this approach, in theory, at least, is that sound designers and composers choose coordinates once and then can adapt a work to different speaker installations. An article on Ambisonics is probably a worthy topic for CDM (some time after I’ve recovered from Musikmesse, please), but here’s what the ENVELOP folks have to say:

> With Ambisonics, artists determine a virtual location in space where they want to place a sound source, and the source is then rendered within a spherical array of speakers. Ambisonics is a coordinate-based mapping system; rather than positioning sounds to different locations around the room based on speaker locations (as with conventional surround sound techniques), sounds are digitally mapped to different locations using x,y,z coordinates. All the speakers then work simultaneously to position and move sound around the listener from any direction – above, below, and even between speakers.
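To make the coordinate idea concrete, here’s a minimal sketch of first-order Ambisonics in Python. It’s a generic textbook version, not ENVELOP’s tooling: it assumes ACN channel order with SN3D normalization, keeps only the direction of the (x, y, z) position (distance is discarded), and uses the simplest "basic" sampling decoder. The two speaker layouts are hypothetical. The point is that a source is encoded once, and the same four channels can then be decoded to whatever speakers happen to be in the room.

```python
import math

# Assumed axis convention (the usual Ambisonics one): x = front, y = left, z = up.


def unit(x, y, z):
    """Return the unit vector pointing from the listener toward (x, y, z)."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("a source at the exact listening position has no direction")
    return (x / r, y / r, z / r)


def encode_foa(sample, x, y, z):
    """Encode one mono sample placed at (x, y, z) into first-order Ambisonics.

    Channels are in ACN order (W, Y, Z, X) with SN3D normalization, so the
    three directional channels are the sample scaled by the unit vector.
    Distance is discarded; only direction is encoded.
    """
    ux, uy, uz = unit(x, y, z)
    return (sample, sample * uy, sample * uz, sample * ux)


def decode_basic(bformat, speakers):
    """Basic sampling ("projection") decoder: one gain per speaker position.

    For SN3D first-order input, speaker i gets (W + 3 * (u_i . [X, Y, Z])) / N,
    where u_i is the unit vector toward speaker i and N is the speaker count.
    """
    w, y, z, x = bformat
    n = len(speakers)
    gains = []
    for sx, sy, sz in speakers:
        ux, uy, uz = unit(sx, sy, sz)
        gains.append((w + 3.0 * (ux * x + uy * y + uz * z)) / n)
    return gains


# Two hypothetical layouts: 8 speakers on the corners of a cube,
# and 6 speakers on the axes (front/back, left/right, up/down).
CUBE = [(sx, sy, sz) for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)]
OCTAHEDRON = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]

if __name__ == "__main__":
    # Encode once: a source up, in front and to the left of the listener.
    b = encode_foa(1.0, 1.0, 1.0, 1.0)
    # ...then decode the same B-format signal to either layout.
    for name, layout in (("cube", CUBE), ("octahedron", OCTAHEDRON)):
        print(name, [round(g, 3) for g in decode_basic(b, layout)])
```

A basic decoder like this one gives negative (out-of-phase) gains to speakers opposite the source; practical systems typically use higher Ambisonic orders and better-behaved decoders, such as max-rE weighting or AllRAD.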

One of our hackers at the 4DSOUND day did try “porting” a multichannel Ambisonic recording to 4DSOUND, with some success, I might add. 4DSOUND’s spatialization system, however, uses its own coordinate system, which can be expressed in Open Sound Control (OSC) messages.
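For readers who haven’t worked with OSC: a message is just an address string plus typed arguments, usually sent over UDP. The sketch below shows the generic OSC 1.0 wire format in Python. The address `/source/1/xyz`, the three-float x/y/z payload, and the host/port defaults are hypothetical placeholders; this is not 4DSOUND’s actual message namespace, which isn’t documented here.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC-string: ASCII, null-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, *values):
    """Build an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    args = b"".join(struct.pack(">f", v) for v in values)  # big-endian float32
    return osc_string(address) + osc_string(type_tags) + args


def send_osc(packet, host="127.0.0.1", port=9000):
    """Send one OSC packet over UDP (host and port are placeholder values)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


if __name__ == "__main__":
    # Hypothetical address and payload: NOT 4DSOUND's actual namespace.
    msg = osc_message("/source/1/xyz", 1.0, 2.5, -0.5)
    print(len(msg), msg.hex())
```

In practice you’d more likely reach for an existing OSC library than hand-roll the encoding; writing it out just shows how little is involved in moving coordinates between systems.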

The ENVELOP project is “open source” – but it’s based on proprietary tools. That includes some powerful-looking panners built in Max for Live which I would have loved to have whilst working on 4DSOUND. But it also means that the system isn’t really “open source” – I’d be interested to know how you’d interact, say, with genuinely open tools like Pure Data and SuperCollider. (For instance, presumably you might be able to just plug in a machine running Pd and HOALibrary, a free and excellent tool for ambisonics?) That’s not just a philosophical question; the workflow is different if you build tools that interface directly with a spatial system.

The project does seem open to other possibilities, at least – with Stanford’s CCRMA nearby, as well as the headquarters of Cycling ’74 (no word from Dolby, who are also in the area), the brainpower is certainly in the neighborhood.

Of course, the scene around spatial audio is hardly centered exclusively on the Bay Area. So I’d be really interested to put together a virtual panel discussion with some competing players here – 4DSOUND being one obvious choice, alongside the Fraunhofer Institute and some of the German research institutions, and… well, the list goes on. I imagine some of those folks are raising their hands and shouting objections, as there are strong opinions here about what works and what doesn’t.

As noted in the comments, there are other open source projects – the ZHDK tools for Ambisonics are completely open and don’t require any proprietary software to run. You will still need a speaker system, though, which remains the main stumbling block.

If you’re interested in a discussion of this scene, let us know. Believe me, I’m not a partisan of any one system – I’m keen to see different ideas play out.

ENVELOP – 3D Sound [Kickstarter]

For background, here’s a look at some of the “hacking” we did of spatial audio in Amsterdam at ADE in the fall. Part of our idea was that hands-on experimentation with artists could lead to new ideas – and I was overwhelmed by the results.

4DSOUND Spatial Sound Hack Lab at ADE 2014 from FIBER on Vimeo.

And for more on spatial audio research, this site is invaluable – including its list of the various conferences now held around the world on the topic:

http://spatialaudio.net/

http://spatialaudio.net/conferences/