Ed.: He’s worked to translate classic hardware like the landmark Rutt-Etra to digital media – in that case, working with its original co-creator. He’s been a virtuoso of Mac visual development, building his own tools and working out an innovative way of piping live textures between applications in ways never before possible. And he’s been an important member of the visual community and accomplished live performer, including on occasion being nice enough to write for us here on CDM. Anton Marini talks to another luminary of our community, Kyle McDonald, about … well, just about everything you could think of to talk about related to the above topics. Here are Kyle and Anton… -PK

Kyle: This is the third interview in a series about creators sharing work, with an emphasis on open source, media art, and digital communities. The interviews are edited and backed up on GitHub after being conducted on PiratePad, where you can walk through the interview history as it was written. Anton completed the interview offline, but the questions are still available on PiratePad. All content is licensed under a Creative Commons Attribution 3.0 Unported License.

with Kyle McDonald interviewing Anton Marini (vade)

Who are you and what do you do?

My name is Anton Marini. I make pretty things with pixels for work and for play.

Most people probably know you from the work you’ve been sharing online for years now, using Jitter, Quartz Composer, and other visually-oriented software. How did you get started sharing? What communities and people would you identify as influences?

Sharing has been an important part of my learning process. I am not the type of learner who can read a formula or an abstract process and immediately understand its key points and figure out ways to use it. I have to get my hands on things, play, experiment, and learn to intuit behavior and properties. I’ve found sharing results and processes to help me as much as it helps others, and I certainly would not be where I am on my own, isolated.

In middle and high school we were fortunate enough to have access to a media lab, where we could go off class hours and experiment and learn on our own. Somehow our media lab was amazingly well equipped – we had some A/V Macs, a Video Toaster with LightWave, the first versions of Photoshop, Premiere, After Effects, a QuickTake camera the week it came out, and even a public access TV show (really). The culture fostered in the lab was one where students helped mentor other students (there was only one “teacher” and their capacity was more to help troubleshoot technical issues, not run classes). That experience helped hugely shape my passion and excitement for visual media, and really set a tone for how I learned the trade, through exploration, experimentation and collaboration.

I also could not help but be inspired by the community of people who run Share. I first became aware of the VJ / visualist scene when I stumbled onto Share by reading a totally mis-reported story about the weekly event in New York City. It was described as a place to go to share music files, mp3s and the like. Bring your iPod, hang out, listen to music, trade files. Always looking for new music, I went, and was greeted by a sort of modern take on free jazz. Anyone could join in, any instrument and style welcome. The venue (Open Air) had multiple video screens in the walls for visualists to plug in to. It was the first place I ever saw video being manipulated in realtime and I was instantly struck. My job at the time was working as a tech in a post production studio, so my conception and understanding of video manipulation was that it was a long, tiresome, error-prone rendering process. Seeing it malleable (sure, at 320×240, 15 fps), was an amazing experience.

The people who were “performing” (I should probably say playing, it’s much more accurate in describing the mood) were amazingly open in sharing tools and techniques. Eric Redlinger was one of the people who welcomed me to Share that day, and showed me how he was performing video. He was part of a team who wrote a piece of software called KeyWorx – a networked, multi-user patching environment. Eric showed me how to use it, helped me install it, and next thing I knew I had a realtime video system in my lap. I was too shy to put anything on the screen at the time, but I spent countless hours at home experimenting and performing for an audience of one with KeyWorx before I plugged in at Share.

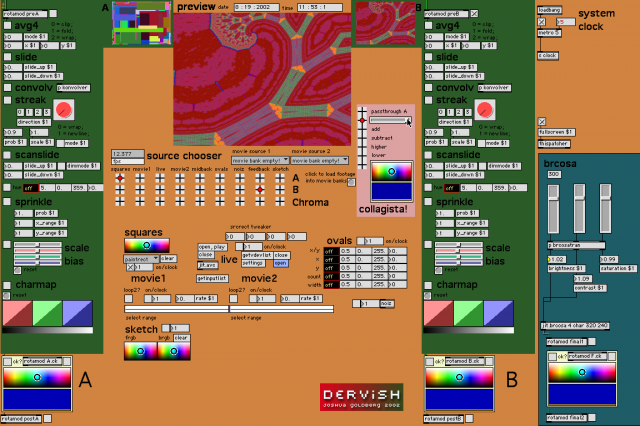

I later met Joshua Goldberg, who introduced me to the depths of Max/MSP/Jitter. After watching Joshua play and experiment, I had to ask him how he was making it (the now-cliché “what software are you using?” question). It was unlike things I had seen before, and he generously showed me, and then gave me his patch. Having learned the most basic Max/MSP/Jitter, seeing his patch Dervish was like trying to decipher the plans to the space shuttle after just seeing the Wright brothers fly for the first time. I learned an incredible amount from Dervish, both technically and artistically. What has struck me most about that experience however (and it only really struck me after painstakingly crafting my own solutions to similar problems – literally years later), is how much of Josh is encoded in that software. It is not simply a practical way of working with video in Jitter, it is a style, an aesthetic, and a methodology. It is really an artistic embodiment of himself encoded in patch cords, oscillators and feedback loops. The fact he gave it to someone so fresh and naive is a feat of character. That still resonates today.

Those two moments stick out most in my head, but there are countless others that took place at Share. There are many, many people who deserve equal thanks, but Josh and Eric were the first, and I owe them both very much. Share was about not just technical knowledge, but about approach, process and how people played. That was invaluable as a new performer.

The Max/MSP/Jitter community has an ongoing set of threads named “Sharing is Fun”, where users post riffs or takes on how to accomplish particular tasks – which helped greatly technically with Jitter, finding creative and almost… abusive… uses for objects. I could go on and on, Kineme, the openFrameworks forums, the Demo Scene…

Syphon is one of the most important pieces of software in years for visualists. Why is it completely free and open source?

Because a commercial model simply would not work. In order for Syphon to be useful, it needs to have an ecosystem of implemented applications that will spur further adoption, and make exciting combinations possible. The more working applications, the more creative potential just due to the combinatorics. Tom and I worked towards what seemed to be the easiest path to getting the most implementations and acceptance of a “standard” with least resistance.

Cost is a barrier to entry, and Syphon is a tool that in many ways ‘outsources’ application functionality, something commercial applications may not want to do. Taking a commercial approach seems like a way to hinder adoption (think of it from the commercial developer’s point of view: you want me to pay you to license your code, so my app will work with competing applications!?), and scares away the most important demographic who would find the most utility from it (people who leverage exciting realtime environments like openFrameworks, Max/MSP/Jitter, Cinder, Quartz Composer, Unity etc – usually open source, or very open communities). Adobe and kin are not our (initial) target for adoption. Allowing easy access, and thus easy experimentation, was a definite goal, and that is a sweet spot for the open source and new media communities. I do want to see a Photoshop / Syphon plugin though. That would be awesome.

In a way, it could not be anything but open source. Syphon’s implementation transparency and licensing allow quick and painless adoption by communities and developers that use small, niche tools that want integration into larger environments. It was a matter of necessity if we wanted Syphon to gain traction.

Syphon’s interesting in that it had a very careful development. It wasn’t on GitHub or Google Code from the beginning. You and Tom Butterworth spent a lot of time developing it without telling anyone, then releasing it as a private beta, and finally releasing it publicly. Why was it done this way?

Prior to Syphon (actually, a few years before), I had mass emailed every contact I knew who might be interested and helpful in making what I had called “Open Video Tap”. Every commercial and open source developer, be they in the VJ field, new media, or game development, expressed interest in the idea – but had no real time or money to devote to developing a workable solution. The idea was not really a novel one, or a well kept secret, but it was very non-trivial at the time. However, with the release of Mac OS X 10.6, Apple introduced the necessary framework for something like Open Video Tap with IOSurface, and told no one about it. Now, it seemed as though it could be handled by a small development group, and Tom and I saw an opportunity to make this ourselves. The barrier was a lot lower.

Keeping development somewhat closed was a very deliberate strategy to ensure that incompatible and competing implementations did not accidentally or purposefully get made or released in the wild before a finished, tested and working framework was designed. The concept is that sharing is seamless and just works. If you download it, and it fails miserably, or works inconsistently because you have two different versions or implementations of the design, it’s not going to inspire confidence in the users and developers who would help spread adoption, or write new implementations.

Keeping the development of Syphon out of sight also allowed us to identify and correct issues with the API, so that once we let Syphon out of the box, developers would have a clean and tested API for rolling out support. Something that made sense and worked. We wanted to do it “right” from the start. We also wanted to announce it with a set of “out of the box” working implementations to demonstrate Syphon, so its appeal would be apparent and immediately useful. Writing those implementations was also a real world test suite to see if the idea was even remotely sound and useful and, importantly, stable. Releasing something as a sharing toolkit, with no useful options from the start, is a bit of a lackluster, self-defeating announcement. We wanted to avoid that. I guess in a way we approached it like a real commercial product. Weird.

How do you balance development of free tools like Syphon with your “day job” with Noise Industries? Do you ever think “I wish I could open source this cool thing I’m building”?

It’s kind of a mixed bag. Noise Industries has already created an amazingly useful set of plugins for Quartz Composer that you can use and leverage if you’ve installed FXFactoryPro. The main products are the plugins for After Effects, Final Cut and Motion. We build those based on some additional “lower level” tools we add to Quartz Composer. Those lower level tools are actually usable outside of FXFactoryPro (and the hosts), so while they are not free and open-source, they are accessible and usable to “power users” who want to build their own effects for Final Cut, Motion and After Effects (or play with inside of Quartz Composer and friends). There are tons of useful utility objects that make working with Quartz Composer easier, most of which I wish were standard in the environment.

Some personal projects of mine, for example specific effects and “looks” that I have a personal attachment to, I don’t release – even though they are not even work related. Those are not re-usable, general utilities or commonly useful effects – they have specific looks and feels, and therefore have more of a personal “signature” within them. My private policy is: when I get bored and tired of a specific effect (that I’ve found has a sort of “signature”) it’s a candidate for release. I have a lot of things I am nowhere near finished exploring aesthetically, but also have a myriad of unfinished, generally useful tools for end users.

That said, if I had my way, I would like everything to eventually be made open source, only because it pushes the field and the art along further and faster, and in more unexpected and unintended ways. Sadly however, donations for open source projects are so rare that a living wage is pretty much impossible to earn (strictly on the programming side). So I think there is some balance to be struck, but I definitely err on the side of wanting to share, and discuss pretty much everything.

I remember a funny comment someone left on your Vimeo recently: “Vade: you solve all the right problems !” Besides Syphon, you’ve spent a lot of time working on other essential tools: like solid playback and recording, or, more recently, 3d model loading in Quartz Composer. How do you decide what “problems to solve”, and how do you go about solving them?

Ha! If only!

I try to solve the problems I encounter when working on a project, or trying to develop or explore an aesthetic; I don’t really go “looking” for problems. If I encounter something that seems like a huge task, I ask myself if I really need to be thorough, or am I going for just an aesthetic look.

If it’s a pure aesthetic issue and the “correct” solution is non-trivial, my first impulse is usually to cheat. I.e., “how can I emulate this look without doing all of the hard work”. Take the glitch plugins. Some of them are real, genuine glitch “artifacts” (in the sense that you are getting incomplete frames, data that is interpreted incorrectly – and you don’t always know what you will get back), and others are total fakes – coined “glitch alike” – that simply emulate looks. Most of the time I can settle for emulation, because it’s usually faster (both in a development and a performance sense). If I can make genuine glitch easily enough, I’ll go for it.

On the other hand, some solutions by their nature need to be “correct” in a technical sense (movie playback, 3D model loading – cheating those tends to work to your disadvantage, it just won’t work at all, or inconsistently). So if that is the task at hand, and it can be done, I’ll try and go that route.

My thought process tends to be: “is this fast enough for a realtime performance environment?”. If not, fake it! If I can’t fake it, or can’t solve it, research it some more, and put it on the back burner until someone else does, and move along in the meantime. I think I’m fairly pragmatic in my approaches, and try not to beat a dead horse too often. I can’t even begin to tell you how many half-implemented, “unsolved” projects I have sitting around.

A big part of your work is very purely about visual aesthetics and performing live visuals. When you’re releasing tools that are essential to your “look”, do you ever worry about other people “looking the same”?

At some level, different people use the same tools in different capacities and styles. Two people might play the same piano, but the style, mastery, artistry and creativity of each is unique. It’s apparent pretty quickly, I think. So in the sense of “looking the same”, generally I am not too worried about that. Some effects however, really do have a very, very specific aesthetic, and can, for lack of a better word, be far too personal to release.

Let me elaborate.

I recall going to a performance and seeing a wonderful visual set, and in speaking to the artist I discovered some components of his show used some effects I had released. I had no idea. It was fabulous. They really made it their own, integrated it and used it in ways I would not have. That was really fun and unexpected, and gave me ideas I would not have had otherwise.

Devan Simunovich took some v001 code and made an amazingly awesome performance environment he shared called loryder, taking approaches I never would have with the interface, and getting fantastic results.

When I was in Rome for Live Performers Meeting I guest lectured at a Quartz Composer workshop and showed multiple plugins, shared ideas and techniques. This was the first time I shared the glitch plugins publicly. The next day I saw a classroom full of people getting different looks out of the same basic tools. Those are the sorts of users that make sharing very worthwhile.

There was also enough flexibility in all of the above tools that people could find their own voices.

I mentioned a bit of my personal policy above; I think there are some effects that strictly speaking have a very finely tuned aesthetic signature, and even thinking about giving that away is really uncomfortable for me, because it’s a bit too close to home – it’s a bit too… “me”… They are essentially “make it look like this” buttons. There is not much room to explore in them, but they may have striking results. Those ones are really hard for me to let go of. In essence, I’ve strongly encoded an aesthetic sensibility into them, and they are not very flexible in expressing more than just that one sensibility – sort of visual pop-culture one hit wonders (hah). I’ve gotten emails from people looking at images or videos I’ve posted, asking for specific effects, or where to download them. Some people even email me attempts at re-creating them, finding different solutions (some totally unlike my original, but really nice and fun to explore in their own right). This also makes me appreciate Josh Goldberg’s openness about Dervish that much more. Those “one hit wonders” tend to be approaches or looks I’ve not yet finished exploring, and am, in a way, attached to because I still find them maddening.

I’m not sure I’ve really found a right approach, but I try to keep a balance. It’s not set in stone, and I change my mind about it every week or so (really). It’s a very nuanced issue for me, and I don’t think there is a right or wrong answer. Currently, I try to release flexible tools that have enough push and pull in them that you can stumble on and find unique looks. If something I’ve made does not have that flexibility, in a way, it’s not quite finished, and not ready for release.

v001 and v002 are licensed as cc-attribution-noncommercial. And your interpretation is that it only applies to the code — so people are free to perform with it in a commercial context, just not free to sell it or integrate it into commercial software. Syphon is Simplified BSD, which basically means you can do whatever you want with it. Can you explain why you chose these licenses?

I’ve explained the licensing of Syphon above. It’s more of a utility – meaning it carries little creative inertia in and of itself. It’s not a tool that guides or informs visuals – it helps you mix tools that otherwise might not play well together. Licensing was set up to spur adoption and creation of tools like MadMapper, so I think the BSD licensing was really necessary.

The v002 / v001 licenses (and code) are much more specific in their creative intent, and so in many ways more personal, and more purely artistic endeavors. They are meant to spur creative dialog and hopefully have people learn from them, to make new and better open tools and interfaces. The licensing is meant to reflect that, and strike a balance to help give back to that dialogue. I think allowing commercialization can stifle that impulse. It’s really just a way to encourage more discourse and experimentation. I’m still not sure if those licenses are correct (why not GPL, for example), but I like their simplicity, even though they are not strictly intended for code.

I really wish I never had to deal with licensing at all. It’s not fun to think about, but a sort of necessary evil.

How do you stay connected with the people using your tools?

There are some communities I follow pretty closely, almost daily – the Quartz Composer and Jitter communities – so I tend to eventually stumble on to postings and questions that I can help with, or that happen to use my tools. Other times, I will get emails from users, discussing a particular issue regarding Syphon and some other 3rd party app. If that’s the case, I try to discuss the problems on the software’s board or forum. Tom and I also have set up a v002 and Syphon forum that gets some activity.

Most of the communication I have is, unfortunately, troubleshooting or feature requests. I would love to see more people showing me how they have used the tools, and what they have created. That is far more rewarding and interesting to me.

Glitch, which is a huge part of your visual work, has an interesting relationship with sharing. Some people react to discovery of a glitch by holding the glitch captive, making it their secret, and sharing the results without sharing the process. But it’s much more common for people to try and explain to others how it happened, how they might recreate it, and work on a mutual understanding of the phenomena. Last year there was some discussion around motion compression artifacts/datamoshing. There was a funny balance between a few people keeping it to themselves, and tons of others making software to recreate the effect. I’m also thinking of the more academic sharing in Rosa Menkman’s “Vernacular of File Formats” or Cory Arcangel’s “On Compression”, which explore glitch through explanation. You don’t really write tutorials, and you don’t write essays, but your software is accomplishing something similar by democratizing the means to the creation of these glitches. How would you describe your relationship with glitch?

When I first “understood” Glitch, I saw it purely as an aesthetic experience. I am interested mostly in beauty (ha), and trying to re-create what I see in my mind on to the screen. A lot of glitch theory discusses politics of systems and how glitches expose the internals of systems to the public in unexpected and unintended ways, and how glitch is larger than just technical issues, but can be understood in a more social context, can help understand the power politics, and expose all sorts of other phenomena.

Going to the GLI.TC/H festival in Chicago made me much more aware of these sorts of… “academic” viewpoints on the phenomenon. I am personally not as interested in that aspect for my own work. One might say I make more “Glitch Design” than “Glitch Art”, if I were to use Rosa’s terminology. I have no problems with emulations of looks, cheating, or “controlling” the Glitch to get looks that I like or want, when I like or want them, because for me it is a tool, and a means to an end. The end is expressing what is in my mind when I play along to a song, hear a sound, or want to create a mood.

In many ways, at the time I was interested in Glitch, it was a relatively new and unexplored space (at least in the circles I was running in), rife with opportunities. I clung on and perhaps rode some of that wave. It resonated with my musical tastes and with what I was interested in, and, let’s be frank, breaking things is always cool. It was just fun.

But from a strict community perspective, I tend to not participate in the more academic discussions. I make tools, and I share them with others because at the end of the day, it’s just as beneficial to me as it is to them. Using a term I heard in Chicago, I “domesticate” glitch into something more controllable, and easier to approach.

You worked with Bill Etra on your Rutt-Etra synth. For him, it’s “the beginnings of what [he] had dreamt of” after decades of waiting. Did he ever imagine it would exist as software instead of hardware? How do you both feel about this, given the fact that it can be duplicated and given away at zero cost whereas before it was a bulky knob-covered box?

The real hardware is so capable and does so much more than what it’s commonly understood to do. Benton C. Bainbridge has shown me up close and personal some of its quirkier and more interesting aspects, things the software cannot currently emulate at all, at some level just because of its digital roots, compared to the hardware’s genuine analog circuitry.

However, I’m sure Bill expected “visual instruments” to move to software; he was and is acutely aware of trends in the field. He has a vision of a “video orchestra”, where multiple users can play multiple instruments all in tune, on one screen, in visual harmony, where orchestral sections contribute differently to the overall picture. Software is the logical extension and choice for that metaphor, but I think there is a long way to go for even single user instruments and interfaces. I think Bill is happy that versions of the Rutt-Etra are available for free, so more people can experience and experiment with his work, and can spend hours upon hours toying and experimenting.

For me, I think it’s great. I feel very strongly that anyone should be able to explore realtime creativity without the need for bulky boxes, expensive software or hardware (I say this, ironically, while typing on a $2000 laptop, har). The trend has always been towards cheaper, smaller and more flexible solutions. I think the software Rutt is simply part of that trend, and I was and am very fortunate to work with Bill on it.

How do you see performance of live visuals changing in the future, and what effect do you see the visualist community having? Do you see more people making their own tools and sharing them, or more people using others’ tools?

I think we will see much more custom tool creation, and I suspect, sharing of those new tools.

Pre-designed software, be it commercial or open source, pre-supposes how users intend to interact with it, what they want to do, and how they ought to do it, what the pipeline and the process ought to be. Those interactions and assumptions can be limiting, or totally off base if someone has a specific performance or interaction idea in mind. I think as environments like openFrameworks, Cinder, Max/MSP/Jitter and Quartz Composer (and friends) evolve, we will see even more approaches to how visual media is performed, what sorts of interface metaphors make sense, and paradigm shifts in control surfaces, interaction, etc. Traditional VJ software is going to have a lot of adapting to do, I think.

Some of those approaches will only make sense for specific performance pieces (very specific needs or very specific interaction paradigms that can’t be implemented elsewhere, simply because you won’t have, say, 50 dancers, each wearing infrared LEDs, and overhead cameras tracking them). Others may be more general performance software and experimental interfaces to performing visuals, or just cheaper, faster, open source takes on current paradigms. But the trend I think is already leaning towards more open source, written by artists, for artistic solutions.

The cost of entry for rolling your own solution is getting lower and lower by the day, which means more and more ideas and experiments will come into play. I think there is a lot of work to be done on the interface side alone. I hope I can be a part of that process. I have lots of ideas.