If you haven’t yet seen the commentary at Music Thing or (originally) at WWMNA: David Merrill of the MIT Media Lab has a project for controlling guitar effects with his face. (It’s not new, incidentally. It dates to 2003, and you can look forward to more of this sort of thing at the annual New Interfaces for Musical Expression conference, aka NIME, next held in Paris in June 2006.)

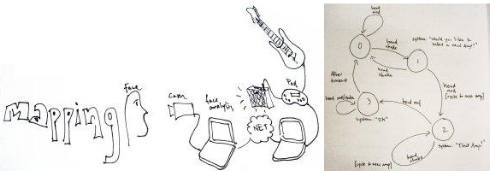

So, uh, aside from being weird, could this ever actually be useful and not just freaky? Possibly. Gestural mapping is where it gets interesting: David uses a nod to trigger an event, for instance. As video processing gets cheaper relative to available computing power, and gesture recognition gets smarter, you could eventually interact with your tech the way you would with another musician. Hell, if your musician friends ignore you the way mine do, you may have even more luck with the tech.
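To make "a nod triggers an event" concrete, here is a minimal sketch of how such a trigger could work. It assumes a face tracker (hypothetical here) that reports the vertical position of the face once per video frame, in image coordinates where a larger y means the head moved down. This is not FaceSense or David’s code; the function name `detect_nods` and the `threshold`, `max_frames`, and `alpha` parameters are illustrative assumptions. A nod counts as a quick dip below a slowly adapting resting position, followed by a return.

```python
from typing import List, Optional, Sequence


def detect_nods(ys: Sequence[float], threshold: float = 0.05,
                max_frames: int = 15, alpha: float = 0.1) -> List[int]:
    """Return the frame indices at which a nod completes.

    ys: hypothetical per-frame vertical face position (larger = lower).
    threshold: how far below the resting position counts as "down".
    max_frames: longest dip still treated as a nod (about 0.5 s at 30 fps).
    alpha: how quickly the resting position follows slow posture drift.
    """
    nods: List[int] = []
    baseline = ys[0] if ys else 0.0
    down_at: Optional[int] = None  # frame where the head dipped down
    for i, y in enumerate(ys):
        if down_at is None:
            if y - baseline > threshold:
                down_at = i  # head went down: start of a candidate nod
            else:
                # Idle: let the baseline follow slow changes in posture.
                baseline = (1 - alpha) * baseline + alpha * y
        elif y - baseline < threshold / 2:
            # Head came back up; count it only if the dip was quick.
            if i - down_at <= max_frames:
                nods.append(i)
            down_at = None
        elif i - down_at > max_frames:
            # Held down too long: a posture change, not a nod.
            baseline = y
            down_at = None
    return nods


# One quick dip of five frames, then back to rest.
print(detect_nods([0.5] * 10 + [0.6] * 5 + [0.5] * 10))
```

In a live rig you would run the same logic frame by frame and, on each detected nod, toggle an effect or send a MIDI message. The adaptive baseline is the design choice that matters most here: it lets the detector ignore slow slouching or leaning while still catching quick, deliberate gestures.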

What’s mysterious here is the software David mentions; check the PDF for more project details. It’s a modified version of something called FaceSense, running on Linux, so don’t expect it to be something you can just go download. See also:

- Vicon, a provider of pro-level motion capture and control systems
- Visual Tracking of Movements for a Gesture-Based Interface, a detailed paper by Daniel Hunnisett
- Mouthesizer, which reads mouth positions (also from NIME 2003, where it was demoed controlling guitar effects)
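The Mouthesizer entry describes mapping mouth shape to guitar effects. As a hypothetical illustration of that kind of continuous mapping (not the Mouthesizer’s actual code), here is a sketch that turns a normalized mouth opening into a wah-style filter cutoff. The 0-to-1 opening value, the function name `mouth_to_cutoff`, and the 200 Hz to 4 kHz range are all assumptions. The mapping is exponential so that equal changes in mouth opening sound like equal steps in pitch.

```python
import math


def mouth_to_cutoff(opening: float, f_min: float = 200.0,
                    f_max: float = 4000.0) -> float:
    """Map a hypothetical mouth opening (0 = closed, 1 = wide open)
    to a filter cutoff frequency in Hz on an exponential scale."""
    x = min(max(opening, 0.0), 1.0)  # clamp noisy tracker values
    return f_min * (f_max / f_min) ** x


# Halfway open lands at the geometric mean of the range, not the midpoint.
print(round(mouth_to_cutoff(0.5), 1))
```

A linear mapping would spend most of the mouth’s range on high frequencies, where the ear hears little change; the exponential curve spreads the sweep more evenly by ear.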

Ultimately, motion capture promises performance and expression without any physical instrument at all, with the whole body as the interface. Now go build something that lets you control your synth with your eyebrows, okay?