Apple is filling in a key piece in the Spatial Audio puzzle – tracking the position of your head through the company’s headphones for more realistic immersion.

Spatial Audio is already popular just weeks after its launch on Apple Music, backed by a full-court press from Apple themselves on their own platforms and in the media. Spatial Audio plays are reportedly growing 20 times faster than ordinary plays, reaching some 40 million Apple Music listeners. You’d expect a spike while the tech is new, but it still suggests folks are interested.

All in your head

But plays aside, does it actually sound as immersive as advertised? Well, there’s the first problem. Playback of formats like Dolby Atmos really benefits from carefully positioned speakers all around you. That’s, of course, the advantage cinema has – you walk into a space where the speakers are already positioned and calibrated. You might not need thirteen speakers as in an Atmos cinema rig – for music, four is actually not a bad number. But for headphone listening, you run into an age-old problem. Headphone spatialization would work perfectly if we all had the same head and ears, because the directional cues your brain relies on depend on how sound bends around your particular head and outer ears before it reaches each eardrum. But we don’t, which means the effect is going to stay crude until someone works out easy calibration for consumers.

That’s why I think head tracking matters. If you’re somewhere with any ambient sound and have even basic hearing in both ears, you can prove this to yourself. Close your eyes, listen for a particular sound – dog barking, car, fly buzzing, whatever – and try to imagine where it is. Now cock your head slightly. It’s easier to hear where that sound is, right? Researchers will tell you that even slightly reorienting your head almost instantly helps you perceive position more clearly, because the movement changes the timing and level differences between your two ears in a way your brain can use. (Science!)
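To put a rough number on one of those cues, here’s a minimal Python sketch of the interaural time difference (ITD), the gap between a sound reaching your near ear and your far ear. It assumes Woodworth’s classic spherical-head approximation, an average head radius of 8.75 cm, and a speed of sound of 343 m/s; real heads and ears are messier, and the sketch only models turning your head left or right, not tilting it.

```python
import math

# Assumed values for an average adult head in room-temperature air.
HEAD_RADIUS_M = 0.0875
SPEED_OF_SOUND_M_S = 343.0


def itd_seconds(azimuth_deg):
    """Woodworth spherical-head estimate of interaural time difference.

    Azimuth is in degrees, counterclockwise from straight ahead (positive =
    listener's left). Positive results mean the sound reaches the left ear first.
    """
    theta = math.radians(azimuth_deg)
    # Fold rear directions onto the front: a source at 150 degrees has the same
    # lateral angle (30 degrees) as one at 30 degrees, hence the same ITD.
    lateral = math.asin(math.sin(theta))
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (lateral + math.sin(lateral))


# A source straight ahead, before and after turning your head 10 degrees left
# (the source then sits 10 degrees to your right, i.e. at -10 degrees).
for azimuth in (0.0, -10.0):
    print(f"{azimuth:+.0f} deg: {itd_seconds(azimuth) * 1e6:.1f} microseconds")
```

A source dead ahead gives an ITD of zero; after a 10-degree turn it gives just under 90 microseconds. Note that this model gives the same ITD for a source 30 degrees in front and 150 degrees behind (the classic front/back confusion), while turning your head shifts those two in opposite directions, which is one reason a small head movement helps so much.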

Head tracking pays off in two ways. On one level, it lets sound objects stay put in space when you move your head, as if they’re really there – just as if a band or orchestra were playing on a stage, for instance. That alone opens up cool creative possibilities and a reason to use the format. But on another level, it should immediately make Spatial Audio tracks sound more convincingly three-dimensional than other tracks.
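The first of those effects boils down to simple bookkeeping: subtract the head’s rotation from each source’s position before rendering. Here’s a minimal Python sketch of that idea, assuming yaw only (left/right turns), angles in degrees with positive meaning the listener’s left, and a hypothetical three-piece “band” layout. Real trackers report full 3D orientation (on iOS, Core Motion’s CMHeadphoneMotionManager provides this kind of data), and the sketch is not meant to represent Apple’s implementation.

```python
def head_relative_azimuth(source_azimuth_deg, head_yaw_deg):
    """Where a world-fixed source appears relative to the listener's nose.

    Angles are in degrees, counterclockwise (positive = listener's left).
    The result is wrapped into the range [-180, 180).
    """
    return (source_azimuth_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


# Hypothetical stage layout, in degrees relative to the room.
BAND = {"vocals": 0.0, "guitar": 40.0, "keys": -40.0}

for yaw in (0.0, 30.0):  # head straight ahead, then turned 30 degrees left
    placed = {name: head_relative_azimuth(az, yaw) for name, az in BAND.items()}
    print(f"head yaw {yaw:+.0f} deg -> {placed}")
```

With the head turned 30 degrees to the left, the vocals land 30 degrees to your right, where they’d be if the band stayed on a real stage. Without tracking, a renderer would simply use the stage positions as-is, so the whole band would turn with your head.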

This kind of immersive sound also matters for any push into virtual, augmented, or mixed reality, which ties it to Apple’s larger strategy.

Apple is really out ahead of the curve on this as the first streaming service to offer the technology. They’re also unique as a company with a streaming service and a phone and headphones and a computer platform and music production software (Apple Music, iPhone, AirPods Pro and AirPods Max, the Mac and iPad, Logic Pro and GarageBand – phew). Of their competitors, Google, Facebook, and Amazon come closest, and you can bet they’ll try something similar, but Apple’s inclusion of us – the folks actually making and mixing the music – may ultimately be what makes this interesting. So I do expect other streaming services to follow suit, but their combinations of authoring and playback tools will likely be more complicated.

The playback picture

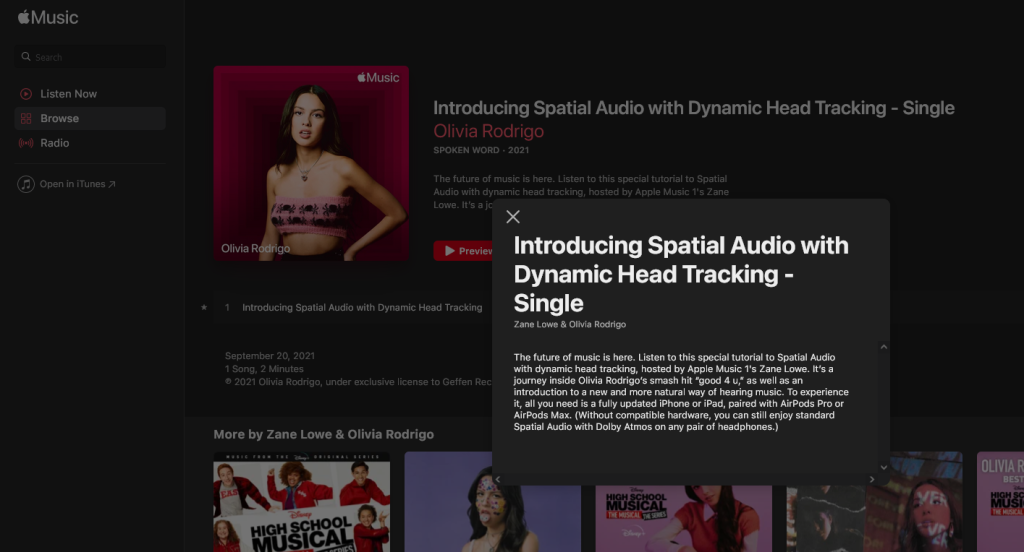

For now, Spatial Audio with head tracking is limited to a narrow set of hardware, though it requires essentially zero effort to set up if you’ve got that hardware. (Apple calls the tech “dynamic head-tracking,” which seems redundant – I’m not sure what nondynamic head tracking would be. But okay, sure. Probably not the worst jargon in immersive audio ever.)

You need either Apple’s AirPods Pro or the over-ear AirPods Max. AirPods Pro already work really well from a head-tracking standpoint, and they’re the ones you most likely already own. I’m eager to try the Max to actually hear the music, as earbuds just can’t provide the sense of soundstage scale that over-ear headphones can. (I’ve actually talked to researchers using the AirPods Pro just for the tracking feature, which is accessible through the iOS SDKs.)

And you need iOS 15, out right now – so it’s time to plug in your iPhone or iPad and stop dismissing that update prompt!

Once you have that combo, Spatial Audio and dynamic head-tracking are on by default, unless you switch them off.

My headphones, my choice

Speaking of which, you’ll have easy(-ish) ways to toggle this on and off, which is good. If you’re like me, some music annoys you even in stereo because it simply sounds better in mono. (“Wouldn’t It Be Nice,” anyone? Even Brian Wilson couldn’t convince me otherwise.)

Spatial Audio can be toggled on and off from Control Center in iOS 15 (the pop-up where you adjust brightness, switch on Airplane Mode, and so on):

Control spatial audio on AirPods with iPhone [Apple support notes]

Head tracking also seems to be on by default, and so far I can’t work out whether it can be toggled from Control Center. It appears Apple has put the setting under Settings > Accessibility > AirPods:

Adjust the accessibility settings for AirPods Max and AirPods Pro on iPhone

If a track supports Spatial Audio – meaning the music itself is encoded as Dolby Atmos Music for Apple Music – you’ll hear the multichannel mix spatialized wherever the mix engineer decided to put the sounds. (Whether they decided wisely is a discussion for another time; it’s clear some music engineers understand and have experience with spatialization, and some in the music world simply do not. Cinema obviously has a long head start here: music went stereo in the 1930s and hasn’t widely progressed beyond that, whereas cinema made its first foray into multichannel audio and rear speakers in 1940 with Disney’s Fantasia.)

If music is stereo only, Apple can still spatialize and head-track it, basically positioning the stereo image on a virtual soundstage and tracking your head accordingly. There’s a precedent for this: for years, receivers supporting Dolby’s surround decoding had modes labeled things like ‘cinema,’ ‘stadium,’ or ‘hall,’ which applied spatialization and reverberation effects to stereo streams, either simulated on stereo speakers or decoded to multi-speaker setups. (I think I said that accurately – it’s been a while since the 1990s, folks.)

Will the terms confuse consumers? Yeah, probably. Everything old is new again; Dolby has been doing that for generations.

“It was mixed wrong” … well, actually, possibly. Dublin Matmos? Doubly Hat Moss?

Certainly can can can

But Apple’s ace in the hole here is, of course, Apple hardware. Dolby Atmos may be the industry-standard delivery format, but Apple is using their own 11 herbs and spices to encode for the streaming platform and then to render and spatialize what you hear on the headphones. Motion sensors and custom Apple chips in the AirPods Pro and Max track where your head is positioned, and the audio is rendered into something that sounds immersive and convincing.

There’s also a peculiarity here: Apple is simultaneously touting Lossless Audio and Spatial Audio and comparing both to “compressed audio” as the legacy streaming format. Yet neither AirPods Pro nor AirPods Max supports lossless audio, so for now it’s really one or the other. Both are good technologies and likely the future of music listening and all that, but for now, you can’t have both at once.
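A likely reason for that either/or, though not one Apple spells out here, is wireless bandwidth, and a little arithmetic shows the scale of the problem. This Python sketch computes raw PCM bitrates and compares them with an assumed wireless audio budget of 256 kbps; that figure is an illustrative assumption, not a published AirPods or Bluetooth spec.

```python
def pcm_kbps(sample_rate_hz, bit_depth, channels):
    """Raw, uncompressed PCM bitrate in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000.0


# Illustrative assumption only, not a published AirPods or Bluetooth spec.
ASSUMED_WIRELESS_BUDGET_KBPS = 256.0

FORMATS = {
    "16-bit/44.1 kHz stereo (CD quality)": (44_100, 16, 2),
    "24-bit/96 kHz stereo": (96_000, 24, 2),
}

for label, (rate, bits, chans) in FORMATS.items():
    kbps = pcm_kbps(rate, bits, chans)
    ratio = kbps / ASSUMED_WIRELESS_BUDGET_KBPS
    print(f"{label}: {kbps:.1f} kbps, {ratio:.1f}x the assumed budget")
```

CD-quality stereo works out to 1411.2 kbps, roughly five and a half times the assumed budget, and 24-bit/96 kHz to 4608 kbps. Lossless codecs such as ALAC shrink those figures, but typically by something like half, not by the factor needed.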

Of course, a lot of us would like to use our own headphones or speakers, but don’t sweat the walled garden too much here. Apple providing an end-to-end solution helps jump-start the basic idea; you can bet there will be other ways to listen. If you’ve got Atmos-ready equipment, it’ll play Spatial Audio mixes, minus the head tracking. I expect third-party head-tracking solutions will also work on their own, though, it seems, probably without Apple Music support.

The reason for this compatibility is that the mix itself doesn’t depend on particular hardware or even particular channel configurations. Which brings us to the next part of this story…

Independents before majors – from the start of the process, not the end

Maybe the biggest danger to Apple Music’s approach is that the majors will go make some brainless, weird Atmos versions of their hit tracks and put people off the format. I still need to listen through Apple Music’s offerings properly, and I don’t feel I can hear well enough on AirPods Pro to really judge. To be honest, I’m pretty happy hearing productions intended for stereo mastered for stereo, so I’d have to be convinced.

But I think there’s still reason to be more bullish on music mixed for the format, or on the more focused experience of hearing stereo positioned on a soundstage instead of “stuck” in your head; it might even encourage you to sit back and really listen.

So for that reason, I’m eager to hear music made for the format, conceived as a multichannel, spatialized composition from the start. There’s also reason to think you might compose music for spatial contexts, from VR/AR/XR to live events with spatial sound systems to games and apps, and want to offer a consistent spatial setup across them. I think it’s exciting to see those tools democratized: we’re already used to hearing their impact in cinema, but most of us haven’t been able to make music for that context or afford the tools to do it. And about that:

The production picture

Apple has quietly pushed their own brand front and center, and you’ll see discussion of Spatial Audio rather than Dolby Atmos – which makes sense, as Spatial Audio on iOS includes SDKs that have nothing to do with Atmos. But Atmos does remain the delivery format for music beyond stereo.

And there’s a real danger that Atmos could scare people off, understandably. For instance:

Okay, first – totally. (I’ll admit I don’t have twelve – actually thirteen – speakers.) But second – not exactly.

So the confusion here is that there’s Dolby Atmos, and then there’s Dolby Atmos. Cough. An Atmos studio properly equipped for delivery to cinema (and, by extension, to games using the format) is in fact supposed to have a 12.1 setup. Expensive, yes – but not remotely the worst of the massively expensive toolchains those industries use. (They charge more, too, so maybe we should all switch.) The full toolset for Atmos mastering and delivery can also get pricey if you want all the bells and whistles.
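The numbers in layout names follow a convention: main (ear-level) speakers, then the LFE (subwoofer) channel, then overhead height speakers if there are any. So “12.1” is twelve full-range speakers plus a sub, which is where thirteen comes from, while a layout like “7.1.4” adds a layer of four overhead speakers. Here’s a hypothetical Python helper that breaks such strings apart; it only illustrates the naming scheme and is not part of any Dolby tool.

```python
def parse_layout(layout):
    """Hypothetical helper: split a 'main.LFE.height' string such as '7.1.4'.

    Two-part names like '5.1' or '12.1' have no height layer.
    """
    parts = [int(p) for p in layout.split(".")]
    if not 2 <= len(parts) <= 3:
        raise ValueError(f"unexpected layout string: {layout!r}")
    parts += [0] * (3 - len(parts))  # pad a missing height count with zero
    main, lfe, height = parts
    return {"main": main, "lfe": lfe, "height": height,
            "total": main + lfe + height}


for name in ("2.0", "5.1", "7.1.4", "9.1.6", "12.1"):
    print(name, parse_layout(name))
```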

Unless you’re working on sound for the next Marvel movie, though, building that studio yourself or even renting one is probably overkill. The Dolby Atmos Production Suite specs say as much. (See Avid’s site; Avid is a reseller, but the tools are available for other DAWs, too, and the panner works in hosts like Ableton Live, Nuendo, Logic, or whatever you like. The panner is free, and there’s a 90-day free trial – so crank out your Apple Music Spatial Audio mixes in a hurry, kids!)

So Atmos works the way other multichannel formats have always worked, going back to quadraphonic setups, or even to stereo, keeping in mind that stereo is itself essentially an “immersive” spatial format. You’ve got your multichannel recording (whether made in the box or with mics), some way of encoding spatial information (putting it into a format to distribute), and some way of decoding it (on the other end, so people can hear it). And you need some way of monitoring it while you work on the mix. The reality is, the simple way to get started on “immersive” sound beyond stereo is… find four matched speakers and set up two in front of you and two behind. Really. That’s the basic minimum. (See the story I did on quad with KamranV back in June.)
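To make the four-speaker idea concrete, and to show why a spatial mix doesn’t have to be tied to one speaker layout, here’s a minimal Python sketch that treats a sound as an object with a direction and renders it to whatever horizontal ring of speakers you have. It assumes simple pairwise constant-power (sine/cosine) panning between neighboring speakers, azimuths in degrees with positive meaning the listener’s left, and two hypothetical layouts; it is not Dolby’s renderer, which also handles height, object size, and much more.

```python
import math

# Hypothetical speaker layouts: name -> azimuth in degrees,
# counterclockwise from straight ahead (positive = listener's left).
LAYOUTS = {
    "stereo": {"L": 30.0, "R": -30.0},
    "quad": {"FL": 45.0, "FR": -45.0, "RL": 135.0, "RR": -135.0},
}


def render_object(azimuth_deg, layout):
    """Pairwise constant-power panning of one object onto a ring of speakers."""
    # Sort speakers around the circle so neighbours are adjacent in the list.
    ring = sorted(layout.items(), key=lambda kv: kv[1] % 360.0)
    az = azimuth_deg % 360.0
    gains = {name: 0.0 for name in layout}
    for i, (name_a, az_a) in enumerate(ring):
        name_b, az_b = ring[(i + 1) % len(ring)]
        span = (az_b - az_a) % 360.0    # arc from speaker a to speaker b
        offset = (az - az_a) % 360.0    # how far into that arc the object sits
        if offset <= span:
            t = offset / span
            # cos^2 + sin^2 = 1, so total power stays constant as t moves.
            gains[name_a] = math.cos(t * math.pi / 2)
            gains[name_b] = math.sin(t * math.pi / 2)
            return gains
    # Only reachable through floating-point rounding right at a speaker position.
    nearest = min(ring, key=lambda kv: abs((az - kv[1] + 180.0) % 360.0 - 180.0))
    gains[nearest[0]] = 1.0
    return gains


# The same object, straight ahead, rendered to both layouts.
for name, layout in LAYOUTS.items():
    print(name, {k: round(v, 3) for k, v in render_object(0.0, layout).items()})
```

The same object at 0 degrees comes out split equally between L and R in stereo, and between FL and FR in quad; only the layout changes, not the mix. Sources outside a layout’s arc (say, directly behind a stereo pair) are simply crossfaded across the gap, which is crude but keeps the sketch short.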

For their part, Dolby’s Atmos tools will happily let you monitor (and render, while you’re at it) anything from 2.0 to 9.1.6, as well as binaural and B-format, including over headphones. Part of the very reasonable criticism of all these Spatial Audio mixes – as the tweet points out above – is that the people who made them may well have been monitoring on headphones. And that is indeed a bad idea, because anyone doing so probably did not take the time to set up headphone monitoring calibrated to their own head. (See head-related transfer function. Oh, and expect that you may wind up making ear molds.) Worse, they may simply have no idea what these mixes are meant to sound like if they lack experience working in these formats. I was just in an Atmos studio, which I won’t name, where I was told some engineers came in and tried to position one microphone per Atmos speaker, which is not how any multichannel format is supposed to work. (Imagine keeping a ‘left’ mic and a ‘right’ mic, not as a pair, but isolated from one another, so each speaker has its own mix. It’d be horrible.)
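B-format is a good example of encoding and decoding as separate steps. Here’s a minimal Python sketch of horizontal-only first-order B-format: encoding a mono sample at a given direction into W, X, and Y, then a basic decode to a square of four speakers. It assumes the traditional FuMa weighting (W scaled by 1/√2), no height component, and the textbook “basic” decoder for a regular ring; it illustrates the math and does not describe how Dolby’s tools produce their B-format output.

```python
import math


def encode_bformat(sample, azimuth_deg):
    """Encode one mono sample as horizontal first-order B-format (FuMa weights)."""
    theta = math.radians(azimuth_deg)
    w = sample / math.sqrt(2.0)    # omnidirectional part, -3 dB under FuMa
    x = sample * math.cos(theta)   # front/back figure-of-eight
    y = sample * math.sin(theta)   # left/right figure-of-eight
    return w, x, y


def decode_regular_ring(w, x, y, speaker_azimuths_deg):
    """Basic (velocity) decode of horizontal B-format to a regular speaker ring."""
    n = len(speaker_azimuths_deg)
    feeds = []
    for az in speaker_azimuths_deg:
        phi = math.radians(az)
        # Undo the W weighting, then add the figure-of-eight pickup aimed at
        # this speaker; the 1/n and 2/n factors suit a regular 2D ring.
        feeds.append((math.sqrt(2.0) * w
                      + 2.0 * (x * math.cos(phi) + y * math.sin(phi))) / n)
    return feeds


# Hypothetical square layout: front-left, rear-left, rear-right, front-right.
QUAD = [45.0, 135.0, -135.0, -45.0]

print([round(f, 3) for f in decode_regular_ring(*encode_bformat(1.0, 45.0), QUAD)])
```

Encoding a source at 45 degrees and decoding it to the square gives 0.75 for the speaker at 45 degrees, 0.25 for its two neighbors, and -0.25 for the opposite speaker. That out-of-phase feed is a known quirk of basic decoding; practical decoders often use “in-phase” or “max-rE” weightings to tame it.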

There is a way out of this mess, though. Apple has already said it’s working on tools for Logic later this year. I hope they’ll offer both monitoring on studio setups with a minimum of four speakers and integrated support for Apple’s own headphones for quick checks of head-tracked Spatial Audio. And yeah, then we’re all likely to wind up buying AirPods Max for an extra fix – maybe not perfect studio headphones, but something that would give us a decent idea of what listeners will hear on the other end.

A four-speaker setup is not particularly elitist – at least not any more than a studio rig already is. You could get four of IK Multimedia’s iLoud Micro Monitors, for instance, and have an ideal setup pretty cheaply, small and portable enough that you could rig monitoring sessions in your bedroom or even a hotel room. Add a pair of AirPods Max and you’d still be under a grand. The idea, of course, would then be to go earn more than you spend, or at least I’ve been told by other people that’s how businesses are supposed to operate. (Eep!)

It’s also not the case that this rig would restrict you to Apple Music. I think the added cost and time of producing Spatial Audio in the hope of some streaming revenue won’t be worth it for small producers, underground music, and whatnot, just as streaming in general has been problematic for them. But you have the option of delivering to Apple Music if you want, and you still have the SDKs built into iOS if you want to deliver your music as an app or a game (or as the immersive soundtrack for one). It’s also not hard to imagine that at some point you could be buying downloads of immersive music the way you do stereo music on Bandcamp.

Expect, too, that your multichannel mix could be adapted to other Atmos systems and other consumer multichannel / surround systems.
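One common way to adapt a mix to fewer speakers is a fixed downmix. Here’s a minimal Python sketch that folds a quad mix to stereo by adding each rear channel to the front channel on the same side at about -3 dB, the same weighting the ITU-R BS.775 recommendation applies to surround channels in a 5.1-to-stereo downmix. Apple’s and Dolby’s renderers do their own thing; this is just the simplest illustration, with the channel names assumed for the example.

```python
import math

# About -3 dB, the rear weighting assumed for this sketch.
REAR_GAIN = 1.0 / math.sqrt(2.0)


def downmix_quad_to_stereo(fl, fr, rl, rr, rear_gain=REAR_GAIN):
    """Fold quad sample lists (equal length assumed) down to a stereo pair."""
    left = [f + rear_gain * r for f, r in zip(fl, rl)]
    right = [f + rear_gain * r for f, r in zip(fr, rr)]
    return left, right


left, right = downmix_quad_to_stereo([0.5, 0.0], [0.0, 0.5], [0.5, 0.0], [0.0, 0.0])
print(left, right)
```

Summing channels can push peaks past full scale, so a real downmix would also normalize or limit the result.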

It’s also a good thing that there are formats other than Dolby Atmos, some of which support more immersive sound (more speakers, more precise speaker technologies, more channels, more flexibility), and toolchains that deliver additional bang for the buck. Some of these are also free and open-source, which, apart from saving money or supporting an open ethos, can also mean the ability to take advantage of new research in sound. Cinemas – because of the inflexibility of the physical theaters and distribution systems – very rarely represent the state of the art, even if they do have very nice sound systems.

But I do expect Apple can make things easier for us on the Atmos side, which isn’t a bad thing. Their headphones already offer an alternative to DIY head-tracking solutions (in case you don’t want to duct-tape a circuit board inside your Yankees baseball cap). More than that, I’m really crossing my fingers that the few hundred bucks you have to fork over for Atmos encoding may give way to some built-in stuff in Logic. Just don’t be surprised if Apple unveils some kind of “Apple Pro” subscription service along the way in a few weeks – just $xx.99 a month for Final Cut Pro and Logic Pro and more iCloud space! and… you know how this goes.

I don’t want to sound overly optimistic here. I’m still concerned that, relative to stereo, which is easy, open, and doesn’t require any ecosystem, these spatial tools are heavily proprietary, including in Apple’s vision. (They’ve also been kind of a mess, both in how Atmos is supported and in how new color gamuts work.) On the other hand, the locked-down cinema version of this was already inaccessible. This is at least a step back toward democratizing those tools and providing other options. And the onus is on the vendors here, because otherwise consumers and producers alike will simply go back to stereo, which is cheaper and easier. So keeping in mind that in the end you want this stuff to work across music, gaming, and virtual/mixed reality, the winner in the long run may need to make it inexpensive, accessible, and interoperable.

Let’s see. New Macs seem imminent. A new Logic with more spatial features. These may all be the easiest predictions ever. And then maybe we’ll all be listening in Doubly.