It’s a dark and uncertain time across Latin America, so sending some serious love to everyone. All the more reason to take in the futuristic voices in today’s hybrid MUTEK session, from our friends Interspecifics and CNDSD + Iván Abreu.

Both of these programs are on today and streaming online as the pandemic rages on. And both acts have recent releases out, too, so – ¡Hola, viernes de Bandcamp! These folks are all serious inspirations to me.

Head to MUTEK at:

And see the schedule: https://virtual.mutek.org/en/es-ar-2021/schedule

CNDSD + Iván Abreu

I got to speak to Malitzin Cortes (CNDSD) in some detail for an interview about her work with Iván Abreu for the Patch XR Blog. They’re a live coding, audiovisual, machine-human interface power duo like none other – together they add up to, I think, about four people.

CNDSD & Iván Abreu: Expanding mind, self, and music in digital realms

This bit from Mali stuck out at me. I think it’s great, in that it pushes us all to embrace the new technology, stop worrying and love VR:

Whenever I talk about the tool or the concert that is going to be presented at Mutek 2021, in some other interviews many people find the VR element to be – and I quote – “unnecessary,” “too much,” “inaccessible for most,” “accessory” …

I think that this taboo weighs like a ghost on virtual reality, even in the year 2021. It is still in people’s imagination, an excess that lives in the world of science fiction. I think that when you have the experience, those taboos disappear and instead the mind expands.

And then take in some live-coded, utterly wild music in Mali’s new EP, just released last week.

Stuttering vocals, organic rhythms intermixed with machine patterning, ethereal textures and glitch – it’s a heady, liberated, free-flowing mix of musical ideas.

More to say about SuperCollider, TidalCycles, and the like – I’m adding some of those links, because mixing VR and code is something that for now is really new. And Patch XR is itself unique in that it’s not a pre-built environment, but a fully VR-native tool for constructing modular, generative synths.

Interspecifics

The Interspecifics collective, our dear friends Leslie Garcia, Paloma López, Emme Ang (Emmanuel Anguiano), and Felipe Rebolledo, continue their prolific romp through audiovisual media with still more work.

So, you can tune into their 24/7, algorithmic Recurrent Morphing Radio, which is still going strong with AI neural audio production ever since Berlin’s The Disappearance of Music this fall:

It has a wonderful post-apocalyptic vibe, as if the only thing to survive were an AI that has supplanted ClearChannel.

And they have this new work “Almost Non-Human” as an experimental installation in Brooklyn:

Here’s a brief teaser of their AV show:

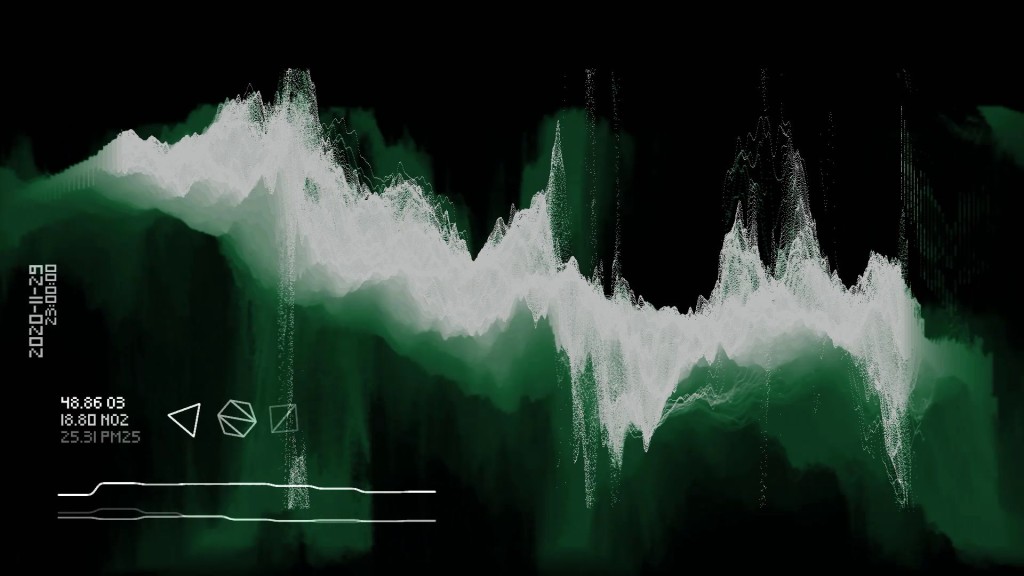

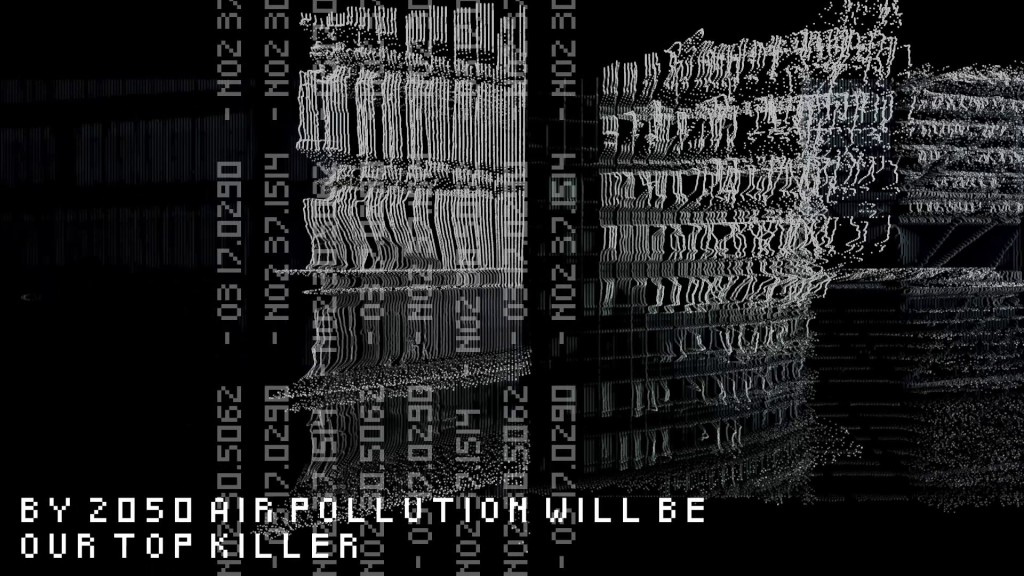

And there’s this achingly lovely if poignant score based on tracking pollution. That’s vital now – even with COVID-19 on our radar, air pollution remains a potent killer, and indeed pandemic lockdowns have not curbed its effects as some initially imagined they would.

They write:

Aire v.3 is an audiovisual album generated with machine learning tools that analyze patterns of the pollution from different cities around the world: Mexico City, Bogotá and Sao Paulo. The data comes from the predictive system of the Resource Watch, designed by the World Resource Institute and the NASA. Interspecifics has created a series of sound and visual compositions showing the pollution dynamics in the air of each of these three cities.

The first stage of this project revolves around an audiovisual experience in 10 minute segments where cityscapes made of elevations from 3D maps and satellite images are shown alongside generative sound compositions.

The pollutants are analyzed in a saturation scale, with each one assigned its own sound identity that responds to the flow of data by the means of a modulation or control signal. The most relevant and particular patterns, detected by the machine learning tool developed by Interspecifics, have the function of activating and silencing sound events as they occur, establishing the structure of the entire composition.

This project was developed with the support of the Air Quality Department of the World Resource Institute in Mexico, and with advice from Dr. Beatriz Cárdenas.

Credits

released April 16, 2021

Code, music and visualizations by Interspecifics (Emmanuel Anguiano, Leslie Garcia, Paloma López and Felipe Rebolledo).

Mastering by: Modos Estudio

Design by: Diego Madero

Interspecifics Aire V.3 Website: Diego Montesinos

Visit int-lab.cc/airev3 to get to know the full project.

OTN-015 / www.otono.space/interspecifics
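For the code-curious, here is a rough sketch of the kind of mapping those notes describe: a pollutant reading scaled to a 0 to 1 saturation value that acts as a control signal, plus a gate that switches a sound event on and off. To be clear, this is not Interspecifics' code. Their structure comes from patterns found by their own machine learning tool; a plain threshold gate with hysteresis stands in for that here. The `Voice` class, the `gate_events` function, the pollutant label, the range, the thresholds, and the readings are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Voice:
    """One pollutant with its own sound identity (hypothetical structure)."""
    name: str    # illustrative pollutant label
    low: float   # reading treated as 0% saturation (assumed value)
    high: float  # reading treated as 100% saturation (assumed value)

    def control(self, reading: float) -> float:
        """Scale a raw reading to a 0..1 control signal, clamped at both ends."""
        span = self.high - self.low
        return min(1.0, max(0.0, (reading - self.low) / span))


def gate_events(controls, on=0.7, off=0.4):
    """Simple hysteresis gate: turn on at or above `on`, off at or below `off`.

    A stand-in for pattern detection, not the project's actual method.
    """
    active = False
    events = []
    for step, value in enumerate(controls):
        if not active and value >= on:
            active = True
            events.append((step, "on"))
        elif active and value <= off:
            active = False
            events.append((step, "off"))
    return events


# Invented readings, not real air-quality data.
pm25 = Voice("pm2.5", low=0.0, high=100.0)
readings = [10.0, 35.0, 80.0, 90.0, 55.0, 30.0, 75.0]
controls = [pm25.control(r) for r in readings]
events = gate_events(controls)
print(events)
```

In a live setup the control value would modulate something like a filter cutoff or amplitude in SuperCollider or a similar engine, while the on and off events would start and stop the voice.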

Plus more images to whet your appetite:

And more on Interspecifics’ Instagram.