The latest from NVIDIA Research is an AI-powered machine that spits out 3D models – in standard formats. Code is inbound shortly. And that opens up some possibilities for virtual worlds, especially for solo artists.

The best part of this particular approach is that it outputs reasonably high-quality textures and meshes from whatever dataset you want. (I may soon sound like a broken record, but the most enduring AI techniques are likely to be the ones that let you use your own datasets.)

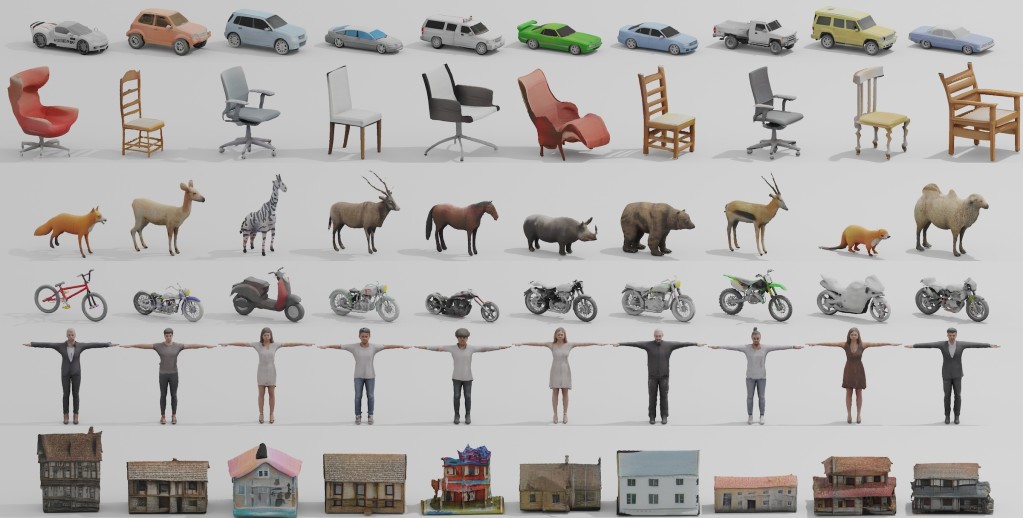

Input images, output textured 3D models you can import anywhere:

With a training dataset of 2D car images, for example, it creates a collection of sedans, trucks, race cars and vans. When trained on animal images, it comes up with creatures such as foxes, rhinos, horses and bears. Given chairs, the model generates assorted swivel chairs, dining chairs and cozy recliners.

It’ll sure make things like flight simulators more interesting – but artistic possibilities make sense here, too. (Plus, since it’s running locally – if you want to make a bunch of dicks, you know, you’re free to do that, too, really. No judgment.)

It makes me think of my fascination growing up with Preiser figures and accessories for model trains. There’s just something about having lots of things filling up a world.

It runs efficiently on a local GPU, too – NVIDIA promises 20 shapes per second when running inference on a single GPU. So as we’ve seen recently from creative vendors, this is one of the big applications of AI for visuals – local training sets, local output, scaled to even consumer-class GPUs. I imagine that even legal issues could provide incentive for local production, especially now that the supply chain constraints on graphics cards have eased.
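To put that 20-shapes-per-second figure in perspective, here's a quick back-of-the-envelope sketch in Python. The function name and the object counts are my own illustrative assumptions, not anything from NVIDIA, and it treats the quoted rate as constant, which real hardware may not manage.

```python
def seconds_to_generate(num_shapes: int, shapes_per_second: float = 20.0) -> float:
    """Estimate seconds needed to generate num_shapes at a fixed rate.

    The 20.0 default is the single-GPU inference figure quoted above;
    everything else here is a hypothetical illustration.
    """
    if shapes_per_second <= 0:
        raise ValueError("shapes_per_second must be positive")
    return num_shapes / shapes_per_second


# Hypothetical scene sizes, from a small prop set to a crowded world.
for count in (100, 10_000, 1_000_000):
    secs = seconds_to_generate(count)
    print(f"{count:>9,} shapes: {secs:,.0f} s (~{secs / 3600:.2f} h)")
```

At that rate, 10,000 objects would take a little over eight minutes on one GPU, assuming the quoted throughput holds.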

Check out the blog post:

World-Class: NVIDIA Research Builds AI Model to Populate Virtual Worlds With 3D Objects, Characters

And the paper is already live:

https://nv-tlabs.github.io/GET3D/

(Jun Gao, Tianchang Shen, Zian Wang, Wenzheng Chen, Kangxue Yin, Daiqing Li, Or Litany, Zan Gojcic, Sanja Fidler)

Plus on GitHub (code forthcoming):

https://github.com/nv-tlabs/GET3D

I’ll post an update when the code is live. (PyTorch!)

The conference the researchers are presenting at sounds interesting, too – that’s the NeurIPS AI conference, taking place in New Orleans and virtually, Nov. 26-Dec. 4.