The concept prototype Project LYDIA by Roland Future Design Lab uses machine learning tech from Neutone to turn any input into a “tone.” But this isn’t just about “modeling” — far from it. It lets you process anything with anything else. Beatboxing, field recordings — anything becomes an input. That emphasis on sampling and messing around in the real world might make it the opposite of genAI sound. This is tech that demands you go out and play.

Roland is building on some history from decades past here. The company’s AMDEK “Analog Music Digital Electronic Kits” DIY pedals, first introduced in 1981, were a low-cost way for tinkerers to get into pedals. So hopefully this signals a return to that AMDEK heritage.

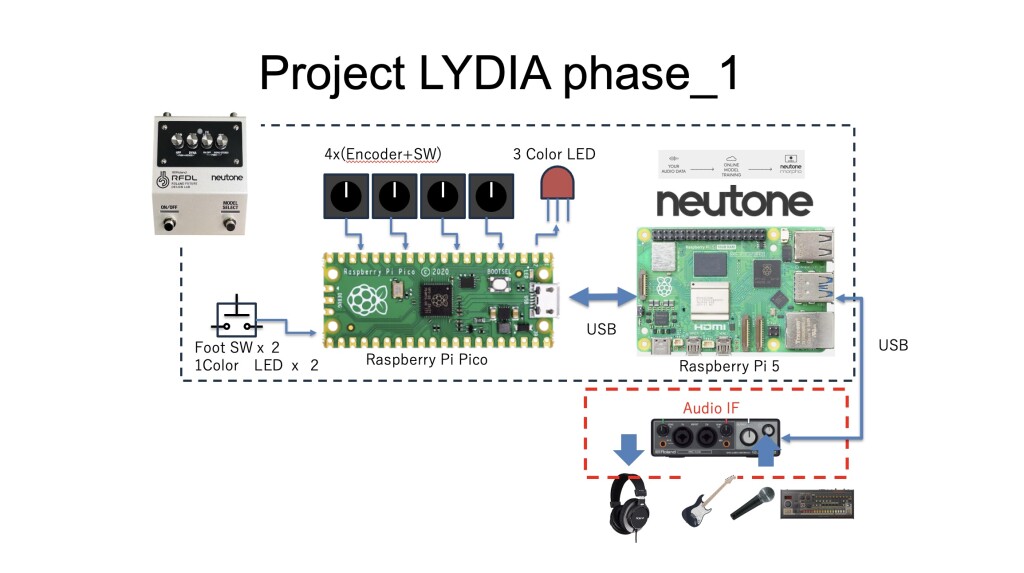

80s meets 2020s here, because inside the Project LYDIA prototype is a Raspberry Pi 5 board. (Audio is currently outboard via USB, but they plan to add some hardware to make those jacks work so it’s self-contained.)

Doing this kind of anything-goes style transfer with sound is way more interesting than the usual demo. A lot of early machine learning demos touted the use of AI to model vintage analog circuits. The thing is, we already have a zillion hardware and software tools that do that, and humans are pretty darned good at sitting next to a board and tweaking code with nuance.

But this is something your average pedal won’t do. It’s clearest in their demo video:

And this seems a way better vision of what this stuff can be about. Instead of still more screen time writing prompts like we’re trying to argue with an automated airline support bot, we go out and do the stuff we like to do with sound: listen, record, play. So of course it’s great that Roland put this in a classic metal box.

This doesn’t replace what humans can do, because, like granular synthesis or convolution, it’s a digital process that opens up sounds you really can’t make any other way. (Well, I say that, but humans being humans, we do find ways to mimic things once we hear them, so never say never! It’s certainly boldly going where no BOSS pedal has gone before.)

The enabling tech here comes from Neutone Morpho, and you don’t have to use this pedal concept to get at it; their plug-in is available and rather appealing. But in this video, too, I think you’ll agree that it’s at its best when it’s doing the things that are the least conventional:

It’s also worth saying that you can do this sort of training with very small data sets and work locally, without the cloud. It’s a little like the difference between a leaded gas-guzzling V12 car and a bicycle; they’re both transportation, but one can do more harm than the other.

Here’s a closer look at the state of the hardware prototype, which apparently was already a big hit at the Audio Developer Conference (ADC) in Bristol, where Project LYDIA was unveiled yesterday:

Because of the nature of the data science, I really do hope there are some experimental, open approaches to this. AI in its current state, including the commercial applications, exists because of those experiments. So if this has you itching to just try to do something like this yourself, go for it. I mean, that was really the spirit that brought guitar pedals into being in the first place, including the healthy level of competition.

But I’m sure we’ll have more to say on this topic, especially as I’ve been talking to Paul McCabe, who leads the Roland Future Design Lab.

PS, enjoy some AMDEK pedals here. (I mean, this pedal feels like something I would come up with. Hell, yes. I think I want this more than I want a TR-1000.)

And as for building the idea of intelligent listening into your product, well, it worked for Sega! I mean, it totally didn’t work for them, but I have a Dreamcast and a copy of Seaman and I find it amusing to look back on this ad:

More:

Introducing Project LYDIA [Roland]