First, there was DSP (digital signal processing). Now, there’s AI. But what does that mean? Let’s find out from the people developing it.

We spoke to Accusonus, the developers of loop unmixer/remixer Regroover, to try to better understand what artificial intelligence will do for music making – beyond just the buzzwords. It’s a topic they presented recently at the Audio Engineering Society conference, alongside some other developers exploring machine learning.

At a time when a lot of music software retreads existing ground, machine learning is a relatively fresh frontier. One important distinction to make: machine learning involves training the software in advance, then applying those algorithms on your computer. But that already opens up some new sound capabilities, as I wrote about in our preview of Regroover, and can change how you work as a producer.

And the timing is great, too, as we take on the topic of AI and art with CTM Festival and the 2018 edition of our MusicMakers Hacklab. (That call is still open!)

CDM spoke with Accusonus’ co-founders, Alex Tsilfidis (CEO) and Elias Kokkinis (CTO). Elias explains the story from a behind-the-scenes perspective – but in a way that I think remains accessible to us non-mathematicians!

How do you wind up getting into machine learning in the first place? What led this team to that place; what research background do they have?

Elias: Alex and I started out our academic work with audio enhancement, combining DSP with the study of human hearing. Toward the end of our studies, we realized that the convergence of machine learning and signal processing was the way to actually solve problems in real life. After the release of drumatom, the team started growing, and we brought people on board who had diverse backgrounds, from audio effect design to image processing. For me, audio is hard because it’s one of the most interdisciplinary fields out there, and we believe a successful team must reflect that.

It seems like there’s been movement in audio software from what had been pure electrical engineering or signal processing to, additionally, understanding how machines learn. Has that shifted somehow?

I think of this more as a convergence than a “shift.” Electrical engineering (EE) and signal processing (SP) are always at the heart of what we do, but when combined with machine learning (ML), they can lead to powerful solutions. We are far from understanding how machines learn. What we can actually do today is “teach” machines to perform specific tasks with very good accuracy and performance. In the case of audio, these tasks are always related to some underlying electrical engineering or signal processing concept. The convergence of these principles (EE, SP and ML) is what allows us to develop products that help people make music in new or better ways.

What does it mean when you can approach software with that background in machine learning? Does it change how you solve problems?

Machine learning is just another tool in our toolbox. It’s easy to get carried away, especially with all the hype surrounding it now, and use ML to solve any kind of problem, but sometimes it’s like using a bazooka to kill a mosquito. We approach our software products from various perspectives and use the best tools for the job.

What do we mean when we talk about machine learning? What is it, for someone who isn’t a researcher/developer?

The term “machine learning” describes a set of methods and principles engineers and scientists use to teach a computer to perform a specific task. An example would be the identification of the music genre of a given song. Let’s say we’d like to know if a song we’re currently listening to is an EDM song or not. The “traditional” approach would be to create a set of rules that say EDM songs are in this BPM range and have that tonal balance, etc. Then we’d have to implement specific algorithms that detect a song’s BPM value, a song’s tonal balance, etc. Then we’d have to analyze the results according to the rules we specified and decide if the song is EDM or not. You can see how this gets time-consuming and complicated, even for relatively simple tasks. The machine learning approach is to show the computer thousands of EDM songs and thousands of songs from other genres and train the computer to distinguish between EDM and other genres.
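To make the contrast concrete, here is a minimal sketch in Python. It is not anything Accusonus describes using: the two features (tempo and a "bass ratio"), the thresholds, the six labeled songs, and the nearest-centroid classifier are all assumptions chosen to keep the example tiny. The first function hard-codes rules by hand; the other two derive the decision from labeled examples instead.

```python
# Toy illustration only: the features, thresholds, and data are made up.

def is_edm_by_rules(bpm, bass_ratio):
    """'Traditional' approach: hand-written rules with hand-picked thresholds."""
    return 120 <= bpm <= 150 and bass_ratio >= 0.5


def train_centroids(examples):
    """'Machine learning' approach: average the feature vectors per label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Pick the label whose centroid is closest to the given features."""
    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, features))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


# Hypothetical labeled songs: (bpm / 200, bass_ratio) -> genre
training = [
    ((0.64, 0.70), "edm"),
    ((0.70, 0.65), "edm"),
    ((0.62, 0.75), "edm"),
    ((0.40, 0.30), "other"),
    ((0.45, 0.25), "other"),
    ((0.50, 0.35), "other"),
]

centroids = train_centroids(training)
new_song = (0.66, 0.68)  # roughly 132 BPM with a bass-heavy mix
print(predict(centroids, new_song))
print(is_edm_by_rules(132, 0.68))
```

With only six examples, this is far from the "thousands of songs" the interview mentions, and real systems use much richer features and models. The point is only that nobody writes the decision boundary by hand in the second approach; it comes from the data.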

Computers can get very good at this sort of very specific task. But they don’t learn like humans do. Humans also learn by example, but don’t need thousands of examples. Sometimes a few or just one example can be enough. This is because humans can truly learn, reason and abstract information and create knowledge that helps them perform the same task in the future and also get better. If a computer could do this, it would be truly intelligent, and it would make sense to talk about Artificial Intelligence (A.I.), but we’re still far away from that. Ed.: lest the use of that term seem disingenuous, machine learning is still seen as a subset of AI. -PK

If a reader would like to read more on the subject, a great blog post by NVIDIA and a slightly more technical blog post by F. Chollet will shed more light on what machine learning actually is.

We talked a little bit on background about the math behind this. But in terms of what the effect of doing that number crunching is, how would you describe how the machine hears? What is it actually analyzing, in terms of rhythm, timbre?

I don’t think machines “hear,” at least not now, and not as we might think. I understand the need we all have to explain what’s going on and find some reference that makes sense, but what actually goes on behind the scenes is more mundane. For now, there’s no way for a machine to understand what it’s listening to, and hence start hearing in the sense a human does.

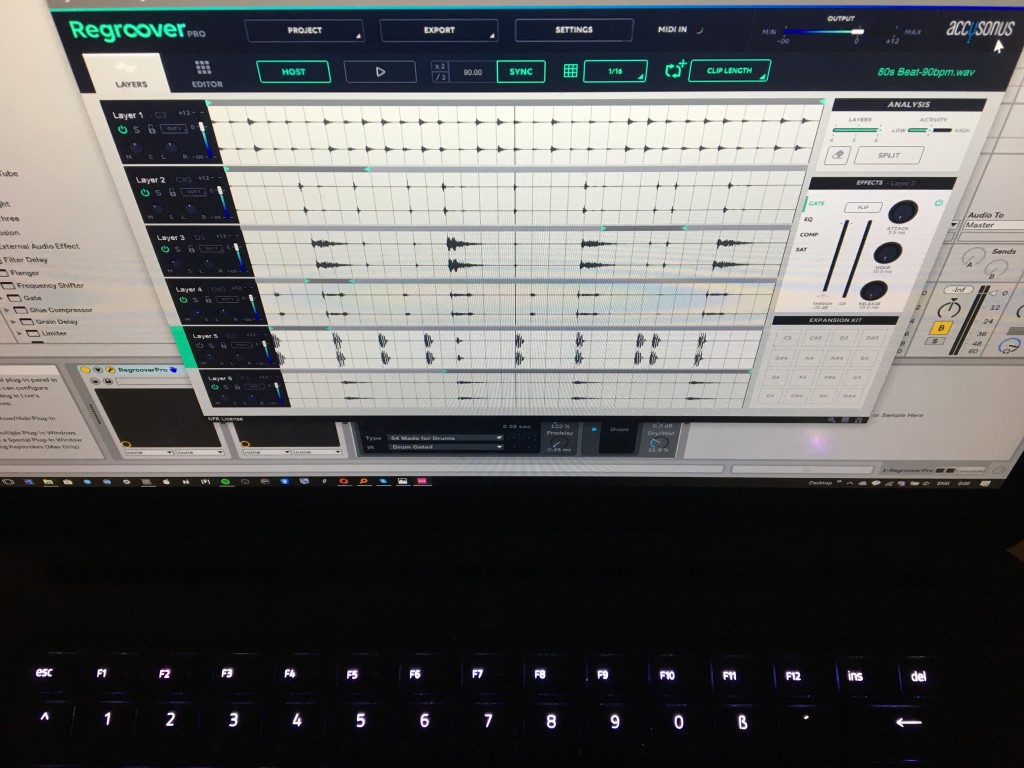

Inside Accusonus products, we have to choose what part of the audio file/data to “feed” the machine. We might send an audio track’s rhythm or pitch, along with instructions on what to look for in that data. The data we send are “representations” and are limited by our understanding of, for instance, rhythm or pitch. For example, Regroover analyses the energy of the audio loop across time and frequency. It then tries to identify patterns that are musically meaningful and extract them as individual layers.
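As a rough illustration of what "energy across time and frequency" can look like as data, the sketch below computes a small energy spectrogram in plain Python. This is not Regroover's algorithm, which is proprietary; the function name `energy_spectrogram`, the frame size, and the synthetic test signal are all assumptions, and the layer-extraction step is not shown at all.

```python
import cmath
import math


def energy_spectrogram(samples, frame_size, hop):
    """Return a list of frames, each a list of energies per frequency bin."""
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        energies = []
        for k in range(frame_size // 2 + 1):  # non-negative frequencies only
            # Naive discrete Fourier transform of this frame at bin k.
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n, x in enumerate(frame)
            )
            energies.append(abs(coeff) ** 2)
        frames.append(energies)
    return frames


# Hypothetical test signal: a 50 Hz tone followed by a 200 Hz tone.
sample_rate = 800
low = [math.sin(2 * math.pi * 50 * n / sample_rate) for n in range(256)]
high = [math.sin(2 * math.pi * 200 * n / sample_rate) for n in range(256)]
signal = low + high

spec = energy_spectrogram(signal, frame_size=64, hop=64)

# For each time frame, find the frequency bin holding the most energy.
dominant_bins = [max(range(len(f)), key=f.__getitem__) for f in spec]
print(dominant_bins)
```

Each row of `spec` is one slice of time and each column one band of frequency, so the dominant bin moves from a low index to a higher one when the tone changes. A grid like this is the kind of "representation" a pattern-finding method could then be asked to split into layers.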

Is all that analysis done in advance, or does it also learn as I use it?

Most of the time, the analysis is done in advance, or just when the audio files are loaded. But it is possible to have products that get better with time – i.e., “learn” as you use them. There are several technical challenges for our products to learn through use, including significant processing load and having to run inside old-school DAW and plug-in platforms that were primarily developed for more “traditional” applications. As plug-in creators, we are forced to constantly fight our way around obstacles, and this comes at a cost for the user.

What’s different about this versus another approach – what does this let me do that maybe I wasn’t able to do before?

Sampled loops and beats have been around for many years and people have many ways to edit, slice and repurpose them. Before Regroover, everything happened in one dimension, time. Now people can edit and reshape loops and beats in both time and frequency. They can also go beyond the traditional multi-band approach by using our tech to extract musical layers and original sounds. The possibilities for unique beat production and sound design are practically endless. A simple loop can be a starting point for many musical ideas.

How would you compare this to other tools on the market – those performing these kinds of analyses or solving these problems? (How particular is what you’re doing?)

The most important thing to keep in mind when developing products that rely on advanced technologies and machine learning is what the user wants to achieve. We try to “hide” as much of the complexity as possible from the user and provide a familiar and intuitive user interface that allows them to focus on the music and not the science. Our single-knob noise and reverb removal plug-ins are very good examples of this. The number of parameters and options in the algorithms would be too confusing to expose to the end user, so we created a simple UI to deliver a quick result.

If you take something as simple as being able to re-pitch samples, each time there’s some new audio process, various uses and abuses follow. Is there a chance to make new kinds of sounds here? Do you expect people to also abuse this to come up with creative uses? (Or has that happened already?)

Users are always the best “hackers” of our products. They come up with really interesting applications that push the boundaries of what we originally had in mind. And that’s the beauty of developing products that expand the sound processing horizons for music. Regroover is the best example of this. Stavros Gasparatos has used Regroover in an installation where he split industrial recordings, routing the layers to six speakers inside a big venue. He tried to push the algorithm to create all kinds of crazy splits and extract inspiring layers. The effect was that in the middle of the room you could hear the whole sound, and when you approached one of the speakers, crazy things happened. We even had some users who extracted inspiring layers from washing machine recordings! I’m sure the CDM audience can think of even more uses and abuses!

Regroover gets used in Gasparatos’ expanded piano project.

Looking at the larger scene, do you think machine learning techniques and other analyses will expand what digital software can do in music? Does it mean we get away from just modeling analog components and things like that?

I believe machine learning can be the driving force for a much-needed paradigm shift in our industry. The computational resources available today, not only on our desktop computers but also in the cloud, are tremendous, and machine learning is a great way to utilize them to expand what software can do in music and audio. Essentially, the only limit is our imagination. And if we keep being haunted by the analog sounds of the past, we can never imagine the sound of the future. We hope Accusonus can play its part and change this.

Where do you fit into that larger scene? Obviously, your particular work here is proprietary – but then, what’s shared? Is there larger AI and machine learning knowledge (inside or outside music) that’s advancing? Do you see other music developers going this direction? (Well, starting with those you shared an AES panel with?)

I think we fit among the forward-thinking companies that try to bring about this paradigm shift by actually solving problems and providing new ways of processing audio and creating music. Think of iZotope with their newest Neutron release, Adobe Audition’s Sound Remover, and Apple Logic’s Drummer. What we need to share between us (and we already do with some of those companies) is the vision of moving things forward, beyond the analog world, and our experience in designing great products using machine learning (here’s our CEO’s keynote in a recent workshop for this).

Can you talk a little bit about your respective backgrounds in music – not just in software, but your experiences as a musician?

Elias: I started out as a drummer in my teens. I played with several bands during high school and as a student at university. At the same time, I started getting into sound engineering, where my studies really helped. I ended up working a lot of gigs, from small venues to stadiums, doing everything from cabling and PA setup to mixing the show and monitors. During this time I got interested in signal processing and acoustics, and I focused my studies on these fields. Towards the end of university I spent a couple of years in a small recording studio, where I did some acoustic design for the control room and recorded and mixed local bands. After graduating I started working on my PhD thesis on microphone bleed reduction and general audio enhancement. Funnily enough, Alex was the one who built the first version of the studio. He was the supervisor of my undergraduate thesis, and we spent most of our PhDs working together in the same research group. It was almost meant to be that we would start Accusonus together!

Alex: I studied classical piano and music composition as a kid, and turned to synthesizers and electronic music later. As many students do, I formed a band with some friends, and that band happened to be one of the few abstract electronic/trip hop bands in Greece. We started making music around an old Atari computer and an early MIDI-only version of Cubase that triggered some cheap synthesizers, and recorded our first demo on a crappy 4-channel tape recorder in a friend’s bedroom. Fun days!

We then bought a PC and fancier equipment and started making our living from writing soundtracks for theater and dance shows. During that period I practically lived as a professional musician/producer and had quit my studies. But after a couple of years, I realized that I was more and more fascinated by the technology aspect of music, so I returned to the university and focused on audio signal processing. After graduating from the Electrical and Computer Engineering Department, I studied Acoustics in France and then started my PhD in de-reverberation and room acoustics at the same lab as Elias. We became friends, worked together as researchers for many years, and realized that we share the same vision of how we want to create innovative products to help everyone make great music! That’s why we founded Accusonus!

So much of software development is just modeling what analog circuits or acoustic instruments do. Is there a chance for software based on machine learning to sound different, to go in different directions?

Yes, I think machine learning can help us create new inspiring sounds and lead us in different directions. Google Magenta’s NSynth is a great example of this, I think. While still mostly a research prototype, it shows the new directions that can be opened by these new techniques.

Can you recommend some resources showing the larger picture with machine learning? Where might people find more on this larger topic?

Siraj Raval’s YouTube channel:

Google Magenta’s blog for audio/music applications https://magenta.tensorflow.org/blog/

Machine learning for artists https://ml4a.github.io/

Thanks, Accusonus! Readers, if you have more questions for the developers – or about the machine learning field in general, in music industry developments and in art – do sound off. For more:

Regroover is the AI-powered loop unmixer, now with drag-and-drop clips