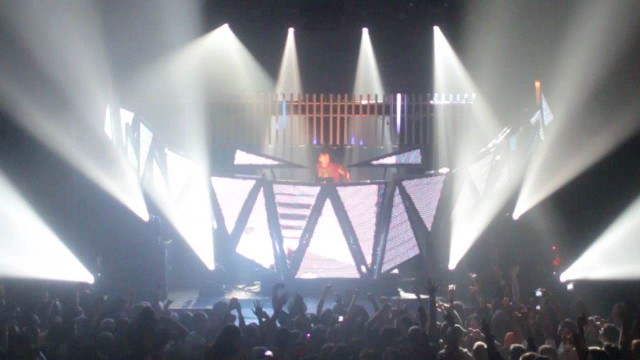

Live does lighting. Mattijs and Michel working on lighting compositions in Ableton Live. All photos courtesy Mattijs Kneppers.

Spectacular spaceship showmanship, or actually synchronizing live electronic dance music performances? For Feed Me, aka Englishman Jon Gooch, the show had to be both. Software developer Mattijs Kneppers harnessed Ableton Live, Max/MSP, and Max for Live to make it all work. You may have seen the video; Mattijs gives CDM a unique look even further into how this is working, sharing a gallery of the stage rig and some of the technical details.

CDM: So, of course, this was the summer that brought “press-play” performances into the public eye – and I think we’re actually indebted to deadmau5 for blowing open the discussion and talking about what’s really happening. One concern that came up was that you can’t stage this sort of big show, with lots of visuals, and still keep everything running smoothly. deadmau5 specifically mentioned SMPTE [the clock signal or timecode standard often used with visual elements]. How did things work here?

Mattijs: “We all hit play”; this is exactly the problem that we wanted to solve. Jon builds his performances live, with separate audio tracks and segments, live effects, free improvisation, etc., so every gig is different. For example, he is completely free to change the tempo and the show tempo will change with him, which is pretty much impossible with SMPTE or any other fixed timecode.
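To make the contrast with timecode concrete, here is a minimal sketch of a tempo-following clock, assuming the stage computer periodically reports its beat position and tempo. The class and method names are hypothetical, not taken from Mattijs's software. Between reports, the receiver extrapolates the current beat from the last known tempo, so when the performer changes tempo, every cue placed on a beat moves with him; a cue placed at a fixed timecode does not.

```python
from dataclasses import dataclass


@dataclass
class BeatClock:
    """Hypothetical receiver-side clock that follows the performer's tempo.

    It stores the last reported (beat, tempo) pair and the local time at
    which that report arrived, instead of a fixed timecode position.
    """
    beat: float = 0.0         # beat position at the last report
    bpm: float = 120.0        # tempo at the last report
    received_at: float = 0.0  # local time (seconds) of the last report

    def update(self, beat: float, bpm: float, now: float) -> None:
        # Called whenever the stage computer reports its position and tempo.
        self.beat, self.bpm, self.received_at = beat, bpm, now

    def beat_at(self, now: float) -> float:
        # Extrapolate between reports: beats elapsed = seconds * bpm / 60.
        return self.beat + (now - self.received_at) * self.bpm / 60.0

    def time_of_beat(self, target: float) -> float:
        # Predict the local time at which a beat-based cue should fire.
        return self.received_at + (target - self.beat) * 60.0 / self.bpm


clock = BeatClock()
clock.update(beat=0.0, bpm=128.0, now=0.0)
print(clock.beat_at(30.0))        # 64.0 beats after 30 s at 128 BPM
clock.update(beat=64.0, bpm=120.0, now=30.0)  # performer drops the tempo
print(clock.time_of_beat(72.0))   # 34.0: beat 72 now lands 4 s later
print(72 * 60 / 128)              # 33.75: where a 128 BPM timecode cue would fire
```

A real system would also have to smooth network jitter in the reports; this sketch ignores that.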

In case you’re interested, I found a (grimy) recording of Feed Me changing the tempo live:

This didn’t come easy, of course. Both technically and conceptually, it required some serious software development. The lighting controller and the video server were both developed from scratch with continuous real-time synchronization in mind. There is no lighting desk involved: the system runs on MacBook Pros, connected via Ethernet, that drive the video, LED, and lighting fixtures, with breakout boxes for DMX. [DMX512 is a lighting protocol somewhat similar to MIDI.]
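For readers who haven't worked with DMX512 from software: a universe is up to 512 channels ("slots") of 8-bit levels, sent after a start code (0x00 for ordinary level data). The sketch below shows the data a laptop would hand to a DMX breakout box. The class name and the RGB fixture patch are hypothetical, and the interface-specific framing that a particular USB or Ethernet box expects is deliberately left out; the break and mark-after-break that precede each packet on the wire are typically generated by the interface itself.

```python
DMX_SLOTS = 512          # a DMX512 universe carries up to 512 8-bit slots
NULL_START_CODE = 0x00   # start code for ordinary dimmer/level data


class Universe:
    """Hypothetical in-memory model of one DMX512 universe."""

    def __init__(self) -> None:
        self.levels = bytearray(DMX_SLOTS)  # every channel starts at 0

    def set(self, address: int, *values: int) -> None:
        """Write consecutive channels starting at a 1-based DMX address."""
        if not 1 <= address <= DMX_SLOTS - len(values) + 1:
            raise ValueError("address out of range for this universe")
        for offset, value in enumerate(values):
            if not 0 <= value <= 255:
                raise ValueError("DMX levels are 8-bit (0-255)")
            self.levels[address - 1 + offset] = value

    def packet(self) -> bytes:
        # Start code followed by the slot data.
        return bytes([NULL_START_CODE]) + bytes(self.levels)


u = Universe()
u.set(1, 255, 0, 128)   # hypothetical RGB fixture patched at address 1
frame = u.packet()
print(len(frame), list(frame[:4]))  # 513 [0, 255, 0, 128]
```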

The concept of mixing layers of lighting composition in real time was especially new to us. Video layers blend easily, but for lighting we spent some time finding an approach that lets layers mix in a meaningful way.
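The interview doesn't say which approach Mattijs and Michel settled on. As background only, here is a sketch of two familiar ways to combine lighting layers, showing why "blending" means different things for different channel types. Highest-takes-precedence (HTP) is a standard lighting-console merge rule for intensity; the linear crossfade is the video-style blend, which makes more sense for attributes like color or position, where "brightest wins" is meaningless. The function names and the (levels, opacity) layer format are hypothetical.

```python
from typing import Sequence, Tuple

# A layer is (channel levels 0-255, opacity 0.0-1.0); hypothetical format.
Layer = Tuple[Sequence[int], float]


def mix_htp(layers: Sequence[Layer]) -> list:
    """Highest-takes-precedence: each channel takes the brightest
    opacity-scaled value from any layer (suits intensity channels)."""
    width = len(layers[0][0])
    out = [0] * width
    for levels, opacity in layers:
        for ch in range(width):
            out[ch] = max(out[ch], round(levels[ch] * opacity))
    return out


def mix_crossfade(base: Sequence[int], top: Sequence[int], opacity: float) -> list:
    """Video-style crossfade: linear blend of the top layer over the base
    (suits color or position channels)."""
    return [round(b + (t - b) * opacity) for b, t in zip(base, top)]


base = [254, 0, 100]
top = [0, 200, 250]
print(mix_htp([(base, 1.0), (top, 0.5)]))  # [254, 100, 125]
print(mix_crossfade(base, top, 0.5))       # [127, 100, 175]
```

Note how the first channel differs: HTP keeps the base at full, while the crossfade halves it. A real layer mixer has to pick the right rule per fixture attribute.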

The system is built around Max for Live and OSC [OpenSoundControl]; we didn’t want to juggle MIDI data and equipment, and an audio performer shouldn’t have to worry about triggering lighting or video patterns alongside his audio clips. Basically, a few devices in his Live Set send everything he does on stage to the computers at front of house, and the sync software then matches video and lighting to whatever he is playing at any given moment. The MFL devices are a bit like LiveGrabber (livegrabber.sourceforge.net), only with extra tricks for low-latency synchronisation rather than direct event-based control.

To be honest, we did need some creative hacks to overcome limitations in these early versions of Max for Live, but luckily, we had great support from the folks at Cycling ’74.

So yeah, people in the show industry tend to believe that live performance and synchronized shows don’t go well together, but I believe that’s because so far we haven’t had the right tools. Build new tools, and the game changes. Obviously, I’d love to exchange thoughts about this with deadmau5; I have the impression that he has a great sense of the evolving emotions in a crowd, and it must be frustrating not to be able to adjust his set when he feels they need it. I think performances like these, where music is only one aspect of the experience, can be much more intense if they are synced in real time rather than pre-composed. So in the future I hope we can change that 🙂

Thanks, Mattijs. We’re definitely lucky to get to learn from this stuff as a community of artists, and I remain hopeful that the quality and expressiveness of our work continues to improve. Congrats on this show; hope we get to see it soon! -PK

More details on the video from Future Music Magazine:

Feed Me recently cooperated with Sober Industries and Studio Rewind to produce a remarkable Live show, displaying innovative lighting and video concepts and most notably a giant set of synchronized Teeth.

Learn about Feed Me as a character, and get a peek into the artistic and technological challenges solved in the process of developing this show from a sketch to a fully realized concept. This includes designing the giant custom LED teeth, creating the beat-accurate video and lighting content and building software to synchronize all show systems to Feed Me’s Live stage performance.

Concept:

Feed Me

Creative Direction:

Jon Gooch

Auke Kruithof

Tim Boin

Basto Elbers

Production:

Studio Rewind – www.studiorewind.tv

Sober Industries – www.sober-industries.com

Software Development:

Mattijs Kneppers – www.arttech.nl

Animation:

Auke Kruithof

Alain Maessen – www.brutesque.nl

Daniel Bruning – www.danielbruning.com

Casper Sormani – www.nosuchthing.nl

Lighting Design:

Michel Suk – www.michelsuk.nl

Camera:

Daniel Bruning

Montage:

Thijs Albers – www.marathonworks.com

Special thanks to:

Three Six Zero management

Ampco/Flashlight

Animator Auke Kruithof works with Live and ESP Vision. (ESP Vision, previously Windows-only, is now available for the Mac; it’s lighting pre-visualization software.)