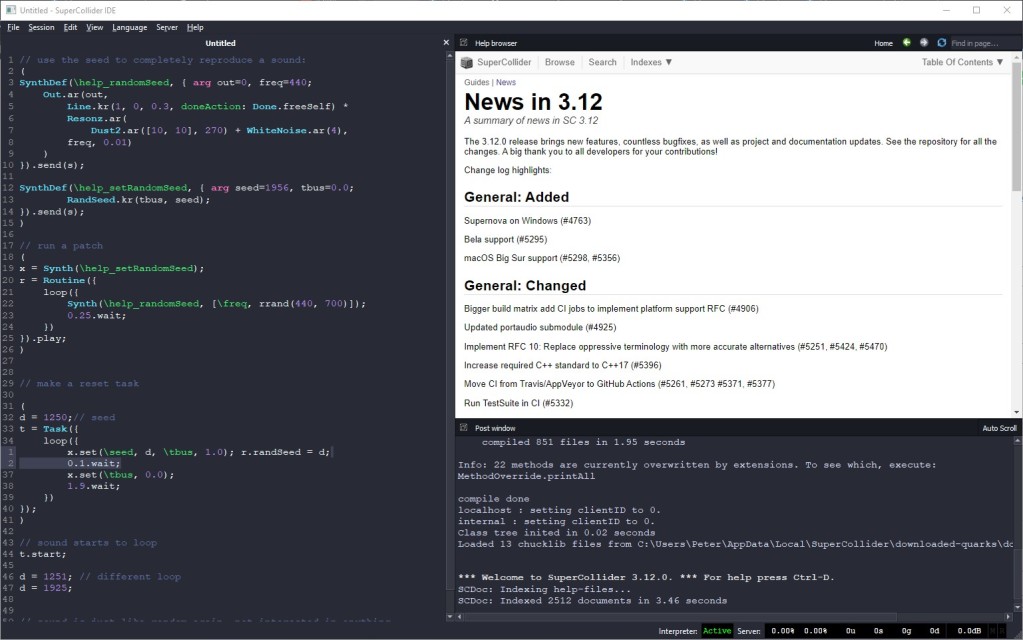

SuperCollider is the basis of a lot of the live coding scene, and an unmatched powerhouse, free and open source. And it gets a big update this week.

The main features here are expanded platform support, including Bela and macOS Big Sur, plus “countless bugfixes.” So it’s definitely a download you want to grab.

In detail, what’s new:

- Supernova support on Windows – this finally gives Windows users some parity with Mac and Linux, without having to build the thing themselves. Supernova is a drop-in replacement for the default scsynth, with expanded multiprocessor support and parallelization for better performance.

- Bela support – full support for the awesome embedded platform, which means you can run your favorite SC code on high-performance mobile hardware of your own creation and even ditch the computer live if you want.

- macOS Big Sur support – yep, keeping up with the Apples

- Enhanced/updated Continuous Integration (CI) support on GitHub and GitHub Actions should make cross-platform support easier on the build side

- Fuzzy class for fuzzy array comparisons (ooh, going to play with that one)

- Updated terminology reflects an industry move to clearer, more inclusive, more accessible language – so documentation for features like MIDI sync says what sync actually does (like receiving machine and clock source) rather than referencing the oppression of humans by other humans

And there are a lot of fixes around those features and different platforms.

Even if you don’t use SuperCollider directly, this also has benefits for users of live coding environments like Tidal and Sonic Pi. Pictured at top, Sonic Pi.

Check out the release on Mac, Windows, and Linux:

https://supercollider.github.io/

https://github.com/supercollider/supercollider/releases/tag/Version-3.12.0

Image at top was in support of liveinterfaces Brighton, via our friends of the Algorave. Thanks to this story, we actually get to learn the full provenance of that photo in Glasgow, via its photographer Claire Quigley:

PS – on the Sonic Pi <> SuperCollider front, Sam clues us in:

But for still more of what you can do with this family of tools, let’s check out some musical context from (Algo|Afro) Futures, streamed live in July from Vivid Projects, Birmingham UK:

Details:

3:23 Introduction (by Antonio Roberts)

10:13 Jae Tallawah

20:39 Rosa Francesca

49:58 Emily Mulenga

1:15:26 Samiir Saunders

The showcase event for (Algo|Afro) Futures, celebrating the work of four early-career Black artists who, since April 2021, have been exploring the creative potential of live coding.

The event features pre-recorded and live performances and artworks from the above artists, and was streamed live from Vivid Projects in Birmingham, in front of a local (socially distanced) audience.

(Algo|Afro) Futures is a mentoring programme for early career Black artists who want to explore the creative potential of live coding.

Live coding is a performative practice where artists and musicians use code to create live music and live visuals. This is often done at electronic dance music events called Algoraves, but live coding is a technique rather than a genre, and has also been applied to noise music, choreography, live cinema, and many other time-based artforms.

(Algo|Afro) Futures is organised by Antonio Roberts and Alex McLean with Then Try This and Vivid Projects, in collaboration with and funded by the UKRI research project “Music and the Internet: Towards a Digital Sociology of Music.”