TouchDesigner 2023 Official has landed, and it’s a massive upgrade for the essential media production tool and visual development environment. 2023 brings major new device and sensor support, timecode and other features that help it fit into broadcast and production environments, and lots of new operators and integrations.

Image at top: FLARE Festival 2023 – Hill Jiang (TEA Community) live performance.

Here’s a quick overview of some highlights.

New operators, of course, are where a lot of the most creative possibilities lie. There’s a Bloom TOP for bloom effects, a GLSL COMP, an NVIDIA Upscaler TOP for integrated upscaling (whoa), and an overhauled Engine COMP.

And then a ton of integrations and production capabilities:

- Expanded OAK-D camera support (for stereoscopic images, depth sensing, IR night vision, etc.). That integrates well with machine learning-powered tracking models. (More on those below – you can also use, like, a webcam if you don’t have one of these fancy cameras!)

- Machine learning tracking capabilities not only with Kinect Azure but also Orbbec Femto Mega and Femto Bolt – basically, as Microsoft abandons this approach, other vendors are taking it up with more or less drop-in support for the same models. Plus there’s the Body Track CHOP for NVIDIA RTX GPUs.

- Integration for Mo-Sys StarTracker and Laser devices.

- Timecode, timecode, timecode! Integrated throughout – a CHOP, even a Python class. Go!

- Certified Apple ProRes codec support with Apple Silicon acceleration on macOS. (Remember when the Mac was a second-class citizen in TouchDesigner land? No more.)

- Audio and Video Devices DATs so you can … see which hardware is connected. Essential.

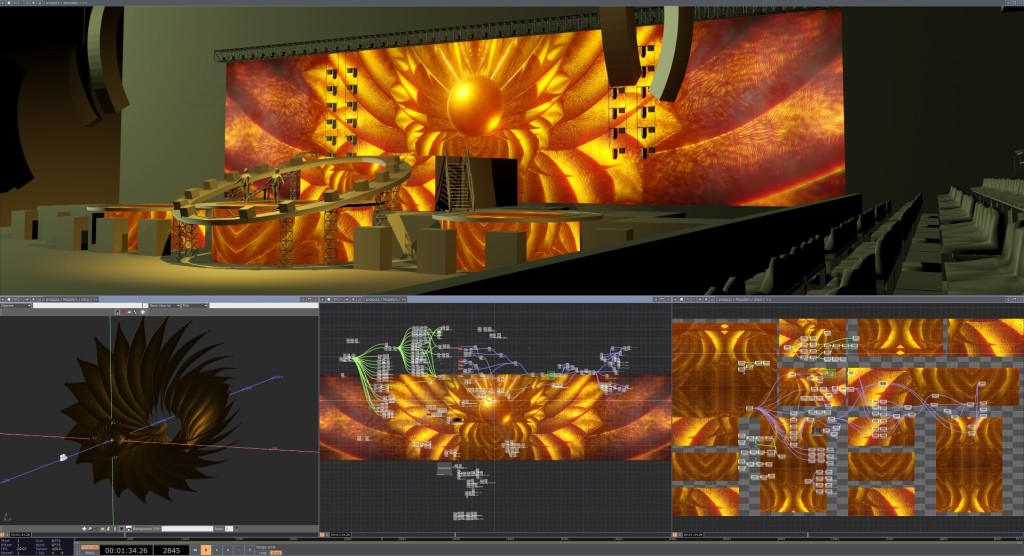

Love this image: Interactive live visual system with venue simulator and chart output for BABYMETAL “METALIZM” – Kezzardrix.

Here’s a side note for anyone investigating machine learning for motion tracking and computer vision. I was just playing with MediaPipe, the free Google-developed library that uses pre-trained models for various tasks (face, hand, skeleton tracking), in JavaScript with p5.js. There’s excellent support in TouchDesigner, too, which I’ve only just started playing with, and it nicely complements the options above – plus you can use it with cheap webcams, like the one built into your laptop.

Derivative has posted various tutorials on the topic: https://derivative.ca/tags/mediapipe

And there’s this very nice library: https://github.com/torinmb/mediapipe-touchdesigner

As I’ve written about before, there’s also new integration with Bitwig Studio – see the TDBitwig release blog and review the TDBitwig User Guide.

And there’s growing integration for Unreal Engine, too.

Python support is upgraded to 3.11, which delivers major performance boosts. They’ve also expanded parameter access for Python.

You’ll find lots of other updates in the excellent release guide, with links to everything you’ll need to read up on before upgrading.

And remember, while some of these features do require a paid license, a whole lot of the power is accessible even with a free non-commercial license. There’s really nothing stopping you from beginning to learn and play with the environment.

Previously: