Apple’s WWDC keynote kicked off yesterday with an event mostly focused on consumer features for watchOS, iOS, iPadOS, macOS, and home. That means you might have missed a lot of the advanced creative features, graphics, and developer tools buried in the fine print.

Long-time Apple watchers know this pattern, too. Yeah, a lot of the Mac and tech press, informed by some rumors, hyped up the possibility of a new MacBook Pro or new Apple Silicon chip. Look, the issue is – that data is often unreliable.

Unpopular opinion: new Mac hardware is important to some, but it’s not the make-or-break story this month that a lot of folks are making it out to be.

I’ll say this – the Apple Silicon available now is blindingly fast. It’s fast and reliable enough that I often don’t notice when loading plug-ins (or even all of Ableton Live) without native updates, via Rosetta 2. I understand some users may be waiting on greater memory capacity or expanded graphics capabilities, but most musicians would benefit from the current Mac lineup right away. It’s more likely that you’re waiting on Big Sur updates from your favorite software or hardware drivers than it is that you really need to wait on new Mac hardware. More on that story soon, though, as now we do have some M1-native stuff to test by comparison.

Anyway, let’s get into what did get announced. Apple is now a sprawling mega-company supporting a lot of tech across different OSes and services. I’m biased; I think the most interesting stuff with computers always happens with developers and advanced artists and people pushing the gear to its frontier. So let’s talk about what’s new for us.

And the main thing here is, just as you’d expect at WWDC, Apple’s main pitch is what they can do with their custom software for their custom silicon. That boils down to new features for device integration, graphics and audio APIs, and a whole lot more machine learning, Augmented Reality, and spatial audio.

Takeaways:

3D sight and sound

Spatial Audio is coming everywhere.

So, this stuff gets confusing as it involves a lot of different technologies, plus some proprietary formats like Dolby Atmos, which then for even more confusion become brand names applied to very different experiences depending on hardware. Phew.

But – while the teaser announcements about Atmos and Spatial Audio with Apple Music were a bit vague, the biggest news by far for me from WWDC is that Apple is fully committing to spatial audio stuff everywhere. And that could solve spatial audio’s pipeline/demand problem, by turning Apple’s empire of platforms into a reasonable combination of apps, services, headphones, and speakers that can play real 3D sound.

Where we’re seeing that:

- Physical Audio Spatialization Engine (PHASE). This is the API for games, so it’s basically aimed at game devs focused on Apple platforms. (But see also stuff like Unreal and Unity that support those platforms seamlessly for you.) I’ll dig into this more when I’ve spent some time on it, but expect custom-developed 3D sound possibilities.

- Spatial Audio on the Mac. Ah, the walled garden – M1 chip, AirPods Pro and AirPods Max – but then you get pretty cool audio spatialization. Apple is baking this stuff into tools like FaceTime, but I think that may be a good demo for things other developers can do, too.

- Dolby Atmos and Spatial Audio stuff on Apple Music. It’s not showing up for me in Germany yet, but Apple yesterday unveiled some early Apple Music demos of Atmos-compatible stuff that works on their headphones. This seems to include existing stereo-mastered music positioned on a virtual soundstage, but also made-for-Atmos stuff that might be a bit bolder.

If you have a pair of AirPods Pro, you’ve probably already gotten the idea of how it works in this context – apps like Apple TV will position a stereo sound source where a device like the iPad is located. So if you turn your head with your AirPods Pro in your ears, you’ll get the uncanny feeling that the sound is coming from the screen. (I was afraid of waking neighbors a couple of times.) The gaming stuff, though, means that you’ll see this in more than just FaceTime or fixed stereo emitters – it opens up fascinating new hardware integration possibilities even for tools like audio plug-ins. Maybe I’m getting carried away, but I can imagine checking an Atmos-encoded or ambisonics mix on the go with Apple or Beats headphones, even. (The reason for the special hardware is, the headphones do head tracking.)
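To make the head-tracking idea a little more concrete, here’s a minimal sketch in Python of the geometry involved. This is not Apple’s implementation: real spatial audio relies on head-related filtering rather than simple panning, and the function names, the angle convention (positive degrees to the right), and the constant-power pan law are all my own assumptions for illustration. The point is only that once the headphones report which way your head is turned, the renderer can keep the source anchored to the screen by working with the angle relative to your nose instead of a fixed left/right position.

```python
import math
from typing import Tuple


def relative_azimuth(source_deg: float, head_yaw_deg: float) -> float:
    """Angle of the source relative to where the nose points, in (-180, 180].

    Hypothetical convention: 0 is straight ahead, positive is to the right.
    """
    rel = (source_deg - head_yaw_deg) % 360.0
    if rel > 180.0:
        rel -= 360.0
    return rel


def constant_power_gains(rel_deg: float) -> Tuple[float, float]:
    """Map a frontal angle to (left, right) gains with a constant-power pan.

    -90 is hard left, +90 is hard right; anything further back is clamped,
    which is a simplification (plain panning cannot express "behind you").
    """
    clamped = max(-90.0, min(90.0, rel_deg))
    theta = (clamped + 90.0) / 180.0 * (math.pi / 2.0)  # 0 .. pi/2
    return math.cos(theta), math.sin(theta)


def head_tracked_gains(source_deg: float, head_yaw_deg: float) -> Tuple[float, float]:
    """Gains that keep a source fixed in the room as the head turns."""
    return constant_power_gains(relative_azimuth(source_deg, head_yaw_deg))


if __name__ == "__main__":
    # The "screen" sits straight ahead at 0 degrees; sweep the head to the right.
    for yaw in (0.0, 30.0, 60.0, 90.0):
        left, right = head_tracked_gains(0.0, yaw)
        print(f"head yaw {yaw:5.1f} -> left {left:.3f}, right {right:.3f}")
```

With the source straight ahead and the head facing it, both gains come out equal; turn the head 30 degrees to the right and the left channel gets louder, which is the “sound stays on the screen” effect in its crudest form.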

Part of why I’m bullish about this is, I don’t doubt that Apple pushing the experience will encourage other vendors, too. Apple has a long history of having an impact on the industry at large, even for those who don’t own their products.

Spatialized audio has been hard for people to experience and therefore hard to describe. But think about it another way: it’s weird that on headphones, sound stays fixed to a left/right mix even as we move our heads and bodies around, and that we’re mostly still using a century-old approach to left/right panning.

It’s great to see this breaking through. And I remember 20 years ago having conversations where the consensus of audio researchers was that consumer devices were the missing link. I don’t think this is only about Apple and Dolby; I think the Apple and Dolby delivery solution is likely to invigorate toolchains across music software. Spatial audio is even more fun in physical venues so – keep those vaccines coming, basically, and we’ll keep tinkering around with the various software tools designed to help you make this stuff as a musician.

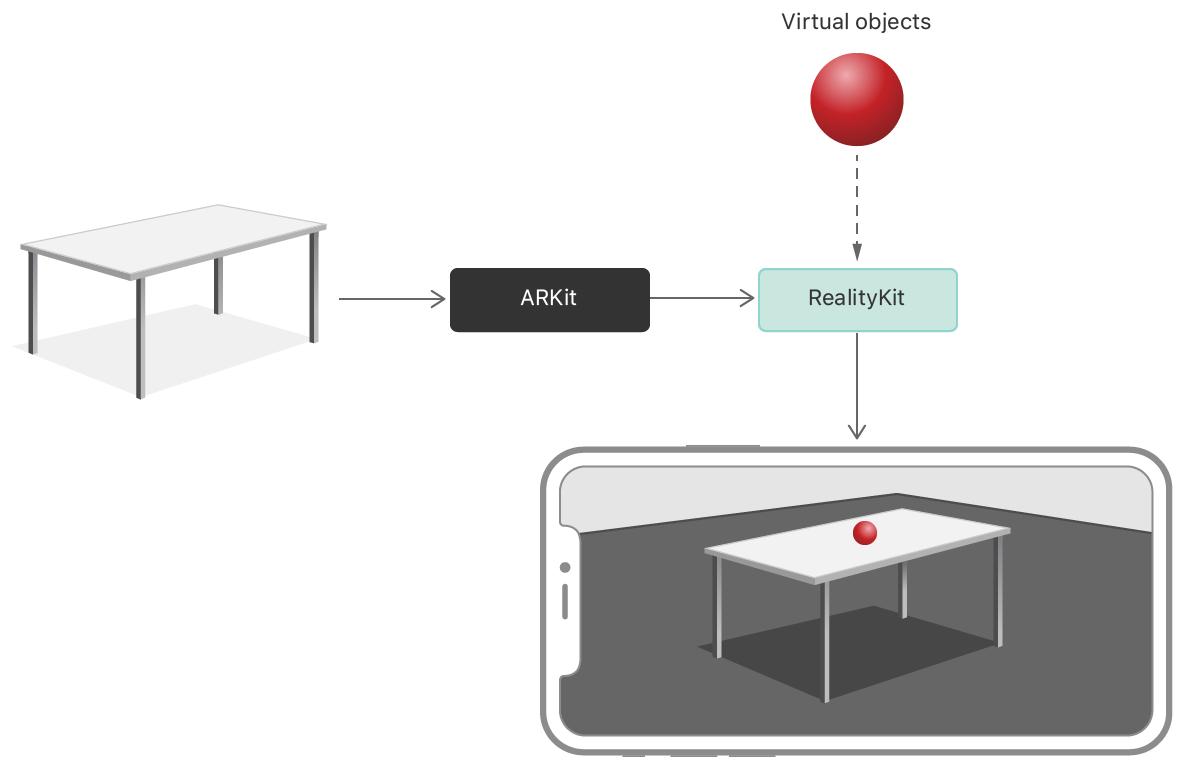

Apple’s Augmented Reality tech continues to mature – now on the Mac, too.

The latest iPhones have already become a kind of de facto punk 3D object scanner. Now Apple has RealityKit 2, which promises a new Mac API, Object Capture, for turning images from an iPhone, iPad, or even a DSLR into AR-optimized 3D models. Look for support in Xcode, Reality Composer (seen above), plus Cinema 4D and Unity MARS.

But yeah, for anyone expecting seamless iPhone/iPad – to – Mac capture workflows, those are clearly inbound later this year.

I do mean de facto capture device, because I expect that, while it’s unrelated to this announcement, we’ll also see more capture pipelines with software like Unreal using the iPhone as a capture device.

AR developers get a lot more goodies, too, including new controls across visual, audio, and animation APIs and enhanced rendering and dynamic shader support. For releasing AR apps, it’s just hard to beat iOS right now, and one of the advantages of the Mac is, some of those same features are propagating up to the desktop.

Check out RealityKit 2:

https://developer.apple.com/augmented-reality/realitykit/

Developer improvements and serious iPad stuff

Metal updates. This is a big graphics update, as Apple continues to focus on Metal. And now as they’re rolling out their own Apple Silicon, of course that means even more hardware/OS integration. For Monterey, it seems we get “enhanced graphics and compute integration, function stitching, updates to Ray Tracing, GPU-accelerated ML training, and more.” So yes, it’s all about ray tracing, machine learning, and graphics and computation features that work on their hardware.

In the old days, that would mean having to write your app exclusively for this platform, but nowadays I expect it’ll mean cross-platform tools allow these features on the Mac when that’s the hardware you run on, as well.

Machine learning updates. In the same vein, yes, Create ML APIs also get new features with specific templates and training capabilities for vision, natural language, and even how users behave on a device. Apple seems to be all-in on giving developers and researchers an integrated set of tools across their chips, OS, and devices, with a lot of sample content to get going.

https://developer.apple.com/machine-learning/

Maybe Catalyst starts to get useful? So, the basic idea of Catalyst was nice enough – allowing you to target both macOS and iOS. But it also illustrated how different the UX is for these different platforms. Now there are some new APIs to make these cross-platform apps start to look more usable, with pop-up buttons, tooltips, Touch alternatives (since losing touch made a lot of apps fairly unusable), and other features. Oh, and printing finally works properly.

The iPad integrates really well with the Mac now!

Universal Control is cool, as an addition to what Continuity already let you do with an iPad and a Mac. One mouse/trackpad and one keyboard let you control both the iPad and the Mac, and you can hand off files between devices. You’ll need recent hardware and the latest OS, but the fluidity of integration is great. It’s just that those of us straddling OSes (a Mac and a Windows or Linux box) will probably want to stick with Synergy and Flow, which are more flexible, if not quite as effortless to configure and use.

So this sounds useful, if not quite as jaw-dropping to anyone who did use something like Synergy. But let’s talk about this:

Swift Playgrounds 4 will let you code directly on the iPad.

One project built in the rapid-coding Swift Playgrounds environment will open both on your Mac and your iPad. (You can even submit to the App Store from the iPad, though I think the development angle is what’s really interesting.) You can code and test on the device, run it full-screen, and adjust your code. And that goes along with:

Xcode Cloud now supports new testing and collaboration across devices in parallel. Somehow, again this means Apple is adding on some as-yet-to-be-announced subscription pricing, but okay.

It does look really nice and Apple-y, so while there are third-party options available, this one may appeal to Apple-centric shops. And yeah it looks useful for testing and has TestFlight integration. See the Xcode Cloud site.

Xcode is better for collaboration and usability. The good news is, with or without that cloud subscription thing, Xcode has improved collaboration features. Think inline comments that identify the coder, a really nice new Quick compare feature, custom documentation in Markdown, and hey, Vim mode. See the Xcode site for more on Xcode 13.

Mac power features

AirPlay is more useful, too. It also works on the Mac now, in case you need to justify one of those very pretty new iMacs.

Apple has vastly improved accessibility features. Some of this was announced in May, but long story short – Apple continues to lead the industry in accessibility features. I know that’s also relevant to a lot of musicians. (In this case, Full Keyboard Access, cursors, and Markup all get some love.)

Shortcuts on the Mac add new automation features. It’s really sad that Automator on Mac OS X got limited attention from Apple and third parties. But long-time Mac users who used that (or AppleScript) will clearly love Shortcuts in macOS Monterey. Interestingly, it even imports Automator workflows. Apple is only talking about Mac features, so I’m keen to see what is available to third parties. A lot of what we do in audio and graphics can benefit from automation, not just the “spam your friends with your food porn” demo Apple showed.

Collaboration after a year at home

FaceTime gets some expected improvements. SharePlay is intended as a consumer feature, but it could be useful for collaboration, too – since you can run a video cut or the latest track bounce by someone and listen/watch together.

It also runs in the browser, which means finally you can work across OSes with Android and PC (though you need at least one iOS device involved).

Spatial Audio also separates participants in the 3D audio field, which I imagine might also lend itself to some creative use by musicians.

Wide Spectrum Audio mode should also give a higher-quality audio option, which could make it more useful to music.

I’ll be a little more cautious than some of my colleagues in talking about how much this will allow collaboration – I think specialized tools for music remain necessary for a lot of use cases. But as with a lot of the consumer features of iOS, I’m sure musicians will find some ways of using it.

More on Monterey:

https://www.apple.com/macos/monterey-preview/