How do you improve upon a sound that is already shorthand for noises that melt audiences’ faces off? And how do you revisit sound code decades after the machines that ran it are scrapped?

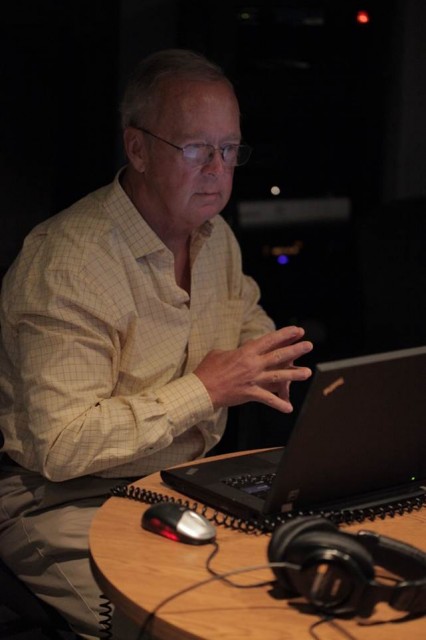

We get a chance to find out, as the man behind the THX “Deep Note” sound talks about its history and reissue. Dr. Andy Moorer, the character I called “the most interesting digital audio engineer in the world,” has already been terrifically open in talking about his sonic invention. He’s got more to say – and the audience is listening. (Sorry, I sort of had to do that.)

CDM: First, my big question is – how did you go about reconstructing something like this? Since the SoundDroid / Audio Signal Processor (ASP) is gone, that’s obviously out. Was it difficult to match the original? Was there any use of the original recordings?

Andy: I had two computer files from 1983. One was the original C program that generated the score, and the other was the “patch” file for the ASP that was written in Cleo, an audio-processing language that doesn’t exist anymore. The first thing I did was to resurrect the C program and make sure that it did generate the score properly. Then I wrote a set of special-purpose sound synthesis routines to interpret the score and produce the sound.

This wasn’t as difficult as it sounds. First off, the original program didn’t use a lot of different kinds of processing elements – maybe a total of 6 different elements. Next, this is the kind of software I have been writing for 45 years – I could write it in my sleep. It was not a problem. It took about a week to write the synthesis engine software and plug it into the original 30-year-old C program and get some sound out of it. There were also some calibration issues that I had to deal with, since the original ASP used some fixed-point scaling that I no longer recall. I had to experiment a bit to get the scaling right.

Then I was back at zero – I could start making the top-quality modern version. It took about another week to “tune” the new version and get it the way I wanted it. I spent more time in San Francisco with the THX folks making sure it met their needs as well. We then took the resulting sound files to Skywalker Sound to make the final mixes. It was a real thrill to finally hear the various versions in the Skywalker Stag theater. It was breathtaking.

I used the original only as an audio reference when I was bringing up the synthesis engine. Otherwise, the original sound was not used.
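(An aside for readers who want a concrete picture of the split Andy describes, with one program producing a score and a separate engine interpreting it: the sketch below is a toy illustration only. The interview does not describe the real score format, the six or so processing elements, or the ASP's scaling, so every name here, including `make_score`, `render`, the event fields, the sample rate and the example frequencies, is a hypothetical stand-in, and plain sine oscillators are assumed purely for simplicity.)

```python
import math

SAMPLE_RATE = 48_000  # assumed; the interview does not state a sample rate


def make_score(targets_hz, start_hz=300.0, glide_s=2.0, hold_s=1.0):
    """Build a hypothetical score: one event per voice, gliding to a target pitch."""
    return [
        {"start_hz": start_hz, "end_hz": target, "glide_s": glide_s, "hold_s": hold_s}
        for target in targets_hz
    ]


def render(score, sample_rate=SAMPLE_RATE):
    """Interpret the score by summing one sine oscillator per event (mono)."""
    total_s = max(ev["glide_s"] + ev["hold_s"] for ev in score)
    n = int(total_s * sample_rate)
    out = [0.0] * n
    for ev in score:
        phase = 0.0
        glide_n = int(ev["glide_s"] * sample_rate)
        end_n = int((ev["glide_s"] + ev["hold_s"]) * sample_rate)
        for i in range(min(end_n, n)):
            # Linear glide from start_hz to end_hz, then hold at end_hz.
            t = min(i / glide_n, 1.0) if glide_n else 1.0
            freq = ev["start_hz"] + t * (ev["end_hz"] - ev["start_hz"])
            out[i] += math.sin(phase)
            # Accumulate phase so the changing frequency stays click-free.
            phase += 2.0 * math.pi * freq / sample_rate
    scale = 1.0 / len(score)  # crude normalisation to keep samples in [-1, 1]
    return [s * scale for s in out]


# Arbitrary example pitches, not the actual Deep Note chord.
demo = render(make_score([150.0, 300.0, 450.0], glide_s=0.5, hold_s=0.5))
print(len(demo), "samples rendered")
```

The point of the separation is the one Andy makes: once the score generator is known to be correct, the engine behind it can be swapped out, whether that is a rack of 1983 hardware or a week's worth of new synthesis routines.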

What’s it like making this sound with today’s tech? It seems one advantage, apart from the clear affordability and accessibility of hardware, is the ability to control sound in real-time. But how did you work with it now? What’s your current tool setup?

Boy, it is night and day. In 1983, it took a rack of digital hardware 6 feet [1.8m] tall to synthesize 30 voices in real time. Today I can do 70 voices on my laptop at close to real time. It is an absolute joy the power we have at our fingertips today. For this particular project, I just used a standard Dell W3 laptop. Nothing fancy. I have an M-Audio Fast Track Ultra 8R for the multi-channel D/A output for monitoring and testing. That gives me 8 channels of output, so I can do up to 7.1 but not Dolby Atmos. I didn’t actually hear the Atmos version I had synthesized until I got to Skywalker. I had a pretty good idea of what it would sound like, though.

A couple of people have already asked me about how you wound up with 20,000 lines of code in the original. I expect there was a fair bit of manual mucking about?

Actually I made a mistake with that 20,000 lines of code statement – that was just off the top of my head. I need to correct that if I can figure out how, but it also depends a bit on what lines of code you count. The original 30-year-old C program is 325 lines, and the “patch” file for the synthesizer was 298 more lines. I guess it just felt like 20,000 lines when I did it.

Given that it was written and debugged in 4 days, I can’t claim the programming chops to make 20,000 lines of working code that quickly. But, to synthesize it in real time, in 1983, took 2 years to design and build a 19” rack full of digital hardware and 200,000 lines of system code to run the synthesizer. All that was already done, so I was building on a large foundation of audio processing horsepower, both hardware and software. Consequently, a mere 325 lines of C code and 298 lines of audio patching setup for the 30 voices was enough to invoke the audio horsepower to make the piece.

What state is that code in, presently? Some people were curious to see it, but it seems efforts like Batuhan Bozkurt’s do a good job. I was actually unclear in the other correspondence I read what you meant by musical notation.

I guess you are asking who owns the code. THX, Ltd is the owner of the code that I produced. As you note, Mr. Bozkurt and a number of other folks have done perfectly marvelous versions of the piece as well. Around 1984, folks at the trademark division of Lucasfilm asked me to make a “score” for the piece in musical notation – you know – treble clefs and staff lines and that stuff. I took out my Rapidograph india-ink pens and drafted something that kind of suggests how the piece works as well as it can be expressed in traditional music notation. That was apparently necessary to copyright the piece in 1984.

This time, THX wanted a whole lot of versions. How did you approach all of these different surround formats?

It was a bit tricky, since it was not just the additional formats, but it was also the fact that we wanted it to work well in the living room as well as the modern cinema. In 1983, home theater systems were not even a gleam in the eye. In the living room, you are relatively close to the speakers, so to make it sound rich and “enveloping”, I wanted the sound in each speaker to be as different as possible. In the cinema, you have a different problem – if you sit to one side, you will be close to one speaker and a long ways away from the others, so that speaker will dominate. In this case, the sound in each speaker had to be as rich as possible so it could stand on its own.

My first couple of tries sounded OK in the living room, but when I listened to each channel separately, they sounded thin and cheesy. If I just ran up the number of voices to 70, 80, or 90, each speaker sounded fine, but the overall impression got “mushy” and diffused. What I ended up doing was to put about 8 distinct voices into each speaker, so the 5.1 has about 40 voices (plus the subsonic ones in the subwoofer), the 7.1 version about 56 voices, and the Atmos 9.1 bed has over 70 voices.

The different versions do sound different. The 5.1 has a lot of “clarity” – you can really hear the individual voices move around. The 7.1 is still pretty crisp: since the voices come from different directions, you can still make them out separately, but there are some new voices moving in different directions that aren’t in the 5.1 version. The Atmos 9.1 adds two more overhead. These are far enough away that you don’t hear them clearly, but they just add to the richness.

In short, it was a challenge, but I think we came up with a viable solution that provides an experience that scales with the setup but preserves a lot of clarity and precision.
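(The voice counts Andy quotes follow from simple arithmetic: about eight voices for each full-range speaker, with the subwoofer's subsonic voices counted separately. The short sketch below just reproduces that multiplication. The layout table and the function name `full_range_voices` are illustrative assumptions, not anything taken from the THX code.)

```python
VOICES_PER_SPEAKER = 8  # "about 8 distinct voices" per speaker, per the interview

# Full-range speaker counts per layout; the ".1" subwoofer is not included here.
LAYOUTS = {"5.1": 5, "7.1": 7, "Atmos 9.1 bed": 9}


def full_range_voices(speakers, per_speaker=VOICES_PER_SPEAKER):
    """Approximate voice count for the full-range speakers of a layout."""
    return speakers * per_speaker


for name, speakers in LAYOUTS.items():
    print(f"{name}: about {full_range_voices(speakers)} voices")
```

That gives roughly 40, 56 and 72 voices, matching the "about 40", "about 56" and "over 70" figures quoted for the three formats.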

Are you involved in music and sound today? (Still playing the banjo?)

Is there air? Yes, of course. I play and make music every chance I get. I did some sax and banjo tracks for an album of spoken poetry a couple years ago and I play at the local watering holes from time to time.

Thanks, Andy! Really an inspiration to get to hear some of the nitty-gritty details – and to see a sound like this have such power. Now, up to us to work on our sound design coding chops – and our banjo licks, both, perhaps!

Shown: Andy Moorer at THX Ltd. San Francisco headquarters in 2014 during his visit to work on the regenerated THX Deep Note. (Photos: © THX Ltd.)

Previously: THX Just Remade the Deep Note Sound to be More Awesome