Just when you think software has lost its wonder, you’re singing into your iPhone with some cute animated blobs. Chantlings is a joy – and a demonstration of how even existing vocal technologies can be employed in fanciful new ways.

Chantlings is a collaboration between developer Iorama, whose ingenious animation app I looked at recently, and digital audio engineer Sventana aka Svante Stadler aka Heart of Noise.

First things first: you want this thing. Singing into it is a total joy. It sounds so good that I’ve even used it in place of much more “professional” harmonizers, making some quick samples to mangle afterward. (This is why I really don’t get the point of sample banks, to be honest!)

I mean, just watch:

You can’t help falling in love with this app.

The little forest blobs mimic not only your singing and intonation, but even your words and vocal shapes. (They also make an adorable expression, as if they’re puzzling over your singing, when they pause before copying you.) It’s fanciful, unpredictable, endearing – they almost seem alive.

You can then simply record in the app (with or without video), or use various methods to route audio directly from the iPhone/iPad to your computer. iOS devices now work as microphone inputs on macOS via Audio MIDI Setup; I’ll write a separate refresher tutorial on that shortly.

It’s easy to see an app like this as a simple novelty or kids’ toy, but I see a real breakthrough in interaction. And anyone working in vocal processing will find plenty of inspiration (plus maybe something to mess around with yourself in Pd or SuperCollider, etc.). Heart of Noise and Iorama Studio started this app by asking how to create a UI for a touchless app. That in turn has significant accessibility implications for a wide range of users, both inside and outside music.

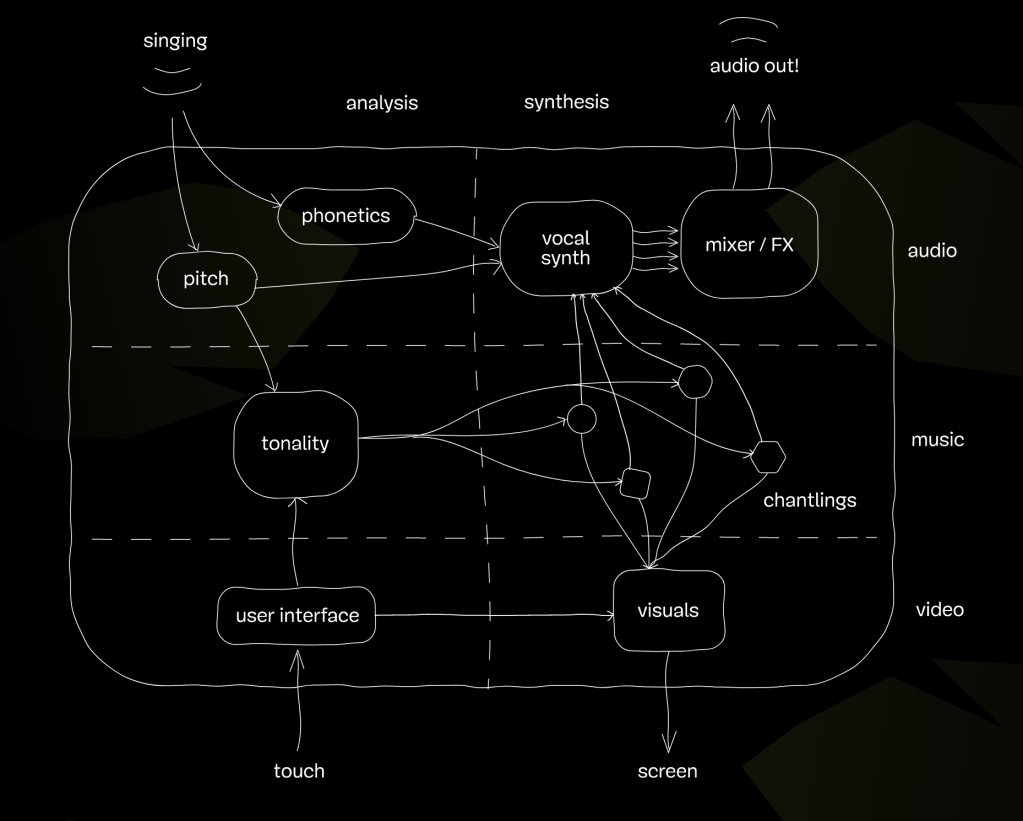

There’s some fairly sophisticated work going on here through wonderfully simple approaches – analyze pitch and phonetics, respond with a vocal synth.
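If you want to mess around with the "analyze pitch, respond with a synth" loop yourself, here is a minimal sketch of the idea in plain Python. To be clear, this is not how Chantlings does it: the autocorrelation pitch estimator, the sine-wave "voice," and the names `make_tone`, `estimate_pitch`, and `sing_back` are all my own assumptions for illustration, and the phonetics side is left out entirely.

```python
import math

def make_tone(freq_hz, n_samples, sample_rate):
    """Render a plain sine wave as a stand-in for a voice or a synth."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n_samples)]

def estimate_pitch(frame, sample_rate, fmin=80.0, fmax=500.0):
    """Estimate the fundamental (Hz) via normalized autocorrelation.

    Returns 0.0 when no pitch is found (for example, on silence).
    """
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    scores = []
    for lag in range(min_lag, max_lag + 1):
        a = frame[:len(frame) - lag]
        b = frame[lag:]
        num = sum(x * y for x, y in zip(a, b))
        den = math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))
        scores.append(num / den if den > 0 else 0.0)
    peak = max(scores)
    if peak <= 0:
        return 0.0
    # Take the first strong local peak rather than the global one,
    # which helps avoid landing an octave too low.
    for k in range(1, len(scores) - 1):
        if scores[k] >= 0.9 * peak and scores[k - 1] <= scores[k] >= scores[k + 1]:
            return sample_rate / (min_lag + k)
    return 0.0

def sing_back(f0, semitones, n_samples, sample_rate):
    """Answer with a tone shifted by a musical interval (hypothetical response)."""
    if f0 <= 0:
        return []
    return make_tone(f0 * 2 ** (semitones / 12), n_samples, sample_rate)

SAMPLE_RATE = 8000
voice = make_tone(220.0, 1024, SAMPLE_RATE)   # pretend this is you singing an A
heard = estimate_pitch(voice, SAMPLE_RATE)
reply = sing_back(heard, semitones=4, n_samples=1024, sample_rate=SAMPLE_RATE)
print(round(heard, 1), len(reply))
```

The estimate is only as fine as the integer lag grid allows, so it lands near 220 Hz rather than exactly on it. A real-time version would run this per audio frame and add interpolation and smoothing.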

At its core, it’s not especially new technology (though machine learning could arguably afford some other ways of doing this). But it doesn’t have to be – there’s plenty you can do by applying these tried-and-trusted techniques in novel ways and letting the user experiment.

Developer Sventana talks more about their approach to voice analysis and synthesis, so you can see some of the other work they’ve done. That includes an Auto-Tune-style pitch corrector for Radiotjänst’s “I’m A Star” campaign (for Swedish public service media) – but I’ll let them brag in song.

It’s one of the few times you’ll hear auto-tuned pop about, uh, C++ chops:

> The vocal processor is written from scratch in c++ and includes auto-tuning, filter-distortion-tempo-delay, and a multiband normalizer/compressor. Initially, I wrote a command line VST host to run 3rd party tuners (Antares, Yamaha, etc), but they turned out to sound bad for regular speech input, because they are designed for singing voice, which is more periodic and predictable. My tuner is superior because it can find the globally optimal pitch track using dynamic programming.
>
> Go here to make your own silly music video, and click here to watch mine on the original site.
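For the curious, the "globally optimal pitch track using dynamic programming" idea can be sketched roughly like this. This is a generic Viterbi-style search, not Sventana's actual code: the candidate format, the `jump_penalty` weight, and the function name `best_pitch_track` are assumptions of mine. Each frame offers a few pitch candidates with a strength score, and the search picks the path that balances strong candidates against big pitch jumps across the whole recording.

```python
import math

def best_pitch_track(candidates, jump_penalty=2.0):
    """Pick one pitch per frame, minimizing total cost over the whole track.

    candidates: list of frames, each a list of (freq_hz, strength) pairs.
    Cost = -strength per chosen candidate, plus jump_penalty times the
    jump size in octaves between consecutive frames.
    """
    if not candidates:
        return []
    # cost[t][j]: cheapest cost of any path ending at candidate j of frame t
    cost = [[-s for _, s in candidates[0]]]
    back = [[None] * len(candidates[0])]
    for t in range(1, len(candidates)):
        frame_cost, frame_back = [], []
        for f, s in candidates[t]:
            best_prev, best_val = 0, math.inf
            for i, (pf, _) in enumerate(candidates[t - 1]):
                val = cost[t - 1][i] + jump_penalty * abs(math.log2(f / pf))
                if val < best_val:
                    best_prev, best_val = i, val
            frame_cost.append(best_val - s)
            frame_back.append(best_prev)
        cost.append(frame_cost)
        back.append(frame_back)
    # Backtrack from the cheapest final candidate.
    j = min(range(len(cost[-1])), key=cost[-1].__getitem__)
    path = []
    for t in range(len(candidates) - 1, -1, -1):
        path.append(candidates[t][j][0])
        if t > 0:
            j = back[t][j]
    path.reverse()
    return path

# Made-up example: in the middle frame, the octave-too-low candidate
# looks slightly stronger when judged on its own.
frames = [
    [(220.0, 0.9), (110.0, 0.5)],
    [(222.0, 0.8), (111.0, 0.85)],
    [(224.0, 0.9), (112.0, 0.4)],
]
greedy = [max(frame, key=lambda c: c[1])[0] for frame in frames]
smooth = best_pitch_track(frames)
print(greedy, smooth)
```

Choosing frame by frame falls for the octave error in the middle, while the whole-track search stays on the upper line. That kind of robustness plausibly matters more for speech, which the quote describes as less periodic and predictable than singing.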

There was also an interactive music video project for pop megastar Robyn, with the not-at-all-secret sauce being the open source speech synth flite and the free mda vocoder.

Good stuff. We need more voice interfaces – for accessibility, and because they’re damned fun.

https://www.iorama.studio/chantlings